4919

Transformer-based image quality improvement of radial undersampled lung MRI data from post-COVID-19 patients

Maximilian Zubke1,2, Robin A Müller1,2, Marius Wernz1,2, Filip Klimeš1,2, Frank Wacker1,2, and Jens Vogel-Claussen1,2

1Institute of Diagnostic and Interventional Radiology, Hannover Medical School, Hannover, Germany, 2Biomedical Research in Endstage and Obstructive Lung Disease Hannover (BREATH), German Center for Lung Research (DZL), Hannover, Germany

Synopsis

Keywords: Machine Learning/Artificial Intelligence

Currently, MR-based ventilation imaging relying on a radial 3D stack-of-stars spoiled gradient echo sequence requires a fairly long acquisition time of 8 minutes, which may hinder clinical translation. Therefore, a shorter acquisition time is desired. In this study, a deep learning architecture called the transformer was evaluated for image restoration of radially undersampled lung images from 16 post-COVID-19 patients. For each patient, images resulting from 4-minute and 8-minute acquisitions were provided. A transformer was trained to translate the 4-minute version into the corresponding 8-minute version, which led to a significant image quality improvement, as demonstrated by three complementary image similarity metrics.

Introduction

MR-based functional lung imaging enables ventilation assessment of post-COVID-19 patients without radiation and without contrast agent [1]. However, acquiring the necessary high-quality images, using a 3D stack-of-stars spoiled gradient echo sequence and reconstructing them with parallel imaging and compressed sensing, requires 8 minutes of scan time [1]. Reducing this to 4 minutes would be beneficial for young children and for other patients for whom scan time plays an essential role. However, a reduction to half as many spokes results in image artifacts. In addition to compressed sensing, deep learning has been introduced as a novel building block of MR reconstruction pipelines to address this issue [2]. Recently, a novel neural network architecture called the transformer [3] has outperformed state-of-the-art neural networks in several tasks [3,4]. For image processing, the Shifted-Window Transformer [5] (Swin Transformer) has been developed and has already been applied to brain MR reconstruction [6]. In this study, a similar Swin Transformer was evaluated for lung MR reconstruction. The transformer was trained with pairs of radial 3D stack-of-stars spoiled gradient echo MR images corresponding to 4 minutes and to 8 minutes of acquisition time. The improvement of the 4-minute version was measured using three complementary image similarity metrics.

Methods

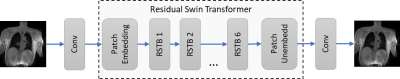

Sixteen post-COVID-19 patients (6 female, age range: 23-68) underwent MR imaging at 1.5 T (MAGNETOM Avanto; Siemens Healthcare, Erlangen, Germany) using a prototype stack-of-stars spoiled gradient echo sequence with golden-angle increment (FOV 50 x 50 cm², TE/TR 0.81/1.9 ms, flip angle 3.5°, matrix size 128 x 128) over a period of 8 minutes. Approximately 40 respiratory phases were reconstructed for each patient using parallel imaging and compressed sensing, with sparsity exploited along the temporal (respiratory state) and spatial dimensions. Reconstruction was performed using the Berkeley Advanced Reconstruction Toolbox (BART) [7]. The k-space data acquired over 8 minutes were retrospectively undersampled by randomly excluding 50% of the acquired spokes. Based on the resulting undersampled k-space data, the same number of respiratory phases was reconstructed using the same strategy as for the original data. This dataset is referred to below as the 4-minute version. Each included patient record consists of 64 (± 6.2) x 39 (± 4) 2D images. A Swin Transformer with image matrix size 128 x 128 x 1, embedding size 96, and 6 residual Swin Transformer blocks (RSTB) with 6 attention heads each was constructed. The resulting network architecture is summarized in Figure 1. The transformer was then trained using MR images resulting from 4 minutes of radial sampling as input and the corresponding images from 8 minutes of radial sampling as ground truth. The loss was calculated with the Charbonnier loss function [8]. For transformer training, 21,772 2D images from 9 post-COVID-19 patients (female: 3, age range: 23-68) were used.
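Two steps of this pipeline, the random exclusion of 50% of the spokes and the Charbonnier loss, can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the fixed random seed, the spoke count of 1000, and the smoothing constant `eps = 1e-3` are assumptions made for illustration, since the abstract does not state them. Images are represented as flat lists of pixel values to keep the example dependency-free.

```python
import math
import random


def undersample_spokes(num_spokes, keep_fraction=0.5, seed=0):
    """Return the sorted indices of the spokes kept after random exclusion.

    keep_fraction=0.5 mirrors the 50% exclusion described in the text;
    the seed is an assumption so that the example is reproducible.
    """
    rng = random.Random(seed)
    num_keep = round(num_spokes * keep_fraction)
    # Sampling without replacement, so no spoke is selected twice.
    return sorted(rng.sample(range(num_spokes), num_keep))


def charbonnier_loss(pred, target, eps=1e-3):
    """Mean Charbonnier penalty sqrt(d^2 + eps^2) over paired pixel values.

    eps is a hypothetical choice; the abstract does not report its value.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    total = sum(math.sqrt((p - t) ** 2 + eps ** 2) for p, t in zip(pred, target))
    return total / len(pred)


# Hypothetical acquisition with 1000 spokes: half of them are kept.
kept = undersample_spokes(num_spokes=1000)
print(len(kept))

# For a large residual the penalty approaches the absolute error |d|.
print(charbonnier_loss([1.0, 0.0], [0.0, 0.0]))
```

The Charbonnier penalty behaves like a smooth version of the absolute error: it stays differentiable at zero residual, where it evaluates to `eps`, and grows approximately linearly for large residuals.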

The evaluation was conducted with 17,676 images from 7 unseen post-COVID-19 patients (female: 3, mean age: 48).

The image quality improvement of the transformer output over the original 4-minute version was assessed with the mean squared error (MSE), the peak signal-to-noise ratio (PSNR), and the AlexNet-based learned perceptual image patch similarity (LPIPS) [9].
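The two pixel-wise metrics can be written down in a few lines. The following is a minimal sketch using the standard definitions of MSE and PSNR, not the authors' evaluation code; the `data_range` of 1.0 (images normalized to [0, 1]) and the toy pixel values are assumptions. LPIPS is omitted because it requires a pretrained AlexNet.

```python
import math


def mse(image, reference):
    """Mean squared error between two images given as flat pixel lists."""
    if len(image) != len(reference):
        raise ValueError("images must have the same number of pixels")
    return sum((a - b) ** 2 for a, b in zip(image, reference)) / len(image)


def psnr(image, reference, data_range=1.0):
    """Peak signal-to-noise ratio in dB; data_range is the maximum pixel value."""
    err = mse(image, reference)
    if err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(data_range ** 2 / err)


# Hypothetical pixel values standing in for the 8-minute reference,
# the 4-minute input, and the transformer output.
reference = [0.0, 0.5, 1.0, 0.5]
four_minute = [0.1, 0.4, 0.9, 0.6]
restored = [0.05, 0.45, 0.95, 0.55]

print(mse(four_minute, reference), psnr(four_minute, reference))
print(mse(restored, reference), psnr(restored, reference))
```

A lower MSE and a higher PSNR for the restored image relative to the 4-minute input indicate that it is closer to the 8-minute reference.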

Results

As the example in Figure 2 shows, the Swin Transformer reduces streak artifacts caused by radial undersampling. This significant correction was captured more distinctly by LPIPS than by MSE (see Table 1). In addition, a moderate improvement (+2) in PSNR was noted. A detailed image analysis of the seven patients (see Table 2) showed that the transformer improved the MSE for at least 70%, the PSNR for at least 65%, and the LPIPS for at least 98% of the 2D images of each patient. In other words, if a patient record consists of 1000 images, then after post-processing by the transformer at least 700 of them would be more similar to the corresponding images of the 8-minute version than their counterparts from the initial 4-minute version.

Discussion

The results demonstrate that state-of-the-art deep learning approaches such as transformer networks are able to improve dynamic lung MR image quality without knowledge of the temporal dimension and without access to the acquired raw data. However, integrating this additional information might lead to a further improvement.

Conclusion

A Swin Transformer is able to decrease the difference between images acquired in 4 minutes using a 3D stack-of-stars spoiled gradient echo sequence and images acquired with twice as many spokes using the same sequence in 8 minutes. The image quality improvement from the original 4-minute version to the 4-minute version after post-processing by the transformer is significant.

Acknowledgements

No acknowledgement found.

References

- Klimeš F, Voskrebenzev A, Gutberlet M et al. 3D phase‐resolved functional lung ventilation MR imaging in healthy volunteers and patients with chronic pulmonary disease. Magnetic Resonance in Medicine. 2021; 85(2), 912-925. doi: 10.1002/mrm.28482

- Chen Y, Schönlieb CB, Lió P et al. AI-based reconstruction for fast MRI—a systematic review and meta-analysis. Proceedings of the IEEE. 2022; 110(2), 224-245. doi: 10.1109/JPROC.2022.3141367

- Vaswani A, Shazeer N, Parmar N et al. Attention is all you need. Advances in Neural Information Processing Systems. 2017; 30. doi: 10.48550/arXiv.1706.03762

- Parmar N, Vaswani A, Uszkoreit J et al. Image Transformer. Proceedings of the 35th International Conference on Machine Learning, PMLR. 2018; 80, 4055-4064. doi: 10.48550/arXiv.1802.05751

- Liu Z, Lin Y, Cao Y et al. Swin transformer: Hierarchical vision transformer using shifted windows. Proceedings of the IEEE/CVF International Conference on Computer Vision. 2021, 10012-10022. doi: 10.48550/arXiv.2103.14030

- Huang J, Fang Y, Wu Y et al. Swin transformer for fast MRI. Neurocomputing. 2022; 493, 281-304. doi: 10.48550/arXiv.2201.03230

- Uecker M, Ong F, Tamir JI et al. Berkeley advanced reconstruction toolbox. Proc Intl Soc Mag Reson Med. 2015, 2486.

- Barron JT. A general and adaptive robust loss function. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019, 4331-4339. doi: 10.48550/arXiv.1701.03077

- Zhang R, Isola P, Efros A et al. The unreasonable effectiveness of deep features as a perceptual metric. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018, 586-595. doi: 10.48550/arXiv.1801.03924

Figures

Figure 1: The neural network consists of an initial 2D convolutional layer, followed by a cascade of 6 residual Swin Transformer blocks (RSTB). The transformer part uses a patch embedding of dimension 96. The network ends with a final convolutional layer.

Figure 2: Pixel differences between the 4-minute and the 8-minute version (a) are mainly caused by streaking artifacts. Furthermore, dynamic regions (heart, lung) show increased error. Artifacts and especially reconstruction errors within the lung can be reduced by the Swin Transformer as shown in (b).

Table 1: Comparison of images from 4 minutes of acquisition time with the same images improved by the transformer. Decreased MSE and LPIPS as well as increased PSNR mark an improvement.

Table 2: Image improvement rate per patient measured by three metrics. A value of 1.0 means that the distance to the 8-minute version has decreased for all images of the transformer result. On average, the transformer reduces the MSE for 73% of the 2D images of a patient.
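The per-patient improvement rate described for Table 2 can be sketched as the fraction of images whose metric value moves closer to the 8-minute version after post-processing. This is an illustrative reading of the caption, not the authors' code: the function name, the use of a strict inequality, and the example values are assumptions.

```python
def improvement_rate(before, after, lower_is_better=True):
    """Fraction of images whose metric improved after post-processing.

    before: per-image metric of the 4-minute version vs. the 8-minute version
    after:  per-image metric of the transformer output vs. the 8-minute version
    lower_is_better: True for MSE and LPIPS, False for PSNR
    """
    if len(before) != len(after) or not before:
        raise ValueError("need two non-empty lists of equal length")
    if lower_is_better:
        improved = sum(1 for b, a in zip(before, after) if a < b)
    else:
        improved = sum(1 for b, a in zip(before, after) if a > b)
    return improved / len(before)


# Hypothetical per-image MSE values for one patient (not data from the study).
mse_before = [0.010, 0.020, 0.015, 0.008]
mse_after = [0.006, 0.012, 0.016, 0.005]
print(improvement_rate(mse_before, mse_after))  # 3 of 4 images improved -> 0.75

# PSNR improves when it increases, so the comparison direction is flipped.
psnr_before = [20.0, 21.0]
psnr_after = [22.0, 20.5]
print(improvement_rate(psnr_before, psnr_after, lower_is_better=False))  # 0.5
```

Under this definition a rate of 1.0 means that every image of the patient ended up closer to the 8-minute version, which matches the interpretation given in the caption.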

DOI: https://doi.org/10.58530/2023/4919