4891

HiFNet: Hierarchical feature sharing network for multi-echo GRE denoising

¹Seoul National University, Seoul, Republic of Korea

Synopsis

Keywords: Susceptibility

We propose HiFNet, a new denoising network for complex-valued multi-echo GRE images. The network shares its features hierarchically from the first echo image to the last, exploiting the redundancy of multi-echo GRE images along the echo dimension. In both synthetic-noise and real-world denoising experiments, the proposed network performs better than a network with no feature sharing and a network that shares features in the reverse order, demonstrating the effectiveness of the proposed feature sharing.

Introduction

Deep learning methods have been gaining popularity for MR image denoising, outperforming conventional denoising methods [1-3]. These methods, however, were designed to process a single MR image, which may not be optimal for multi-echo acquisitions such as multi-echo GRE or multi-echo SE, where information is shared between echoes. In this study, we propose a new denoising network structure that exploits this redundancy in the echo dimension. The proposed network, referred to as HiFNet hereafter, uses the network features of previous echo images, which have a higher signal-to-noise ratio, to further improve denoising performance. We evaluated the network using synthetic noise-added data and real-world data.

Methods

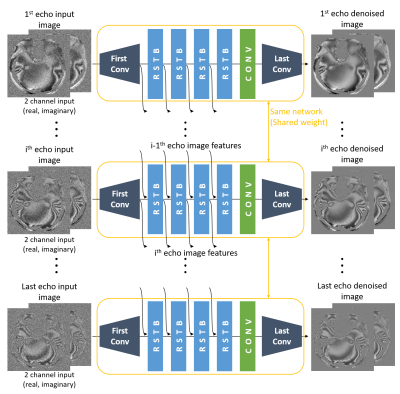

[Network structure] SwinIR, a state-of-the-art network for single-image denoising, was used as the base network [4]. It consisted of a first convolutional layer, followed by 4 Residual Swin Transformer Blocks (RSTB) and a last convolutional layer. Each RSTB consisted of 6 Swin transformer layers, which performed attention in an 8 × 8 local window. A skip connection was applied from the network input to the output of the last convolutional layer. To exploit the redundancy of complex-valued multi-echo images, a hierarchical feature-sharing structure was introduced (Fig. 1). When the ith echo image was denoised, the features of the first convolutional layer and three RSTB features of the (i-1)th echo image were concatenated to the corresponding features of the ith echo image. The features of the ith echo image were then used for the denoising of the (i+1)th echo image. For the 1st echo image, zeros were used in place of the previous echo features. The network weights were shared across all echo images. The network was trained with Coil2Coil [3], a self-supervised method that applies Noise2Noise [5] by generating a pair of noisy images from phased-array coil images.

[Network training] Complex-valued multi-echo GRE images from [6] were used for training and evaluation (IRB approved). This dataset contains 4 subjects scanned in 6 different head orientations with the following protocol: resolution = 1 × 1 × 1 mm³, TR = 38 ms, TE = 7.70:5.03:32.85 ms (6 echoes). Additionally, data from a total of 32 subjects were acquired (IRB approved) with the following protocol: resolution = 0.7 × 0.7 × 0.7 mm³, TR = 40 ms, TE = 4.52:6.1:28.92 ms (5 echoes). For network training, 3 subjects of the multi-orientation data and 28 subjects of the single-orientation data were used; the rest of the data were used for testing. The AdamW optimizer with a learning rate of 1e-3 was used for network parameter optimization.
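The echo-to-echo feature flow described under [Network structure] can be illustrated with a toy NumPy sketch. This is not the SwinIR-based implementation: each stage is a hypothetical stand-in (channel concatenation, 1×1 channel mixing, ReLU) for the first convolutional layer or an RSTB, only the four shared stages are modelled, and the names `stage`, `denoise_echo`, `denoise_all_echoes`, `CHANNELS`, and the random weights are assumptions made for illustration. What it does reproduce is the recurrence: zeros for the first echo, one shared weight set, and features passed from echo i to echo i+1.

```python
import numpy as np

rng = np.random.default_rng(0)

N_STAGES = 4   # first conv layer + three RSTB outputs are shared (per the text)
CHANNELS = 8   # hypothetical feature width, chosen only for this sketch

# One weight set shared by every echo. Each stage sees its own input
# concatenated with the previous echo's feature from the same stage.
in_ch = [2] + [CHANNELS] * (N_STAGES - 1)   # 2 = real + imaginary input channels
weights = [rng.standard_normal((CHANNELS, c + CHANNELS)) * 0.1 for c in in_ch]
w_out = rng.standard_normal((2, CHANNELS)) * 0.1


def stage(x, prev_feat, w):
    """Toy stand-in for a conv layer or RSTB: concat, 1x1 mixing, ReLU."""
    z = np.concatenate([x, prev_feat], axis=0)            # (C_in + C, H, W)
    return np.maximum(np.einsum("oc,chw->ohw", w, z), 0)  # (C, H, W)


def denoise_echo(noisy, prev_feats):
    """Denoise one echo; return the output and the features to pass on."""
    feats, x = [], noisy
    for w, prev in zip(weights, prev_feats):
        x = stage(x, prev, w)
        feats.append(x)
    residual = np.einsum("oc,chw->ohw", w_out, x)
    return noisy + residual, feats   # skip connection from input to output


def denoise_all_echoes(echoes):
    """echoes: array (n_echo, 2, H, W), ordered from first to last echo."""
    _, _, h, w = echoes.shape
    # Zeros replace the "previous echo" features for the first echo.
    prev_feats = [np.zeros((CHANNELS, h, w)) for _ in range(N_STAGES)]
    outputs = []
    for echo in echoes:
        out, prev_feats = denoise_echo(echo, prev_feats)
        outputs.append(out)
    return np.stack(outputs)
```

Because the loop carries only the previous echo's features and reuses the same weights, the sketch accepts any number of echoes, and the output for echo i depends only on echoes 1 to i. Feeding the echoes in reversed order would correspond to the reverse-direction variant.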

[Denoising of synthetic noise-added data and real-world data] First, to evaluate the denoising performance quantitatively, a denoising experiment was performed by adding synthetic noise to MR images. The dataset was generated by estimating M0, T2*, frequency offset, and field map from the test data. As preprocessing, the generated data were normalized to have a normal distribution. Noise with a standard deviation of 1.5 was then generated and added to the data. Second, denoising experiments were performed on real-world images (i.e., the original test data). For this experiment, a new network was trained with no additional noise.
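As a rough illustration of how noise-added multi-echo data could be synthesized from fitted parameters, the sketch below evaluates a single voxel. The abstract does not state the signal model, so the mono-exponential form M0 · exp(-TE/T2*) · exp(i(φ0 + 2πf·TE)) is an assumption, as are the function names, the example voxel values, and the choice to add independent Gaussian noise to the real and imaginary parts. The normalization step is omitted.

```python
import cmath
import math
import random


def echo_times(first_ms, spacing_ms, n_echoes):
    """Expand a first:spacing:last TE specification into a list (ms)."""
    return [first_ms + k * spacing_ms for k in range(n_echoes)]


def gre_signal(m0, t2star_ms, freq_hz, phase0, te_ms):
    """Assumed model: M0 * exp(-TE/T2*) * exp(i*(phase0 + 2*pi*f*TE))."""
    te_s = te_ms / 1000.0
    decay = math.exp(-te_ms / t2star_ms)
    return m0 * decay * cmath.exp(1j * (phase0 + 2 * math.pi * freq_hz * te_s))


def add_complex_noise(signal, sigma, rng):
    """Add independent Gaussian noise to the real and imaginary parts."""
    return [s + complex(rng.gauss(0, sigma), rng.gauss(0, sigma)) for s in signal]


# TE = 7.70:5.03:32.85 ms (6 echoes), as in the multi-orientation protocol.
tes = echo_times(7.70, 5.03, 6)

# Hypothetical voxel parameters, chosen only for illustration.
clean = [gre_signal(1.0, 30.0, 5.0, 0.2, te) for te in tes]

# Noise standard deviation of 1.5, as stated for the normalized data.
noisy = add_complex_noise(clean, sigma=1.5, rng=random.Random(0))
```

Under this assumed model the clean magnitude decays with TE while the noise level stays fixed, so later echoes have a lower signal-to-noise ratio than earlier ones.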

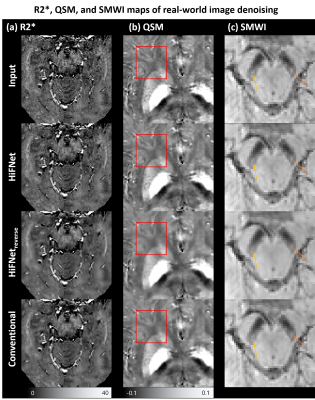

[Evaluation] Denoising performance was evaluated by comparing PSNR and SSIM. Additionally, R2* and quantitative susceptibility mapping (QSM) maps were estimated with and without denoising. For comparison, a conventional single-echo denoising network and a network with feature sharing in the reverse direction (i.e., from the last echo to the first), referred to as HiFNet_reverse, were tested. For real-world images, susceptibility map-weighted imaging (SMWI) maps were additionally estimated.
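For reference, PSNR can be computed as in the minimal sketch below. The abstract does not specify the peak value or whether the metric was computed on complex or magnitude data, so taking the peak as the maximum absolute value of the reference is an assumption, and the function name `psnr` is illustrative.

```python
import math


def psnr(reference, test, peak=None):
    """Peak signal-to-noise ratio in dB between two equal-length sequences.

    Real or complex values are accepted; abs() gives the error magnitude.
    """
    if len(reference) != len(test):
        raise ValueError("inputs must have the same length")
    mse = sum(abs(r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf
    if peak is None:
        # Assumption: use the largest reference magnitude as the peak.
        peak = max(abs(r) for r in reference)
    return 10 * math.log10(peak ** 2 / mse)
```

A smaller mean squared error against the reference gives a higher PSNR, so a better denoiser scores higher on the same reference image.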

Results

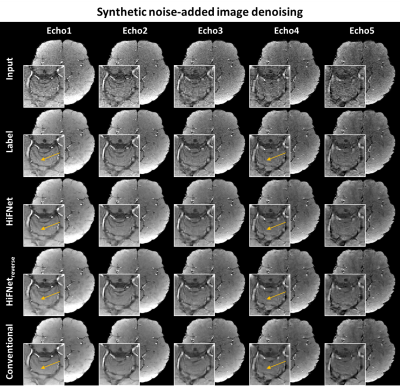

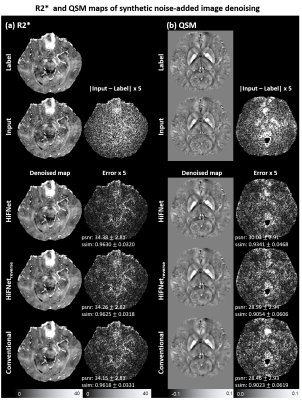

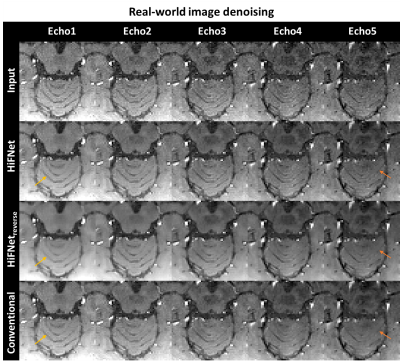

HiFNet successfully denoised both the synthetic noise (Fig. 2) and the real-world noise (Fig. 4). On the synthetic noise-added images, the proposed network performed better (PSNR: 30.01 ± 1.64; SSIM: 0.9695 ± 0.0019) than the conventional network, which does not share network features between echoes (PSNR: 29.74 ± 1.61, p < 0.001; SSIM: 0.9679 ± 0.0020, p < 0.001). HiFNet_reverse, which shares features in the reverse direction from the last echo to the first, performed worse than HiFNet (PSNR: 29.88 ± 1.63, p < 0.001; SSIM: 0.9684 ± 0.0019, p < 0.001). The denoised images from the synthetic-noise experiment (Fig. 2) show that HiFNet preserves more details than HiFNet_reverse and the conventional network, illustrating the effect of feature sharing. The same trends were observed in the R2* and QSM maps (Fig. 3). For real-world noise (Fig. 4), the proposed method improved all the echo images as well as the R2*, QSM, and SMWI maps (Fig. 5).

Conclusion and Discussion

In this study, we proposed HiFNet, a denoising network for complex-valued multi-echo GRE images that shares network features between echo images hierarchically from the first echo to the last. The proposed network performed better in the synthetic noise-added denoising experiments and preserved more details in the real-world denoising experiments for multi-echo GRE images and for R2*, QSM, and SMWI maps, demonstrating the effect of hierarchical feature sharing. Furthermore, because the same network weights are used for every echo image, the network is not tied to a specific number of echoes, which enables wide use of the method.

Acknowledgements

This work was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. NRF-2022R1A4A1030579) and RadiSen.

References

[1] L. Gondara, "Medical image denoising using convolutional denoising autoencoders," in 2016 IEEE 16th International Conference on Data Mining Workshops (ICDMW), IEEE, 2016, pp. 241-246.

[2] M. Kidoh et al., "Deep learning based noise reduction for brain MR imaging: tests on phantoms and healthy volunteers," Magnetic Resonance in Medical Sciences, vol. 19, no. 3, p. 195, 2020.

[3] J. Park et al., "Coil2Coil: Self-supervised MR image denoising using phased-array coil images," arXiv preprint arXiv:2208.07552, 2022.

[4] J. Liang, J. Cao, G. Sun, K. Zhang, L. Van Gool, and R. Timofte, "SwinIR: Image restoration using swin transformer," in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 1833-1844.

[5] J. Lehtinen et al., "Noise2noise: Learning image restoration without clean data," arXiv preprint arXiv:1803.04189, 2018.

[6] H. G. Shin et al., "χ-separation: Magnetic susceptibility source separation toward iron and myelin mapping in the brain," NeuroImage, vol. 240, p. 118371, 2021.

Figures