4799

A Physics-informed Conditional Wasserstein Autoencoder to Quantify Uncertainties in Accelerated 2D Dynamic Radial MRI1Physikalisch-Technische Bundesanstalt (PTB), Braunschweig and Berlin, Germany, 2Department of Biomedical Engineering, Technical University of Berlin, Berlin, Germany, 3School of Imaging Sciences and Biomedical Engineering, King’s College London, London, United Kingdom

Synopsis

Keywords: Image Reconstruction, Cardiovascular, Radial Acquisition

Uncertainty quantification (UQ) can provide important information about deep learning algorithms and help interpret the obtained results. UQ for multi-coil dynamic MRI is challenging due to the large scale of the problem and scarce training data. We approach these issues by learning distributions in a lower dimensional latent space using a conditional Wasserstein autoencoder while utilizing the MR data acquisition model and by exploiting spatio-temporal correlations of the cine MR images. Our results indicate excellent image quality accompanied with uncertainty maps that correlate well with estimation errors.Introduction

Uncertainty quantification (UQ) provides a promising method of characterizing deep learning (DL) algorithms. In 2D dynamic multicoil radial MRI, the lack of large training datasets due to the expensive acquisition process and large-scale of the problem poses major challenges in performing UQ, which involves exploring entire distributions of possible outputs given some input instead of yielding just single estimates. Previous works1 has demonstrated the usage of Monte Carlo dropout with aleatoric2 (MC-Drop+Ale) to accomplish this. However, MC-Drop+Ale assumes Gaussian posterior2,3 distributions and uncorrelated pixel-wise reconstructions3, which are not necessarily true. To this end, we propose to use a conditional Wasserstein autoencoder (cWAE) that does not impose such assumptions. Here, the distributions are learned in a lower dimensional latent space that requires less computations than in the original image space. The cWAE is a conditional extension of the Wasserstein autoencoder (WAE) framework4, that generalizes adversarial autoencoders5, and have better sample quality than variational autoencoders6. Our work further incorporates the radial acquisition forward model into the network architecture7, as well as spatiotemporal8 learning, in order to learn efficiently with limited data.Methods

Problem formulation: We denote the complex-valued dynamic MR images reconstructed from fully sampled $$$k$$$-space as $$$\mathbf{x}_{\mathrm{f}}\in\mathbb{C}^{N}=\mathbb{C}^{N_{x}\cdot\,N_{y}\cdot\,N_{t}}$$$. Given the reconstruction $$$\mathbf{x}_{\mathrm{I}}\in\mathbb{C}^{N}$$$ from radially undersampled $$$k$$$-space, our objective is to learn the conditional distribution $$$p(\mathbf{x}_{\mathrm{f}}|\mathbf{x}_{\mathrm{I}})$$$. For this purpose, we consider $$$\mathbf{x}_{\theta}\sim p_{\theta}(\mathbf{x}_{\theta}|\mathbf{x}_{\mathrm{I}})$$$ that are generated by $$$p_{\theta}$$$. Similar to [4], we propose to reformulate Kantorovich's formulation of the optimal transport problem into a conditional autoencoder framework, and define the following objective-$$W_{\mathrm{cWAE}}(p(\mathbf{x}_{\mathrm{f}}|\mathbf{x}_{\mathrm{I}}),p_{\theta}(\mathbf{x}_{\theta}|\mathbf{x}_{\mathrm{I}}))=\,\underset{q:q_{Z}=p_{Z}}{\displaystyle{\inf}}\,\mathbb{E}_{(\mathbf{x}_{\mathrm{I}},\mathbf{x}_{\mathrm{f}})\sim\,p(\mathbf{x}_{\mathrm{I}},\mathbf{x}_{\mathrm{f}})}\mathbb{E}_{\mathbf{z}\sim\,q(\mathbf{z}|\mathbf{x}_{\mathrm{I}},\mathbf{x}_{\mathrm{f}})}\{c(\mathbf{x}_{\mathrm{f}},f_{\theta}(\mathbf{z},\mathbf{x}_{\mathrm{I}}))\},\tag{1}$$

where $$$c(\cdot,\cdot):\mathbb{C}^{N}\times\mathbb{C}^{N}\rightarrow\mathbb{R}$$$ is a $$$L2$$$ loss in our case, $$$f_{\theta}$$$ is a deterministic function of $$$\mathbf{z}$$$ and $$$\mathbf{x}_{\mathrm{I}}$$$, and $$$q$$$ is the distribution of latent variable $$$\mathbf{z}\sim q(\mathbf{z}|\mathbf{x}_{\mathrm{I}},\mathbf{x}_{\mathrm{f}})$$$ such that its marginal $$$q_{Z}:=\int q(\mathbf{z}|\mathbf{x}_{\mathrm{I}},\mathbf{x}_{\mathrm{f}})p(\mathbf{x}_{\mathrm{f}}|\mathbf{x}_{\mathrm{I}})\mathrm{d}\mathbf{x}_{\mathrm{f}}$$$ is identical to $$$p_{z}:=p(\mathbf{z}|\mathbf{x}_{\mathrm{I}})$$$. To allow for a numerically feasible computation (1) is relaxed as-

$$W_{\mathrm{cWAE}}(p(\mathbf{x}_{\mathrm{f}}|\mathbf{x}_{\mathrm{I}}),p_{\theta}(\mathbf{x}_{\mathrm{\theta}}|\mathbf{x}_{\mathrm{I}}))=\,\underset{q}{\displaystyle{\inf}}\,\mathbb{E}_{(\mathbf{x}_{\mathrm{I}},\mathbf{x}_{\mathrm{f}})\sim\,p(\mathbf{x}_{\mathrm{I}},\mathbf{x}_{\mathrm{f}})}\mathbb{E}_{\mathbf{z}\sim\,q(\mathbf{z}|\mathbf{x}_{\mathrm{I}},\mathbf{x}_{\mathrm{f}})}\{c(\mathbf{x}_{\mathrm{f}},f_{\theta}(\mathbf{z},\mathbf{x}_{\mathrm{I}}))\}+\mathcal{D}(q_{Z},p_{Z}),\tag{2}$$

where $$$\mathcal{D}$$$ is any divergence measure in the latent space. Here, the optimal $$$q$$$ that minimizes (2) can be interpreted as an encoder that encodes $$$\mathbf{z}$$$ which is decoded back as $$$\mathbf{x}_{\theta}=f_{\theta}(\mathbf{z},\mathbf{x}_{\mathrm{I}})$$$, provided $$$q_{Z}$$$ is as close as possible to the $$$p_{Z}$$$ with respect to $$$\mathcal{D}$$$.

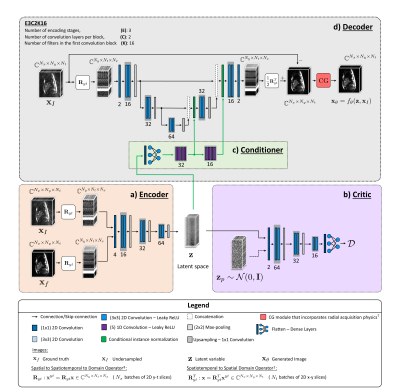

Implementation: As shown in Fig. 1, XT,YT networks8 were used to parameterize a deterministic encoder $$$q(\mathbf{z}|\mathbf{x}_{\mathrm{I}},\mathbf{x}_{\mathrm{f}})$$$, and a deterministic decoder $$$f_{\theta}(\mathbf{z},\mathbf{x}_{\mathrm{I}})$$$. For reconstruction, the decoder utilizes the knowledge encoded in $$$\mathbf{z}$$$ in the form of conditional information through conditional instance normalization9 layers with learned parameters. The output $$$f_{\theta}$$$ is equipped with a data-consistency module which incorporates the model of a radial MR data acquisition7. As $$$\mathcal{D}$$$, the 1-Wasserstein distance was chosen and a WGAN which provides stable training was implemented10. In the sense defined by this metric, the WGAN framework tries to ensure that $$$q_{Z}$$$ is as close as possible to the assumed $$$p_{Z}=\mathcal{N}(\mathbf{0},\mathbf{I})$$$. During inference, we only employ the decoder by drawing $$$\mathbf{z}\sim\mathcal{N}(\mathbf{0},\mathbf{I})$$$ given some $$$\mathbf{x}_{\mathrm{I}}$$$, and compute pixel-wise standard deviations of the output samples, which serves as a measure of uncertainty of the reconstruction network.

Dataset: The networks in our study were trained, validated, and tested on data from 11, 4, and 4 subjects, respectively. This individually amounted to 144, 36, and 36 complex-valued cine MRI images with dimensions $$$320\times\,320\times\,30$$$.

Results

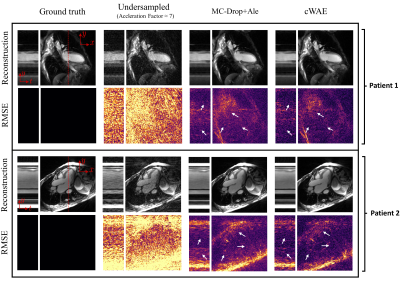

In Fig. 2, we visually compare the reconstruction quality of the cWAE with the MC-Drop+Ale method for two example patient slices. As identified by the white arrows, we see a decrease of RMSE for the proposed cWAE compared to MC-Drop+Ale. Furthermore, Fig. 3 shows that the cWAE has a superior image quality compared to MC-Drop+Ale in terms of NRMSE, PSNR and SSIM11.By using the WGAN framework to learn $$$p_{Z}=\mathcal{N}(\mathbf{0},\mathbf{I})$$$ in the latent space, as divergence $$$\mathcal{D}$$$ in Fig. 4a decreases with the progression of iterations, Fig. 4b shows that an arbitrary encoded slice starts to resemble a standard normal distribution.

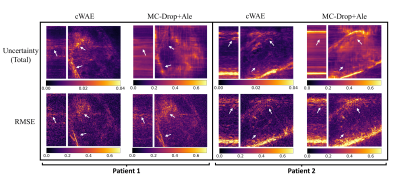

Figure 5 presents a comparison of uncertainty maps generated by the cWAE and MC-Drop+Ale for the same reconstructed slices as in Fig. 2. As can be seen, the uncertainty maps of the cWAE appear sharper than the MC-Drop+Ale, and they correlate well with the corresponding RMSE maps.

Discussion

The dropout rate is also an optimizable variable in the MC-Drop+Ale method2,3,12, which may cause optimizers to miss information when set too high. Our large-scale problem makes extensive hyper-parameter searches difficult, hence we fixed the dropout rate to 0.2 based on preliminary experiments. Unlike MC-Drop+Ale, cWAE does apply dropouts and utilizes all the learnable parameters to efficiently capture relevant information from the training dataset.As shown in Fig. 2 and 3, this helps in achieving promising performance without extensive hyper-parameter tuning. Figure 4 illustrates the successful learning of a standard normal distribution in a lower dimensional latent space. This is much more computationally efficient and stable than learning a complex multimodal distribution in the higher-dimensional original image space. Further, based on the strong correlation of uncertainties provided by cWAE with the RMSE in Fig. 5, they can potentially be utilized to improve subsequent post-processing techniques (e.g. segmentation).

Conclusion

Using cWAE, we successfully quantified uncertainties that correlate well with RMSE in a high-dimensional and computationally demanding application of 2D multi-coil dynamic MRI by learning the distribution in smaller latent space while yielding high image quality.Acknowledgements

We gratefully acknowledge the support of the German Research Foundation, project number GRK2260, BIOQIC.References

[1] Brahma S, Schäffter T, Kolbitsch C, Kofler A. Data-Efficient Uncertainty Quantification for Radial Cardiac Cine MR Image Reconstruction. In ISMRM Abstract 2022 (No. 1840).

[2] Kendall A, Gal Y. What uncertainties do we need in bayesian deep learning for computer vision?. Advances in neural information processing systems 2017; 30.

[3] Gal Y, Ghahramani Z. Dropout as a bayesian approximation: representing model uncertainty in deep learning. In: International conference on machine learning, PMLR. ;2016:1050-1059

[4] Tolstikhin I, Bousquet O, Gelly S, Schölkopf B. Wasserstein Auto-Encoders. In 6th International Conference on Learning Representations (ICLR) 2018.

[5] Makhzani A, Shlens J, Jaitly N, Goodfellow I, Frey B. Adversarial Autoencoders. In International Conference on Learning Representations (ICLR) 2016.

[6] Welling M, Kingma DP. Auto-encoding variational bayes. In International Conference on Learning Representations (ICLR) 2014.

[7] Kofler A, Haltmeier M, Schaeffter T, Kolbitsch C. An end-to-end-trainable iterative network architecture for accelerated radial multi-coil 2D cineMRimage reconstruction. MedicalPhysics2021; 48(5): 2412–2425.

[8] Kofler A, Dewey M, Schaeffter T, Wald C, Kolbitsch C. Spatio-temporal deep learning-based undersampling artefact reduction for 2D radial cine MRI with limited training data. IEEE Trans Med Imaging 2019; 39(3): 703–717.

[9] Dumoulin V, Shlens J, Kudlur M. A Learned Representation For Artistic Style. In International Conference on Learning Representations (ICLR) 2017.

[10] Arjovsky M, Chintala S, Bottou L. Wasserstein generative adversarial networks. In International conference on machine learning 2017 Jul 17 (pp. 214-223). PMLR.

[11] Wang Z, Bovik AC, Sheikh HR, Simoncelli EP. Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 2004; 13(4): 600–612.

[12] Gal Y. Uncertainty in deep learning. PhD thesis, University of Cambridge 2016.

Figures