4787

Transferable Deep Learning for Fast MR Imaging

¹Radiology, Mayo Clinic at Arizona, Phoenix, AZ, United States; ²CIDSE, Arizona State University, Tempe, AZ, United States

Synopsis

Keywords: Machine Learning/Artificial Intelligence, Data Processing, Reconstruction

Artificial intelligence (AI) applications in magnetic resonance imaging (MRI) have been implemented in routine clinical practice. However, MRI still faces a practical and persistent challenge: long acquisition times. These lead to two prominent issues in health care, high cost and poor patient experience, and they are also a source of degraded image quality (e.g., motion artifacts). In this study, we propose novel deep learning (DL) architectures combined with transfer learning to address this challenge. We then apply this AI technique to image reconstruction in MRI with very fast acquisition.

Introduction

Magnetic Resonance Imaging (MRI) is widely used in the diagnosis of oncological, neurological, musculoskeletal and other diseases. Despite many years of technological development, MRI still faces a practical and persistent challenge: long acquisition times. These lead to two prominent issues in health care, high cost and poor patient experience, and they are also a source of degraded image quality (e.g., motion artifacts). Deep learning (DL) approaches for accelerating MRI have been proposed in recent years [1,2,3], and promising preliminary results have been reported in terms of conventional reconstruction metrics. However, existing approaches are still far from clinical adoption because of the following practical barriers:

(1) lack of the large-scale labelled datasets needed to train lesion-specific models;
(2) data heterogeneity across different body parts;
(3) variability in sensor characteristics across vendors and/or MRI system generations; and
(4) domain-dependent evaluation metrics for assessing the clinical significance of reconstruction quality.

In this study, we developed a feature refinement-based transfer learning approach [4, 5] for vendor transfer in MR imaging with very fast acquisition. The results demonstrate that the proposed approach significantly improves reconstruction quality at shorter scan times and with limited data.

Materials and Methods
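The aliased images that the network learns from are produced by undersampling k-space. The experiments reported under Results use 4x undersampling, but the abstract does not specify the sampling pattern.

The following is only a minimal sketch under stated assumptions:

- single-coil, retrospective Cartesian undersampling along one phase-encode direction;
- a fully sampled low-frequency band of 8% of the columns (a fastMRI-style choice);
- a toy rectangular image standing in for ACR phantom data.

The function names `cartesian_mask` and `zero_filled_recon` are hypothetical. The sketch also forms the residual (aliased minus original) that a residual-learning CNN would be trained to predict.

```python
import numpy as np

def cartesian_mask(shape, acceleration=4, center_fraction=0.08, seed=0):
    """Hypothetical 1D random Cartesian mask over k-space columns (phase encodes).

    A central band of low-frequency columns is always kept; outer columns are
    kept at random so that about 1/acceleration of all columns are sampled.
    """
    rng = np.random.default_rng(seed)
    ny, nx = shape
    num_center = int(round(nx * center_fraction))
    # Sampling probability for the outer columns, chosen so that the expected
    # number of sampled columns is nx / acceleration.
    prob = (nx / acceleration - num_center) / (nx - num_center)
    cols = rng.uniform(size=nx) < prob
    start = (nx - num_center) // 2
    cols[start:start + num_center] = True  # fully sampled low-frequency band
    return np.tile(cols, (ny, 1))  # same column pattern for every row


def zero_filled_recon(image, mask):
    """Zero-filled reconstruction: undersample centered k-space, inverse FFT, magnitude."""
    kspace = np.fft.fftshift(np.fft.fft2(image))  # move the DC component to the array center
    undersampled = kspace * mask                   # unsampled columns become zero
    return np.abs(np.fft.ifft2(np.fft.ifftshift(undersampled)))


# Toy example: a rectangular "phantom" in place of real ACR data (assumption)
image = np.zeros((128, 128))
image[32:96, 40:88] = 1.0

mask = cartesian_mask(image.shape, acceleration=4)
aliased = zero_filled_recon(image, mask)

# Residual-learning target: the CNN predicts (aliased - original), and the
# reconstruction is obtained as aliased - predicted_residual.
residual_target = aliased - image
reconstruction = aliased - residual_target  # equals `image` for a perfect predictor
print(f"sampled fraction: {mask[0].mean():.2f}")
```

Learning the residual rather than the image itself lets the network concentrate on the aliasing pattern. The low-frequency content is already present in the zero-filled input.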

A convolutional neural network (CNN), based on a modification of the widely used U-Net architecture, is implemented to learn the differences between the aliased images and the original images. Because data availability is limited, transfer learning was introduced.

The transfer learning model uses two complementary mechanisms:

- knowledge transfer in the form of model parameters (parameter transfer, PT), which refines representation learning for the target dataset/domain;
- feature representation transfer (FT) from the source, which refines the target datasets.

Weight regularization ensures that the parameters do not change drastically. This avoids overfitting to the target dataset and mitigates catastrophic forgetting.

A total of 270 ACR T1 phantom imaging datasets (135 on a GE 3.0T MR scanner and 135 on a Siemens 3.0T MR scanner) were collected with different slice thicknesses, orientations, and spatial resolutions. These datasets were used to validate the newly developed AI-based MRI reconstruction model and the transfer learning approach. Peak signal-to-noise ratio (PSNR) and the structural similarity index measure (SSIM) are used for image quality assessment.

Results
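The parameter transfer (PT) with weight regularization described under Materials and Methods can be illustrated on a toy problem. The abstract does not state which regularizer is used, so the sketch below makes these assumptions:

- an L2-SP-style penalty, λ‖w − w_source‖², which pulls the fine-tuned weights back toward the source-trained solution;
- a linear least-squares model standing in for the VFN;
- 20 synthetic target samples.

The function `fine_tune_l2sp` and all data are hypothetical.

```python
import numpy as np

def fine_tune_l2sp(X, y, w_source, lam=1.0, lr=0.01, steps=2000):
    """Fine-tune a linear model on target data with an L2-SP-style penalty.

    Minimizes mean((X @ w - y)**2) + lam * ||w - w_source||**2 by gradient
    descent, starting from the source weights (parameter transfer).
    """
    w = w_source.copy()  # initialize from the source model instead of from scratch
    n = X.shape[0]
    for _ in range(steps):
        grad_task = 2.0 * X.T @ (X @ w - y) / n   # gradient of the target MSE
        grad_reg = 2.0 * lam * (w - w_source)     # pulls w back toward the source weights
        w -= lr * (grad_task + grad_reg)
    return w


rng = np.random.default_rng(0)
w_source = np.array([1.0, -2.0, 0.5, 3.0, 0.0])      # weights "learned" on the source domain
w_target = w_source + rng.normal(scale=0.3, size=5)  # slightly shifted target domain
X_target = rng.normal(size=(20, 5))                  # only 20 target samples
y_target = X_target @ w_target + rng.normal(scale=0.1, size=20)

w_free = fine_tune_l2sp(X_target, y_target, w_source, lam=0.0)  # plain fine-tuning
w_reg = fine_tune_l2sp(X_target, y_target, w_source, lam=1.0)   # regularized fine-tuning
print(np.linalg.norm(w_free - w_source), np.linalg.norm(w_reg - w_source))
```

With λ = 0 this reduces to ordinary fine-tuning from the source initialization. Increasing λ trades fit to the small target set for staying close to the source solution, which is the mechanism used to limit overfitting and forgetting.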

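PSNR and SSIM, the image quality metrics named under Materials and Methods, can be computed as below. PSNR follows its standard definition, 10·log10(MAX²/MSE).

The abstract does not say which SSIM implementation was used. `global_ssim` is a simplified, hypothetical single-window version of the SSIM formula computed over the whole image. The standard index averages the same expression over local (typically Gaussian-weighted) windows, so values from this sketch will not match library implementations exactly. K1 = 0.01 and K2 = 0.03 are the usual default constants.

```python
import numpy as np

def psnr(reference, test, data_range):
    """Peak signal-to-noise ratio in dB; data_range is the maximum possible intensity span."""
    mse = np.mean((reference.astype(np.float64) - test.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)


def global_ssim(reference, test, data_range, k1=0.01, k2=0.03):
    """Single-window SSIM over the whole image (simplified; standard SSIM uses local windows)."""
    x = reference.astype(np.float64)
    y = test.astype(np.float64)
    c1 = (k1 * data_range) ** 2  # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    return ((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    )


reference = np.zeros((8, 8))
reference[2:6, 2:6] = 1.0
shifted = reference + 0.1  # constant offset of 0.1, so MSE = 0.01
print(psnr(reference, shifted, data_range=1.0))             # about 20 dB
print(global_ssim(reference, reference, data_range=1.0))    # approximately 1.0 for identical images
```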
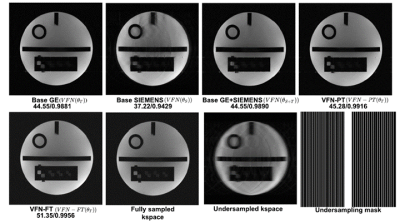

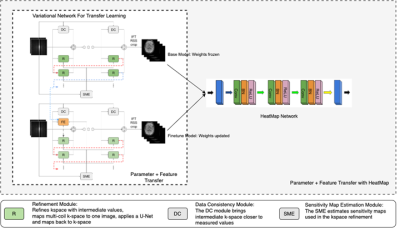

We extend the Variational Feedback Network (VFN) [3] with a Feature Extraction (FE) module; the proposed AI reconstruction architecture is shown in Figure 1. The experiments transfer the mapping knowledge learned from phantom datasets acquired on a Siemens MR scanner to datasets of the same standard ACR phantom acquired on a GE MR scanner. We use the Siemens phantom data as the source dataset (100 training volumes) and the GE data as the target dataset (20 training volumes). Due to space limitations, we report outcomes only for the following models:

(1) the 4x undersampled k-space reconstruction;
(2) VFN (Siemens100), a baseline model trained from scratch on the Siemens 100-volume dataset;
(3) VFN (GE20), a baseline model trained from scratch on the GE 20-volume dataset;
(4) FT-VFN, a model fine-tuned on the GE 20-volume dataset with feature transfer learning; and
(5) PT-VFN, a model trained on the GE 20-volume dataset with weight transfer learning from model (2).

Typical reconstructed images and quantitative results are shown in Figure 2 and Table 1. They suggest that the proposed PT-VFN and FT-VFN algorithms perform better than the models without transfer learning.

Discussion and Conclusions
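Feature representation transfer (FT), as opposed to weight transfer, reuses a representation learned on the source domain and trains only a small component on the target data. The abstract's Feature Extraction module is not described in enough detail to reproduce, so this toy sketch swaps in a much simpler technique:

- principal components learned on plentiful "source" samples act as a frozen feature extractor;
- only a ridge-regression head is fitted on 20 "target" samples.

The data, dimensions, and function names are hypothetical and unrelated to MRI.

```python
import numpy as np

def learn_source_representation(X_source, k):
    """Learn a k-dimensional linear feature extractor (top principal directions) on source data."""
    mean = X_source.mean(axis=0)
    _, _, vt = np.linalg.svd(X_source - mean, full_matrices=False)
    return mean, vt[:k].T  # shapes (d,) and (d, k)


def extract_features(X, mean, basis):
    """Apply the frozen source extractor; its parameters are never updated on target data."""
    return (X - mean) @ basis


def fit_target_head(F, y, alpha=1e-3):
    """Fit only a small ridge-regression head (with intercept) on target features."""
    F1 = np.hstack([F, np.ones((F.shape[0], 1))])  # append an intercept column
    return np.linalg.solve(F1.T @ F1 + alpha * np.eye(F1.shape[1]), F1.T @ y)


def predict(F, head):
    return np.hstack([F, np.ones((F.shape[0], 1))]) @ head


rng = np.random.default_rng(0)
mixing = rng.normal(size=(5, 50))  # shared 5-D structure embedded in 50-D data
beta = rng.normal(size=5)          # target-task coefficients on the latent factors

def sample(n):
    """Draw n samples: 50-D observations and their (noise-free) target values."""
    z = rng.normal(size=(n, 5))
    return z @ mixing + 0.01 * rng.normal(size=(n, 50)), z @ beta

X_source, _ = sample(500)          # plentiful source data (labels unused here)
X_target, y_target = sample(20)    # scarce target data
X_test, y_test = sample(200)

mean, basis = learn_source_representation(X_source, k=5)
head = fit_target_head(extract_features(X_target, mean, basis), y_target)
y_pred = predict(extract_features(X_test, mean, basis), head)
rel_error = np.linalg.norm(y_pred - y_test) / np.linalg.norm(y_test)
print(f"relative test error: {rel_error:.3f}")
```

Only `head` (six numbers here) is estimated from the 20 target samples. The extractor's parameters come entirely from the source data, which is what lets a small target set suffice in this toy setting.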

We have demonstrated successful vendor transfer with the proposed algorithm. Both the PT-VFN and FT-VFN deep learning models achieve higher PSNR and SSIM than the models without transfer learning. The proposed method can also predict high-resolution details that appear blurred or smudged in the outputs of other networks.

Combining feature transfer with weight transfer and regularization may provide additional benefits:

(1) low-resolution k-space is refined to a significant degree by extracting high-level features from the source dataset, which allows finer detail to be added to noisy low-resolution k-space; and
(2) feature transfer and weight regularization help keep the parameters in a region that generalizes to both the source and target datasets.

Acknowledgements

No acknowledgement found.

References

1. P. L. K. Ding, Z. Li, Y. Zhou, and B. Li, "Deep residual dense U-Net for resolution enhancement in accelerated MRI acquisition," Medical Imaging 2019: Image Processing, 2019.

2. M. J. Muckley et al., "Results of the 2020 fastMRI Challenge for Machine Learning MR Image Reconstruction," IEEE Transactions on Medical Imaging, vol. 40, no. 9, September 2021.

3. A. Sriram et al., "End-to-end variational networks for accelerated MRI reconstruction," International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Springer, 2020, pp. 64–73.

4. A. Kolesnikov, L. Beyer, X. Zhai, J. Puigcerver, J. Yung, S. Gelly, and N. Houlsby, "Large scale learning of general visual representations for transfer," arXiv preprint arXiv:1912.11370, 2019.

5. S. Kornblith, J. Shlens, and Q. V. Le, "Do better ImageNet models transfer better?," Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 2661–2671.

Figures

Figure 1: The architecture of the transferable variational feedback network. In this diagram, Base Model refers to […], which is trained on source dataset […]. During training of […] on […], only […] is updated. […] is used to transfer knowledge from other datasets to refine the low-resolution data available for […]. For each module, the parameter initialization strategy is shown for all three methods.