4736

Motion artifact assessment for magnetic resonance imaging using a learned combination of deep learning model predictions

¹Pattern Recognition Lab, Friedrich-Alexander-University Erlangen-Nuremberg, Erlangen, Germany; ²Siemens Healthineers, Erlangen, Germany

Synopsis

Keywords: Machine Learning/Artificial Intelligence, Artifacts, Image quality

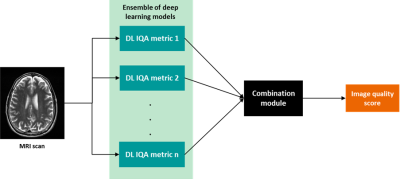

Magnetic resonance imaging (MRI) is a powerful imaging modality, but it is susceptible to various image quality problems. Today, technicians perform image quality assurance (IQA) during scan time as a manual, time-consuming, and subjective process. We propose a method towards adaptable, automated IQA of MR images that does not require a large annotated image database for training. Our method implements a machine learning-based module that combines multiple predictions from an ensemble of deep learning models trained to predict image quality metrics. The sensitivity of the method to image quality problems can be adapted to the clinical requirements of the end user.

Introduction

Magnetic resonance imaging (MRI) is a powerful imaging modality that provides high soft-tissue contrast without ionizing radiation. However, MR images can suffer from image quality problems such as artifacts arising from inadequate settings, patient non-compliance, or scanner deficiencies. Technicians conduct image quality assurance during scan time as a manual, time-consuming, and subjective process. Incorrect quality assessments occur particularly in stressful situations and with inexperienced technologists. An automated and objective image quality assessment (IQA) method available at the scanner could therefore be of great benefit in ensuring the quality of acquired images. In MR, image characteristics and the perception of adequate image quality vary greatly with anatomy, diagnostic task, and field strength. Radiologists also have individual (subjective) preferences regarding acceptable image quality and differ in their sensitivity to the severity of image quality problems. Despite great progress in the field of automated IQA [1-4], it remains a major challenge to find a method robust enough to adapt to the variable image quality requirements of end users.

Methods

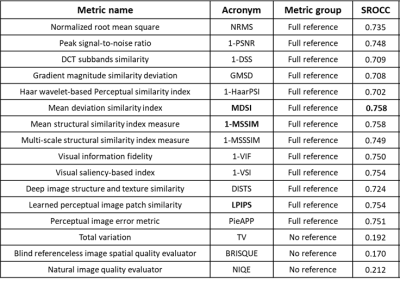

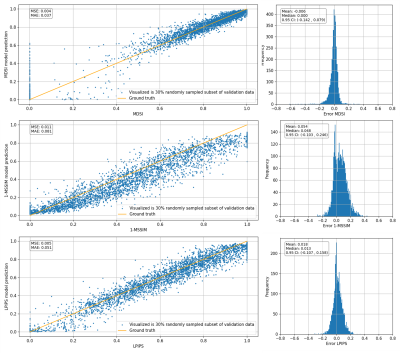

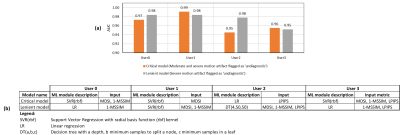

In this work, we propose a two-step approach (Fig. 1) for adaptable automated IQA. The first step is an image analysis module composed of an ensemble of deep learning (DL) models that are trained to predict image quality metrics (IQMs) for a given input image. The second step is a user-adaptable, machine learning (ML)-based module that takes these IQM predictions as input and outputs a final image quality prediction matched to the user's sensitivity to image quality problem severity. This ML module can be trained on a small annotated dataset provided by the user. An implementation of the proposed method is demonstrated for patient motion artifact assessment on a database of T2-weighted brain images with simulated patient motion. Images from 100 patients were obtained from the Human Connectome Project (HCP) database [5]. Motion artifacts were introduced into the original datasets using a custom-tailored routine [6] that accounts for time-dependent translation and the k-space sampling order. The simulated motion varied in frequency and severity to cover realistic scenarios across 141,598 2D image slices. A small subset (6279 images, i.e., <5%) was annotated for motion artifact severity by 4 experienced radiologists on a discrete scale from 1 (good) to 3 (severe); these scores were rescaled to the range 0 to 1. The annotated data were split into 4956 training and 1323 validation images, and the unannotated data into 66581 training and 11452 validation images. State-of-the-art IQMs [7-21] (Fig. 2) were computed for all pairs of original and simulated images using the PIQ library [22]. The IQMs were clipped at the 97th percentile and normalized to the range 0 to 1. The IQMs were then evaluated for their correlation with the expert annotations of motion artifact severity, and the three most highly correlated IQMs (Fig. 2), MDSI, 1-MSSIM, and LPIPS, were selected to train the DL models. The image analysis module consisted of three DenseNet-121 [23] regression models, each trained on one IQM.
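The label and metric preprocessing described above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: the 1 to 3 ratings are assumed to map linearly onto [0, 1], "normalized" is taken to mean min-max normalization after clipping at the 97th percentile, and metrics are ranked by SROCC against the ratings, with negatively correlated metrics reversed as noted for Fig. 2. All function names are hypothetical; the IQM values themselves would come from a library such as PIQ and are simply given as lists here.

```python
import math


def rescale_rating(score, low=1, high=3):
    """Map a discrete rating on [low, high] (1 = good, 3 = severe) linearly onto [0, 1]."""
    return (score - low) / (high - low)


def percentile(values, q):
    """q-th percentile (0-100) of a non-empty sequence, using linear interpolation."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def clip_and_normalize(values, q=97):
    """Clip at the q-th percentile, then min-max normalize onto [0, 1].

    Assumption: the normalization scheme is not specified; min-max is used here.
    """
    upper = percentile(values, q)
    clipped = [min(v, upper) for v in values]
    lower = min(clipped)
    span = upper - lower
    return [(v - lower) / span if span > 0 else 0.0 for v in clipped]


def ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            r[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return r


def srocc(x, y):
    """Spearman rank-order correlation: the Pearson correlation of the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(sxx * syy) if sxx > 0 and syy > 0 else 0.0


def select_metrics(iqms, ratings, k=3):
    """Return the k metrics with the highest SROCC against the ratings.

    A negatively correlated metric is reported as "1-<name>": reversing normalized
    values (v -> 1 - v) flips the sign of its SROCC, as with 1-MSSIM in Fig. 2.
    """
    scored = []
    for name, values in iqms.items():
        rho = srocc(values, ratings)
        scored.append((name, rho) if rho >= 0 else ("1-" + name, -rho))
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]
```

In the setting described, `iqms` would hold each normalized metric over the annotated validation images, and `k=3` would return the metrics used as DL training targets.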
Data augmentation (rotations by 90°, 180°, and 270°, and horizontal and vertical flips) was applied to the training data. After a hyperparameter search, all models were trained with an MSE loss and an SGD optimizer using a step-decay learning rate of 0.007 for the first 250 epochs followed by 10⁻⁵ for 150 epochs. Training was stopped early if the validation loss did not fall below its global minimum for 40 epochs. Various configurations of decision tree (DT), support vector regression (SVR), and linear regression (LR) models were trained to map the predictions of the DL-based IQM models to each rater's individual annotations. The training data for the ML module were class-balanced by under-sampling the over-represented categories.

Results and discussion
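A minimal sketch of an ML module for a single rater, assuming linear regression (one of the three model families listed) and random under-sampling of each rating category down to the size of the smallest one. The actual model configurations (Fig. 4b) and balancing details are not given, and all names are hypothetical; a practical implementation would more likely rely on an established library than on this hand-written least-squares solver.

```python
import random
from collections import defaultdict


def undersample(features, labels, seed=0):
    """Randomly under-sample every rating category to the size of the smallest one.

    Assumption: plain random under-sampling; the exact procedure is not specified.
    """
    by_class = defaultdict(list)
    for x, y in zip(features, labels):
        by_class[y].append(x)
    n_min = min(len(xs) for xs in by_class.values())
    rng = random.Random(seed)
    out_x, out_y = [], []
    for y in sorted(by_class):
        for x in rng.sample(by_class[y], n_min):
            out_x.append(x)
            out_y.append(y)
    return out_x, out_y


def solve(a, b):
    """Solve the square system a w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def fit_linear(features, labels):
    """Ordinary least squares with intercept via the normal equations X^T X w = X^T y."""
    X = [[1.0] + list(x) for x in features]
    d = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(d)] for i in range(d)]
    xty = [sum(row[i] * y for row, y in zip(X, labels)) for i in range(d)]
    return solve(xtx, xty)


def predict(w, x):
    """Final quality score for one image from its predicted IQMs, e.g. (MDSI, 1-MSSIM, LPIPS)."""
    return w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
```

Because each rater gets their own fitted weights, the same three IQM predictions can yield different final scores for different users, which is the adaptability the method relies on.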

The IQMs evaluated in this work (Fig. 2) show good correlation with expert assessment of motion artifact severity. These easy-to-understand IQMs are also efficient to compute for a large image database, which makes them well suited as labels for training DL-based motion artifact assessment models. The distributions of predicted versus actual normalized metric values and of the prediction errors (Fig. 3) indicate that the DL models predict IQM values accurately. The ML modules trained with individual users' annotations achieve very high AUC (>0.95) in classifying images as 'diagnostic' or 'undiagnostic' at two ground-truth thresholds, 0.3 and 0.7 (Fig. 4). The two models presented for each user, a critical and a lenient model, show different sensitivities to motion artifact severity. The critical model maps the predicted IQM values to a final image quality classification that flags images with moderate to severe motion artifacts as 'undiagnostic', whereas the lenient model flags only images with severe motion artifacts as 'undiagnostic'. Thus, using only a small annotated training dataset, the proposed method allows end users to select an IQA model suited to their clinical requirements.

Conclusion
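The AUC evaluation of Fig. 4 can be sketched as below. This assumes predicted and ground-truth scores share the rescaled 0 (good) to 1 (severe) scale, that a ground-truth score above the threshold marks an image as 'undiagnostic' (the positive class), and that 0.3 and 0.7 belong to the critical and lenient models respectively; that last mapping is inferred from the model descriptions rather than stated explicitly. AUC is computed as the Mann-Whitney statistic, i.e., the probability that a randomly chosen positive image receives a higher predicted score than a randomly chosen negative one. Function names are illustrative.

```python
def auc(scores, positives):
    """ROC AUC as the Mann-Whitney statistic: the fraction of (positive, negative)
    pairs in which the positive sample scores higher; ties count one half."""
    pos = [s for s, p in zip(scores, positives) if p]
    neg = [s for s, p in zip(scores, positives) if not p]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Assumed mapping, inferred from the model descriptions rather than stated explicitly:
# ground truth above 0.3 (moderate or severe) counts as 'undiagnostic' for the critical
# model, above 0.7 (severe only) for the lenient model.
THRESHOLDS = {"critical": 0.3, "lenient": 0.7}


def auc_at_threshold(predicted, ground_truth, threshold):
    """Binarize rescaled ground truth (0 = good, 1 = severe) and score the predictions."""
    return auc(predicted, [g > threshold for g in ground_truth])
```

With real data, `predicted` would be one user's ML-module outputs on the annotated validation images and `ground_truth` that user's rescaled ratings.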

The proposed method creates IQA models trained on an individual end user's subjective image quality preferences using a small annotated training dataset. The method can be applied to adapt IQA models to a broad range of anatomies, diagnostic tasks, and image quality problems that are easy to simulate (e.g., noise, Gibbs ringing, blurring). Models trained on simulated data may not perform optimally on real images; this gap can be bridged by fine-tuning on a small annotated database of real images. In future work, the robustness of the proposed method can be tested on clinical data.

Acknowledgements

Data collection and sharing for this project was provided by the Human Connectome Project (HCP; Principal Investigators: Bruce Rosen, M.D., Ph.D., Arthur W. Toga, Ph.D., Van J. Wedeen, M.D.). HCP funding was provided by the National Institute of Dental and Craniofacial Research (NIDCR), the National Institute of Mental Health (NIMH), and the National Institute of Neurological Disorders and Stroke (NINDS). HCP data are disseminated by the Laboratory of Neuro Imaging at the University of Southern California.

References

1. Lorch, B.: Automated detection of motion artefacts in MR imaging using decision forests. Journal of Medical Engineering. (2017)

2. Esses, S.: Automated image quality evaluation of T2-weighted liver MRI utilizing deep learning architecture. Journal of Magnetic Resonance Imaging. 47, (2017)

3. Kastryulin, S.: Image Quality Assessment for Magnetic Resonance Imaging. arXiv preprint arXiv:2203.07809. (2022)

4. Sciarra, A.: Reference-less SSIM Regression for Detection and Quantification of Motion Artefacts in Brain MRIs. Medical Imaging with Deep Learning. (2022)

5. Van Essen, D.C.: The WU-Minn Human Connectome Project: An overview. NeuroImage. 80:62-79, (2013)

6. Braun, S.: Motion Detection and Quality Assessment of MR images with Deep Convolutional DenseNets. Proc. ISMRM. 2715, (2018)

7. Peak signal-to-noise ratio https://en.wikipedia.org/wiki/Peak_signal-to-noise_ratio/

8. Balanov, A.: Image quality assessment based on DCT subband similarity. 2015 IEEE International Conference on Image Processing (ICIP). (2015)

9. Xue, W.: Gradient Magnitude Similarity Deviation: A Highly Efficient Perceptual Image Quality Index. arXiv:1308.3052. (2013)

10. Reisenhofer, R.: A Haar wavelet-based perceptual similarity index for image quality assessment. arXiv:1607.06140. (2018)

11. Nafchi, H.: Mean Deviation Similarity Index: Efficient and Reliable Full-Reference Image Quality Evaluator. IEEE Access. (2016)

12. Mean structural similarity index measure https://en.wikipedia.org/wiki/Structural_similarity/

13. Wang, Z.: Multiscale structural similarity for image quality assessment. The Thirty-Seventh Asilomar Conference on Signals, Systems & Computers. (2003)

14. Sheikh, H.: Image information and visual quality. IEEE Transactions on Image Processing. 15, 430-444, (2006)

15. Zhang, L.: VSI: A Visual Saliency-Induced Index for Perceptual Image Quality Assessment. IEEE Transactions on Image Processing. 23, 4270-4281, (2014)

16. Ding, K.: Image Quality Assessment: Unifying Structure and Texture Similarity. IEEE Transactions on Pattern Analysis and Machine Intelligence. (2020)

17. Zhang, R.: The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. arXiv:1801.03924. (2018)

18. Prashnani, E.: PieAPP: Perceptual Image-Error Assessment through Pairwise Preference. arXiv:1806.02067. (2018)

19. Total variation https://www.wikiwand.com/en/Total_variation_denoising

20. Mittal, A.: No-Reference Image Quality Assessment in the Spatial Domain. IEEE Transactions on Image Processing. 21, 4695-4708, (2012)

21. Mittal, A.: Making a Completely Blind Image Quality Analyzer. IEEE Signal Processing Letters. 20, 209-212, (2013)

22. PyTorch Image Quality (PIQ) library https://piq.readthedocs.io/en/latest/

23. Huang, G.: Densely Connected Convolutional Networks. arXiv preprint arXiv:1608.06993. (2016)

Figures

Fig. 2. Correlation between normalized IQMs and expert annotations on the annotated validation data (1323 images). The Spearman rank-order correlation coefficient (SROCC) [24] quantifies the correlation with expert annotations. Full-reference metrics require both the distorted and the clean image for computation; no-reference metrics require only a single image. Metrics negatively correlated with the clinical annotations were reversed (e.g., 1-MSSIM).

Fig. 3. Distribution of predicted scores against actual normalized values for MDSI, 1-MSSIM, and LPIPS on the unannotated validation data (11452 images). Scatter plots (left) show predicted values against actual normalized metric values; the box at the top left reports the MSE and MAE. Histograms (right) show the distribution of the error (predicted score - actual metric); the text at the top left reports the mean, median, and 95% confidence interval of the distribution.

Fig. 4. (a) Comparison of the AUC of the critical and lenient models for each user on the annotated validation data (1323 images). (b) Details of the optimal ML model configuration for the critical and lenient models of each user.