4520

Full and weak supervision networks for meniscus segmentation and multi classification based on MRI: data from the Osteoarthritis Initiative

1Department of Medical Imaging, The Third Affiliated Hospital of Southern Medical University, GuangZhou, China, 2Electronics and Communication Engineering, Sun Yat-sen University, GuangZhou, China, 3Philips Healthcare, GuangZhou, China

Synopsis

Keywords: Joints, meniscus

Quantitative MRI assessment of meniscus morphology (for example, with the MOAKS system) has shown clinical relevance in the diagnosis of osteoarthritis. However, it requires a heavy workload and often leads to deviations due to reader subjectivity. Therefore, building on automated segmentation of the six meniscal horns using fully and weakly supervised networks, we established two-layer cascaded classification models that detect meniscal lesions and further classify them into three types, and achieved excellent performance. This can improve the efficiency and accuracy of using quantitative MRI to study knee osteoarthritis (KOA) in the future.

Purpose and Introduction

Meniscal abnormality is associated with knee pain, knee osteoarthritis (KOA), and knee structural damage[1]. Magnetic Resonance Imaging (MRI) is the most common imaging method for assessing meniscus morphology[2]. The MRI Osteoarthritis Knee Score (MOAKS)[3] is one of the most common semi-quantitative scoring systems for meniscus evaluation and helps characterize the meniscus phenotype of KOA. However, MOAKS scoring requires a heavy workload and often leads to deviations due to reader subjectivity. In recent years, deep learning (DL) has been widely used to analyze MR images, for tasks such as meniscus segmentation and damage classification[4][5][6]. However, manually delineating the Region of Interest (ROI) is tedious and time-consuming. Therefore, our study aims to develop a fully automated method, based on weakly supervised networks, that segments the knee menisci into six horns: the anterior horn, body, and posterior horn of both the medial meniscus (MM) and the lateral meniscus (LM). We then classify meniscal injury in each of the six horns into 3 grades: no tear, tear, and maceration.

Materials and Methods

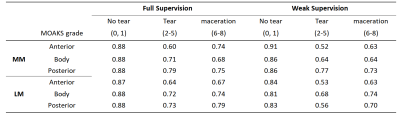

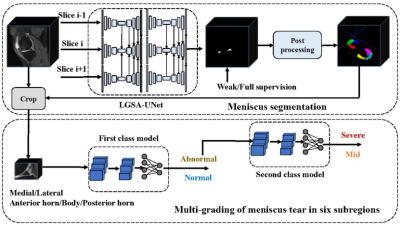

The images and data in this study were obtained from the Osteoarthritis Initiative (OAI). We randomly selected 128 baseline knee MRI scans from the incident osteoarthritis, progression, and total knee replacement cohorts of the OAI to establish the segmentation model. From the participants in these three cohorts, after excluding those without MRI data or MOAKS measurements, 1534 baseline knee MRIs were included for the classification model. We evaluated study participants on sagittal Intermediate-Weighted Turbo Spin-Echo (IW TSE) MRI scans.

According to MOAKS, the MM and LM are each scored at the anterior horn, body, and posterior horn. Meniscal abnormalities are scored as 0=normal meniscus, 1=signal abnormality that is not severe enough to be considered a meniscal tear, 2=radial tear, 3=horizontal tear, 4=vertical tear, 5=complex tear, 6=partial maceration, 7=progressive partial maceration, 8=complete maceration. On this basis, we classified meniscus injury in each of the six horns into 3 grades: no tear (0, 1), tear (2-5), and maceration (6-8).

The overall method we propose is shown in Figure 1. First, our proposed segmentation network, LGSA-UNet, which is based on localization guidance and siamese adjustment, segments the meniscus as a whole. Second, a post-processing algorithm divides the meniscus into six subregions. Finally, based on the subregion segmentation results, we crop the original knee image and input it into the two-layer cascaded classification models for tear grading. To train the LGSA-UNet, we use our proposed weak labels to generate pseudo labels through region growing, which greatly reduces labeling time. The pseudo labels are used for weak supervision and the real labels for full supervision. We used the Dice coefficient to evaluate the accuracy of automatic meniscus segmentation. Performance metrics for the classification model included area under the curve (AUC), sensitivity, and specificity.
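The grouping of MOAKS meniscal scores into three grades described above can be sketched as a small lookup. This is only an illustrative sketch: the names `Grade`, `moaks_to_grade`, and `has_lesion` are hypothetical, and the binary "lesion" target used here (any tear or maceration) is an assumption about how the first stage of the cascade is labeled, not something the abstract specifies.

```python
from enum import Enum


class Grade(Enum):
    """Three-grade grouping of MOAKS meniscal scores stated in the text."""
    NO_TEAR = "no tear"        # MOAKS 0-1
    TEAR = "tear"              # MOAKS 2-5
    MACERATION = "maceration"  # MOAKS 6-8


def moaks_to_grade(score: int) -> Grade:
    """Map a MOAKS meniscal score (0-8) to one of the three grades."""
    if not isinstance(score, int) or not 0 <= score <= 8:
        raise ValueError(f"MOAKS meniscal score must be an integer in 0..8, got {score!r}")
    if score <= 1:
        return Grade.NO_TEAR
    if score <= 5:
        return Grade.TEAR
    return Grade.MACERATION


def has_lesion(score: int) -> bool:
    """Assumed binary target: True for any tear or maceration."""
    return moaks_to_grade(score) is not Grade.NO_TEAR


# Hypothetical per-horn MOAKS scores for one knee (illustrative values only).
example_scores = {
    "MM anterior": 0, "MM body": 3, "MM posterior": 6,
    "LM anterior": 1, "LM body": 0, "LM posterior": 2,
}
example_grades = {horn: moaks_to_grade(s) for horn, s in example_scores.items()}
```

Under these assumptions, each of the six horns receives its own grade, so one knee contributes six labels to the classification task.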

Results

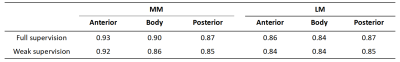

Segmentation performance

With full supervision, the segmentation model achieved high Dice scores of 0.93, 0.90, 0.87, 0.86, 0.84, and 0.87 for the anterior horn, body, and posterior horn of the MM and LM, respectively. With weak supervision, the Dice scores were 0.92, 0.86, 0.85, 0.84, 0.84, and 0.85 for the anterior horn, body, and posterior horn of the MM and LM, respectively (Table 1).
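For reference, the Dice coefficient reported above is conventionally defined as twice the overlap between the predicted and reference masks divided by the sum of their sizes. The sketch below is a minimal pure-Python version operating on flattened binary masks; the function name `dice_coefficient` and the convention of returning 1.0 when both masks are empty are assumptions for illustration, not details taken from the study.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], target: Sequence[int]) -> float:
    """Dice = 2*|P ∩ T| / (|P| + |T|) for two flattened binary masks."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    # Count voxels labeled 1 in both masks, and in each mask separately.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    size_sum = sum(1 for p in pred if p) + sum(1 for t in target if t)
    if size_sum == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return 2.0 * intersection / size_sum


# Toy example: 2 predicted voxels, 1 reference voxel, 1 voxel shared.
toy_dice = dice_coefficient([1, 1, 0, 0], [1, 0, 0, 0])
```

In a per-horn evaluation such as the one reported, this would be computed separately for each of the six subregion masks.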

Classification performance

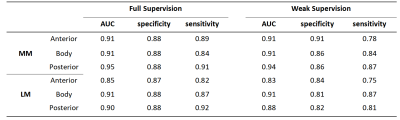

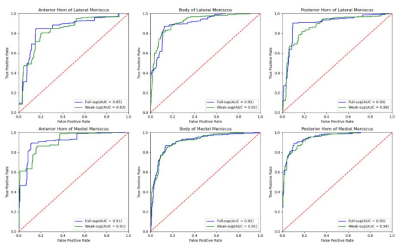

For binary classification with full supervision, the AUC values were 0.91, 0.91, 0.95, 0.85, 0.91, and 0.90 for the anterior horn, body, and posterior horn of the MM and LM, respectively; with weak supervision, the AUC values were 0.91, 0.91, 0.94, 0.83, 0.91, and 0.88 for the same six horns. Binary classification AUC values, accuracy, and sensitivity are reported in Table 2, and the corresponding ROC curves are shown in Figure 2. For multi-classification, AUC values ranged from 0.6 to 0.9 with full supervision and from 0.5 to 0.9 with weak supervision (Table 3).
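As a reminder of what these metrics measure, the sketch below computes AUC, sensitivity, and specificity for a binary classifier in plain Python. It is an illustration under stated assumptions, not the evaluation code used in the study: the function names and the 0.5 decision threshold are hypothetical, and AUC is computed through its rank interpretation (the probability that a randomly chosen positive case scores higher than a randomly chosen negative case, with ties counted as one half).

```python
from typing import Sequence, Tuple


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    # Each correctly ordered pair counts 1, each tie counts 0.5.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def sensitivity_specificity(
    labels: Sequence[int], scores: Sequence[float], threshold: float = 0.5
) -> Tuple[float, float]:
    """Sensitivity = TP/(TP+FN), specificity = TN/(TN+FP) at a score threshold."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative case")
    return tp / (tp + fn), tn / (tn + fp)


# Toy data (illustrative only): two negatives, two positives.
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
toy_auc = roc_auc(toy_labels, toy_scores)
toy_sens, toy_spec = sensitivity_specificity(toy_labels, toy_scores)
```

Because AUC is threshold-free while sensitivity and specificity depend on the chosen cutoff, the two kinds of metric are usually reported together, as in the tables referenced above.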

Conclusion

Deep learning models based on both full and weak supervision achieved excellent accuracy in segmentation and classification. They can improve the efficiency and accuracy of clinical diagnosis and help clarify the relationship between quantitative MRI findings and clinical practice.

Acknowledgements

Not applicable.

References

[1] Englund M, Guermazi A, Roemer FW, et al. Meniscal tear in knees without surgery and the development of radiographic osteoarthritis among middle-aged and elderly persons: The Multicenter Osteoarthritis Study. Arthritis Rheum. 2009;60(3):831-839. doi:10.1002/art.24383

[2] Lecouvet F, Van Haver T, Acid S, et al. Magnetic resonance imaging (MRI) of the knee: Identification of difficult-to-diagnose meniscal lesions. Diagn Interv Imaging. 2018;99(2):55-64. doi:10.1016/j.diii.2017.12.005

[3] Hunter DJ, Guermazi A, Lo GH, et al. Evolution of semi-quantitative whole joint assessment of knee OA: MOAKS (MRI Osteoarthritis Knee Score) [published correction appears in Osteoarthritis Cartilage. 2011 Sep;19(9):1168]. Osteoarthritis Cartilage. 2011;19(8):990-1002. doi:10.1016/j.joca.2011.05.004

[4] Norman B, Pedoia V, Majumdar S. Use of 2D U-Net Convolutional Neural Networks for Automated Cartilage and Meniscus Segmentation of Knee MR Imaging Data to Determine Relaxometry and Morphometry. Radiology. 2018;288(1):177-185.

[5] Bien N, Rajpurkar P, Ball RL, et al. Deep-learning-assisted diagnosis for knee magnetic resonance imaging: Development and retrospective validation of MRNet. PLoS Med. 2018;15(11):e1002699.

[6] Tack A, Shestakov A, Lüdke D, et al. A Multi-Task Deep Learning Method for Detection of Meniscal Tears in MRI Data from the Osteoarthritis Initiative Database. Front Bioeng Biotechnol. 2021;9:747217.

Figures