4031

Adaptive Deep Learning MR Image Enhancement with Property Constrained Unrolled Network

Zechen Zhou1, Ryan Chamberlain1, Praveen Gulaka1, Enhao Gong1, Greg Zaharchuk1, and Ajit Shankaranarayanan1

1Subtle Medical Inc, Menlo Park, CA, United States


Synopsis

Keywords: Machine Learning/Artificial Intelligence

An unrolled network with explicit MR image degradation modeling and physical property constraints, termed PGDNet, is proposed for adaptive image denoising and deblurring. Preliminary evaluation demonstrated that PGDNet achieves similar or superior image quality enhancement compared to conventional task-specific networks, and outperforms them in joint denoising and deblurring tasks. PGDNet provides a promising solution for adaptive MR image denoising and deblurring to restore the image quality of accelerated clinical MR scans.

Introduction

Deep learning (DL) based image enhancement approaches [1-3] provide promising and efficient solutions for restoring the image quality of accelerated MR scans. However, DL models usually struggle to generalize to low-quality (LQ) images that deviate from the training data, and a single DL model might not adapt well to both denoising and deblurring/super-resolution tasks. Recent DL methods [4,5] with explicit degradation modeling, which jointly estimate the degradation kernel and the high-quality (HQ) image, have demonstrated good blind super-resolution performance. In this work, we propose a proximal gradient descent (PGD) based unrolled network that injects degradation kernel properties for MR image denoising and deblurring. We also propose to supervise kernel estimation directly with the input LQ image, which avoids the inaccuracy of kernel pre-calibration. Preliminary performance is compared against traditional DL methods.

Methods

Blind MR Image Restoration with PGDNet

The blind MR image restoration problem can be formulated as:

$$\underset{k,x}{\arg\min}\ \frac{1}{2}\| y - k \ast x\|_2^2 + \psi(k) + \phi(x),$$

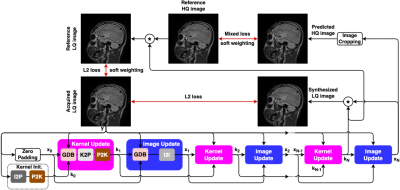

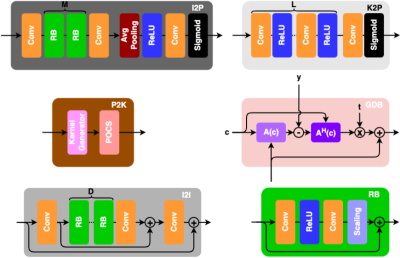

where $$$k$$$ and $$$x$$$ are the degradation kernel and the HQ image, $$$y$$$ is the input LQ image, $$$\ast$$$ denotes convolution, and the constraints on $$$k$$$ and $$$x$$$ are represented by $$$\psi(k)$$$ and $$$\phi(x)$$$. An iterative solver based on alternating optimization with the PGD algorithm can be converted into an unrolled network, termed PGDNet, whose overall architecture is illustrated in Figure 1. PGDNet consists of several kernel update and image update modules (four in this work). Each kernel update module contains a gradient descent block (GDB) and a kernel-to-kernel (K2K) block, and each image update module contains a GDB and an image-to-image (I2I) block. Figure 2 illustrates the detailed network structure of each module and block. Weights are shared across the kernel update modules and across the image update modules. The initial HQ image is estimated by zero-padding the LQ image, while a network transforms the LQ image into an initial degradation kernel.
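
As a hedged illustration of how one PGD iteration could map onto these modules, the alternating updates can be sketched as follows. The step sizes $$$\alpha$$$ and $$$\beta$$$, the iteration index $$$t$$$, the flipped operands $$$\tilde{x}$$$ and $$$\tilde{k}$$$, and the kernel-first ordering are assumptions introduced here for illustration; none of them is stated in the abstract.

$$f(k,x) = \frac{1}{2}\| y - k \ast x\|_2^2,$$

$$k^{(t+1)} = \mathrm{prox}_{\alpha\psi}\left(k^{(t)} - \alpha\, \tilde{x}^{(t)} \ast \left(k^{(t)} \ast x^{(t)} - y\right)\right),$$

$$x^{(t+1)} = \mathrm{prox}_{\beta\phi}\left(x^{(t)} - \beta\, \tilde{k}^{(t+1)} \ast \left(k^{(t+1)} \ast x^{(t)} - y\right)\right).$$

Here $$$\tilde{x}$$$ and $$$\tilde{k}$$$ denote the spatially flipped image and kernel, so that convolving with them applies the adjoint of the corresponding convolution (with the kernel gradient cropped to the kernel support). Under this reading, a GDB would compute the gradient step inside the parentheses, and the learned K2K and I2I blocks would take the place of the proximal operators $$$\mathrm{prox}_{\alpha\psi}$$$ and $$$\mathrm{prox}_{\beta\phi}$$$.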

Injection of Property Constraints

The K2K block is divided into a learnable, residual-network-based kernel-to-parameter (K2P) block and a parameter-to-kernel (P2K) block that leverages physical properties to form the degradation kernel. Four kernel properties are enforced by projection onto convex sets (POCS): 1) the point spread function (PSF) of the degradation kernel is generated by a parametric generalized Gaussian function, which approximates the low-pass filtering profile of the deblurring problem and the full-pass profile of the denoising problem; 2) the degradation kernel has a limited size (i.e., 15×15); 3) the kernel sums to 1; 4) the kernel is real-valued.
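
To make the four properties concrete, the following is a minimal NumPy sketch of what a P2K-style mapping might look like. The function name `generalized_gaussian_kernel`, the parameters `sigma_x`, `sigma_y`, and `beta`, and the specific functional form are hypothetical choices made for illustration; the abstract does not specify the parametrization used in PGDNet.

```python
import numpy as np

def generalized_gaussian_kernel(sigma_x, sigma_y, beta, size=15):
    """Hypothetical P2K sketch: parameters -> real-valued, unit-sum kernel.

    Assumed form (not from the abstract):
        k(x, y) ∝ exp(-0.5 * ((x / sigma_x)^2 + (y / sigma_y)^2) ** beta)
    beta = 1 gives an ordinary Gaussian; very small sigmas approach a
    delta function, i.e. a full-pass filter.
    """
    half = size // 2
    coords = np.arange(-half, half + 1, dtype=np.float64)
    yy, xx = np.meshgrid(coords, coords, indexing="ij")

    # Property 1: parametric generalized Gaussian PSF.
    r2 = (xx / sigma_x) ** 2 + (yy / sigma_y) ** 2
    kernel = np.exp(-0.5 * r2 ** beta)

    # Property 2 (limited size) holds because the grid is size x size.
    # Property 4 (real-valued) holds because all inputs are real floats.
    # Property 3: normalize so that the kernel sums to 1.
    return kernel / kernel.sum()

# A broad kernel (blur-like) and a very narrow one (close to a delta).
blur_kernel = generalized_gaussian_kernel(2.0, 2.0, 1.0)
near_delta = generalized_gaussian_kernel(0.05, 0.05, 1.0)
```

In this sketch the size, unit-sum, and real-valued properties hold by construction, so only a few scalar parameters would need to be predicted by the learnable K2P block.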

Training Loss Design

For the predicted HQ image output, mixed losses measuring the difference against the reference HQ image are used, including L1 loss, structural similarity (SSIM) loss, perceptual loss, and total variation loss. A soft weighting derived from the paired LQ and HQ images is used in all terms of mixed losses to compensate for the residual misalignment.

For the denoising task, the predicted degradation kernel is explicitly compared with the delta function using the L2 loss. For the deblurring task, two more L2 losses measured against the input LQ image are applied to implicitly supervise the output kernel by using: 1) synthesized LQ image (i.e. convolution of predicted HQ image and predicted degradation kernel); 2) reference LQ image (i.e. convolution of reference HQ image and predicted degradation kernel). The same soft weighting strategy is also used in the second loss term.
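
The kernel supervision terms described above can be sketched in NumPy as follows. This is an illustrative approximation rather than the authors' training code: the helper names `convolve_same` and `kernel_losses`, the edge padding at the image border, the mean-squared form of the L2 loss, and the optional per-pixel `weight` map standing in for the soft weighting are all assumptions.

```python
import numpy as np

def convolve_same(image, kernel):
    """2D convolution with an odd-sized kernel, same output size.

    Edge padding is an assumption; the abstract does not state how
    image borders are handled.
    """
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    flipped = kernel[::-1, ::-1]  # flip so this is convolution, not correlation
    out = np.zeros(image.shape, dtype=np.float64)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out

def kernel_losses(lq, hq_pred, hq_ref, kernel_pred, task, weight=None):
    """Hypothetical kernel supervision terms for one training sample."""
    losses = {}
    if task == "denoise":
        # Explicit supervision: compare the predicted kernel to a delta function.
        delta = np.zeros_like(kernel_pred)
        delta[kernel_pred.shape[0] // 2, kernel_pred.shape[1] // 2] = 1.0
        losses["kernel_delta"] = float(np.mean((kernel_pred - delta) ** 2))
    elif task == "deblur":
        # Implicit supervision 1: synthesized LQ (predicted HQ * predicted kernel).
        synth_lq = convolve_same(hq_pred, kernel_pred)
        losses["synth_lq"] = float(np.mean((synth_lq - lq) ** 2))
        # Implicit supervision 2: reference LQ (reference HQ * predicted kernel),
        # optionally soft-weighted per pixel.
        ref_lq = convolve_same(hq_ref, kernel_pred)
        w = 1.0 if weight is None else weight
        losses["ref_lq"] = float(np.mean(w * (ref_lq - lq) ** 2))
    else:
        raise ValueError(f"unknown task: {task}")
    return losses
```

Both deblurring terms are measured against the input LQ image, so the kernel is supervised without a pre-calibrated ground-truth kernel.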

Data and Experiments

A total of 356 pairs of fully sampled and undersampled (i.e., with fewer phase encodings or fewer averages) MR images were collected for training (#pairs for deblurring: 101, #pairs for denoising: 193, #pairs for both: 18) and testing (#pairs for deblurring: 11, #pairs for denoising: 29, #pairs for both: 4).

Three models were trained in PyTorch: 1) an EDSR model [1] trained with all data pairs, serving as the baseline for both denoising and deblurring tasks; 2) an EDSR model fine-tuned from the baseline using the deblurring data pairs, serving as the super-resolution expert (SRE) model for the deblurring task; 3) a PGDNet trained with all data pairs. Inference performance of the three models was compared on a separate test set. Quantitative PSNR and SSIM metrics were used to evaluate the accuracy of the model outputs.
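
For reference, PSNR against the HQ image can be computed as in the minimal NumPy sketch below. The function name `psnr` and the default choice of the reference image's intensity range as the peak value are assumptions; the abstract does not state which normalization was used. SSIM is omitted here because it involves local windowed statistics.

```python
import numpy as np

def psnr(reference, estimate, data_range=None):
    """Peak signal-to-noise ratio in dB between a reference and an estimate.

    data_range defaults to max - min of the reference image, which is one
    common convention and an assumption here.
    """
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    if data_range is None:
        data_range = reference.max() - reference.min()
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))

# Toy example: a uniform offset of 0.1 on an image with unit intensity range.
ref = np.array([[0.0, 1.0], [1.0, 0.0]])
value = psnr(ref, ref + 0.1)  # MSE = 0.01, so PSNR is about 20 dB
```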

Results

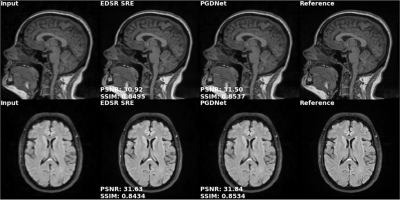

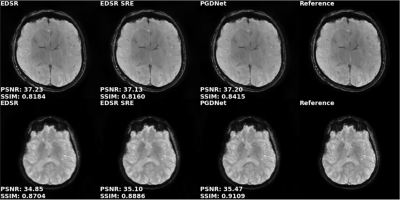

In denoising tasks (Figure 3), PGDNet better preserves small structures and prevents over-smoothing, particularly of the trabecular bone textures in MSK cases. In deblurring tasks (Figure 4), PGDNet achieves similar or superior performance compared to the EDSR SRE model and shows improved structural consistency with the target image. In joint denoising and deblurring tasks (Figure 5), PGDNet demonstrates better adaptation and robustness than EDSR and EDSR SRE. Quantitative measurements also support the perceptual assessment.

Discussion and Conclusion

PGDNet introduces explicit degradation modeling for MR image denoising and deblurring, which enforces data consistency to improve model adaptation and generalizability. In addition, the physical property constraints reduce the parameter search space, improving training convergence and stability. To fully leverage the available data, both the paired LQ and HQ images are used to supervise model training. Experimental results show that PGDNet outperforms conventional DL based denoising and deblurring methods, particularly in closing the gap in joint tasks. PGDNet provides an adaptive image enhancement solution to restore image quality for rapid clinical MR scans.

Acknowledgements

No acknowledgement found.

References

1. Lim, B., Son, S., Kim, H., Nah, S. and Lee, K.M., 2017. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (pp. 136-144).

2. Liu, Z., Mao, H., Wu, C.Y., Feichtenhofer, C., Darrell, T. and Xie, S., 2022. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 11976-11986).

3. Liu, Z., Lin, Y., Cao, Y., Hu, H., Wei, Y., Zhang, Z., Lin, S. and Guo, B., 2021. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 10012-10022).

4. Huang, Y., Li, S., Wang, L. and Tan, T., 2020. Unfolding the alternating optimization for blind super resolution. Advances in Neural Information Processing Systems, 33, pp. 5632-5643.

5. Luo, Z., Huang, Y., Li, S., Wang, L. and Tan, T., 2021. End-to-end alternating optimization for blind super resolution. arXiv preprint arXiv:2105.06878.

Figures

Figure 1: Overall flowchart of PGDNet.

Figure 2: Detailed architecture of each block in different modules of PGDNet.

Figure 3: Comparison of denoising performance between EDSR and PGDNet. Top: T1w MSK; Bottom: DWI b1000 brain. Columns from left to right: LQ input, EDSR, PGDNet, HQ reference. Note the PSNR and SSIM measurements are provided for EDSR and PGDNet columns.

Figure 4: Comparison of deblurring performance between EDSR super-resolution expert (SRE) model and PGDNet. Top: T1w brain; Bottom: T2 FLAIR brain. Columns from left to right: LQ input, EDSR SRE, PGDNet, HQ reference. Note the PSNR and SSIM measurements are provided for EDSR SRE and PGDNet columns.

Figure 5: Comparison of joint denoising and deblurring performance across EDSR, EDSR super-resolution expert (SRE) and PGDNet from two SWI cases (top and bottom). Columns from left to right: EDSR, EDSR SRE, PGDNet, HQ reference. Note the PSNR and SSIM measurements are provided for EDSR, EDSR SRE and PGDNet columns.

DOI: https://doi.org/10.58530/2023/4031