3893

Joint Estimation of Coil Sensitivity and Image by Using Untrained Neural Network without External Training Data

1 Computer and Information Science, University of Massachusetts Dartmouth, North Dartmouth, MA, United States
2 Neuroradiology, Barrow Neurological Institute, Phoenix, AZ, United States
3 Electrical, Computer and Biomedical Engineering, University of Rhode Island, Kingston, RI, United States
4 Computer Science, University of Georgia, Athens, GA, United States

Synopsis

Keywords: Machine Learning/Artificial Intelligence, Parallel Imaging

Training data for MRI reconstruction are difficult to acquire in clinical practice. In addition, machine learning and deep learning-based MRI reconstruction suffers from distribution shift between training and testing data. Because generalization error is always present, the reconstructed images can be unstable. We propose a joint estimation of coil sensitivity and image using the prior of an untrained neural network (UNN). Improving the coil sensitivity maps gradually enhances both the UNN prior and the reconstructed image over an iterative optimization process. The method outperforms the compared MRI reconstruction methods (CG-SENSE and JSENSE) in suppressing noise and aliasing artifacts.

Introduction

Training data are difficult to acquire in clinical practice. In addition, machine learning and deep learning-based MRI reconstruction suffers from distribution shift between training and testing data. Because generalization error is always present, the reconstructed images can be unstable 1. An untrained neural network does not need any external data to build a model, which makes it attractive for MRI reconstruction. The existing I-UNN 2 and k-UNN 3 methods have shown satisfactory performance. Compared with I-UNN, k-UNN incorporates more physical priors and therefore further improves reconstructed image quality. One physical prior used in k-UNN is coil sensitivity smoothness. However, coil sensitivity estimation is still an open problem. An accurate smooth coil sensitivity prior that includes both magnitude and phase information is expected to further improve k-UNN reconstruction performance. We propose to incorporate the coil sensitivity smoothness prior into a joint reconstruction process, so that accurate coil sensitivities and the reconstructed image are obtained iteratively.

Methods

The proposed joint estimation of coil sensitivity and image is presented in Figure 1. Undersampled multi-coil k-space data are fed into a UNN to generate the initial reconstructed image. The iterative reconstruction then alternately improves the coil sensitivity maps and the image to be reconstructed under the JSENSE framework 4. The data acquired by each coil encode the Fourier transform of the spatial spin distribution weighted by that coil's sensitivity. In addition, background phase caused by B0 field inhomogeneity, flow, and pulse sequence settings also affects the effective coil sensitivities. The JSENSE imaging equation 4 becomes $$$ E(a)f=d $$$ (1), where $$$a$$$ represents the unknown actual coil sensitivities, $$$d$$$ is the k-space data acquired from all actual coils, $$$E$$$ is the encoding matrix that combines coil sensitivity weighting, Fourier encoding, and k-space undersampling, and $$$f$$$ denotes the image to be reconstructed. During the iterative optimization, the image $$$f$$$ in Eq. (1) can be obtained by UNN, by SENSE, or by alternating combinations of the two. In the proposed method, the coil sensitivity prior is gradually refined, which improves both the reconstructed image and the sensitivity estimate itself; the UNN prior also becomes more accurate over the iterations. The iterative optimization process consists of the following steps.
(1) Self-calibration of coil sensitivities generates the initial actual and virtual coil sensitivity maps.
(2) SENSE reconstruction is applied to the aliased coil images from the undersampled k-space data to initially recover the missing data.
(3) The k-UNN reconstructs the image, which serves as the UNN prior, using the same undersampling pattern as in step (2).
(4) The UNN prior replaces the SENSE image in the iterative JSENSE optimization.
(5) The coil sensitivity maps are updated.
(6) Steps (3) to (5) are repeated until a predefined number of iterations is reached.
(7) The final image is output for quality evaluation.
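The alternating structure of Eq. (1) and the steps above can be illustrated with a minimal NumPy sketch: the SENSE encoding operator $$$E(a)$$$ and its adjoint, CG-SENSE for the image update, self-calibrated sensitivity maps from the ACS region, and an outer loop that alternates image and sensitivity updates. This is not the authors' implementation and makes several loud assumptions: the k-UNN step is reduced to a hypothetical `prior_step` callable (skipped by default), virtual coils are not modeled, undersampling is assumed along axis -2 only, and the sensitivity update replaces JSENSE's polynomial least-squares fit with a simpler substitute (keep acquired samples, fill unacquired k-space from the current model, then re-estimate low-resolution maps normalized by their root-sum-of-squares). All function names are illustrative.

```python
import numpy as np

AXES = (-2, -1)  # image/k-space axes; axis -2 is assumed to be the undersampled phase-encode axis


def fft2c(x):
    """Centered, orthonormal 2D FFT over the last two axes."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(x, axes=AXES), norm="ortho"), axes=AXES)


def ifft2c(k):
    """Centered, orthonormal 2D inverse FFT (the adjoint of fft2c)."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(k, axes=AXES), norm="ortho"), axes=AXES)


def sense_forward(f, a, mask):
    """E(a) f: weight image f (H, W) by sensitivities a (C, H, W), FFT, then undersample."""
    return mask * fft2c(a * f)


def sense_adjoint(d, a, mask):
    """E(a)^H d: zero unsampled k-space, inverse FFT, coil-combine with conj(a)."""
    return np.sum(np.conj(a) * ifft2c(mask * d), axis=0)


def cg_sense(d, a, mask, n_iter=30, tol=1e-10):
    """CG-SENSE: solve the normal equations E^H E f = E^H d by conjugate gradient."""
    b = sense_adjoint(d, a, mask)
    x = np.zeros_like(b)
    r = b.copy()
    p = r.copy()
    rs = np.vdot(r, r).real
    b_norm = np.sqrt(rs)
    for _ in range(n_iter):
        if np.sqrt(rs) <= tol * b_norm:  # relative residual small enough (also handles b = 0)
            break
        ap = sense_adjoint(sense_forward(p, a, mask), a, mask)
        alpha = rs / np.vdot(p, ap).real
        x = x + alpha * p
        r = r - alpha * ap
        rs_new = np.vdot(r, r).real
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x


def self_calibrate(d, n_low, eps=1e-8):
    """Low-resolution maps: keep n_low central phase-encode rows, divide coil images by RSS."""
    low = np.zeros_like(d)
    c0 = d.shape[-2] // 2 - n_low // 2
    low[..., c0:c0 + n_low, :] = d[..., c0:c0 + n_low, :]
    coil_imgs = ifft2c(low)
    rss = np.sqrt(np.sum(np.abs(coil_imgs) ** 2, axis=0))
    return coil_imgs / (rss + eps)


def joint_estimate(d, mask, n_acs, n_outer=6, prior_step=None, n_cg=30):
    """Alternate image and sensitivity updates (simplified stand-in, not JSENSE-UNN itself)."""
    a = self_calibrate(d, n_acs)            # step (1): initial maps from the ACS block
    f = cg_sense(d, a, mask, n_cg)          # step (2): initial SENSE image
    n_wide = min(2 * n_acs, d.shape[-2])    # assumption: wider low-pass window for refined maps
    for _ in range(n_outer):
        if prior_step is not None:
            f = prior_step(f)               # steps (3)-(4): hypothetical placeholder for the k-UNN prior
        # step (5), simplified substitute: keep acquired samples, fill the rest from the model
        k_filled = mask * d + (1 - mask) * fft2c(a * f)
        a = self_calibrate(k_filled, n_wide)
        f = cg_sense(d, a, mask, n_cg)      # image update with the refined maps
    return f, a
```

Passing an image-domain function as `prior_step` shows where a learned or untrained prior would enter the loop; how k-UNN is actually coupled to the JSENSE update is not specified here and remains an assumption.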

Results

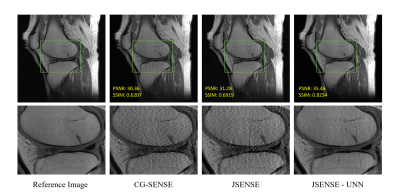

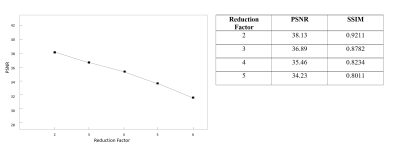

A 32-channel brain dataset and a knee dataset 5 are used to reconstruct images with the proposed method. Multi-coil k-space data are undersampled with 20 autocalibration signal (ACS) lines and a reduction factor of 4. Self-calibrated coil sensitivity estimation provides the initial sensitivity profiles. Brain image reconstruction results after 6 iterations of the joint estimation of coil sensitivity maps and image are shown in Figure 2. JSENSE-UNN outperforms JSENSE and CG-SENSE in suppressing noise and aliasing artifacts. For the knee images (Figure 3), joint estimation of coil sensitivity maps and the image with the UNN prior improves the signal-to-noise ratio (SNR) compared with CG-SENSE and JSENSE reconstructions. Because the method requires no supervised training with external data, no supervised learning-based MRI reconstruction method is included in the comparisons in Figures 2 and 3. Quantitative PSNR and SSIM values for reduction factors R = 2, 3, 4, and 5 are shown in Figure 4. The proposed method yields higher values at low reduction factors and lower values at high reduction factors. This trend is consistent with other parallel imaging, compressed sensing, and deep learning reconstruction methods, because high reduction factors degrade the quality of reconstructed images.

Discussion
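The undersampling setup and the PSNR metric can be sketched as follows: a retrospective 1D Cartesian mask with 20 ACS lines and R = 4, plus a magnitude-image PSNR helper. Several details are assumptions not given in the abstract: the 256 × 256 matrix size, undersampling along the first (phase-encode) axis, the ACS block being added on top of the regular R = 4 pattern, and using the reference magnitude maximum as the PSNR peak. The names `cartesian_mask` and `psnr` are hypothetical. SSIM is omitted; scikit-image provides `skimage.metrics.structural_similarity` for that purpose.

```python
import numpy as np


def cartesian_mask(n_pe, n_fe, r, n_acs):
    """1D Cartesian mask: every r-th phase-encode row plus n_acs central ACS rows."""
    mask = np.zeros((n_pe, n_fe))
    mask[::r, :] = 1.0                # regular undersampling with reduction factor r
    c0 = n_pe // 2 - n_acs // 2
    mask[c0:c0 + n_acs, :] = 1.0      # fully sampled autocalibration (ACS) block
    return mask


def psnr(ref, img):
    """PSNR in dB on magnitude images, with the reference maximum as the peak value."""
    ref, img = np.abs(ref), np.abs(img)
    mse = np.mean((ref - img) ** 2)
    return 20.0 * np.log10(ref.max() / np.sqrt(mse))


mask = cartesian_mask(256, 256, r=4, n_acs=20)
n_rows = int(mask[:, 0].sum())
print(n_rows, round(256 / n_rows, 2))  # 79 3.24
```

Under these assumptions the ACS block lowers the effective acceleration from 4 to about 3.24, so stating whether R counts the ACS lines matters when comparing PSNR and SSIM across methods.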

UNN reconstruction and SENSE reconstruction are interleaved in the iterative optimization process. It remains unclear whether an optimal interleaving of the two reconstructions could further improve image quality. Incorporating the UNN prior at different stages of the joint estimation of coil sensitivity maps and image can influence the quality of the final estimated image.

Conclusion

The UNN prior is incorporated into the joint estimation of coil sensitivity maps and image. The method is unsupervised and does not need external training data, so it may avoid the obstacle that training data are difficult to acquire in clinical practice.

Acknowledgements

None.

References

1. Antun V, Renna F, Poon C, Adcock B, Hansen AC. On instabilities of deep learning in image reconstruction and the potential costs of AI. Proc Natl Acad Sci USA. 2020;117(48):30088-30095.

2. Darestani MZ, Heckel R. Accelerated MRI with un-trained neural networks. IEEE Trans Comput Imaging. 2021;7:724-733.

3. Cui Z, Jia S, Zhu Q, Liu C, et al. K-UNN: k-space interpolation with untrained neural network. arXiv preprint arXiv:2208.05827. 2022.

4. Ying L, Sheng J. Joint image reconstruction and sensitivity estimation in SENSE (JSENSE). Magn Reson Med. 2007;57(6):1196-1202.

5. Knoll F, et al. fastMRI: a publicly available raw k-space and DICOM dataset of knee images for accelerated MR image reconstruction using machine learning. Radiol Artif Intell. 2020;2(1):e190007.
