3763

Automatic segmentation of myocardial 3D whole-heart T1 and T2 maps using a nnU-Net.

Carlos Velasco1, Roman Jakubicek2, Alina Hua1, Anastasia Fotaki1, René M. Botnar1,3, and Claudia Prieto1,3

1School of Biomedical Engineering and Imaging Sciences, King's College London, London, United Kingdom, 2Department of Biomedical Engineering, Brno University of Technology, Brno, Czech Republic, 3Institute for Biological and Medical Engineering, Pontificia Universidad Católica de Chile, Santiago, Chile

Synopsis

Keywords: Segmentation, Myocardium

The large amount of data obtained from a single 3D whole-heart multiparametric scan (up to ~40 slices per parametric map) considerably increases the time required to segment and analyse the quantitative maps. An automated segmentation tool is therefore desirable for this otherwise prohibitively laborious task. In this work, we leverage nnU-Net to perform fast, automated segmentation of 3D whole-heart simultaneous T1 and T2 maps, and show that it predicts segmentation masks of comparable quality to manual segmentation while shortening the segmentation and analysis time by ~100x.

Introduction

Myocardial tissue characterization via T1 and T2 mapping plays an important role in the evaluation of many cardiovascular diseases1. In the clinical setting, single-parameter quantitative maps such as T1 and T2 are obtained from sequential 2D scans under separate breath-holds2. In recent years, novel multiparametric approaches have demonstrated the feasibility of producing co-registered 3D whole-heart joint T1 and T2 maps from a single free-breathing acquisition3,4. These 3D whole-heart multiparametric approaches promise a comprehensive assessment of myocardial tissue alterations, while simplifying scan planning and removing the need for breath-holds. Nevertheless, the larger amount of data obtained from a single 3D whole-heart multiparametric scan (up to ~40 slices per parametric map) considerably increases the time required to segment and analyse the quantitative maps. An automated segmentation tool for these maps is therefore desirable for this otherwise prohibitively laborious task. Here, we leverage nnU-Net to perform fast, automated segmentation of 3D whole-heart simultaneous T1 and T2 maps.

Methods

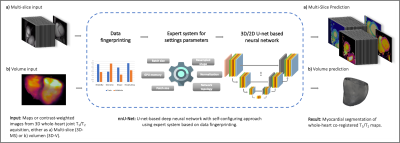

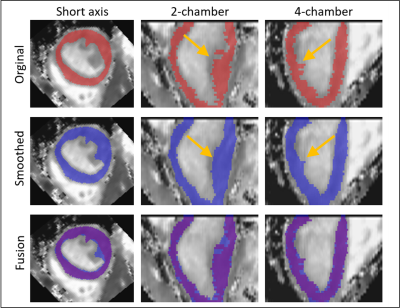

The proposed deep-learning-based approach consists of an nnU-Net5 trained to accept as input T1 maps, T2 maps, T1-weighted images or T2-weighted images interchangeably, in the form of either 2D slices or 3D volumes (Figure 1). The main advantage of this U-Net-based network is its self-configuring architecture, which sets the hyperparameters according to the properties of the input data. The network was trained from scratch on multiparametric 1×1×2 mm³ 3D whole-heart joint T1/T2 maps and the corresponding T1/T2 contrast-weighted images of N = 34 subjects with suspected cardiovascular disease, split 28/6 into training and testing sets. Each subject comprised four 3D volumes (i.e., T1 map, single T1-weighted image (highest myocardial-blood contrast), T2 map and single T2-weighted image) of ~40 slices each. Since the maps and images were inherently co-registered3, the ground-truth manual segmentation was generated for only one volume per subject, annotated in the short-axis orientation by an experienced clinical reader, and these annotations were then propagated to the three remaining volumes. Manual segmentation took approximately 45 s per slice, and ~1250 slices were segmented in total. All scans were acquired on a 1.5T scanner (MAGNETOM Aera, Siemens Healthcare, Erlangen, Germany); written consent was obtained from all subjects and the study followed guidelines approved by the National Research Ethics Service. The nnU-Net was trained on both 3D slice-by-slice and 3D volume inputs, denoted “3D-MS” and “3D-V” trainings, for 50 epochs with an initial learning rate of 0.02. Since the manual annotations were drawn slice by slice, the resulting annotated volumes presented discontinuities (see Figure 2) that could hinder optimal training, especially in the 3D-V case.
Hence, the annotated masks were smoothed by a series of 3D morphological operations (dilation, closing, opening and erosion) that enforce greater 3D connectivity between adjacent slices, and the nnU-Net was retrained on these smoothed datasets, denoted “3D-MS-s” and “3D-V-s”. Dice scores between the manual segmentations and the predicted masks were computed on the testing dataset for all four trainings.

Results
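The smoothing step and the Dice comparison described in the Methods can be illustrated with a minimal Python sketch built on SciPy's binary morphology functions (`scipy.ndimage.binary_dilation`, `binary_closing`, `binary_opening`, `binary_erosion`). The abstract does not report the structuring element, the number of iterations, or whether Dice was computed per slice or per volume; the 6-connected 3D structuring element, the single iteration and the volume-level Dice below are assumptions, and the toy mask is synthetic.

```python
import numpy as np
from scipy import ndimage

# Assumption: masks are binary arrays indexed (z, y, x), with z along the
# apex-base (slice) direction. The 6-connected 3D structuring element and a
# single iteration per operation are illustrative choices, not reported values.
STRUCT = ndimage.generate_binary_structure(3, 1)


def smooth_mask(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Apply 3D dilation, closing, opening and erosion, in that order."""
    m = np.asarray(mask, dtype=bool)
    m = ndimage.binary_dilation(m, structure=STRUCT, iterations=iterations)
    m = ndimage.binary_closing(m, structure=STRUCT, iterations=iterations)
    m = ndimage.binary_opening(m, structure=STRUCT, iterations=iterations)
    m = ndimage.binary_erosion(m, structure=STRUCT, iterations=iterations)
    return m


def dice_score(pred: np.ndarray, ref: np.ndarray) -> float:
    """Dice similarity coefficient 2|A n B| / (|A| + |B|) of two binary masks."""
    a = np.asarray(pred, dtype=bool)
    b = np.asarray(ref, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("masks must have the same shape")
    denom = int(a.sum()) + int(b.sum())
    if denom == 0:
        return 1.0  # both masks empty: counted as perfect agreement (a convention)
    return 2.0 * int(np.logical_and(a, b).sum()) / denom


# Toy annotation: two slabs separated by an empty slice (z = 6), mimicking a
# slice-to-slice discontinuity in a manual short-axis segmentation.
manual = np.zeros((16, 16, 16), dtype=bool)
manual[4:6, 4:10, 4:10] = True
manual[7:9, 4:10, 4:10] = True
smoothed = smooth_mask(manual)

print("voxels in gap slice before/after:", manual[6].sum(), smoothed[6].sum())
print("Dice(manual, smoothed):", round(dice_score(manual, smoothed), 3))
```

Using a 3D rather than an in-plane structuring element is what lets these operations bridge neighbouring slices: the same sequence applied with a 2D element slice by slice would leave the empty slice at z = 6 untouched.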

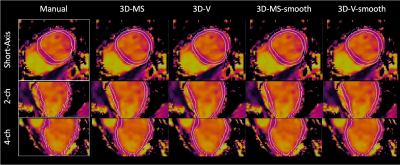

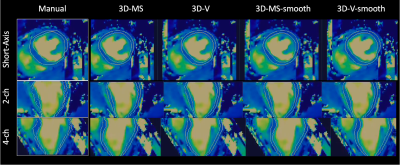

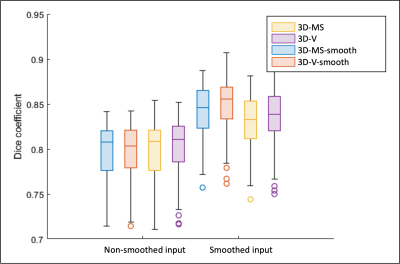

Figures 3 and 4 show the predicted masks for T1 and T2 maps, respectively, at the mid-ventricular short-axis level, as well as in the long-axis two-chamber and four-chamber views, for all four trainings. Dice scores of each trained network are shown in Figure 5. On the testing dataset, the best Dice scores were achieved after smoothing the annotated training data, specifically with the smoothed 3D volume input (3D-V-s). Segmentation time was ~45 s per slice for manual segmentation vs. ~0.5 s per slice for the proposed nnU-Net approach.

Discussion
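The per-slice times reported in the Results translate into the following worked numbers. Only values stated in this abstract are used (~45 s vs. ~0.5 s per slice, ~40 slices per volume, ~1250 manually segmented slices); the ratio of 90 is what the "~100x" figure rounds.

```latex
\begin{aligned}
\text{speed-up} &= \frac{45\ \text{s/slice}}{0.5\ \text{s/slice}} = 90 \approx 100\times \\
\text{one volume } (\sim 40\ \text{slices}) &:\; 40 \times 45\ \text{s} = 1800\ \text{s} = 30\ \text{min (manual)}
  \quad \text{vs.} \quad 40 \times 0.5\ \text{s} = 20\ \text{s (nnU-Net)} \\
\text{ground-truth annotation} &:\; 1250 \times 45\ \text{s} = 56\,250\ \text{s} \approx 15.6\ \text{h}
\end{aligned}
```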

Here we demonstrate the feasibility of using an nnU-Net to perform automated myocardial segmentation of 3D joint T1/T2 maps and weighted images, shortening the segmentation and analysis time by ~100x compared with manual segmentation. Using a whole 3D volume as input, rather than separate slices, provided better results. Likewise, morphological smoothing of the annotated training masks provided better Dice scores for both 3D slice-by-slice and 3D volume inputs. Future analyses are warranted to provide a more complete evaluation of the predicted volumes against expert manual segmentation.

Acknowledgements

This work was supported by the following grants: (1) EPSRC P/V044087/1, (2) BHF programme grant RG/20/1/34802, (3) Wellcome/EPSRC Centre for Medical Engineering (WT 203148/Z/16/Z), (4) Millennium Institute for Intelligent Healthcare Engineering ICN2021_004, (5) FONDECYT 1210637 and 1210638, (6) IMPACT, Center of Interventional Medicine for Precision and Advanced Cellular Therapy, ANID FB210024.

References

1. Kim, P.K. et al., Korean J. Radiol. 2017.

2. Messroghli, D.R. et al., JCMR 2017.

3. Milotta, G. et al., MRM 2020.

4. Qi, H. et al., MRI 2019.

5. Isensee, F. et al., Nat. Methods 2021.

Figures

Figure 1. Schematic depiction of the proposed framework. Either a) a 3D multi-slice stack or b) a 3D volume is used as input to an nnU-Net, which extracts a ‘dataset fingerprint’, i.e., a set of dataset-specific properties such as image size, voxel information and intensities. After network prediction, the corresponding segmentation masks are provided either a) slice by slice or b) as volumes.

Figure 2. Representative T1 map and annotated mask (left: short axis, middle: two-chamber, right: four-chamber) of a subject with suspected cardiovascular disease, showing the difference between the original (top, red masks) and smoothed (middle, blue masks) annotated datasets used for training. The bottom row depicts the fused masks to highlight differences between the annotations. Yellow arrows point to a representative area where discontinuities in the annotations along the apex-base direction are observed and corrected by smoothing the volumes.

Figure 3. Representative views (top: short axis, middle: two-chamber, bottom: four-chamber) of T1 maps from a subject with suspected cardiovascular disease. White contours depict the segmentation masks (left boxes: manual segmentation drawn slice by slice by an experienced clinical reader; right: predicted segmentations from the nnU-Net trained with 3D multi-slice, 3D volume, 3D multi-slice smoothed and 3D volume smoothed inputs).

Figure 4. Representative views (top: short axis, middle: two-chamber, bottom: four-chamber) of T2 maps from a subject with suspected cardiovascular disease. White contours depict the segmentation masks (left boxes: manual segmentation drawn slice by slice by an experienced clinical reader; right: predicted segmentations from the nnU-Net trained with 3D multi-slice, 3D volume, 3D multi-slice smoothed and 3D volume smoothed inputs).

Figure 5. Dice scores of the four trained nnU-Nets (yellow: 3D multi-slice, purple: 3D volume, blue: 3D multi-slice smoothed, red: 3D volume smoothed). Each panel groups the Dice scores of the predicted masks for T1 and T2 for non-smoothed (left) and smoothed (right) inputs. Lines on each box denote the median and the 1st and 3rd quartiles, whiskers denote the 5th and 95th percentiles, and circles represent outliers, which were also included in the calculations.
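The box-plot statistics described in the Figure 5 caption can be reproduced with NumPy percentiles. This is a sketch under the caption's stated definitions (median, quartiles, whiskers at the 5th and 95th percentiles, outliers kept in the calculations); the function name and the input values are hypothetical, not study data.

```python
import numpy as np


def box_summary(scores):
    """Statistics for a box plot drawn as described for Figure 5.

    Median and 1st/3rd quartiles are computed over all values (outliers
    included); whiskers sit at the 5th and 95th percentiles, and values
    beyond them are returned as outliers.
    """
    s = np.asarray(scores, dtype=float)
    p5, q1, median, q3, p95 = np.percentile(s, [5, 25, 50, 75, 95])
    outliers = s[(s < p5) | (s > p95)]
    return {"p5": p5, "q1": q1, "median": median, "q3": q3,
            "p95": p95, "outliers": outliers}


# Hypothetical Dice values, for illustration only (not study data).
example = box_summary([0.78, 0.81, 0.83, 0.84, 0.85, 0.86, 0.88, 0.60])
print(example["median"], example["outliers"])
```

When plotting, matplotlib's `Axes.boxplot` places the whiskers at the same percentiles if called with `whis=(5, 95)`.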

DOI: https://doi.org/10.58530/2023/3763