3762

A-Eye: Towards a large-scale MRI-based model of the complete eye

Jaime Barranco1,2, Hamza Kebiri1,2, Óscar Esteban3, Raphael Sznitman4, Oliver Stachs5, Sönke Langner6,7, Benedetta Franceschiello8,9,10, and Meritxell Bach Cuadra1,2,10

1CIBM Center for Biomedical Imaging, Lausanne, Switzerland, 2Radiology, Lausanne University Hospital and University of Lausanne, Lausanne, Switzerland, 3Lausanne University Hospital and University of Lausanne, Lausanne, Switzerland, 4ARTORG Center for Biomedical Engineering, University of Bern, Bern, Switzerland, 5Ophthalmology, Rostock University Medical Center, Rostock, Germany, 6Institute for Diagnostic and Interventional Radiology, Rostock University Medical Center, Rostock, Germany, 7Diagnostic Radiology and Neuroradiology, University of Greifswald, Greifswald, Germany, 8School of Engineering, Institute of Systems Engineering, HES-SO Valais-Wallis, Sion, Switzerland, 9The Sense Innovation and Research Center, Lausanne and Sion, Switzerland, 10These authors provided equal last-authorship contribution, Lausanne, Switzerland

Synopsis

Keywords: Segmentation, Neuro, Eye, Ophthalmology

This work compares two approaches for the automated segmentation of eye structures from 3D T1-weighted MRI of the whole human head (N=1210). Quantitative evaluation on a manually annotated validation subset shows accurate segmentation of the lens and globe and sets the first median Dice similarity coefficient (DSC) benchmarks for the optic nerve (0.91), muscles (0.58 to 0.76) and fat (0.67 and 0.75). The ability of our framework to automatically extract state-of-the-art measurements, such as the axial length, paves the way to accurately identifying and computing new biomarkers of the eye via MRI.

Introduction

Ophthalmic diagnosis and treatment planning are generally guided by 2D imaging modalities such as fundus photography, optical coherence tomography and ultrasound. MRI of the eye (referred to as MReye) is attracting growing interest [1], as it provides a comprehensive view of the 3D anatomy, enables the assessment of tissue and offers undistorted imaging of orbital structures behind the eyeball, such as the eye muscles, fat, and the optic nerve. There is therefore a strong need for a large-scale, comprehensive model of the eye and ocular cavity. Previous work explored statistical models [2,3], deep learning methods [4,5], combinations thereof [6,7], and clustering techniques [8]. However, sample sizes were limited (generally 12<N<70 subjects) and these studies relied on a multi-contrast MRI setting. They focused mostly on tumors and, among eye sub-structures, on the globe and lens, with the exception of [3,4], which included the optic nerve. Here, we apply two well-known image processing techniques to MReye segmentation, namely atlas-based registration and supervised deep learning. We analyze the ability of these methods to produce a complete automated segmentation of multiple eye structures (lens, globe, optic nerve, extraocular muscles and fat). To do so, we rely only on T1-weighted (T1w) MRI, at the scale of a thousand subjects. We also introduce the automated estimation of the axial length, a key ophthalmic biomarker (e.g., for myopia and cataract).

Methods

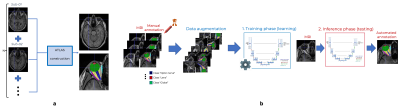

Materials. We use a random subset of healthy volunteers (N=1210, 56.13 y.o., 616 female, 594 male) who underwent whole-body MRI for the population-based Study of Health in Pomerania (SHIP, Germany) [9,10]. T1w images were acquired on a 1.5T Magnetom Avanto (Siemens Medical Solutions, Erlangen, Germany) with a 12-channel head coil: 1 mm³; FOV 256×256 mm²; TR=1900 ms; TI=1100 ms; TE=3.37 ms. All participants gave informed written consent. The study was approved by the Medical Ethics Committee of the University of Greifswald and followed the Declaration of Helsinki. During the MRI examination, subjects were not paying attention to any specific viewing direction. Manual annotations (9 regions of interest, ROIs, Figure 2a) were performed on 35 subjects.

Atlas-based segmentation (Method A). Segmentation is achieved by registering (antsRegistrationSyN [11]) a custom template (built from 5 subjects, Figure 1a) to the T1w image of each subject in a multi-level approach: (i) linear registration to determine a bounding box containing the eye, (ii) nonlinear registration within the bounding box. Atlas labels are then projected back into each individual's space.

Supervised deep learning (Method B). A 3D U-Net-based [13,14] architecture was selected (Figure 1b), with convolutions of stride 2x2x2 in the downsampling phase, and trained to predict 10 output channels, each corresponding to a different label (including background). We used a Dice loss with Adam optimization [15] and a learning rate of 1e-3. Of the 35 annotated subjects, 27, 4 and 4 were used for training, validation and pure testing, respectively. The training set was extensively augmented using MONAI [16], i.e. rotations, scaling, foreground cropping, intensity scaling and random cropping.

Evaluation. Segmentation agreement metrics (Dice Similarity Coefficient (DSC), ROI volume similarity, and Hausdorff distance [17]) were computed against the manual annotations on N=30 subjects for Method A (the annotated subjects not used to build the template) and N=4 subjects for Method B. The DSC between Methods A and B was also computed on the full dataset (N=1210). Axial length [18] is defined along the orthogonal axis projected from the front central point of the lens towards the back of the eye (at the fovea). Here, we estimated the axial length from the segmentations of Methods A and B (4% outliers in B) and compared it with the ophthalmic measurements reported from MRI in 93 adults [20].

Results

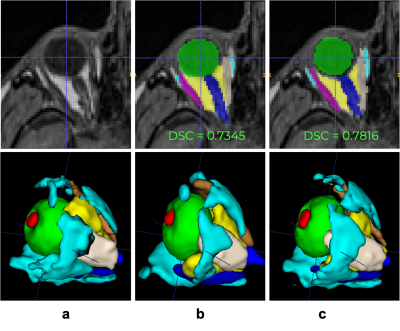

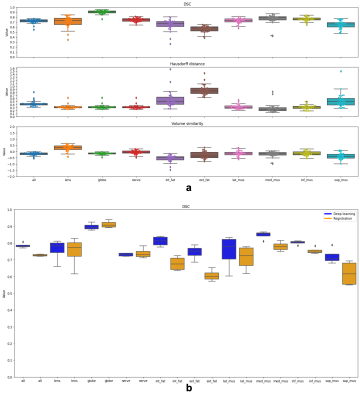

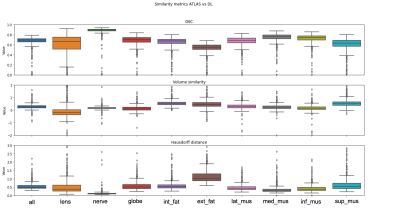

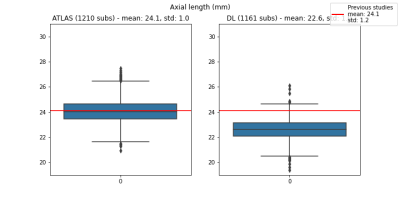

Atlas-based segmentation robustly extracted the 9 ROIs on new data. Figure 2b shows example label maps. Figure 3a presents similarity metrics on the manually annotated dataset (N=30 remaining labeled subjects). Median DSC values for lens and globe are 0.73 and 0.74, respectively, while we establish new median DSC benchmarks for the optic nerve (0.91), muscles (0.58 to 0.76) and fat (0.67 and 0.75).

DL-based segmentation showed potential to replace atlas-based segmentation. Figure 2c shows example label maps. Figure 3b reports DL accuracy on 4 subjects relative to manual annotations, alongside atlas-based performance. Despite the small sample size, DL performs better for fat and muscles. Figure 4 presents the DSC between Methods A and B on the full dataset (N=1210). The two segmentations are highly similar for the optic nerve (DSC=0.9), while much more variability is present for all other ocular structures (median DSC between 0.55 and 0.76).

Axial-length agreement with ground truth. Figure 5 shows the estimated axial length (Method A / Method B: mean 24.1 ± 1.0 / 22.6 ± 1.0 mm). Agreement with the reported average axial length measured from MRI (24.1 ± 1.2 mm [20]) was higher for atlas-based segmentation.

Discussion

We assessed two new methods to segment the lens, vitreous, extraocular muscles, optic nerve and fat based on T1w MRI. This is the first time feasibility on a large-scale dataset (N=1210) is reported. We aim to increase deep learning accuracy by incorporating additional data augmentation and unsupervised pre-training. Nevertheless, our results show that key ophthalmic biometry (axial length) can already be performed, demonstrating the capability to automatically infer biometric measurements from MReye segmentations.

Acknowledgements

This work was supported by the Gelbert Foundation and the Swiss National Science Foundation (project 205321-182602). We acknowledge the CIBM Center for Biomedical Imaging, a Swiss research center of excellence founded and supported by CHUV, UNIL, EPFL, UNIGE, HUG and the Leenaards and Jeantet Foundations.

References

1. T. Niendorf, J.-W. M. Beenakker, S. Langner, K. Erb-Eigner, M. Bach Cuadra, E. Beller, J. M. Millward, T. M. Niendorf, O. Stachs, Ophthalmic magnetic resonance imaging: where are we (heading to)?, Current Eye Research (2021) 1–20.

2. C. Ciller, S. I. De Zanet, M. B. Rüegsegger, A. Pica, R. Sznitman, J.-P. Thiran, P. Maeder, F. L. Munier, J. H. Kowal, M. B. Cuadra, Automatic Segmentation of the Eye in 3D Magnetic Resonance Imaging: A Novel Statistical Shape Model for Treatment Planning of Retinoblastoma, International Journal of Radiation Oncology*Biology*Physics 92 (2015) 794–802. doi:10.1016/j.ijrobp.2015.02.056. URL: https://linkinghub.elsevier.com/retrieve/pii/S0360301615002990.

3. H.-G. G. Nguyen, R. Sznitman, P. Maeder, A. Schalenbourg, M. Peroni, J. Hrbacek, D. C. Weber, A. Pica, M. Bach Cuadra, Personalized Anatomical Eye Model From T1-Weighted Volume Interpolated Gradient Echo Magnetic Resonance Imaging of Patients With Uveal Melanoma, International Journal of Radiation Oncology*Biology*Physics 102 (2018b) 813–820. doi:10.1016/j.ijrobp.2018.05.004.

4. H.-G. Nguyen, A. Pica, P. Maeder, A. Schalenbourg, M. Peroni, J. Hrbacek, D. C. Weber, M. B. Cuadra, R. Sznitman, Ocular Structures Segmentation from Multi-sequences MRI Using 3D Unet with Fully Connected CRFs, in: D. Stoyanov, Z. Taylor, F. Ciompi, Y. Xu, A. Martel, L. Maier-Hein, N. Rajpoot, J. van der Laak, M. Veta, S. McKenna, D. Snead, E. Trucco, M. K. Garvin, X. J. Chen, H. Bogunovic (Eds.), Computational Pathology and Ophthalmic Medical Image Analysis, Lecture Notes in Computer Science, Springer International Publishing, 2018a, pp. 167–175. doi:10.1007/978-3-030-00949-6_20.

5. Strijbis, V.I.J., de Bloeme, C.M., Jansen, R.W. et al. Multi-view convolutional neural networks for automated ocular structure and tumor segmentation in retinoblastoma. Sci Rep 11, 14590 (2021). https://doi.org/10.1038/s41598-021-93905-2

6. Ciller C, De Zanet S, Kamnitsas K, Maeder P, Glocker B, Munier FL, et al. (2017) Multi-channel MRI segmentation of eye structures and tumors using patient-specific features. PLoS ONE 12(3): e0173900

7. Huu-Giao Nguyen, Alessia Pica, Jan Hrbacek, Damien C. Weber, Francesco La Rosa, Ann Schalenbourg, Raphael Sznitman, Meritxell Bach Cuadra, A novel segmentation framework for uveal melanoma in magnetic resonance imaging based on class activation maps, Proceedings of The 2nd International Conference on Medical Imaging with Deep Learning, PMLR 102:370-379, 2019.

8. Hassan MK, Fleury E, Shamonin D, Fonk LG, Marinkovic M, Jaarsma-Coes MG, Luyten GPM, Webb A, Beenakker JW, Stoel B. An Automatic Framework to Create Patient-specific Eye Models From 3D Magnetic Resonance Images for Treatment Selection in Patients With Uveal Melanoma. Adv Radiat Oncol. 2021 Apr 3;6(6):100697

9. P. Schmidt, R. Kempin, S. Langner, A. Beule, S. Kindler, T. Koppe, H. Völzke, T. Ittermann, C. Jürgens, F. Tost, Association of anthropometric markers with globe position: A population-based MRI study, PloS one 14 (2019) e0211817.

10. Völzke, H., Alte, D., Schmidt, C. O., Radke, D., Lorbeer, R., Friedrich, N., et al. (2011). Cohort Profile: The Study of Health in Pomerania. Int. J. Epidemiol. 40, 294–307. doi: 10.1093/ije/dyp394.

11. Avants, B. B., Tustison, N., & Song, G. (2009). Advanced normalization tools (ANTS). Insight j, 2(365), 1-35.

12. Holmes CJ, Hoge R, Collins L, Woods R, Toga AW, Evans AC. “Enhancement of MR images using registration for signal averaging.” J Comput Assist Tomogr. 1998 Mar-Apr;22(2):324–33. http://dx.doi.org/10.1097/00004728-199803000-00032

13. Ronneberger, O., Fischer, P. and Brox, T., 2015, October. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention (pp. 234-241). Springer, Cham.

14. Çiçek, Ö., Abdulkadir, A., Lienkamp, S.S., Brox, T. and Ronneberger, O., 2016, October. 3D U-Net: learning dense volumetric segmentation from sparse annotation. In International conference on medical image computing and computer-assisted intervention (pp. 424-432). Springer, Cham.

15. Kingma, D.P. and Ba, J., 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

16. MONAI Consortium. MONAI: Medical Open Network for AI; Zenodo, 2022. Available online: https://zenodo.org/record/5728262.

17. Maier-Hein, L., Reinke, A., Christodoulou, E., Glocker, B., Godau, P., Isensee, F., ... & Jäger, P. F. (2022). Metrics reloaded: Pitfalls and recommendations for image analysis validation. arXiv preprint arXiv:2206.01653.

18. Lam, A.K.C., Chan, R., Pang, P.C.K., 2001. The repeatability and accuracy of axial length and anterior chamber depth measurements from the IOLMaster™. Ophthalmic Physiol. Opt. 21, 477–483. https://doi.org/10.1016/S0275-5408(01)00016-3.

19. Bhardwaj, V., & Rajeshbhai, G. P. (2013). Axial length, anterior chamber depth-a study in different age groups and refractive errors. Journal of clinical and diagnostic research: JCDR, 7(10), 2211.

20. Wiseman, S.J., Tatham, A.J., Meijboom, R. et al. Measuring axial length of the eye from magnetic resonance brain imaging. BMC Ophthalmol 22, 54 (2022). https://doi.org/10.1186/s12886-022-02289-y

Figures

Figure 1 (a) Atlas-based segmentation strategy based on a custom template of 5 subjects. Custom template construction: (1) the five annotated subjects with the highest cross-correlation scores after nonlinear registration onto the Colin27 atlas [12] were selected; (2) we generated a T1w template map with antsMultivariateTemplateConstruction.sh; (3) we projected the label map of each subject onto the template space using the nonlinear transform from (2); (4) we generated a single label map (i.e., our atlas) by voxel-wise majority voting. (b) 3D U-Net deep learning architecture.
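
Step (4) of the template construction can be illustrated with a minimal NumPy sketch of voxel-wise majority voting. This is not the authors' implementation: the function name `majority_vote`, the toy 2x3 label maps and the tie-breaking rule (lowest label index wins) are assumptions made for illustration, and the label maps are assumed to be already resampled into the template space.

```python
import numpy as np

def majority_vote(label_maps, num_labels):
    """Fuse integer label maps of identical shape by voxel-wise majority voting.

    Ties are resolved toward the lowest label index (an arbitrary choice
    made for this sketch).
    """
    stack = np.stack([np.asarray(m) for m in label_maps])  # (n_subjects, *spatial)
    # Count, for every voxel, how many subjects voted for each label.
    votes = np.stack([(stack == k).sum(axis=0) for k in range(num_labels)])
    return votes.argmax(axis=0)

# Five toy label maps standing in for the five template subjects.
maps = [
    [[0, 1, 1], [2, 2, 0]],
    [[0, 1, 2], [2, 2, 0]],
    [[0, 1, 1], [2, 0, 0]],
    [[0, 0, 1], [2, 2, 1]],
    [[1, 1, 1], [0, 2, 0]],
]
atlas = majority_vote(maps, num_labels=3)
print(atlas)
```

With five voters and ten labels, ties are possible (for example 2-2-1), so a real pipeline would need an explicit tie-breaking policy.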

Figure 2 (a-bottom) Manual annotation of the 9 ROIs: lens (red), globe (green), optic nerve (dark blue), intraconal fat (yellow), extraconal fat (cyan), lateral rectus muscle (pink), medial rectus muscle (ivory), inferior rectus muscle (purple), and superior rectus muscle (orange). Examples of inference from (b top-bottom) the atlas-based and (c top-bottom) the deep learning methods.

Figure 3 (a) Similarity metrics computed on the manually annotated dataset (N=30 remaining labeled subjects). Median DSC values are 0.73 and 0.74 for lens and globe, respectively, 0.91 for the optic nerve, 0.58 to 0.76 for muscles and 0.67 and 0.75 for fat. (b) DL accuracy on 4 subjects, compared to manual annotations and to atlas-based performance. Despite the small sample size, DL performs better for fat and muscles.
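
The overlap metrics reported here can be sketched in a few lines of NumPy. The DSC follows its standard definition, 2|P∩R| / (|P|+|R|). For volume similarity, the sketch assumes the common definition 1 - |V_P - V_R| / (V_P + V_R); the abstract does not state which formula was used. The function names and the toy volumes are hypothetical.

```python
import numpy as np

def dice_per_label(pred, ref, labels):
    """Return {label: DSC} for two integer label maps of identical shape."""
    pred, ref = np.asarray(pred), np.asarray(ref)
    if pred.shape != ref.shape:
        raise ValueError("label maps must have the same shape")
    scores = {}
    for label in labels:
        p, r = pred == label, ref == label
        denom = int(p.sum()) + int(r.sum())
        overlap = int(np.logical_and(p, r).sum())
        # DSC is undefined (NaN) when the label is absent from both maps.
        scores[label] = 2.0 * overlap / denom if denom else float("nan")
    return scores

def volume_similarity(pred, ref, label):
    """Assumed definition: 1 - |V_pred - V_ref| / (V_pred + V_ref)."""
    v_pred = int((np.asarray(pred) == label).sum())
    v_ref = int((np.asarray(ref) == label).sum())
    total = v_pred + v_ref
    return 1.0 - abs(v_pred - v_ref) / total if total else float("nan")

# Toy example: an 8-voxel reference cube and a 12-voxel prediction containing it.
ref = np.zeros((4, 4, 4), dtype=int)
ref[1:3, 1:3, 1:3] = 1
pred = np.zeros((4, 4, 4), dtype=int)
pred[1:3, 1:3, 1:4] = 1

scores = dice_per_label(pred, ref, labels=[1, 2])
vs = volume_similarity(pred, ref, label=1)
print(scores, vs)
```

In the toy case both metrics equal 0.8 for label 1 (overlap 8, volumes 12 and 8), which shows that volume similarity alone cannot detect where the extra voxels lie.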

Figure 4 DSC between the segmentations of Method A and Method B on the large-scale dataset (N=1210). The two segmentations are highly similar for the optic nerve (DSC=0.9), while we observe more variability for all other ocular structures (median DSC between 0.55 and 0.76).

Figure 5 Estimated axial length via Method A (left boxplot, mean 24.1 ± 1.0 mm) and Method B (right boxplot, mean 22.6 ± 1.0 mm). Overall, our measurements are in agreement with the reported average axial length measured from MRI (24.1 ± 1.2 mm), although the concordance is higher for atlas-based segmentation (Method A).
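
One possible way to derive an axial length from a label map is sketched below. It is a simplified stand-in, not the procedure used in this work: it assumes the optical axis can be approximated by the line through the lens and globe centroids, and it measures the distance along that axis from the most anterior lens voxel to the most posterior globe voxel. The function name, label values, voxel spacing and the synthetic spherical "eye" are all hypothetical.

```python
import numpy as np

def axial_length_mm(labels, lens_label, globe_label, spacing=(1.0, 1.0, 1.0)):
    """Approximate axial length from a 3D label map (simplified sketch)."""
    labels = np.asarray(labels)
    spacing = np.asarray(spacing, dtype=float)
    # Voxel-center coordinates in millimetres for each structure.
    lens = np.argwhere(labels == lens_label) * spacing
    globe = np.argwhere(labels == globe_label) * spacing
    if len(lens) == 0 or len(globe) == 0:
        raise ValueError("both lens and globe labels must be present")
    # Assumed axis: from the lens centroid towards the globe centroid.
    axis = globe.mean(axis=0) - lens.mean(axis=0)
    norm = np.linalg.norm(axis)
    if norm == 0:
        raise ValueError("lens and globe centroids coincide")
    axis /= norm
    front = (lens @ axis).min()   # most anterior lens point along the axis
    back = (globe @ axis).max()   # most posterior globe point along the axis
    return float(back - front)

# Synthetic eye: a globe of radius 12 voxels with a small lens near its front.
i, j, k = np.indices((40, 40, 40))
eye = np.zeros((40, 40, 40), dtype=int)
eye[(i - 20) ** 2 + (j - 20) ** 2 + (k - 20) ** 2 <= 144] = 2  # globe
eye[(i - 20) ** 2 + (j - 20) ** 2 + (k - 11) ** 2 <= 4] = 1    # lens

length = axial_length_mm(eye, lens_label=1, globe_label=2)
print(length)
```

Because the result depends directly on the lens and globe boundaries, a systematic under-segmentation of either structure shifts the estimate, which is one way a difference between methods like the one in this figure can arise.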

DOI: https://doi.org/10.58530/2023/3762