3706

Deep Learning Augmented PROPELLER Reconstruction for Improved MRI Motion Correction

¹College of Health Science and Environmental Engineering, Shenzhen Technology University, Shenzhen, China

Synopsis

Keywords: PET/MR, Machine Learning/Artificial Intelligence, deep learning

The Periodically Rotated Overlapping Parallel Lines with Enhanced Reconstruction (PROPELLER) technique is one strategy for mitigating motion artifacts in MR images. However, because of technical limitations, the motion parameters estimated by the existing method are not always accurate, so residual motion artifacts can remain in the final image. Deep learning could improve the motion-estimation step of PROPELLER. We developed a PROPELLER reconstruction that incorporates a deep learning model for motion estimation; it provides accurate estimates and greatly shortens the computation time.

Introduction

PROPELLER is one strategy for mitigating motion artifacts in MRI [1]. It fills k-space with a series of rotated blades, each made of parallel lines, so that the low-frequency center of k-space is sampled repeatedly by the overlapping blades [2]. However, the motion parameters estimated by the existing method [1] are not always accurate, and residual motion artifacts can remain in the final image, sometimes leaving image quality insufficient for diagnosis. Deep learning algorithms have shown great potential for medical data processing and image restoration and may improve the motion-estimation step. In this study, we develop a deep learning-based method for better PROPELLER motion estimation: a network called PROPELLER Motion Net that estimates both rotation and translation. A comparison of the registration results shows that PROPELLER Motion Net gives estimates closer to the ground truth than the traditional method while greatly shortening the running time.

Method

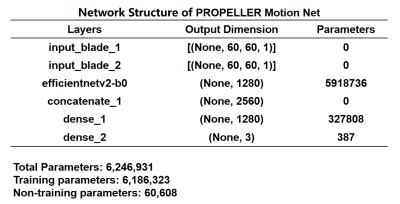

The developed workflow is illustrated in Figure 1. It focuses on in-plane rigid motion correction and replaces the traditional PROPELLER motion-estimation step [1] with a deep learning network. The model structure is summarized in Figure 2: PROPELLER Motion Net uses an EfficientNet-B0 backbone [3] and, given two blade images as input, estimates three motion parameters (rotation in degrees and translation in pixels along two directions).

Dataset

The proposed network was trained and evaluated on a simulated PROPELLER dataset generated from fastMRI brain images [4]. From the fastMRI training dataset, 10,000 slices were selected for training and 2,000 for evaluation, with no overlap between the two sets. The simulation parameters were blade size = 320 × 30, number of blades = 18, and number of coils = 8. The simulated rotation was within ±5 degrees and the simulated translation within ±5 pixels. Before being input to the network, the blade data were center-cropped to 30 × 30, zero-padded to 60 × 60, and combined across coil channels to obtain blade images.
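The simulation and preprocessing steps above can be sketched in plain Python. This is a minimal, hypothetical sketch, not the authors' code, and all function names are illustrative. It assumes the object is given as a callable `F(kx, ky)` returning its Fourier transform, that the 18 blades are 320 × 30 Cartesian strips with angles spread evenly over 180°, that motion is drawn uniformly and independently for each blade, and that coils are combined by root-sum-of-squares (coil sensitivities themselves are not simulated). In-plane rigid motion enters k-space through the Fourier rotation and shift theorems: if the object is rotated by R and then shifted by t, its k-space becomes G(k) = exp(-i2π k·t/n) F(Rᵀk).

```python
import cmath
import math
import random

# Blade size and blade count from the abstract; every other choice here is an assumption.
READOUT, WIDTH, N_BLADES = 320, 30, 18


def rotate(x, y, theta):
    """Rotate the point (x, y) counter-clockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return c * x - s * y, s * x + c * y


def blade_angles(n_blades=N_BLADES):
    """Blade angles in radians, assumed evenly spread over 180 degrees."""
    return [b * math.pi / n_blades for b in range(n_blades)]


def blade_coordinates(angle, readout=READOUT, width=WIDTH):
    """(kx, ky) grid positions of one blade rotated by `angle`, one list per line."""
    return [[rotate(s - readout / 2, line - width / 2, angle) for s in range(readout)]
            for line in range(width)]


def moved_kspace(F, kx, ky, theta_deg, dx, dy, n=READOUT):
    """k-space of the object rotated by theta_deg, then shifted by (dx, dy) pixels.

    For g(r) = f(R^-1 (r - t)):  G(k) = exp(-i 2 pi k.t / n) * F(R^T k).
    """
    rkx, rky = rotate(kx, ky, -math.radians(theta_deg))
    return cmath.exp(-2j * math.pi * (kx * dx + ky * dy) / n) * F(rkx, rky)


def simulate_blade(F, angle, rng=None, max_rot=5.0, max_shift=5.0):
    """One motion-corrupted blade; motion drawn uniformly per blade (assumption)."""
    rng = rng or random.Random()
    motion = (rng.uniform(-max_rot, max_rot),
              rng.uniform(-max_shift, max_shift),
              rng.uniform(-max_shift, max_shift))
    data = [[moved_kspace(F, kx, ky, *motion) for kx, ky in line]
            for line in blade_coordinates(angle)]
    return motion, data


def blade_image(coil_blades, crop=30, pad=60):
    """Centre-crop each coil's blade to crop x crop, zero-pad to pad x pad,
    apply a centred 2-D inverse DFT and combine coils by root-sum-of-squares."""
    w = [[cmath.exp(2j * math.pi * (x - pad // 2) * (k - pad // 2) / pad)
          for k in range(pad)] for x in range(pad)]
    power = [[0.0] * pad for _ in range(pad)]
    for data in coil_blades:
        grid = [[0j] * pad for _ in range(pad)]
        r0, c0, s0 = (pad - len(data)) // 2, (pad - crop) // 2, (len(data[0]) - crop) // 2
        for r, line in enumerate(data):
            grid[r0 + r][c0:c0 + crop] = line[s0:s0 + crop]
        # Separable inverse DFT: along each row (kx -> x), then along columns (ky -> y).
        rows = [[sum(w[x][j] * row[j] for j in range(pad)) for x in range(pad)] for row in grid]
        img = [[sum(w[y][i] * rows[i][x] for i in range(pad)) for x in range(pad)]
               for y in range(pad)]
        for y in range(pad):
            for x in range(pad):
                power[y][x] += abs(img[y][x]) ** 2
    return [[math.sqrt(v) for v in row] for row in power]
```

For example, `simulate_blade(F, blade_angles()[3], random.Random(0))` returns the drawn `(rotation, dx, dy)` together with one 30 × 320 blade, and `blade_image([data])` turns it into a 60 × 60 magnitude image of the kind fed to the network. In this sketch each blade-image pixel spans 320/60 ≈ 5.3 full-resolution pixels, so a ±5 pixel shift moves the blade image by at most about one of its own pixels along each axis.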

Training and evaluation

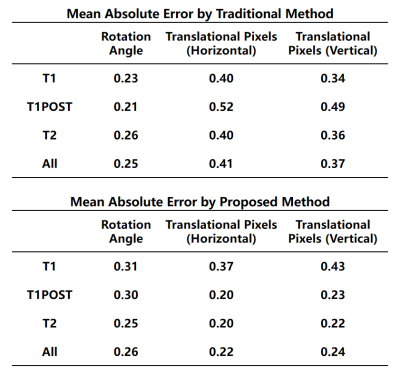

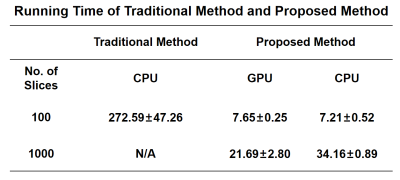

The network was trained with the Adam optimizer, using learning-rate decay, until convergence. Mean squared error (MSE) was chosen as the loss function. Mean absolute error (MAE) was used to quantify the accuracy of the motion estimates, and final image quality was measured by PSNR and SSIM with respect to the motion-free images. In addition, the computation time was recorded and compared with that of the traditional algorithm.
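The loss and evaluation quantities described above can be computed as in the following minimal sketch (plain Python, illustrative names, not the authors' code). It assumes each motion estimate is a `(rotation_deg, dx_px, dy_px)` tuple, that the MSE loss averages degrees and pixels without rescaling (the abstract does not say whether the parameters were normalized), and that PSNR uses the maximum of the motion-free reference image as its peak value. SSIM is not shown.

```python
import math


def mse_loss(pred, target):
    """Training loss: mean squared error over every predicted motion parameter."""
    diffs = [a - b for p, t in zip(pred, target) for a, b in zip(p, t)]
    return sum(d * d for d in diffs) / len(diffs)


def mae_per_parameter(pred, target):
    """Estimation accuracy: mean absolute error of each parameter across samples."""
    return [sum(abs(p[i] - t[i]) for p, t in zip(pred, target)) / len(pred)
            for i in range(len(pred[0]))]


def psnr(image, reference):
    """PSNR (dB) of a reconstructed image against the motion-free reference.
    The peak is taken as the reference maximum (an assumption)."""
    img = [v for row in image for v in row]
    ref = [v for row in reference for v in row]
    err = sum((a - b) ** 2 for a, b in zip(img, ref)) / len(ref)
    return math.inf if err == 0 else 10 * math.log10(max(ref) ** 2 / err)
```

For instance, with `pred = [(1.0, 2.0, -1.0), (-2.0, 0.5, 3.0)]` and `target = [(1.5, 2.0, -1.0), (-2.0, 1.5, 2.0)]`, `mse_loss` gives 0.375 and `mae_per_parameter` gives `[0.25, 0.5, 0.5]`, i.e. separate errors for rotation and the two translations, which is how the rotation and translation accuracy can be compared separately.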

Results

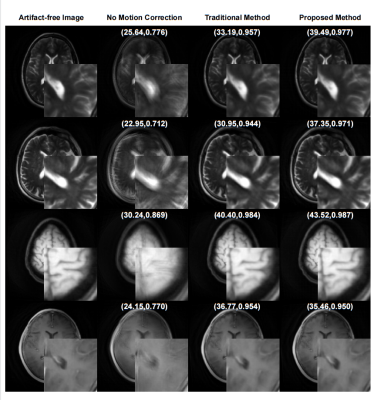

As shown in Figure 3, compared with traditional motion estimation, PROPELLER Motion Net estimated rotational motion with similar accuracy and translational motion more accurately, particularly for T2-weighted data. As shown in Figure 4, our method generally gave higher PSNR and SSIM values in the final images than the traditional method. The computation-time statistics in Figure 5 show that the proposed method is highly efficient, running several times faster than the traditional approach.

Discussion and Conclusion

We demonstrated a deep learning-based method for improving PROPELLER motion correction. In general, it is more accurate than traditional motion estimation and considerably faster. Currently, the model performs less well on T1-weighted data, possibly because fastMRI contains far fewer T1-weighted images than T2-weighted ones. The main limitation of this study is that only simulated data were tested. In real data, motion patterns and noise can be more complicated, which warrants further study.

Acknowledgements

This study is supported in part by the Natural Science Foundation of Top Talent of Shenzhen Technology University (Grant No. 20200208 to Lyu, Mengye) and the National Natural Science Foundation of China (Grant No. 62101348 to Lyu, Mengye).

References

[1] Pipe JG. Motion correction with PROPELLER MRI: application to head motion and free-breathing cardiac imaging. Magn Reson Med. 1999;42(5):963-969.

[2] Forbes KP, Pipe JG, Bird CR, Heiserman JE. PROPELLER MRI: clinical testing of a novel technique for quantification and compensation of head motion. J Magn Reson Imaging. 2001 Sep;14(3):215-222. doi: 10.1002/jmri.1176. PMID: 11536397.

[3] Tan M, Le QV. EfficientNet: rethinking model scaling for convolutional neural networks. arXiv e-prints. 2019.

[4] Zhao R. fastMRI+: clinical pathology annotations for knee and brain fully sampled multi-coil MRI data. arXiv:2109.03812. 2021. https://arxiv.org/abs/2109.03812.
