3483

Data Augmentation with Simulated Images for Generalizable Brain MRI Super-Resolution

1Biomedical Engineering, Eindhoven University of Technology, Eindhoven, Netherlands, 2MR R&D - Clinical Science, Philips Healthcare, Eindhoven, Netherlands

Synopsis

Keywords: Machine Learning/Artificial Intelligence, Brain, Super resolution

In many medical applications, high-resolution images are required to facilitate early and accurate diagnosis. In this paper, we investigate the potential of data augmentation using simulated brain magnetic resonance (MR) images for training a deep-learning (DL) super-resolution model that can generalize to brain data from different publicly available sources. Our qualitative visual evaluation results suggest that data augmentation with simulated images can improve the robustness and generalization of the model and reduce artifacts in the super-resolved images.

Introduction

In many medical applications, high-resolution images are required to facilitate early and accurate diagnosis. However, it is not always feasible to obtain images at the desired resolution because of technical, physical, time, and cost limitations. Super-resolution (SR) is an image reconstruction method that restores high-resolution (HR) images from their low-resolution (LR) counterparts [1]. In this paper, we investigate the potential of data augmentation using simulated brain magnetic resonance (MR) images for training a deep-learning (DL) super-resolution model that can generalize to brain data from different publicly available sources.

Materials and Methods

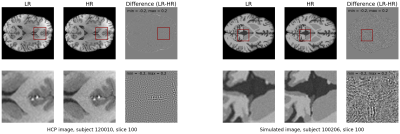

A simulated dataset from the OpenGTN project (https://opengtn.eu/) is used for training. Pairs of HR-LR data are simulated using a physics-based brain MR image simulation framework [2]. This framework uses digital phantoms to simulate the desired image resolutions and contrasts. 30 simulated subjects (179 slices each) are used for training, 10 for validation, and 10 for testing. A total of 50 unprocessed T1w scans of real subjects from the Human Connectome Project (HCP) dataset [3], with a voxel size of 0.7 mm, are used as the HR images; their LR counterparts are obtained via k-space truncation. An example of the HCP and simulated data can be seen in Figure 1. To evaluate how well the model generalizes to other sources of brain MR images, the MRBrainS18 challenge dataset [4] and the OASIS-1 dataset [5] are used. These datasets contain only LR images with a 1 mm voxel size, so no ground-truth HR images are available.

Our proposed methodology is based on generative adversarial networks (GANs) that learn the mapping between low-resolution (1 mm voxel size) and high-resolution (0.7 mm voxel size) images. For this study, the generator G from ESRGAN [6] is used to generate HR images from the LR input. This generator consists of Residual-in-Residual Dense Blocks (RRDBs), which are composed of residually connected blocks of convolution and activation layers, as can be seen in Figure 2a. The discriminator network D, shown in Figure 2b, tries to distinguish between the generated SR images and the original HR images.
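The k-space truncation used to derive LR counterparts from the 0.7 mm HCP scans can be illustrated with a minimal sketch. The abstract does not describe the exact procedure, so everything below is an assumption: the function name `kspace_truncate`, its parameters, the slice-wise 2D treatment, and the plain centered crop without any windowing or filtering are all hypothetical choices made for illustration.

```python
import numpy as np

def kspace_truncate(hr_slice, hr_voxel_mm=0.7, lr_voxel_mm=1.0):
    """Simulate an LR slice by keeping only the central region of k-space.

    Assumption: a plain centered crop with no apodization; the study's
    actual truncation procedure is not specified in the abstract.
    """
    ny, nx = hr_slice.shape
    # Fraction of the k-space extent retained along each axis (0.7 here).
    keep = hr_voxel_mm / lr_voxel_mm
    ky = int(round(ny * keep))
    kx = int(round(nx * keep))

    # Centered 2D spectrum: the DC component sits at index (ny // 2, nx // 2).
    k = np.fft.fftshift(np.fft.fft2(hr_slice))

    # Choose the crop so that DC lands at (ky // 2, kx // 2) in the cropped
    # array, which is where ifftshift expects it.
    y0 = ny // 2 - ky // 2
    x0 = nx // 2 - kx // 2
    k_crop = k[y0:y0 + ky, x0:x0 + kx]

    lr = np.fft.ifft2(np.fft.ifftshift(k_crop))
    # Compensate for the smaller inverse-FFT normalization so that the
    # mean intensity of the slice is preserved.
    lr *= (ky * kx) / (ny * nx)
    return np.abs(lr)
```

With the default voxel sizes, a 100 x 100 HR slice becomes a 70 x 70 LR slice covering the same field of view, which corresponds to the 0.7 mm to 1 mm relation described above.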

Experiments and Results

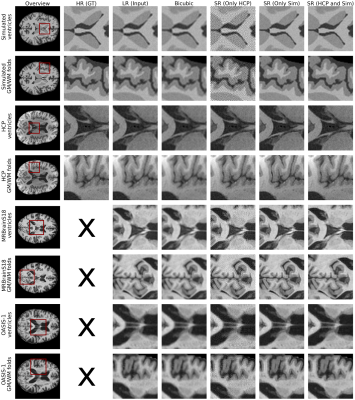

We investigate the effect of combining HCP and simulated data on the generalization of the model, which is evaluated on test data from sources unseen during training. Specifically, models are trained on 30 HCP subjects only, on 30 simulated subjects only, and on the combination of 30 HCP and 30 simulated subjects.

The qualitative results are shown in Figure 3, together with bicubic interpolation as a reference. The model trained only on HCP images performs well on HCP test images but introduces artifacts and patterns in the test images from the other sources. Similarly, the model trained solely on simulated data shows the best visual performance on the simulated test images. This model introduces fewer artifacts on images from other sources, only occasionally smoothing tissues and failing to accurately reproduce the HR image characteristics. The model trained on the combination of HCP and simulated data appears to perform best across the different datasets: on HCP and simulated images it performs similarly to the models trained on each source alone, but without artifacts. It also generates good results on MRBrainS18 and OASIS-1 images, suggesting that adding simulated data can improve the generalization of the model.
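The experimental design described above, three training configurations each evaluated on four test sources, can be written down as a small configuration sketch. The subject counts and dataset names come from the text; the dictionary layout, the key names, and the helper `evaluation_grid` are hypothetical and only serve to make the comparison explicit.

```python
from itertools import product

# Number of training subjects drawn from each source per configuration.
TRAIN_CONFIGS = {
    "hcp_only": {"hcp": 30, "simulated": 0},
    "simulated_only": {"hcp": 0, "simulated": 30},
    "combined": {"hcp": 30, "simulated": 30},
}

# Test sources, and whether an HR ground truth exists for each of them.
# MRBrainS18 and OASIS-1 contain only 1 mm images, so they have none.
HAS_HR_REFERENCE = {
    "HCP": True,
    "simulated": True,
    "MRBrainS18": False,
    "OASIS-1": False,
}

def evaluation_grid():
    """Enumerate every (trained model, test source) pair to be inspected."""
    return [
        {
            "model": model,
            "test_source": source,
            "has_hr_reference": HAS_HR_REFERENCE[source],
            "n_train_subjects": sum(TRAIN_CONFIGS[model].values()),
        }
        for model, source in product(TRAIN_CONFIGS, HAS_HR_REFERENCE)
    ]
```

Half of the twelve resulting pairs have no HR reference, so those cases can only be assessed visually.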

Discussion and Conclusion

In this paper, we investigated the usability of simulated brain MR images for training a generalizable super-resolution model that can handle images from different sources. Our qualitative visual evaluation results suggest that data augmentation with simulated images can improve the robustness of the model and reduce artifacts in the super-resolved images. Quantitative analysis of the results, a detailed comparison between models, and exploration of the optimal ratio between real and simulated data remain for future research.

Acknowledgements

This research is a part of the OpenGTN project, supported by the European Union in the Marie Curie Innovative Training Networks (ITN) fellowship program under project No. 764465.

References

[1] Plenge, Esben, et al. "Super‐resolution methods in MRI: can they improve the trade‐off between resolution, signal‐to‐noise ratio, and acquisition time?." Magnetic resonance in medicine 68.6 (2012): 1983-1993.

[2] Ayaz, A., et al. "Brain MR image simulation for deep learning based medical image analysis networks." Submitted to NeuroImage (under review).

[3] Van Essen, David C., et al. "The WU-Minn human connectome project: an overview." Neuroimage 80 (2013): 62-79.

[4] MRBrainS18: Grand challenge on MR brain segmentation at MICCAI 2018. [Online]. Available: https://mrbrains18.isi.uu.nl/.

[5] Marcus, Daniel S., et al. "Open Access Series of Imaging Studies (OASIS): cross-sectional MRI data in young, middle-aged, nondemented, and demented older adults." Journal of cognitive neuroscience 19.9 (2007): 1498-1507.

[6] Wang, Xintao, et al. "ESRGAN: Enhanced super-resolution generative adversarial networks." Proceedings of the European conference on computer vision (ECCV) workshops. 2018.
