2933

Accelerating MRI Using Vision Transformer with Unpaired Unsupervised Training1Biomedical Engineering, State University of New York at Buffalo, Buffalo, NY, United States, 2Electrical Engineering, State University of New York at Buffalo, Buffalo, NY, United States, 3Program of Advanced Musculoskeletal Imaging (PAMI), Cleveland Clinic, Cleveland, OH, United States, 4Paul C. Lauterbur Research Center for Biomedical Imaging, SIAT CAS, Shenzhen, China

Synopsis

Keywords: Image Reconstruction, Machine Learning/Artificial Intelligence, Unpaired unsupervised training, Transformer

In this abstract, we propose a novel deep-learning reconstruction method that enables training with only unpaired undersampled k-space data without the ground truth. The network utilizes a statistical model for the undersampling artifacts to enable unsupervised learning, and the generative adversarial network to enable unpaired training. In addition, the physics model is incorporated into the transformer network by unrolling the underlying optimization problem. Experiment results based on the fastMRI knee dataset exhibit marked improvements over the existing state-of-the-art reconstructions.Introduction

Many studies have successfully applied deep learning in MR reconstruction from undersampled data1-6. Most of them require a large number of labeled pairs for training a robust reconstruction network, which may be impractical for some MRI applications. In this work, we propose a novel unsupervised method for MRI reconstruction using only unpaired undersampled training data. Our network utilizes GAN7-10 and a statistic model11 to enable unpaired training without the ground truth data. Symmetric encoder-decoder architecture is used as the generator network in GAN, which is implemented by a vision transformer (ViT)12 instead of the convolution neural network (CNN) to capture long-range dependencies. We also integrate such unpaired unsupervised training with Regularization by Denoising (RED)13 to incorporate the physics model into the network. The proposed method shows promising performance compared to the state-of-the-art methods.Method

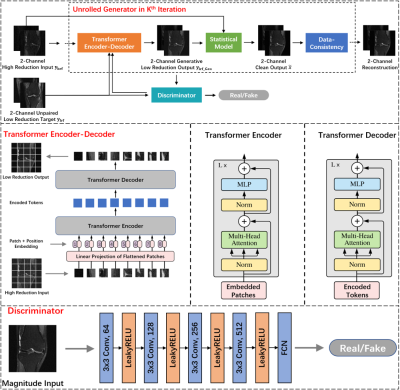

Our proposed method has an adversarial architecture7 with two parts as shown in Figure 1, the unrolled generator (G), and the discriminator (D). The network takes the 2-channel (real & imaginary) complex image with a high reduction factor as input and generates desired reconstructed image in the output. During the training, only undersampled images from high reduction and low reduction are needed without the need for fully sampled ground truth images (i.e., unsupervised), and they don’t have to be paired, meaning none of the training images need to be from the same object. The capability of unsupervised learning is achieved by a statistical model. Suppose we have two different acquisitions from an object. One is undersampled with low reduction, and the zero-filled image is defined as $$$y_{lrf} \equiv x+\mathcal{M} $$$, where x is the true image and $$$\mathcal{M}$$$ the noise-like artifacts. The other is further undersampled with an even higher reduction whose zero-filled image is represented as $$$y_{hrf} \equiv x+\mathcal{M} +\mathcal{N} $$$, where $$$\mathcal{M}$$$ and $$$\mathcal{N}$$$ are both noise-like artifacts independently drawn from a known sampling distribution $$$\mathcal{A}$$$. From a statistical point of view, the expectation of $$$y_{lrf}$$$ given $$$y_{hrf}$$$ can be described as $$\begin{equation}\mathbb{E}\left[ y_{lrf}\left.\right|y_{hrf}\right]=\mathbb{E}\left[ x\left.\right|y_{hrf}\right]+\mathbb{E}\left[ \mathcal{M}\left.\right|y_{hrf}\right]\tag{1}\end{equation}$$ We design the high and low reduction sampling masks such that artifacts $$$\mathcal{M}$$$ and $$$\mathcal{N}$$$ are independently and identically distributed, so they have the same expectation given $$$y_{hrf}$$$, $$$\mathbb{E}\left[ \mathcal{M}\left.\right|y_{hrf}\right]=\mathbb{E}\left[ \mathcal{N}\left.\right|y_{hrf}\right]$$$. Eq. (1) becomes $$\begin{equation}\begin{aligned}2\mathbb{E}\left[ y_{lrf}\left.\right|y_{hrf}\right]&= \mathbb{E}\left[ x\left.\right|y_{hrf}\right]+\left (\mathbb{E}\left[ x\left.\right|y_{hrf}\right]+ \mathbb{E}\left[ \mathcal{M}\left.\right|y_{hrf}\right] + \mathbb{E}\left[ \mathcal{N}\left.\right|y_{hrf}\right] \right )\\&=\mathbb{E}\left[ x\left.\right|y_{hrf}\right]+\mathbb{E}\left[ x+\mathcal{M}+\mathcal{N}\left.\right|y_{hrf}\right]\\&=\mathbb{E}\left[ x\left.\right|y_{hrf}\right]+y_{hrf}\end{aligned} \end{equation}\tag{2}$$ Therefore, the truth image x can be estimated by doubling the statistically estimated low-reduction image (from the high-reduction one) and subtracting the high-reduction image. The statistical estimation is implemented by a transformer encoder-decoder.The above explains the paired case. To achieve unpaired training, GAN architecture is used to generate and discriminate the low-reduction image from a high-reduction input based on the statistical information obtained from the set of low-reduction images that are unrelated to the set of high-reduction inputs. Figure 1 illustrates the architecture of the proposed method where the kth iterations are unrolled to one training epoch. The unrolled generator consists of 3 modules: (1) Transformer encoder-decoder module implemented with a ViT architecture. (2) Statistical module which reconstructs the MR image by doubling the transformer’s low reduction output and subtracting its high reduction input. (3) Data-consistency module that incorporates the Fourier transform relationship in the network. Specifically, derived from the RED, the reconstruction is further updated as follows:$$\begin{equation} x=\left ( A^{H}A+\beta I \right ) ^{-1} \left (A^{H}y_{hrf}+\beta \tilde{x} )\right )\tag{3}\end{equation} $$ where $$$\tilde{x}$$$ is the output of the statistical module, A is the measurement matrix that contains the undersampling process and Fourier transform, and $$$\beta$$$ is a regulation factor. The loss for the generator is $$\begin{equation}\min_{\theta_{g}} \mathbb{E}\left [ \left \| y_{hrf} - G\left ( y_{hrf};\theta_{g} \right )\right \| _{2} -\lambda _{1}\mathbb{E}\left [ D\left ( G\left ( y_{hrf} \right ) ; \theta_{d} \right ) \right ] + \lambda _{2} \mathbb{E}\left [ \left \| y_{hrf} - x_{rec} \right \| _{2} \right ] \right ]\tag{4}\end{equation} $$ where G is the transformer encoder-decode with the parameter $$$\theta _{g} $$$, and D is the discriminator with parameter $$$\theta _{d} $$$, $$$x_{rec}$$$ is the final reconstruction after unrolled modules, $$$\lambda _{1}$$$ and $$$\lambda _{2}$$$ are the weights in the loss function. The discriminator loss is $$\begin{equation}\min_{\theta_{g}} \mathbb{E}\left [ D\left ( G\left ( y_{hrf} \right ) ; \theta_{d} \right ) \right ] -\mathbb{E}\left [ D\left ( y_{lrf};\theta _{d} \right ) \right ] +\eta \mathbb{E}\left [ \left ( \left \| \bigtriangledown_{\hat{y}_{hrf}} D\left ( \hat{y}_{hrf};\theta _{d} \right ) \right \| -1 \right ) ^{2} \right ] \tag{5}\end{equation}$$

Results

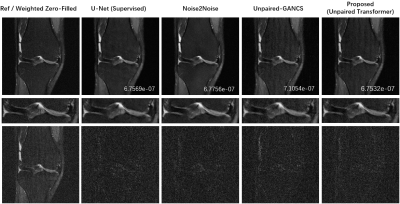

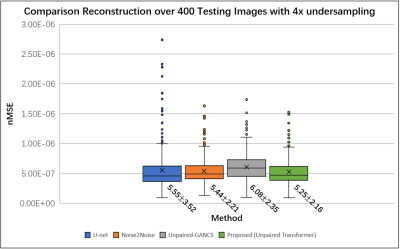

We used the raw single-coil k-space from the knee fastMRI dataset14-15 that is available publicly at https://fastmri.org/. In particular, we randomly selected 200 sagittal knee MR scans and only 20 central images were selected for each subject that had anatomy, which gave a total of 4000 images for training. We further selected another 20 different subjects in the fastMRI dataset and picked 20 central images for each subject, which gave additional 400 images for testing. The training data and testing data have image size 320x320. All images are complex-valued.Figure 2 shows reconstructions from 4× undersampled data using supervised U-net, unsupervised Noise2Noise, unpaired supervised GANCS, and proposed method with Transformer GAN. Figure 3 shows the boxplots of the normalized mean squared errors (nMSEs) of reconstructions for 400 testing images. It is seen that the reconstructions of the proposed method agree with ground truth image well.

Acknowledgements

This work was supported in part by the NIH/NIAMS R01 AR077452 and NIH U01 EB023829.References

1. S. Wang, Z. Su, L. Ying, X. Peng, S. Zhu, F. Liang, D. Feng and D. Liang, "Accelerating magnetic resonance imaging via deep learning," 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), 2016, pp. 514-517.

2. B. Zhu, J. Z. Liu, S. F. Cauley, B. R. Rosen, M. S. Rosen, "Image reconstruction by domain-transform manifold learning," Nature, vol. 555, pp. 487–492, 2018.

3. Y. Yang, J. Sun, H. Li, and Z. Xu, "ADMM-CSNet: A Deep Learning Approach for Image Compressive Sensing," in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 3, pp. 521-538, 2020.

4. K. Hammernik, T. Klatzer, E. Kobler, M. P. Recht, D. K. Sodickson, T. Pock and F. Knoll. "Learning a variational network for reconstruction of accelerated MRI data," Magn. Reson. Med., vol. 79, no. 2, pp. 3055-3071, 2018.

5. J. Zhang and B. Ghanem, "ISTA-Net: Interpretable Optimization-Inspired Deep Network for Image Compressive Sensing," 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018, pp. 1828-1837.

6. H. K. Aggarwal, M. P. Mani and M. Jacob, "MoDL: Model-Based Deep Learning Architecture for Inverse Problems," in IEEE Transactions on Medical Imaging, vol. 38, no. 2, pp. 394-405, 2019.

7. M. Arjovsky; S. Chintala; L. Bottou, "Wasserstein Generative Adversarial Networks". 34th International Conference on Machine Learning (ICML), vol. 70, pp. 214–223, 2017.

8. J. -Y. Zhu, T. Park, P. Isola and A. A. Efros, "Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks," 2017 IEEE International Conference on Computer Vision (ICCV), 2017, pp. 2242-2251.

9. K. Lei, M. Mardani, J. M. Pauly, and S. S. Vasanawala, "Wasserstein GANs for MR Imaging: From Paired to Unpaired Training," in IEEE Transactions on Medical Imaging, vol. 40, no. 1, pp. 105-115, 2021.

10. G. Oh, B. Sim, H. Chung, L. Sunwoo and J. C. Ye, "Unpaired Deep Learning for Accelerated MRI Using Optimal Transport Driven CycleGAN," in IEEE Transactions on Computational Imaging, vol. 6, pp. 1285-1296, 2020.

11. N. Moran, D. Schmidt, Y. Zhong and P. Coady, "Noisier2Noise: Learning to Denoise From Unpaired Noisy Data," 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 12061-12069.

12. A. Dosovitskiy, L. Beyer, et al, "An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale, " 2021 International Conference on Learning Representations (ICLR), 2021.

13. Y. Romano, M. Elad, and P. Milanfar, "The little engine that could: Regularization by denoising (RED)," SIAM J. Imag. Sci., vol. 10, no. 4, pp. 1804–1844, 2017.

14. J. Zbontar, F. Knoll, A. Sriram, T. Murrell, Z. Huang, M. J. Muckley, ... and Y. W. Lui, "fastMRI: An Open Dataset and Benchmarks for Accelerated MRI," arXiv preprint arXiv:1811.08839, 2018.

15. F. Knoll, J. Zbontar, A. Sriram, M. J. Muckley, M. Bruno, A. Defazio, ... and Y. W. Lui, "fastMRI: A Publicly Available Raw k-Space and DICOM Dataset of Knee Images for Accelerated MR Image Reconstruction Using Machine Learning," Radiology: Artificial Intelligence, vol. 2, no. 1, e190007, 2020.

Figures