2531

MRGazerII: Camera-free Decoding Eye Movements from Functional Magnetic Resonance Imaging

1Center for Biomedical Imaging, University of Science and Technology of China, Hefei, China, 2Anhui Medical University, Hefei, China, 3GE Healthcare, Shanghai, China

Synopsis

Keywords: Machine Learning/Artificial Intelligence, fMRI, eye movement

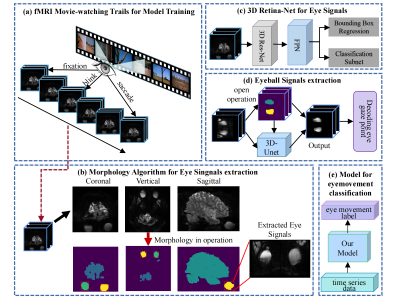

Eye movements partly reflect changes in human behavior and thought, but many functional magnetic resonance imaging (fMRI) studies lack the necessary equipment and therefore do not track eye movements. Recently, a deep learning method was proposed that regresses a single gaze point per fMRI volume. Here, we propose an end-to-end pipeline called MRGazerII, which performs eye signal extraction, eye-movement behavior recognition and gaze point regression from fMRI slices. The method was tested on the Human Connectome Project (HCP) fMRI dataset, where it achieved an eye-movement classification accuracy of 0.48 and gaze-point correlation coefficients of 0.39 and 0.46 in the x and y directions.

Introduction

Eye movements directly reflect our thoughts and play key roles in many areas of human cognitive research1. Experimental designs that combine fMRI with eye tracking therefore provide a window into brain cognition and disease diagnosis2-4. However, eye tracking is rarely used in fMRI studies because MRI-compatible eye trackers are expensive and complicated. Recently, Frey et al. designed a CNN model, 'DeepMReye', that performs camera-free gaze point tracking5. However, DeepMReye operates at a sampling interval of one repetition time (TR, around 1 second) and does not detect other typical eye movements such as blinks and saccades. We therefore propose a deep learning eye-movement prediction pipeline, MRGazerII, which reads in raw fMRI slices and reports the eye-movement states of blink, saccade and fixation, together with the fixation point, at a sampling interval of a few tens of milliseconds (Fig 1).

Methods

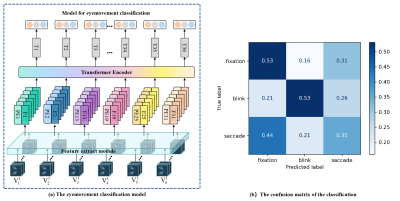

The movie-watching fMRI data were taken from the Human Connectome Project (HCP) 7T release6, in which each subject watched four movies while their eye movements were recorded. After removing incomplete eye imaging data, we obtained a total of 416 fMRI runs from 158 subjects. A binary morphology method was then used to segment the brain and the corresponding eye regions in the fMRI images. The six middle slices through the eyeballs were extracted and fed into the deep neural network for eye-movement prediction.

A ResNet-CBAM was used to extract image features at each time point, and the features were then fed into a Transformer encoder to fuse them in the temporal domain7,8. Finally, frame-level predictions were made by a fully connected layer (Fig 2 a). The slices labelled as fixation were then input into another DNN for gaze point prediction. Adam was selected as the optimizer, with the learning rate and weight decay set to 1e-4, and the mixup method was also used to prevent overfitting9. The dataset was divided into training and validation groups at the subject level, so that no subject contributed data to both groups.
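Two of the training details in the Methods, the subject-level split and mixup, can be illustrated with a minimal sketch in plain Python. This is not the authors' implementation: the function names, the validation fraction, the random seed and the mixup parameter `alpha` are all assumptions chosen for illustration, since the abstract does not report them.

```python
import random
from collections import defaultdict


def split_by_subject(run_subjects, val_fraction=0.2, seed=0):
    """Split run indices so that all runs of one subject land in the same group.

    run_subjects[i] is the subject ID of run i.
    val_fraction and seed are illustrative values, not taken from the abstract.
    """
    runs_of = defaultdict(list)
    for run_idx, subject in enumerate(run_subjects):
        runs_of[subject].append(run_idx)

    # Shuffle subjects (not runs) so the split is made at the subject level.
    subjects = sorted(runs_of)
    random.Random(seed).shuffle(subjects)
    n_val = max(1, round(len(subjects) * val_fraction))
    val_subjects = set(subjects[:n_val])

    train = [i for s in subjects if s not in val_subjects for i in runs_of[s]]
    val = [i for s in subjects if s in val_subjects for i in runs_of[s]]
    return train, val


def mixup_pair(x_a, y_a, x_b, y_b, alpha=0.2, rng=random):
    """Blend two samples and their one-hot labels with a Beta(alpha, alpha) weight.

    alpha is a hypothetical setting; the abstract does not state it.
    """
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x, y, lam


# Usage: eight runs from five hypothetical subjects.
run_subjects = ["s1", "s1", "s2", "s3", "s3", "s3", "s4", "s5"]
train_runs, val_runs = split_by_subject(run_subjects)

# Mix a "fixation" sample with a "blink" sample (labels are one-hot).
mixed_x, mixed_y, lam = mixup_pair([1.0, 0.0], [1, 0, 0], [0.0, 1.0], [0, 1, 0])
```

Splitting by subject rather than by run keeps every run of a given person on one side of the split, so validation scores measure generalization to unseen subjects.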

Results

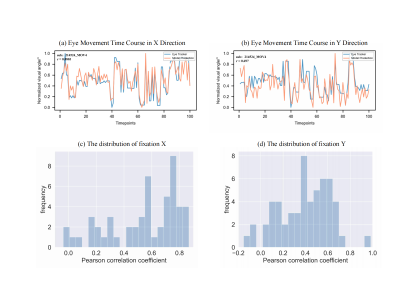

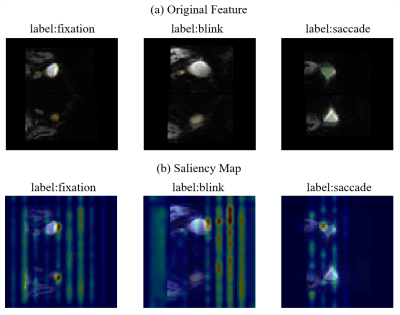

The proposed model achieved an accuracy of 0.48 in the classification of eye movements, with F1-scores of 0.56, 0.51 and 0.34 for fixation, blink and saccade, respectively (Fig 2 b). A limitation of MRI-based eye tracking methods is that only a few slices (6 in the present work) of a whole-brain fMRI volume (85 slices in the present work) contain the eyeballs, so eye movements that occur while the remaining slices are being acquired are missed. To evaluate temporal performance, the proportions of the three types of eye movements were therefore calculated within a sliding window of 12 seconds, both from the model predictions and from the eye-tracking files. The predictions of fixation (averaged r=0.28) and blink (averaged r=0.46) were positively correlated with the eye tracker records (Fig 3 a-d), whereas the prediction of saccade (averaged r=0.05) was not (Fig 3 e, f). The gaze-point regression achieved average correlation coefficients of 0.39 and 0.46 in the x and y directions (Fig 4). A visualization analysis was then applied, and the results showed that the salient features were mainly located within the eyeballs and the areas immediately anterior and posterior to them (Fig 5), suggesting that the model made use of eye-movement artifacts in the images.

Discussion and Conclusion
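The sliding-window comparison reported in the Results can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' evaluation code: the function names are hypothetical, the window is given in samples because the abstract does not state the sampling rate, and a stride of one sample is assumed.

```python
from math import sqrt


def windowed_proportion(labels, target, window):
    """Fraction of samples equal to `target` in each full sliding window (stride 1)."""
    if window <= 0 or len(labels) < window:
        raise ValueError("window must be positive and no longer than the label sequence")
    hits = [1 if lab == target else 0 for lab in labels]
    count = sum(hits[:window])
    proportions = [count / window]
    for i in range(window, len(hits)):
        # Slide by one sample: add the entering sample, drop the leaving one.
        count += hits[i] - hits[i - window]
        proportions.append(count / window)
    return proportions


def pearson_r(a, b):
    """Pearson correlation of two equal-length sequences (nan if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return float("nan")
    return cov / sqrt(var_a * var_b)


# Usage with toy labels; a real window would span 12 s worth of samples.
predicted = ["fix", "fix", "blink", "fix", "sacc", "blink", "blink", "fix"]
tracked = ["fix", "blink", "blink", "fix", "fix", "blink", "blink", "fix"]
window = 4  # hypothetical window length in samples
r_blink = pearson_r(
    windowed_proportion(predicted, "blink", window),
    windowed_proportion(tracked, "blink", window),
)
```

Comparing windowed proportions rather than individual samples tolerates events that fall between the acquisitions of the eye-containing slices.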

In the present work, we proposed MRGazerII, a deep learning pipeline for predicting eye-movement states. The method was able to predict fixation, blink, saccade and fixation point with acceptable accuracy at a sampling interval of tens of milliseconds. The visualization analysis identified salient areas in the eyeballs and the adjacent anterior and posterior areas, suggesting that the features are related to eye-motion artifacts. The relatively poor classification of saccades may be due to the heterogeneity of eye movements, and future work that includes more subtypes of eye movements, such as microsaccades10 and slow eye closure11, may improve performance. Validation on multiple datasets should also be carried out in future work. In conclusion, the proposed MRGazerII, a camera-free eye tracking method, is able to report typical eye movements at a temporal interval of tens of milliseconds and should be helpful for future combined fMRI and eye-movement analyses.

Acknowledgements

The authors are grateful to all study participants.

References

1 Klein, C. & Ettinger, U. Eye movement research: An introduction to its scientific foundations and applications. (Springer Nature, 2019).

2 LaConte, S., Glielmi, C., Heberlein, K. & Hu, X. in Proc. Intl. Soc. Magn. Reson. Med.

3 Koba, C., Notaro, G., Tamm, S., Nilsonne, G. & Hasson, U. Spontaneous eye movements during eyes-open rest reduce resting-state-network modularity by increasing visual-sensorimotor connectivity. Netw Neurosci 5, 451-476 (2021).

4 Goto, Y. et al. Saccade eye movements as a quantitative measure of frontostriatal network in children with ADHD. Brain Dev-Jpn 32, 347-355, doi:10.1016/j.braindev.2009.04.017 (2010).

5 Frey, M., Nau, M. & Doeller, C. F. Magnetic resonance-based eye tracking using deep neural networks. Nat Neurosci 24, 1772-1779, doi:10.1038/s41593-021-00947-w (2021).

6 Van Essen, D. C. et al. The WU-Minn Human Connectome Project: An overview. Neuroimage 80, 62-79, doi:10.1016/j.neuroimage.2013.05.041 (2013).

7 He, K. M., Zhang, X. Y., Ren, S. Q. & Sun, J. Deep Residual Learning for Image Recognition. Proc Cvpr Ieee, 770-778, doi:10.1109/Cvpr.2016.90 (2016).

8 Woo, S. H., Park, J., Lee, J. Y. & Kweon, I. S. CBAM: Convolutional Block Attention Module. Lect Notes Comput Sc 11211, 3-19, doi:10.1007/978-3-030-01234-2_1 (2018).

9 Zhang, H., Cisse, M., Dauphin, Y. N. & Lopez-Paz, D. mixup: Beyond Empirical Risk Minimization. (2017).

10 Martinez-Conde, S., Otero-Millan, J. & Macknik, S. L. The impact of microsaccades on vision: towards a unified theory of saccadic function. Nat Rev Neurosci 14, 83-96, doi:10.1038/nrn3405 (2013).

11 Poudel, G. R., Innes, C. R. H., Bones, P. J., Watts, R. & Jones, R. D. Losing the struggle to stay awake: Divergent thalamic and cortical activity during microsleeps. Hum Brain Mapp 35, 257-269, doi:10.1002/hbm.22178 (2014).
