1599

Low-field MR Multitasking for an integrated abdominal MRgRT imaging framework

1 Radiology, University of Southern California, Los Angeles, CA, United States; 2 Bioengineering, University of California, Los Angeles, Los Angeles, CA, United States; 3 Electrical Engineering, University of Southern California, Los Angeles, CA, United States; 4 University of California, Los Angeles, Los Angeles, CA, United States; 5 Radiation Oncology, University of Southern California, Los Angeles, CA, United States; 6 Siemens Medical Solutions, Los Angeles, CA, United States; 7 Cedars-Sinai Medical Center, Los Angeles, CA, United States

Synopsis

Keywords: Low-Field MRI, Radiotherapy

MR applications in radiation therapy have shown great promise for highly conformal treatment. However, MR-guided radiotherapy (MRgRT) in the abdomen remains challenging because of respiratory motion and the large number of organs involved. In this work, we demonstrate at 0.55T an integrated multitask MR platform for adaptive radiation planning and treatment monitoring in the abdomen. We propose a hybrid GRE-TSE sequence: from a single "pre-beam" scan we can generate volumetric, multi-contrast, motion-resolved images for adaptive treatment planning, and the same scan also enables multi-contrast 3D real-time display during treatment.

Introduction

MR-guided radiation therapy (MRgRT) holds great potential for personalized, biologically adaptive radiotherapy1. However, critical challenges related to onboard image acquisition remain in the current abdominal MRgRT workflow: 1) In the pre-beam adaptive planning phase, no respiration-correlated (i.e., 4D-MR) or real-time volumetric sequences are commercially available to assess target and organ-at-risk (OAR) positions across respiratory phases. Several 4D-MR sequences2–5 under research investigation are all single-contrast-weighted, which limits target/OAR delineation. 2) In the beam-on phase, real-time tracking focuses only on the tumor target and uses a 2D sequence. A few 3D real-time imaging methods6–8 have recently been proposed but suffer from the same single-contrast-weighting problem. 3) The sequences used for pre-beam adaptive planning and beam-on real-time tracking often differ in resolution and contrast, potentially introducing inconsistency between planning and treatment.

Addressing these challenges is perhaps a more urgent need at relatively low fields9 (e.g., 0.35T, 0.55T, or 1T), because some MRgRT systems (e.g., MR-Linacs) operate at low field, whereas the MR imaging research community has long focused on ≥1.5T. In this study, we developed a novel low-field MR Multitasking (MR-MT) imaging framework that provides both 4D-MR and real-time 3D images with multiple simultaneous contrast weightings and low latency. We demonstrate its feasibility for enabling an integrated MRgRT workflow at 0.55T.

Method

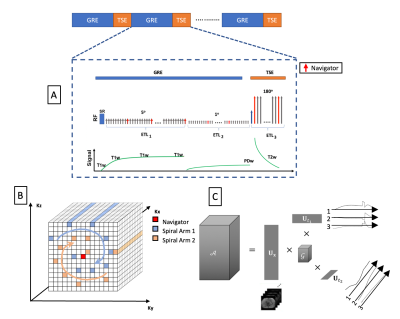

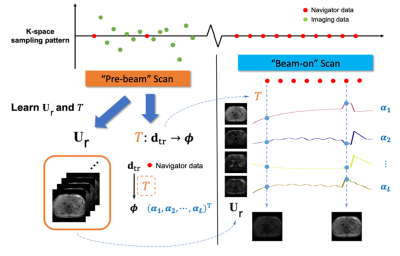

MR Sequence: This work builds on recent MR-MT techniques demonstrated as proof of concept at 3T: multi-task MR simulation10 and single projection driven real-time multi-contrast (SPIDERM) imaging11. To account for the different tissue properties at low field, we implemented a hybrid GRE and TSE sequence with a Cartesian spiral-in k-space trajectory to generate T1w, T2w, and PDw contrasts (Fig. 1). Each spiral arm contains 10 lines, and the 10th line, located at the k-space center, serves as the navigator. The sequence was implemented using Pulseq12.

Reconstruction workflow: The MR-MT sequence can serve both as the "pre-beam" scan for adaptive planning and as the "beam-on" scan for real-time monitoring. From the "pre-beam" scan, we can 1) reconstruct multi-contrast, respiratory phase-resolved images for adaptive planning, and 2) learn a subject-specific spatial subspace and a linear transformation between navigator data and temporal subspace coordinates. Specifically, MR-MT, a low-rank tensor (LRT) technique13, efficiently represents an image spanning these multiple spatiotemporal dimensions as linear combinations of spatial coefficients $$$\mathbf{U}_{\mathbf{r}}$$$ weighted by a temporal factor $$$\Phi$$$, as shown in Fig. 1C:
$$\mathcal{A}=\Phi \times_1 \mathbf{U}_{\mathbf{r}}$$
For motion-resolved images used in treatment planning, $$$\mathcal{A}$$$ is the tensor representing a multidimensional image $$$\mathcal{A}(\mathbf{r}, t_m, t_c)$$$, with $$$\mathbf{r}=[x,y,z]$$$ the spatial location and $$$t_m$$$, $$$t_c$$$ the temporal motion and contrast dimensions. The combined temporal factor
$$\Phi=\mathcal{G} \times_2 \mathbf{U}_{\mathrm{m}} \times_3 \mathbf{U}_{\mathrm{c}}$$
is determined from Bloch-constrained low-rank tensor completion and motion binning of the navigator data, where $$$\mathcal{G}$$$ is the core tensor and the $$$\mathbf{U}$$$ matrices contain the basis functions for each dimension. For real-time images, $$$\mathcal{A}$$$ is the tensor representing a volumetric real-time image $$$\mathcal{A}(\mathbf{r}, t)$$$, with $$$t$$$ the combined contrast-motion dimension.
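A minimal NumPy sketch of the tensor factorization above, with toy random factors standing in for the learned ones. All sizes are hypothetical, `mode_product` is a helper written here (not part of any MR toolbox), and the 1-based modes ×₁, ×₂, ×₃ of the equations become NumPy axes 0, 1, 2. It builds $$$\Phi$$$ and $$$\mathcal{A}$$$, then indexes one slice at one respiratory bin and one contrast frame, mirroring how any such combination can be chosen for planning.

```python
import numpy as np


def mode_product(tensor: np.ndarray, matrix: np.ndarray, mode: int) -> np.ndarray:
    """n-mode product tensor x_mode matrix; `matrix` has shape (J, tensor.shape[mode])."""
    t = np.moveaxis(tensor, mode, 0)                   # bring the target axis to the front
    unfolded = t.reshape(t.shape[0], -1)               # mode-n unfolding
    out = (matrix @ unfolded).reshape((matrix.shape[0],) + t.shape[1:])
    return np.moveaxis(out, 0, mode)                   # restore the original axis order


rng = np.random.default_rng(0)
nx, ny, nz = 4, 4, 4           # toy spatial grid (hypothetical sizes)
n_motion, n_contrast = 6, 20   # respiratory bins and contrast time points (hypothetical)
L, Lm, Lc = 3, 4, 2            # ranks of the spatial, motion and contrast factors (hypothetical)

G = rng.standard_normal((L, Lm, Lc))           # core tensor
U_m = rng.standard_normal((n_motion, Lm))      # motion basis functions
U_c = rng.standard_normal((n_contrast, Lc))    # contrast basis (Bloch-simulated in the abstract)
U_r = rng.standard_normal((nx * ny * nz, L))   # spatial coefficient maps

# Phi = G x_2 U_m x_3 U_c  (axes 1 and 2 in 0-based NumPy indexing)
Phi = mode_product(mode_product(G, U_m, 1), U_c, 2)   # shape (L, n_motion, n_contrast)
# A = Phi x_1 U_r
A = mode_product(Phi, U_r, 0)                         # shape (voxels, n_motion, n_contrast)

# Any slice, respiratory state and contrast weighting can be pulled out of the 5-D array.
volume = A.reshape(nx, ny, nz, n_motion, n_contrast)
image = volume[:, :, 2, 3, 10]    # slice 2, respiratory bin 3, contrast frame 10
print(A.shape, image.shape, np.linalg.matrix_rank(A.reshape(A.shape[0], -1)))
```

Because every voxel's time course is a combination of only L temporal functions, the voxel-by-time unfolding of $$$\mathcal{A}$$$ has rank at most L, which is what makes a highly undersampled acquisition recoverable.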
The real-time temporal factor $$$\Phi$$$ can be extracted directly from an SVD of the navigator data. We then determine $$$\mathbf{U}_{\mathbf{r}}$$$ separately for the real-time and motion-resolved applications by solving
$$\widehat{\mathbf{U}}_{\mathbf{r}}=\arg \min _{\mathbf{U}_{\mathbf{r}}}\left\|\mathbf{d}-\Omega\left(\Phi \times_1 \mathbf{E U}_{\mathbf{r}}\right)\right\|_2^2+\lambda R\left(\mathbf{U}_{\mathbf{r}}\right)$$
where $$$\mathbf{d}$$$ is the measured imaging data, $$$\Omega$$$ is the spiral-in Cartesian sampling operator, $$$\mathbf{E}$$$ is the multi-channel spatial encoding operator, and $$$\lambda$$$ is the regularization parameter weighting $$$R\left(\mathbf{U}_{\mathbf{r}}\right)$$$, a wavelet regularizer. For the "beam-on" scan, once $$$\mathbf{U}_{\mathbf{r}}$$$ has been learned by retrospectively applying the real-time reconstruction to the "pre-beam" scan, only navigator data (the central k-space line) are needed to generate the real-time $$$\Phi$$$ and thus the multidimensional images. Furthermore, the real-time $$$\Phi$$$ can be projected onto a Bloch-simulated subspace to "freeze" the real-time image at a specific contrast while preserving the real-time respiratory motion (Fig. 2).
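The real-time path can be sketched on a noise-free toy problem, assuming real-valued data, a single coil ($$$\mathbf{E}$$$ = identity), a random entry mask in place of the Cartesian spiral-in $$$\Omega$$$, and $$$\lambda = 0$$$ (no wavelet term). The navigator readout `P` is a hypothetical linear stand-in for the central k-space line. Because $$$\Phi$$$ comes from an SVD of the navigator data, the map from navigator samples to $$$\Phi$$$ is itself linear (`W` below), which is one possible way to obtain the linear transformation described above; the abstract does not say how its transformation is computed.

```python
import numpy as np

rng = np.random.default_rng(1)
n_vox, L = 64, 3           # voxels and subspace rank (hypothetical toy sizes)
T_pre, T_beam = 200, 50    # pre-beam and beam-on time points (hypothetical)
n_nav = 16                 # navigator samples per time point (stand-in for the k-space centre line)

U_true = rng.standard_normal((n_vox, L))
Phi_pre_true = rng.standard_normal((L, T_pre))
P = rng.standard_normal((n_nav, n_vox))   # hypothetical linear navigator readout

X_pre = U_true @ Phi_pre_true             # Casorati matrix (voxels x time)
nav_pre = P @ X_pre                       # navigator data, rank L

# 1) Temporal factor from the SVD of the navigator data.
U_nav, s_nav, Vt_nav = np.linalg.svd(nav_pre, full_matrices=False)
Phi = Vt_nav[:L]                                   # (L, T_pre)
W = np.diag(1.0 / s_nav[:L]) @ U_nav[:, :L].T      # linear map: navigator samples -> Phi

# 2) Spatial factor from undersampled data (lambda = 0, E = identity, Omega = random mask).
mask = rng.random((n_vox, T_pre)) < 0.3
U_r = np.empty((n_vox, L))
for v in range(n_vox):
    t_obs = mask[v]
    # Least squares per voxel: X_pre[v, t_obs] ~= U_r[v] @ Phi[:, t_obs]
    U_r[v] = np.linalg.lstsq(Phi[:, t_obs].T, X_pre[v, t_obs], rcond=None)[0]

# 3) Beam-on: only the navigator is acquired; images follow from U_r and W.
Phi_beam_true = rng.standard_normal((L, T_beam))
nav_beam = P @ (U_true @ Phi_beam_true)
X_beam = U_r @ (W @ nav_beam)
print(np.max(np.abs(X_beam - U_true @ Phi_beam_true)))
```

With `U_r` and `W` fixed from the pre-beam data, each beam-on frame costs one small matrix product, which is why only the navigator line has to be acquired during treatment.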
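The abstract does not detail the projection onto the Bloch-simulated subspace. One hypothetical reading, sketched below, assumes each real-time frame obeys the same factorization as the motion-resolved model, $$$\Phi(:,t) = \mathcal{G} \times_2 \mathbf{a}(t) \times_3 \mathbf{c}(t)$$$, where the contrast coordinates $$$\mathbf{c}(t)$$$ are known from Bloch simulation of the sequence timing and the motion coordinates $$$\mathbf{a}(t)$$$ are unknown. Solving for $$$\mathbf{a}(t)$$$ by least squares and re-synthesizing with a fixed target contrast keeps the motion while freezing the contrast. All names and sizes are illustrative, and the sketch needs $$$L \ge L_m$$$ so that the motion coordinates are identifiable.

```python
import numpy as np

rng = np.random.default_rng(2)
L, Lm, Lc, T = 6, 4, 2, 40                   # hypothetical ranks and number of real-time frames

G = rng.standard_normal((L, Lm, Lc))         # core tensor
C = rng.standard_normal((T, Lc))             # Bloch-simulated contrast coordinates c(t), one row per frame
motion_true = rng.standard_normal((T, Lm))   # motion coordinates a(t), unknown in practice

# Real-time temporal factor: Phi[:, t] = G x_2 a(t) x_3 c(t)
Phi_rt = np.einsum('lmc,tm,tc->lt', G, motion_true, C)


def freeze_contrast(phi, core, contrast, c_target):
    """Keep each frame's motion coordinates but re-synthesize it at contrast c_target."""
    G_target = np.einsum('lmc,c->lm', core, c_target)      # G x_3 c_target, shape (L, Lm)
    motion = np.empty((phi.shape[1], core.shape[1]))
    for t in range(phi.shape[1]):
        G_t = np.einsum('lmc,c->lm', core, contrast[t])     # G x_3 c(t), shape (L, Lm)
        motion[t] = np.linalg.lstsq(G_t, phi[:, t], rcond=None)[0]   # estimate a(t)
    return G_target @ motion.T, motion                      # frozen factor has shape (L, T)


# Freeze every frame to the contrast of frame 0 (an arbitrary illustrative choice).
Phi_frozen, motion_est = freeze_contrast(Phi_rt, G, C, c_target=C[0])
```

Multiplying `Phi_frozen` by a learned `U_r` would then give a real-time volume at a single, fixed contrast that still follows respiration.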

In vivo study: Six volunteers were scanned on a whole-body 0.55T system (prototype Magnetom Aera, Siemens Healthineers, Erlangen, Germany) equipped with high-performance shielded gradients (45 mT/m amplitude, 200 T/m/s slew rate). Imaging parameters: FOV = 256 (SI) × 358 (LR) × 256 (AP) mm³, resolution = 1.6 × 1.6 × 3.2 mm³, TR/TE = 6.0/3.0 ms, flip angles = 5°/1° (GRE) and 180° (TSE refocusing), segment lengths = 150/150/100.
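To make the navigator timing concrete, here is a hypothetical sketch of a Cartesian spiral-in ordering in the phase-encoding (ky, kz) plane with 10 lines per arm ending at the k-space center, as described for the MR sequence. The golden-angle rotation between arms, the half-turn spiral, and the grid size are assumptions, not the authors' Pulseq implementation, and a practical ordering would also vary the arm radii to fill k-space. The timing helper further assumes that every line occupies one TR of 6.0 ms, which may not hold for the TSE segments; under that assumption a navigator is acquired every 10 × 6.0 ms = 60 ms (about 17 Hz).

```python
import math

GOLDEN_ANGLE = math.pi * (3 - math.sqrt(5))   # ~137.5 degrees; hypothetical rotation between arms


def spiral_in_arm(arm: int, ny: int, nz: int, lines_per_arm: int = 10, turns: float = 0.5):
    """(ky, kz) indices for one Cartesian spiral-in arm; the last line is the k-space centre."""
    theta0 = arm * GOLDEN_ANGLE
    points = []
    for i in range(lines_per_arm):
        r = (lines_per_arm - 1 - i) / (lines_per_arm - 1)            # radius shrinks from 1 to 0
        theta = theta0 + 2 * math.pi * turns * i / (lines_per_arm - 1)
        ky = round(r * (ny // 2 - 1) * math.cos(theta))               # snap to the Cartesian grid
        kz = round(r * (nz // 2 - 1) * math.sin(theta))
        points.append((ky, kz))
    return points


def navigator_interval_ms(lines_per_arm: int, tr_ms: float) -> float:
    """Time between navigators, assuming every line costs one TR."""
    return lines_per_arm * tr_ms


arms = [spiral_in_arm(a, ny=160, nz=80) for a in range(5)]   # hypothetical grid size
interval = navigator_interval_ms(10, 6.0)
print(arms[0][-1], interval, 1000 / interval)
```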

Results

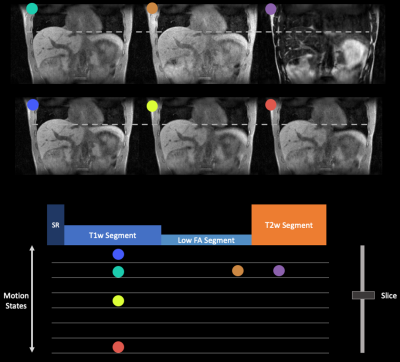

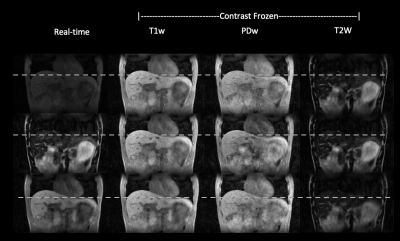

Figure 3 shows the respiratory phase-resolved, multi-contrast, volumetric images reconstructed from the "pre-beam" scan for adaptive planning. Note that any combination of contrast weighting, motion state, and slice can be selected. Figure 4 demonstrates the simultaneous multi-contrast real-time tracking capability of the proposed MR-MT technique: the contrast can be frozen while respiratory motion information is preserved, even though the acquired contrast changes constantly. One can therefore select a contrast weighting tailored to the tumor or an OAR for optimal real-time tracking.

Discussion

In this study, we developed a novel MR-MT sequence that shows great potential to serve the major imaging tasks in a typical low-field MRgRT workflow. An integrated MRgRT imaging framework based on this sequence may bring image consistency throughout the daily treatment course and can even be extended to include MR simulation, which would help streamline the entire MR-based RT workflow and could improve overall accuracy and outcomes. Rigorous validation on a motion phantom and a patient cohort is underway. Further investigation of this technique on a commercial MR-Linac system is also warranted.

Acknowledgements

We acknowledge grant support from the National Institutes of Health (R01EB029088), USC Ming Hsieh Institute (MHI) Award, the National Science Foundation (#1828736) and research support from Siemens Healthineers.

References

1. Otazo, R. et al. MRI-guided Radiation Therapy: An Emerging Paradigm in Adaptive Radiation Oncology. Radiology 298, 202747 (2020).

2. Rank, C. M. et al. 4D respiratory motion-compensated image reconstruction of free-breathing radial MR data with very high undersampling. Magn Reson Med 77, 1170–1183 (2017).

3. Huttinga, N. R. F., van den Berg, C. A. T., Luijten, P. R. & Sbrizzi, A. MR-MOTUS: model-based non-rigid motion estimation for MR-guided radiotherapy using a reference image and minimal k-space data. Phys Med Biol 65, 015004 (2020).

4. Deng, Z. et al. Four-dimensional MRI using three-dimensional radial sampling with respiratory self-gating to characterize temporal phase-resolved respiratory motion in the abdomen. Magn Reson Med 75, 1574–1585 (2016).

5. Deng, Z. et al. A post-processing method based on interphase motion correction and averaging to improve image quality of 4D magnetic resonance imaging: a clinical feasibility study. Br J Radiol 92, 20180424 (2019).

6. Huttinga, N. R. F., Bruijnen, T., van den Berg, C. A. T. & Sbrizzi, A. Real-Time Non-Rigid 3D Respiratory Motion Estimation for MR-Guided Radiotherapy Using MR-MOTUS. IEEE Trans Med Imaging 41, 332–346 (2022).

7. Feng, L., Tyagi, N. & Otazo, R. MRSIGMA: Magnetic Resonance SIGnature MAtching for real-time volumetric imaging. Magn Reson Med 84, 1280–1292 (2020).

8. Feng, L. & Liu, F. High Spatiotemporal Resolution Motion-Resolved MRI using XD-GRASP-Pro. in International Society for Magnetic Resonance in Medicine (2020).

9. Marques, J. P., Simonis, F. F. J. & Webb, A. G. Low-field MRI: An MR physics perspective. J Magn Reson Imaging 49, 1528–1542 (2019).

10. Chen, J. et al. Multi-Task MR Simulation for Abdominal Radiation Treatment Planning: Technical Development. in 2021 Annual Meeting of the International Society for Magnetic Resonance in Medicine (2021).

11. Han, P. et al. Single projection driven real-time multi-contrast (SPIDERM) MR imaging using pre-learned spatial subspace and linear transformation. Phys Med Biol 67, 135008 (2022).

12. Layton, K. J. et al. Pulseq: A rapid and hardware-independent pulse sequence prototyping framework. Magn Reson Med 77, 1544–1552 (2017).

13. Christodoulou, A. G. et al. Magnetic resonance multitasking for motion-resolved quantitative cardiovascular imaging. Nat Biomed Eng 2, 215–226 (2018).

Figures