1211

Usefulness of Deep Learning Models of DWI in Direct Differentiating Malignant and Benign Breast Tumors Without Lesion Segmentation

1 Kyoto University Graduate School of Medicine, Kyoto, Japan; 2 Kyoto University Hospital, Institute for Advancement of Clinical and Translational Science (iACT), Kyoto University Hospital, Kyoto, Japan; 3 Kyoto University Faculty of Medicine, Kyoto, Japan; 4 A.I.System Research CO.,Ltd., Kyoto, Japan; 5 Diagnostic Radiology, Kansai Electric Power Hospital, Osaka, Japan; 6 Diagnostic Radiology, Tenri Hospital, Nara, Japan; 7 Kyoto University Graduate School of Medicine, Kyoto, Japan; 8 e-Growth Co., Ltd., Kyoto, Japan; 9 Breast Surgery, Kyoto University Graduate School of Medicine, Kyoto, Japan

Synopsis

Keywords: Breast

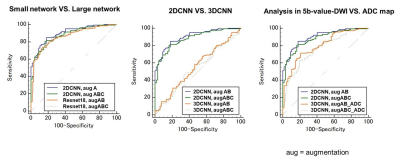

A total of 348 women with suspected breast tumors were enrolled, and 206 breast lesions (139 malignant, 67 benign) were analyzed. Breast DWI with five b-values (5b-value DWI) was performed, and a deep learning model dedicated to this breast DWI dataset was developed. Several comparative experiments were performed: data augmentations, a small VS. a large network, 2D CNN VS. 3D CNN, and 5b-value DWI VS. ADC maps as input. Augmentations using elastic deformation, affine transformation, and Gaussian noise improved diagnostic performance, with AUCs up to 0.90. Using a small CNN and 5b-value DWI rather than ADC maps also yielded higher diagnostic performance (AUC = 0.88-0.90), demonstrating the potential of AI to improve the diagnostic performance of breast DWI.

Introduction

Contrast-enhanced MRI of the breast, currently the mainstream diagnostic method for breast cancer, has high sensitivity but variable specificity, resulting in a relatively high false-positive rate. DWI, which does not require contrast media, has been widely used to differentiate between benign and malignant breast tumors and to monitor breast lesions (1). Artificial intelligence has been applied to breast MRI to investigate the detectability of breast lesions (2) and the differentiation of malignant and benign breast tumors (3); however, there has been little research applying artificial intelligence to DWI. We previously reported the utility of 5b-value DWI for differentiating malignant and benign breast tumors (4). Our purpose was therefore to build a deep learning model for differentiating benign and malignant breast tumors using diffusion-weighted images acquired with five different b-values. We explored a direct approach that diagnoses breast lesions without lesion segmentation.

Materials & Methods

-Study design and MRI acquisition-

This prospective study included 348 women with suspected breast tumors. After excluding patients who had received treatment and those with no lesion, 206 breast lesions (139 malignant, 67 benign) were analyzed. Breast MRI was performed on a 3-T system (MAGNETOM Prisma, Siemens) equipped with a dedicated 18-channel breast array coil. DWI was acquired with five b-values of 0, 200, 800, 1000, and 1500 s/mm²; repetition time/echo time, 6900/49 ms; FOV, 330×187 mm; matrix, 162×92; slice thickness, 3.0 mm; acquisition time, 2.32 min.
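As a quick check on the acquisition parameters above, the nominal acquired voxel size follows from FOV divided by matrix in each in-plane direction. The sketch below is plain arithmetic; it assumes that the FOV and matrix values correspond to the same directions in the order listed and ignores any interpolation applied at image reconstruction.

```python
# Nominal acquired voxel size = FOV / matrix per in-plane direction.
# Parameters are the DWI settings listed above; reconstruction-time
# interpolation (if any) is not accounted for here.
fov_mm = (330.0, 187.0)   # FOV (mm)
matrix = (162, 92)        # acquisition matrix
slice_thickness_mm = 3.0

voxel_mm = tuple(f / m for f, m in zip(fov_mm, matrix))
voxel_volume_mm3 = voxel_mm[0] * voxel_mm[1] * slice_thickness_mm

print(f"in-plane: {voxel_mm[0]:.2f} x {voxel_mm[1]:.2f} mm, "
      f"thickness: {slice_thickness_mm} mm, volume: {voxel_volume_mm3:.1f} mm^3")
```

Under these assumptions the acquired voxels are roughly 2.0 × 2.0 × 3.0 mm, i.e. nearly isotropic in-plane.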

-Comparative experiments to explore a deep learning-based algorithm for analyzing DWI-

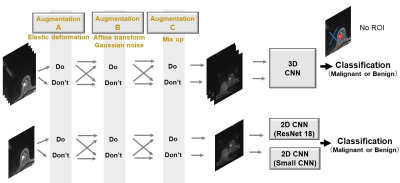

We attempted to build a convolutional neural network (CNN) model that classifies tumors as benign or malignant from DW images, using a much smaller dataset than is typical for deep learning. In this setting, it is uncertain whether commonly used networks with a large number of parameters are effective. In addition, the choice of suitable data augmentation and preprocessing for DWI is not obvious. We therefore compared candidate models through several experiments (Figure 1).

/Comparison of data augmentations: Several combinations of data augmentations were applied during training, including:

Augmentation A: random affine transformation and random noise

Augmentation B: mix-up (5)

Augmentation C: random elastic deformation

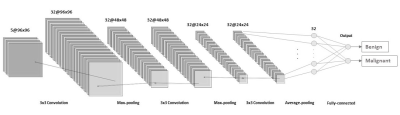

/Small network VS. Large network: The well-known ResNet18 (6) was compared with a smaller network (Figure 2).
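To make the contrast in model size concrete, the trainable parameters can be counted by hand. The sketch below counts a standard ResNet18 (four stages of two BasicBlocks, batch normalization, linear head) and a hypothetical three-block small CNN; the small network's widths are illustrative only, since its actual architecture is given in Figure 2 and not reproduced here. Adapting ResNet18 to five input channels and two classes is also an assumption.

```python
def conv_bn(c_in, c_out, k):
    """Parameters of a bias-free k x k conv followed by BatchNorm (scale + shift)."""
    return k * k * c_in * c_out + 2 * c_out

def resnet18_params(in_ch=3, n_classes=1000):
    """Trainable parameters of a standard ResNet-18 (BasicBlock, layers [2, 2, 2, 2])."""
    total = conv_bn(in_ch, 64, 7)              # 7x7 stem convolution
    c_prev = 64
    for c in (64, 128, 256, 512):
        for block in range(2):
            c_in = c_prev if block == 0 else c
            total += conv_bn(c_in, c, 3) + conv_bn(c, c, 3)
            if block == 0 and c_in != c:       # 1x1 projection shortcut when width changes
                total += conv_bn(c_in, c, 1)
        c_prev = c
    return total + 512 * n_classes + n_classes  # fully connected head

def small_cnn_params(in_ch=5, widths=(16, 32, 64), n_classes=2):
    """Hypothetical small CNN: 3x3 conv-BN blocks, global average pooling, linear head."""
    total, c_prev = 0, in_ch
    for c in widths:
        total += conv_bn(c_prev, c, 3)
        c_prev = c
    return total + c_prev * n_classes + n_classes

print(resnet18_params(), resnet18_params(in_ch=5, n_classes=2), small_cnn_params())
```

The standard configuration reproduces the familiar ResNet18 total of 11,689,512 parameters. Under these assumptions, ResNet18 with 5 input channels and 2 classes has about 11.2 million parameters versus about 24 thousand for the hypothetical small CNN, several hundredfold more capacity for a training set of roughly two hundred tumors.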

/2DCNN VS. 3DCNN: For the 2D CNN, the network input is the set of five b-value images of a single slice; at inference, the network outputs for all slices of a tumor are aggregated to predict the class of the entire tumor. For the 3D CNN, the input is prepared in the same way, except that five randomly selected slices from a tumor are used as the training input.
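The 2D and 3D input schemes and the slice-to-tumor aggregation can be sketched with NumPy, assuming the DWI volume is stored as a (5, Z, H, W) array (b-values, slices, rows, columns) and that the tumor's slice numbers are available from the meta-information. The abstract does not state how per-slice outputs are aggregated; averaging per-slice malignancy probabilities (with the maximum as an alternative) is an assumption, as are all function names and keeping the sampled 3D slices in anatomical order.

```python
import numpy as np

def stack_2d_inputs(volume, tumor_slices):
    """2D CNN inputs: one (5, H, W) sample per tumor slice; volume is (5, Z, H, W)."""
    return [volume[:, z] for z in tumor_slices]

def sample_3d_input(volume, tumor_slices, rng, depth=5):
    """3D CNN training input (sketch): `depth` tumor slices drawn at random, in slice order."""
    chosen = np.sort(rng.choice(tumor_slices, size=depth,
                                replace=len(tumor_slices) < depth))
    return volume[:, chosen]                 # shape (5, depth, H, W)

def aggregate_tumor(slice_probs, method="mean"):
    """Combine per-slice malignancy probabilities into one tumor-level probability."""
    p = np.asarray(slice_probs, dtype=float)
    return float(p.mean() if method == "mean" else p.max())
```

Sampling with replacement only when a tumor spans fewer than five slices is one way to keep the 3D input depth fixed; the abstract does not say how such small tumors were handled.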

/Analysis in 5b-value-DWI VS. ADC maps: We also investigated whether the AUC could be improved by using as input four ADC maps derived from the five b-value images.
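How the four ADC maps are obtained from five b-values is not specified. One plausible construction, assumed here, pairs b = 0 with each of the four nonzero b-values under the monoexponential model S_b = S_0 · exp(-b · ADC), so that ADC_i = ln(S_0 / S_bi) / b_i. The function name and the `eps` floor that guards against log of zero are hypothetical.

```python
import numpy as np

B_VALUES = np.array([0.0, 200.0, 800.0, 1000.0, 1500.0])  # s/mm^2, as acquired

def adc_maps(dwi, b_values=B_VALUES, eps=1e-6):
    """Four ADC maps (mm^2/s) from a (5, H, W) stack, pairing b=0 with each higher b-value.

    Assumes the monoexponential model S_b = S_0 * exp(-b * ADC).
    """
    s0 = np.maximum(dwi[0], eps)
    maps = [np.log(s0 / np.maximum(dwi[i], eps)) / b_values[i]
            for i in range(1, len(b_values))]
    return np.stack(maps)                                  # shape (4, H, W)
```

With purely monoexponential decay all four maps coincide; in real tissue they differ (for example, through perfusion effects at low b and non-Gaussian diffusion at high b), so reducing five images to four ADC values discards the raw signal that the 5b-value input retains.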

In all experiments, the 5b-value DWI dataset, together with meta-information on the slice numbers where the tumor is located, was used as input; no ROI was provided. In addition, unlabeled slices were randomly selected every epoch and added to the benign data to correct the class imbalance. The network was trained by supervised learning with a cross-entropy loss. The dataset was divided at the tumor level into ten subsets; nine were used as training data and one as test data, and this was repeated ten times so that each subset served once as the test set. This entire procedure was repeated six times, and the predicted probabilities of malignancy were averaged. PPV, NPV, and AUC for differentiating malignant and benign breast tumors were estimated using ROC analysis. To avoid overestimation, the network at 100 epochs was used for this analysis, and hyperparameters were not tuned for each experiment.
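The evaluation protocol above (tumor-level 10-fold cross-validation, repeated six times, with each tumor's predicted probability of malignancy averaged over repetitions, followed by ROC analysis) can be sketched in NumPy. `fit_predict` is a hypothetical stand-in for training and applying the CNN; the AUC is computed through its Mann-Whitney interpretation, and the 0.5 threshold used for PPV and NPV is an assumption, since the abstract does not report the operating point.

```python
import numpy as np

def tumor_folds(n_tumors, n_splits, rng):
    """Shuffle tumor indices and split them into n_splits disjoint test folds."""
    return np.array_split(rng.permutation(n_tumors), n_splits)

def repeated_cv_scores(labels, fit_predict, n_splits=10, n_repeats=6, seed=0):
    """Average each tumor's out-of-fold malignancy probability over n_repeats runs.

    fit_predict(train_idx, test_idx) -> probabilities for test_idx (hypothetical
    stand-in for CNN training; all slices of a tumor stay in the same fold).
    """
    rng = np.random.default_rng(seed)
    n = len(labels)
    probs = np.zeros((n_repeats, n))
    for r in range(n_repeats):
        for test_idx in tumor_folds(n, n_splits, rng):
            train_idx = np.setdiff1d(np.arange(n), test_idx)
            probs[r, test_idx] = fit_predict(train_idx, test_idx)
    return probs.mean(axis=0)

def auc(labels, scores):
    """ROC AUC = P(malignant score > benign score) + 0.5 * P(tie)."""
    labels, scores = np.asarray(labels), np.asarray(scores)
    diff = scores[labels == 1][:, None] - scores[labels == 0][None, :]
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())

def ppv_npv(labels, scores, threshold=0.5):
    """PPV and NPV when tumors scoring >= threshold are called malignant."""
    labels, pred = np.asarray(labels), np.asarray(scores) >= threshold
    tp, fp = np.sum(pred & (labels == 1)), np.sum(pred & (labels == 0))
    tn, fn = np.sum(~pred & (labels == 0)), np.sum(~pred & (labels == 1))
    return tp / (tp + fp), tn / (tn + fn)
```

Splitting by tumor rather than by slice prevents slices of the same lesion from appearing in both training and test folds, which would otherwise inflate the AUC.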

Results

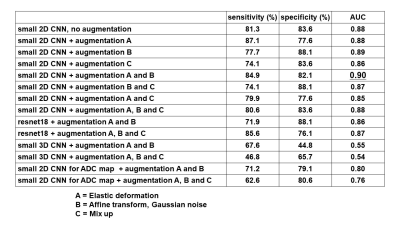

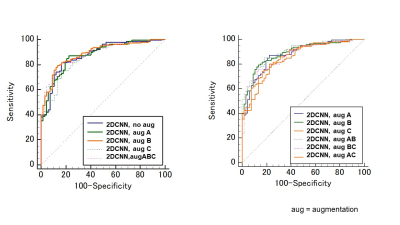

/Comparison of data augmentations: Elastic deformation (Augmentation C) and affine transformation with Gaussian noise (Augmentation A) slightly improved diagnostic performance (AUC: 0.88-0.89) (Figure 3, Table 1), whereas mix-up (Augmentation B) slightly decreased the AUC (0.86).

/Small network VS. Large network: ResNet18 yielded a slightly lower AUC than the small CNN on our dataset (0.86-0.87 VS. 0.88-0.90), so the small CNN was used in subsequent experiments (Figure 4, Table 1).

/2DCNN VS. 3DCNN: The 2D CNN achieved a markedly higher AUC than the 3D CNN (0.88-0.90 VS. 0.54-0.55) (Figure 4, Table 1).

/Analysis in 5b-value-DWI VS. ADC maps: The AUC using ADC maps (0.76-0.80) was lower than that using 5b-value DWI (0.88-0.90) (Figure 4, Table 1).

Discussion & Conclusion

Breast DWI has mainly been used in machine learning research in combination with DCE-MRI (3); to our knowledge, this is the first deep learning study to investigate diagnostic performance for breast tumors using breast DWI alone. Notably, the CNN-based model in this study achieved reasonably good performance (AUC up to 0.90) using only the slice locations of lesions, removing the need for the lesion segmentation usually required in image analysis. On our dataset, the small 2D CNN achieved higher accuracy than the larger ResNet18 and the 3D CNN, which might be attributable to the small size of the dataset. The augmentation experiments suggest that spatial deformations might be effective for breast DWI. Reported diagnostic performance of machine learning for differentiating breast tumors has varied (7). Further development of AI for breast DWI may help diagnose patients suspected of having breast cancer more accurately.

Acknowledgements

This work was supported by AMED Grant Number 22he0422025j0001 and KAKENHI Grant Number 21K07618.

References

1. Iima M, Honda M, Sigmund EE, Ohno Kishimoto A, Kataoka M, Togashi K. Diffusion MRI of the breast: Current status and future directions. J Magn Reson Imaging. 2020 Jul;52(1):70–90.

2. Adachi M, Fujioka T, Mori M, Kubota K, Kikuchi Y, Xiaotong W, et al. Detection and diagnosis of breast cancer using artificial intelligence based assessment of maximum intensity projection dynamic contrast-enhanced magnetic resonance images. Diagnostics (Basel). 2020 May 20;10(5):330.

3. Bhowmik A, Eskreis-Winkler S. Deep learning in breast imaging. BJR Open. 2022 May 13;4(1):20210060.

4. Iima M, Okazawa A, Okumura R, Takahara S, Noda T, Nishi T, Nakamoto Y, Kataoka M. A BI-RADS like lexicon for breast DWI: Proposal and early evaluation. In: ISMRM; 2021.

5. Zhang H, Cisse M, Dauphin YN, Lopez-Paz D. mixup: Beyond empirical risk minimization. In: International Conference on Learning Representations (ICLR); 2018.

6. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: CVPR; 2016. p. 770–778.

7. Meyer-Bäse A, Morra L, Meyer-Bäse U, Pinker K. Current Status and Future Perspectives of Artificial Intelligence in Magnetic Resonance Breast Imaging. Contrast Media Mol Imaging. 2020 Aug 28;2020:6805710.

Figures

Figure 4: ROC curves comparing small network VS. large network, 2DCNN VS. 3DCNN, and 5b-value DWI VS. ADC maps.