0980

Deep Learning-Based Automatic Pipeline for 3D Needle Localization on Intra-Procedural 3D MRI

¹Department of Radiological Sciences, UCLA, Los Angeles, CA, United States, ²Department of Bioengineering, UCLA, Los Angeles, CA, United States

Synopsis

Keywords: Machine Learning/Artificial Intelligence, MR-Guided Interventions

Needle localization in 3D images during MRI-guided interventions is challenging due to the complex structure of biological tissue and the variability in the appearance of needle features in in-vivo MR images. Deep learning networks such as the Mask Regional Convolutional Neural Network (R-CNN) could address this challenge by providing accurate needle feature segmentation in intra-procedural MR images. This work developed an automatic coarse-to-fine pipeline that combines 2.5D and 2D Mask R-CNN to leverage inter-slice information and localize the needle tip and axis in in-vivo intra-procedural 3D MR images.

Introduction

MRI-guided needle-based interventions have an increasing role in targeted biopsies due to the high soft-tissue contrast in MR images [1,2]. Accurate and rapid needle localization in 3D is crucial for clinical success in MRI-guided interventions [3-8]. Moreover, in novel procedures such as robotic-assisted interventions, real-time automatic needle tracking is an essential task [9,10].

Recent work has shown that 2D Mask Regional Convolutional Neural Network (R-CNN) models [3] can achieve real-time and accurate needle localization on 2D intra-procedural MRI [4,5]. Previous work has also investigated 3D deep learning-based methods for automatic needle feature segmentation and localization in 3D MR images [6-8]. However, training 3D deep learning networks requires a large-scale dataset of 3D MR images, which may not always be available for the intended application.

To overcome this challenge, our objective is to develop a novel coarse-to-fine pipeline that combines 2.5D and 2D Mask R-CNN models, trained with a limited number of datasets, to automatically localize needles in 3D using intra-procedural 3D MR images.

Methods

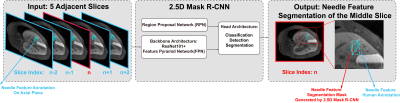

Dataset: In an animal research committee-approved study, we performed MRI-guided needle (Cook Medical, 20-gauge, 15 cm; Invivo, 18-gauge, 10 cm) interventions in the liver in six healthy female pigs (32-36 kg) on a 3T scanner (Prisma, Siemens). During the experiments, the pigs were under anesthesia, and breathing was controlled by a ventilator. Forty-two 3D T1-weighted (T1w) volumetric interpolated breath-hold examination (VIBE) Dixon MRI datasets (TR/TE1/TE2: 3.91/1.23/2.46 ms, field-of-view [FOV]: 346 × 237 mm², in-plane resolution: 1.35 × 1.35 mm², flip angle [FA]: 9°, axial slab, slice thickness: 1.5 mm, 120 slices, parallel imaging factor: 4, 13-s breath-hold scan) and 420 single-slice golden-angle 2D radial gradient-echo (GRE) images (TR/TE: [3.8/1.72, 5.08/3, or 6.3/2.85] ms, FOV: 300 × 300 mm², in-plane resolution: 1.56 × 1.56 mm², FA: 9°, slice thickness: 5 mm, sagittal and axial slices, 100 ms per image) were used for training the deep learning models in the pipeline. Needle feature tips and entry points (at the skin) in T1w-VIBE Dixon water images were annotated in 3D Slicer [11] by a researcher according to previously established guidelines [4,5]. The researcher repeated the annotations after a washout period of two weeks to assess the human intra-reader variation.

2.5D Mask R-CNN: We developed a 2.5D Mask R-CNN model (Figure 1) for 3D needle feature segmentation. Stacks of five adjacent axial slices from T1w-VIBE images were used as inputs to capture inter-slice information while reducing computational costs. The stack size of five slices was selected based on hyperparameter tuning (data not shown). The output of the model is the predicted needle segmentation mask for the central input slice. This process is repeated in a sliding-window fashion across all slices to generate a full 3D needle segmentation.
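The sliding-window input construction described for the 2.5D model can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `make_slice_stacks`, the array layout (slices, height, width), and the edge handling (replicating the first and last slice so that every slice can be a central slice) are assumptions that the abstract does not specify.

```python
import numpy as np


def make_slice_stacks(volume: np.ndarray, stack_size: int = 5) -> np.ndarray:
    """Build one stack of adjacent slices per slice of a 3D volume.

    volume: array of shape (num_slices, height, width).
    Returns an array of shape (num_slices, stack_size, height, width), where
    stack i is centred on slice i of the input volume.
    """
    if stack_size % 2 != 1:
        raise ValueError("stack_size must be odd so that a central slice exists")
    half = stack_size // 2
    # Assumption: pad by replicating the edge slices (not stated in the abstract).
    padded = np.pad(volume, ((half, half), (0, 0), (0, 0)), mode="edge")
    # Window axis is appended last: (num_slices, height, width, stack_size).
    windows = np.lib.stride_tricks.sliding_window_view(padded, stack_size, axis=0)
    # Move the window axis next to the slice axis.
    return np.moveaxis(windows, -1, 1)


# Hypothetical small volume with 120 slices, matching the slice count in the text.
volume = np.zeros((120, 32, 48), dtype=np.float32)
stacks = make_slice_stacks(volume)
print(stacks.shape)  # (120, 5, 32, 48)
```

Each stack would be passed to the segmentation model, and the predicted mask for its central slice written back to the same slice index to assemble the 3D segmentation.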

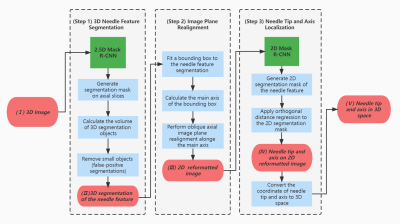

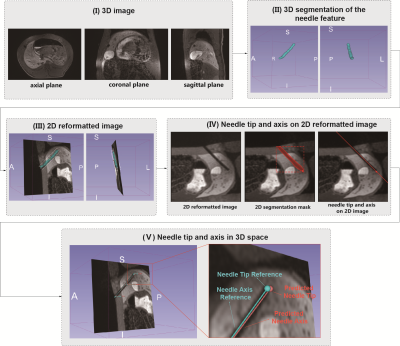

Needle Localization Pipeline: Our proposed pipeline (Figures 2 and 3) takes T1w-VIBE water images as input and localizes the needle feature tip and axis in 3D space via a fully automatic process implemented in 3D Slicer. The 2D Mask R-CNN in step 3 was first trained on 420 single-slice GRE images and then fine-tuned using 42 reformatted 2D images from T1w-VIBE datasets. The combination of 2.5D and 2D networks allows our pipeline to take advantage of a previously developed 2D Mask R-CNN model that achieved accurate results for 2D needle feature segmentation [4,5].

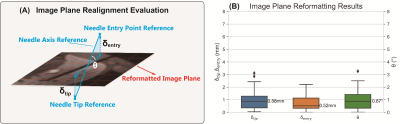

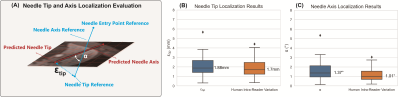

Evaluation Metrics: The 2D image plane realignment accuracy and the needle feature axis and tip localization accuracy in 3D were evaluated by the metrics defined in Figures 4A and 5A.
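The metric definitions themselves appear only in Figures 4A and 5A. As a hedged sketch, the code below assumes the tip localization error is the Euclidean distance (in mm) between the predicted and reference tip positions, and that the axis error is the angle between the predicted and reference needle axes, each taken as the vector from the entry point to the tip. The function names are hypothetical.

```python
import numpy as np


def tip_error_mm(pred_tip, ref_tip) -> float:
    """Euclidean distance between predicted and reference tip (same units as input)."""
    diff = np.asarray(pred_tip, dtype=float) - np.asarray(ref_tip, dtype=float)
    return float(np.linalg.norm(diff))


def axis_angle_deg(pred_entry, pred_tip, ref_entry, ref_tip) -> float:
    """Angle in degrees between the predicted and reference needle axes."""
    u = np.asarray(pred_tip, dtype=float) - np.asarray(pred_entry, dtype=float)
    v = np.asarray(ref_tip, dtype=float) - np.asarray(ref_entry, dtype=float)
    cos_angle = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    # Clip guards against values marginally outside [-1, 1] from rounding.
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))
```

Coordinates are assumed to be in physical (scanner) space in millimetres, so that voxel anisotropy does not distort either distances or angles.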

Results

We performed six-fold cross-validation with seven T1w-VIBE images in each fold (results in Figures 4 and 5). Across the 42 T1w-VIBE images, the needle insertion depth ranged from 1.94 cm to 12.26 cm, and the needle insertion angle (angle between the needle and axial plane) ranged from -87.64° to 2.23°. For all T1w-VIBE images, the needle detection success rate was 100%. The average inference times for 2.5D and 2D Mask R-CNN were 28.45 s/volume (237 ms/slice) and 259 ms/slice, respectively, on an NVIDIA 1080Ti GPU. For needle tip and axis localization, median $\xi_{tip}$ was 1.88 mm (human intra-reader variation: 1.70 mm), and median $\alpha$ was 1.37° (human intra-reader variation: 1.01°).

Discussion

Compared with 3D networks for needle localization that were trained with large datasets (>250 3D images) [6-8], our proposed pipeline used a relatively small set of training images (~40 3D images). The coarse 3D needle feature segmentations using the 2.5D Mask R-CNN enable image plane reformatting with median $\theta$ < 1° and can be used for updating MRI scan plane orientations [12]. The proposed pipeline achieved a median needle tip localization error of 1.88 mm (1.39 pixels), which is comparable to the human intra-reader variation and adequate for liver biopsy, since clinically relevant lesions typically have a diameter >10 mm [13,14].

There are aspects of this work that can be improved. First, the computation time for generating 3D needle feature segmentation could be further reduced with parallel processing of the multi-slice input sets. Second, the false-positive removal modules could incorporate relative position information of the needle and surrounding tissue to enhance performance. Third, independent testing could be done in the future to further assess the pipeline's performance.
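As a consistency check on two of the reported figures, the arithmetic below assumes that the pixel-equivalent tip error was obtained by dividing by the 1.35 mm in-plane resolution of the T1w-VIBE images, and that the per-volume inference time of the 2.5D model is the per-slice time multiplied by the 120 slices per volume. Both assumptions are inferred from the reported numbers rather than stated in the text.

```latex
\begin{align*}
\frac{1.88\ \text{mm}}{1.35\ \text{mm/pixel}} &\approx 1.39\ \text{pixels}, \\
120\ \text{slices} \times 0.237\ \text{s/slice} &= 28.44\ \text{s}.
\end{align*}
```

The second value agrees with the reported 28.45 s/volume to within rounding of the per-slice time, which suggests the volume is processed one central slice at a time and is why parallel processing of the input stacks could shorten the total.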

Conclusion

We developed a deep learning-based automatic pipeline for 3D needle localization on intra-procedural 3D MRI, which achieved accurate needle axis and tip localization compared to human references.

Acknowledgements

This work was supported in part by the NIH/NIBIB (R01 EB031934) and Siemens Medical Solutions USA.

References

1. Gupta S, Madoff DC. Image-guided percutaneous needle biopsy in cancer diagnosis and staging. Techniques in vascular and interventional radiology. 2007 Jun 1;10(2):88-101.

2. Moore CM, Robertson NL, Arsanious N, Middleton T, Villers A, Klotz L, Taneja SS, Emberton M. Image-guided prostate biopsy using magnetic resonance imaging–derived targets: a systematic review. European urology. 2013 Jan 1;63(1):125-40.

3. He K, Gkioxari G, Dollár P, Girshick R. Mask R-CNN. In: Proceedings of the IEEE international conference on computer vision 2017 (pp. 2961-2969).

4. Li X, Young AS, Raman SS, Lu DS, Lee YH, Tsao TC, Wu HH. Automatic needle tracking using Mask R-CNN for MRI-guided percutaneous interventions. International Journal of Computer Assisted Radiology and Surgery. 2020 Oct;15(10):1673-84.

5. Li X, Lee YH, Lu DS, Tsao TC, Wu HH. Physics-Driven Mask R-CNN for Physical Needle Localization in MRI-Guided Percutaneous Interventions. IEEE Access. 2021 Nov 15;9:161055-68.

6. Mehrtash A, Ghafoorian M, Pernelle G, Ziaei A, Heslinga FG, Tuncali K, Fedorov A, Kikinis R, Tempany CM, Wells WM, Abolmaesumi P. Automatic needle segmentation and localization in MRI with 3-D convolutional neural networks: application to MRI-targeted prostate biopsy. IEEE transactions on medical imaging. 2018 Oct 18;38(4):1026-36.

7. Faust JF, Krafft AJ, Polak D, Vogel R, Speier P, Behl NG, Ladd ME, Maier F. Fast 3D Passive Needle Localization for MR-Guided Interventions using Radial White Marker Acquisitions and CNN Postprocessing. Proceedings of the 30th Annual Meeting of ISMRM, 2022, (p.1196).

8. Weine J, Breton E, Garnon J, Gangi A, Maier F. Deep learning based needle localization on real-time MR images of patients acquired during MR-guided percutaneous interventions. Proceedings of the 27th Annual Meeting of ISMRM, 2019, (p. 973).

9. Monfaredi R, Cleary K, Sharma K. MRI robots for needle-based interventions: systems and technology. Annals of biomedical engineering. 2018 Oct;46(10):1479-97.

10. Lee YH, Li X, Simonelli J, Lu D, Wu HH, Tsao TC. Adaptive tracking control of one-dimensional respiration induced moving targets by real-time magnetic resonance imaging feedback. IEEE/ASME Transactions on Mechatronics. 2020 May 28;25(4):1894-903.

11. Pieper S, Halle M, Kikinis R. 3D Slicer. In: 2004 2nd IEEE international symposium on biomedical imaging: nano to macro (IEEE Cat No. 04EX821) 2004 Apr 18 (pp. 632-635). IEEE.

12. Li X, Lee YH, Lu D, Tsao TC, Wu HH. Deep learning-driven automatic scan plane alignment for needle tracking in MRI-guided interventions. Proceedings of the 29th Annual Meeting of ISMRM, 2021, (p. 861).

13. Moche M, Heinig S, Garnov N, Fuchs J, Petersen TO, Seider D, Brandmaier P, Kahn T, Busse H. Navigated MRI-guided liver biopsies in a closed-bore scanner: experience in 52 patients. European radiology. 2016 Aug;26(8):2462-70.

14. Parekh PJ, Majithia R, Diehl DL, Baron TH. Endoscopic ultrasound-guided liver biopsy. Endoscopic ultrasound. 2015 Apr;4(2):85.

Figures