0939

MRI-based Direct PET Attenuation Correction Using Cross-modality Attention Network for Combined PET/MRI

1Emory University, Atlanta, GA, United States; 2Memorial Sloan Kettering Cancer Center, New York, NY, United States; 3GE Healthcare, Atlanta, GA, United States; 4Icahn School of Medicine at Mount Sinai, New York, NY, United States

Synopsis

Keywords: PET/MR, Machine Learning/Artificial Intelligence

This study aims to develop an efficient and clinically applicable deep-learning method that uses routine T1-weighted MRI and non-AC PET to directly synthesize high-quality attenuation-corrected PET, without the need for an attenuation coefficient map, for simultaneous PET/MRI.

Introduction

Using MRI data to derive accurate attenuation correction maps for PET/MRI remains a challenging problem, especially for individual patients with specific tissue properties and structural abnormalities [1]. Conventional template- and atlas-based segmentation methods are not patient-specific and cannot properly account for interpatient anatomical variability, which leads to registration errors [2,3]. Because PET and MRI data are acquired simultaneously in a PET/MRI scanner, structural MRI data from clinical sequences can directly guide PET attenuation correction (AC). This work proposes a cross-modality attention pyramid network that uses routine T1-weighted MRI to directly aid PET AC without the need for an attenuation coefficient map.

Methods

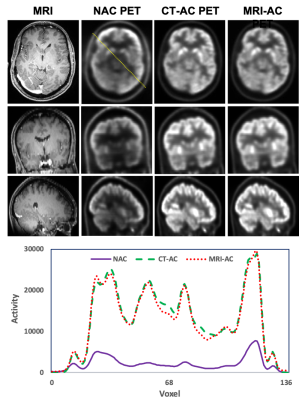

We used a cross-modality attention pyramid network in which T1-weighted anatomic MRI acquired during the PET/MRI scan aids the direct estimation of attenuation-corrected (AC) PET images from non-AC (NAC) PET. The proposed network integrated a self-attention strategy into a pyramid network for deep salient feature extraction. Two dedicated sub-networks with the same pyramid architecture extracted independent, relevant features from NAC PET and from MRI for the prediction of AC PET images. The output of each sub-network was compared with the ground truth to calculate two independent pyramid feature loss terms: the NAC PET-only loss and the MRI-only loss. Because the cross-modal deep feature maps obtained from the two sub-networks are complementary, they were combined in a late fusion sub-network for the final AC PET estimation. In the late fusion sub-network, the learned feature maps of each corresponding pyramid level were first concatenated and then highlighted via attention gates. The final loss was calculated by comparing the final PET estimation with the ground truth. PET images from PET/CT scans of the same subjects, reconstructed with CT-based AC, were used as the ground truth for network training.

In the inference stage, paired NAC PET and MR image patches were first extracted from the original images. These patch pairs were then fed into the trained cross-modal network to obtain synthetic AC PET patches. Finally, the synthetic AC PET patches were fused to form the final corrected PET images, which were compared with the ground-truth CT-based AC PET images for evaluation.

Brain T1-weighted MRI and PET/CT images from 55 subjects were used to train and test the proposed network. The trained network was evaluated using leave-five-out cross-validation.

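
As a minimal sketch of the leave-five-out cross-validation mentioned above, the following splits 55 subjects into 11 folds, each holding out 5 subjects for testing and training on the remaining 50. The function name `leave_k_out_folds`, the subject identifiers, and the seeded shuffle are illustrative assumptions; the abstract does not state how subjects were assigned to folds.

```python
import random


def leave_k_out_folds(subject_ids, k=5, seed=0):
    """Return a list of (train_ids, test_ids) pairs.

    Each fold holds out k subjects; every subject is held out exactly once
    when len(subject_ids) is a multiple of k. The shuffle is an assumption,
    not something the abstract specifies.
    """
    ids = list(subject_ids)
    random.Random(seed).shuffle(ids)  # reproducible, hypothetical ordering
    folds = []
    for start in range(0, len(ids), k):
        test_ids = ids[start:start + k]
        held_out = set(test_ids)
        train_ids = [s for s in ids if s not in held_out]
        folds.append((train_ids, test_ids))
    return folds


# Hypothetical identifiers for the 55 subjects.
subjects = [f"subject_{i:02d}" for i in range(1, 56)]
folds = leave_k_out_folds(subjects, k=5)
print(len(folds))  # 55 subjects / 5 held out per fold = 11 folds
```

Under this scheme the network would be retrained for each fold, so every subject's AC PET estimate comes from a model that never saw that subject during training.
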
Standardized uptake value differences between the AC PET images from MRI-based AC and the ground truth from the PET/CT of the same patients were quantitatively evaluated using mean absolute error (MAE), mean error (ME), and normalized cross-correlation (NCC). Statistical analysis was performed to compare the MRI-aided AC PET estimation with the ground truth.

Results
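
The three metrics named in the Methods (MAE, ME, and NCC) can be sketched as follows for two lists of voxel values. This is an illustration under stated assumptions, not the authors' implementation: the errors are expressed as a percentage of the mean reference value, and NCC is taken as the zero-mean normalized cross-correlation. The abstract reports MAE and ME in percent but does not specify either normalization, and the function names are hypothetical.

```python
import math


def mean_error_percent(pred, ref):
    """Signed mean difference as a percentage of the mean reference value
    (assumed normalization)."""
    n = len(ref)
    ref_mean = sum(ref) / n
    return 100.0 * (sum(p - r for p, r in zip(pred, ref)) / n) / ref_mean


def mean_absolute_error_percent(pred, ref):
    """Mean absolute difference as a percentage of the mean reference value
    (assumed normalization)."""
    n = len(ref)
    ref_mean = sum(ref) / n
    return 100.0 * (sum(abs(p - r) for p, r in zip(pred, ref)) / n) / ref_mean


def normalized_cross_correlation(pred, ref):
    """Zero-mean normalized cross-correlation; 1.0 means perfectly
    linearly related images (one common definition, assumed here)."""
    n = len(ref)
    pred_mean = sum(pred) / n
    ref_mean = sum(ref) / n
    num = sum((p - pred_mean) * (r - ref_mean) for p, r in zip(pred, ref))
    den = math.sqrt(
        sum((p - pred_mean) ** 2 for p in pred)
        * sum((r - ref_mean) ** 2 for r in ref)
    )
    return num / den


# Toy voxel values, for illustration only (not study data).
reference = [1.0, 2.0, 3.0, 4.0]
estimate = [1.1, 1.9, 3.2, 3.8]
mae = mean_absolute_error_percent(estimate, reference)
me = mean_error_percent(estimate, reference)
ncc = normalized_cross_correlation(estimate, reference)
```

ME keeps the sign of the difference, so over- and under-estimation can cancel, whereas MAE does not; reporting both separates systematic bias from overall error magnitude.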

The attenuation-corrected PET images generated from the PET/MRI data with the proposed deep learning method matched the ground truth images obtained from PET/CT well. The average MAE, ME, and NCC between the MRI-aided AC PET and CT-based AC PET images were 4.57±0.83%, -1.87±0.43%, and 0.95±0.04, respectively. The average ME ranged from -4.27% to 2.29% across all contoured volumes of interest. There was no significant difference between the MRI-aided AC PET images and the reference PET/CT images.

Discussion

A challenge with PET/MR compared to PET/CT is that MR images cannot be directly related to electron density and therefore cannot be directly converted to 511 keV attenuation coefficients for use in attenuation correction. The reason is that MR voxel intensity is related to proton density rather than electron density, and no one-to-one relationship exists between the two. The proposed method instead integrates MR directly into the AC PET estimation network. Unlike conventional MR-based AC methods, which synthesize CT images from MR images to generate AC maps [1], the proposed method makes use of the superior soft-tissue contrast and anatomical information provided by MRI but does not rely on MRI for AC map calculation; it is therefore less prone to MRI artifacts and image misalignment. The approach leverages the MRI data acquired simultaneously in the PET/MRI scan to improve AC PET estimation. This technique could be used for PET attenuation and partial volume correction in integrated PET/MRI imaging applications.

Conclusion

We have investigated a novel deep learning-based PET AC method that directly uses MRI. Experimental validation demonstrated its clinical feasibility and a PET AC accuracy comparable to that of conventional CT-derived PET AC. This technique could be a useful tool for attenuation correction when quantifying PET images on a PET/MRI scanner.

Acknowledgements

No acknowledgement found.

References

1. Yang X, Wang T, Lei Y, et al. MRI-based attenuation correction for brain PET/MRI based on anatomic signature and machine learning. Phys Med Biol. 2019 Jan 7;64(2):025001. doi: 10.1088/1361-6560/aaf5e0. PMID: 30524027; PMCID: PMC7773209.

2. Dong X, Wang T, Lei Y, et al. Synthetic CT generation from non-attenuation corrected PET images for whole-body PET imaging. Phys Med Biol. 2019 Nov 4;64(21):215016. doi: 10.1088/1361-6560/ab4eb7. PMID: 31622962; PMCID: PMC7759014.

3. Dong X, Lei Y, Wang T, et al. Deep learning-based attenuation correction in the absence of structural information for whole-body positron emission tomography imaging. Phys Med Biol. 2020 Mar 2;65(5):055011. doi: 10.1088/1361-6560/ab652c. PMID: 31869826; PMCID: PMC7099429.