0814

Segmentation of Brain Structures in MR Images via Semi-Supervised Learning

1Center for Biomedical Imaging Research, School of Medicine, Tsinghua University, Beijing, China; 2School of Life Science, Beijing Institute of Technology, Beijing, China

Synopsis

Keywords: Segmentation, Brain

Precise segmentation of brain structures is essential for the quantitative analysis of brain magnetic resonance images. In recent years, researchers have increasingly applied semi-supervised learning to medical image segmentation, because it can exploit abundant unlabeled data. Building on this idea, we modified a semi-supervised segmentation model to segment brain structures automatically. We tested the model on two public hippocampus datasets. The results show that its segmentation performance is comparable to that of a model trained on fully labeled data in a supervised manner, which demonstrates the effectiveness and potential of our model.

Introduction

Quantitative analysis of brain magnetic resonance images (MRI) has been widely used in the diagnosis, surgical planning, and postoperative analysis of brain diseases1,2 such as Alzheimer's disease3, Parkinson's disease4, and epilepsy5. Precise tissue segmentation is one of the key steps in this analysis. Supervised deep learning algorithms have shown outstanding performance in image segmentation2. However, their performance relies on abundant labeled images6,7, and the scarcity of high-quality labeled data limits supervised learning in medical image segmentation. Semi-supervised learning algorithms can exploit the abundant unlabeled medical images and still obtain good segmentation results8, which helps address this problem. For example, Zeng et al.9 designed a reciprocal learning framework to segment the pancreas and the left atrium. Their results show that the teacher and student models benefit from each other and generate more reliable pseudo labels. Building on this work, we modified their model and, to our knowledge, applied it to brain structure segmentation in MRI for the first time, with the aim of reducing manual annotation effort.

Methods

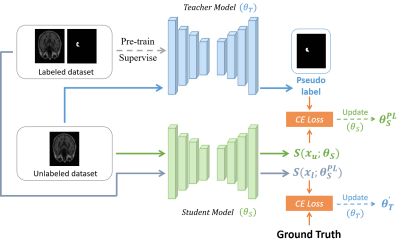

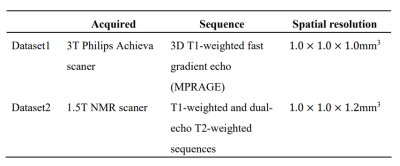

Model: The modified model consists of a pair of sub-models, a Teacher model and a Student model (Figure 1). Both sub-models use V-Net as the backbone, because V-Net was specifically designed for segmenting 3D volumetric medical images10. Training proceeds in four main stages:

(1) Pre-training. A model is first trained on the labeled dataset in a supervised manner. This pre-trained model, which we call the Pre-model, serves as the initial Teacher model.

(2) Pseudo-label generation. The Teacher model's predictions on the unlabeled dataset are used as pseudo labels.

(3) Student update. The cross-entropy (CE) loss between the Student model's predictions on the unlabeled dataset and the pseudo labels is computed. This loss is minimized with stochastic gradient descent (SGD) to update the Student model's parameters.

(4) Teacher update. The Student model segments the labeled dataset, and its predictions are compared with the ground truth (GT) to compute a CE loss. This loss is used to optimize the Teacher model's parameters, so that the Teacher model generates pseudo labels closer to the GT.

These steps are repeated until training converges. In addition, we train a model on the fully labeled data in a supervised manner. This model, named the Refer-model, serves as a reference.

Datasets: Two hippocampus MRI datasets are used to verify the effectiveness of the model (Table 1). Dataset1 (ref. 11) comes from the Psychiatric Genotype/Phenotype Project at Vanderbilt University Medical Center. It includes 90 healthy adults and 105 adults with non-affective psychotic disorders (schizoaffective disorder and schizophrenia). Dataset2 (ref. 12) includes 100 cases with Alzheimer's disease. After preprocessing, 100 cases from each dataset are available for training and testing.
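The four training stages above can be illustrated with a deliberately tiny, self-contained sketch. It is not the authors' implementation, and several assumptions are made:

- V-Net is replaced by a hypothetical one-feature logistic "segmenter" that maps a voxel intensity to a foreground probability.
- The Teacher emits soft pseudo labels. The abstract does not say whether the pseudo labels are soft or hard.
- Stage (4) is realized as the exact gradient of the updated Student's labeled-set CE loss with respect to the Teacher's parameters. This gradient is obtained by the chain rule through one Student SGD step, a meta-pseudo-label style update swapped in here to make the Teacher update concrete.

All function names, data, and hyperparameters are illustrative.

```python
import math

# Hypothetical toy stand-in for V-Net: theta = (w, b) maps a voxel
# intensity x to a foreground probability sigmoid(w * x + b).

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(theta, x):
    w, b = theta
    return sigmoid(w * x + b)

def ce_loss(theta, xs, targets):
    """Mean binary cross-entropy; targets may be hard (0/1) or soft probabilities."""
    total = 0.0
    for x, t in zip(xs, targets):
        p = predict(theta, x)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(xs)

def ce_grad(theta, xs, targets):
    """Gradient of ce_loss w.r.t. (w, b): mean of (p - t) * (x, 1)."""
    gw = gb = 0.0
    for x, t in zip(xs, targets):
        r = predict(theta, x) - t
        gw += r * x
        gb += r
    return (gw / len(xs), gb / len(xs))

def sgd(theta, grad, lr):
    return (theta[0] - lr * grad[0], theta[1] - lr * grad[1])

def student_step(student, teacher, xs_u, lr_s):
    """Stages (2)-(3): Teacher soft pseudo labels, then one Student SGD step on their CE loss."""
    pseudo = [predict(teacher, x) for x in xs_u]
    return sgd(student, ce_grad(student, xs_u, pseudo), lr_s)

def teacher_grad(student, teacher, xs_u, xs_l, ys_l, lr_s):
    """Stage (4): gradient w.r.t. the Teacher of the updated Student's CE loss vs. GT."""
    new_student = student_step(student, teacher, xs_u, lr_s)
    g = ce_grad(new_student, xs_l, ys_l)  # dL_labeled / d(new_student)
    gw = gb = 0.0
    for x in xs_u:
        q = predict(teacher, x)
        # Chain rule: d(new_student)/dq = (lr_s / N_u) * (x, 1)
        #             dq/d(teacher)     = q * (1 - q) * (x, 1)
        c = (g[0] * x + g[1]) * (lr_s / len(xs_u)) * q * (1.0 - q)
        gw += c * x
        gb += c
    return (gw, gb)

def train(xs_l, ys_l, xs_u, pretrain_steps=100, iters=200, lr_s=0.5, lr_t=0.5):
    # Stage (1): supervised pre-training; the result (the "Pre-model") is the initial Teacher.
    teacher = (0.0, 0.0)
    for _ in range(pretrain_steps):
        teacher = sgd(teacher, ce_grad(teacher, xs_l, ys_l), lr_t)
    student = (0.0, 0.0)
    for _ in range(iters):
        # The Teacher gradient is taken at the Student *before* its update.
        t_grad = teacher_grad(student, teacher, xs_u, xs_l, ys_l, lr_s)
        student = student_step(student, teacher, xs_u, lr_s)
        teacher = sgd(teacher, t_grad, lr_t)
    return teacher, student

if __name__ == "__main__":
    xs_l, ys_l = [-2.0, -1.5, -1.0, 1.0, 1.5, 2.0], [0, 0, 0, 1, 1, 1]
    xs_u = [-3.0, -2.5, -0.5, 0.5, 2.5, 3.0]
    teacher, student = train(xs_l, ys_l, xs_u)
    print("teacher", teacher, "student", student)
```

Only the Student is returned for inference, which mirrors the evaluation protocol. A central finite-difference check of `teacher_grad` against `ce_loss(student_step(...))` is a quick way to confirm the chain rule before scaling the idea up to a real network with automatic differentiation.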
Each dataset is split into a training set and a test set at a ratio of 4:1. The training set is further split into unlabeled and labeled data at a ratio of 4:1.
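With 100 preprocessed cases per dataset, the two 4:1 splits give 80 training cases and 20 test cases. The 80 training cases then divide into 64 unlabeled and 16 labeled cases. The sketch below assumes a random, case-level split with a fixed seed, because the abstract does not state how cases were assigned. `split_cases` is a hypothetical helper.

```python
import random

def split_cases(case_ids, seed=0):
    """Hypothetical helper: 4:1 train/test split, then 4:1 unlabeled/labeled split of training."""
    ids = list(case_ids)
    random.Random(seed).shuffle(ids)      # assumed random case-level assignment
    n_test = len(ids) // 5                # 1 of every 5 cases -> test set
    test, train = ids[:n_test], ids[n_test:]
    n_labeled = len(train) // 5           # 1 of every 5 training cases -> labeled
    labeled, unlabeled = train[:n_labeled], train[n_labeled:]
    return unlabeled, labeled, test

unlabeled, labeled, test = split_cases(range(100))
print(len(unlabeled), len(labeled), len(test))  # 64 16 20
```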

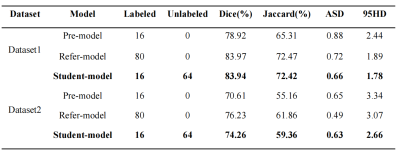

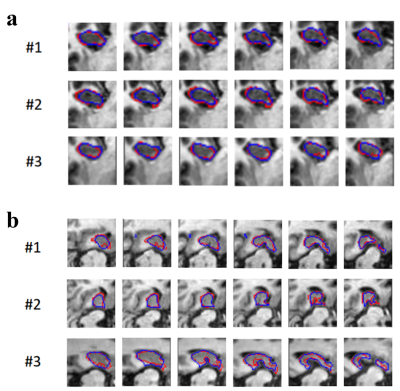

Evaluation metrics: In the inference stage, only the Student model is tested to evaluate the performance of the modified model. We use the Dice coefficient, the Jaccard index, the average surface distance (ASD), and the 95th-percentile Hausdorff distance (95HD) to quantify its performance. Dice and Jaccard measure the overlap between the segmentation and the GT, whereas ASD and 95HD measure the distance between their boundaries. Therefore, higher Dice and Jaccard values and lower ASD and 95HD values indicate better segmentation13,14. For visualization, three of the twenty test cases were randomly selected. For each case, six slices were chosen in which the hippocampal cross-section is near its maximum size.
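The four metrics are only named above, so the sketch below spells out one common set of definitions for binary masks stored as sets of voxel coordinates. It makes these assumptions:

- Voxels are isotropic with 1 mm spacing, and masks are nonempty.
- Surface voxels are defined by 6-connectivity.
- ASD and 95HD are computed symmetrically, by pooling surface distances in both directions.

Conventions differ between toolkits, and the abstract does not say which one was used. Brute-force nearest-neighbor search keeps the sketch short; real volumes would call for a distance transform.

```python
import math

def dice(a, b):
    """Dice = 2|A & B| / (|A| + |B|); a and b are sets of (x, y, z) voxel indices."""
    return 2.0 * len(a & b) / (len(a) + len(b))

def jaccard(a, b):
    """Jaccard = |A & B| / |A | B| (intersection over union)."""
    return len(a & b) / len(a | b)

NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def surface(mask):
    """Foreground voxels with at least one 6-connected background neighbor."""
    return {v for v in mask
            if any((v[0] + dx, v[1] + dy, v[2] + dz) not in mask
                   for dx, dy, dz in NEIGHBORS)}

def directed_distances(src, dst):
    """For each surface voxel in src, Euclidean distance to the nearest surface voxel in dst."""
    return [min(math.dist(p, q) for q in dst) for p in src]

def percentile(values, q):
    """Linear-interpolation percentile (the same convention as NumPy's default)."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)

def surface_metrics(seg, gt):
    """Symmetric ASD and 95HD in voxel units (isotropic 1 mm spacing assumed)."""
    s_seg, s_gt = surface(seg), surface(gt)
    d = directed_distances(s_seg, s_gt) + directed_distances(s_gt, s_seg)
    return sum(d) / len(d), percentile(d, 95)
```

For example, a 3x3x3 cube compared with a copy shifted by one voxel gives a Dice of 2/3 and a Jaccard of 0.5. Identical masks give ASD = 95HD = 0.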

Results

Table 2 compares the three models on Dataset1 and Dataset2. The Student model performs substantially better than the Pre-model, and its performance is comparable to that of the Refer-model. We also visualize 2D slices to compare the results with the GT more intuitively (Figure 2). The segmentations of the modified model are very close to the GT, which shows the effectiveness of the modified model. During training, the Teacher model generates high-quality pseudo labels and converges, which gives the Student model better segmentation performance (Figure 3). Together, these experiments indicate that the semi-supervised model clearly improves on the Pre-model and approaches the fully supervised Refer-model.

Discussion and Conclusion

In this study, we modified a semi-supervised model and, to our knowledge, applied it to brain structure segmentation for the first time. The model is trained on a small amount of labeled data and a large amount of unlabeled data. It achieves segmentation performance comparable to that of a model trained on fully labeled data in a supervised manner. Our model can therefore save experts and radiologists considerable manual annotation time and reduce intra- and inter-group bias in the GT. In future work, we plan to include datasets of other brain structures to explore the robustness and generalization of the model.

Acknowledgements

None.

References

1. Akkus Z, Galimzianova A, Hoogi A, et al. Deep learning for brain MRI segmentation: state of the art and future directions[J]. Journal of Digital Imaging, 2017, 30(4): 449-459.

2. González-Villà S, Oliver A, Valverde S, et al. A review on brain structures segmentation in magnetic resonance imaging[J]. Artificial Intelligence in Medicine, 2016, 73: 45-69.

3. Moradi E, Pepe A, Gaser C, et al. Machine learning framework for early MRI-based Alzheimer's conversion prediction in MCI subjects[J]. NeuroImage, 2015, 104: 398-412.

4. Boutet A, Madhavan R, Elias G J B, et al. Predicting optimal deep brain stimulation parameters for Parkinson's disease using functional MRI and machine learning[J]. Nature Communications, 2021, 12(1): 1-13.

5. Ahmed R, Rubinger L, Go C, et al. Utility of additional dedicated high-resolution 3T MRI in children with medically refractory focal epilepsy[J]. Epilepsy Research, 2018, 143: 113-119.

6. Barragán-Montero A, Javaid U, Valdés G, et al. Artificial intelligence and machine learning for medical imaging: A technology review[J]. Physica Medica, 2021, 83: 242-256.

7. Huang Z, Li Q, Lu J, et al. Recent advances in medical image processing[J]. Acta Cytologica, 2021, 65(4): 310-323.

8. Cheplygina V, de Bruijne M, Pluim J P W. Not-so-supervised: a survey of semi-supervised, multi-instance, and transfer learning in medical image analysis[J]. Medical Image Analysis, 2019, 54: 280-296.

9. Zeng X, Huang R, Zhong Y, et al. Reciprocal learning for semi-supervised segmentation[C]//Medical Image Computing and Computer Assisted Intervention (MICCAI 2021). Springer, Cham, 2021.

10. Milletari F, Navab N, Ahmadi S-A. V-Net: fully convolutional neural networks for volumetric medical image segmentation[C]//2016 Fourth International Conference on 3D Vision (3DV), 2016: 565-571.

11. Simpson A L, Antonelli M, Bakas S, et al. A large annotated medical image dataset for the development and evaluation of segmentation algorithms[J]. arXiv preprint arXiv:1902.09063, 2019.

12. Malekzadeh S. MRI hippocampus segmentation. Kaggle, 2019; Malekzadeh S. MRI hippocampus segmentation using deep learning autoencoders, 2020.

13. Yeghiazaryan V, Voiculescu I. An overview of current evaluation methods used in medical image segmentation[J]. Department of Computer Science, University of Oxford, 2015.

14. Karimi D, Salcudean S E. Reducing the Hausdorff distance in medical image segmentation with convolutional neural networks[J]. IEEE Transactions on Medical Imaging, 2019, 39(2): 499-513.

Figures

Table 2. Quantitative test results on the two hippocampus datasets. In the inference stage, only the Student model was tested and compared with the Pre-model and the Refer-model.

Figure 3. To better analyze the behavior of the model, we visualize the GT, the pseudo labels, and the Student model's predictions on the unlabeled training sets of (a) Dataset1 and (b) Dataset2. The pseudo labels are close to the GT, so under the Teacher model's guidance the Student model's predictions also approach the GT.