0328

An AI-based Pipeline for Prostate Cancer PI-RADS Reporting on Multiparametric MRI using MiniSegCaps Network

Department of Diagnostic Radiology, The University of Hong Kong, Hong Kong, Hong Kong

Synopsis

Keywords: Multimodal, Cancer

The Prostate Imaging Reporting and Data System (PI-RADS) for multiparametric MRI (mpMRI) provides fundamental interpretation guidelines but suffers from inter-reader variability. Deep learning networks show great promise for automatic lesion segmentation and classification, which can ease the burden on radiologists and reduce inter-reader variability. In this study, we proposed MiniSegCaps, a novel multi-branch network for prostate cancer segmentation and PI-RADS classification on mpMRI, together with a graphical user interface (GUI) integrated into the clinical workflow for diagnostic report generation. Our model achieved the best prostate cancer segmentation and PI-RADS classification performance among the state-of-the-art methods compared.

Introduction

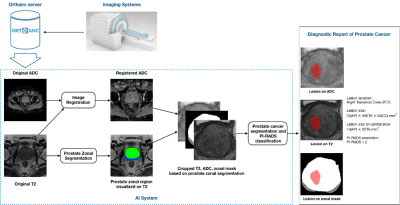

MRI is the primary imaging modality for diagnosing prostate cancer. The Prostate Imaging Reporting and Data System (PI-RADS) for multiparametric MRI (mpMRI) provides fundamental interpretation guidelines but suffers from inter-reader variability [1]. Deep learning networks show great promise for automatic lesion segmentation and classification, which can ease the burden on radiologists and reduce inter-reader variability. Recent studies have shown the feasibility of detecting prostate cancer on mpMRI with deep neural networks [2–5]. However, few have considered the spatial relationship between prostate cancer and the surrounding anatomical structures, which could help distinguish cancer from other tissues. Moreover, most of these methods require a large amount of annotated data, which is costly and difficult to acquire in practice. The Capsule Network (CapsNet) [6] can help mitigate data starvation in deep learning-based medical image analysis because of its promising equivariance properties: it represents the spatial/viewpoint variability of an object in capsule (i.e., vector) format [7]. In this study, we proposed a pipeline that includes a novel multi-task network, MiniSegCaps, for end-to-end prostate cancer segmentation and PI-RADS classification on mpMRI, and a graphical user interface (GUI) for diagnostic report generation (Fig. 1).

Methods

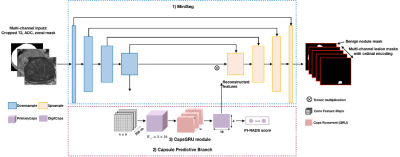

With institutional review board approval, this retrospective study included 462 patients with a PI-RADS score ≥ 1 who underwent prebiopsy MRI and prostate biopsy; these cases were used for network training and evaluation, and each has both T2w and ADC images. Lesion contours and PI-RADS scores were annotated on T2w and ADC images based on clinical reports to serve as ground truth (GT). As shown in Fig. 1, zonal masks of the prostate on T2w images were obtained using a pretrained UNet, which achieved an average Dice of 0.89 on two public datasets (PROSTATEx [8] and NCI-ISBI 2013 [9]). Preprocessing includes resampling, intensity normalization, cropping to the prostate region based on the zonal masks, and registration between T2w and ADC images, among other steps.

As shown in Fig. 2, MiniSegCaps is a multi-task network with two predictive branches for segmenting and classifying lesions. Its 3-channel input is the concatenation of the T2w image, the ADC image, and the zonal mask. The MiniSeg [10] branch outputs the lesion segmentation together with a PI-RADS prediction, supervised by ordinally encoded lesion and benign prostatic hyperplasia (BPH) masks [11, 12]. A second output branch with two capsule layers (caps-branch) is attached to the end of the encoder to predict lesion malignancy. It is designed to exploit the spatial relationship between prostate cancer and anatomical structures, such as the zonal location of the lesion, and its equivariance properties also reduce the training sample size required. Feature maps reconstructed by three fully connected (FC) layers [6] placed after the last convolutional capsule layer of the caps-branch are also fed into the decoder as an attention map to improve the decoder branch. In addition, a gated recurrent unit (GRU) is adopted to exploit spatial information across slices and improve through-plane consistency. Unlike the conventional GRU-UNet approach, which applies the GRU to feature maps, our CapsGRU operates on capsules.
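The target encoding and the two prediction heads can be illustrated with a minimal NumPy sketch. The abstract does not specify the exact encoding, so everything here is an assumption rather than the authors' implementation: the helper names are hypothetical, the lesion target is assumed to be an integer PI-RADS map (0 = background) expanded into five cumulative binary channels in the style of ordinal regression, the BPH channel is omitted, and the caps-branch is assumed to end in five class capsules whose lengths, after the `squash` non-linearity of Sabour et al. [6], act as per-class PI-RADS probabilities.

```python
import numpy as np

NUM_PIRADS = 5  # assumption: PI-RADS 1..5, one ordinal channel / class capsule each


def ordinal_encode(pirads_map, k=NUM_PIRADS):
    """Hypothetical cumulative encoding: channel j is 1 where the score is >= j + 1.

    A PI-RADS 3 pixel becomes [1, 1, 1, 0, 0]; background (0) becomes all zeros.
    """
    thresholds = np.arange(1, k + 1).reshape(-1, 1, 1)
    return (pirads_map[None, ...] >= thresholds).astype(np.float32)


def ordinal_decode(channel_probs, threshold=0.5):
    """Recover a per-pixel PI-RADS map by counting channels above the threshold.

    Any nonzero output pixel belongs to the predicted lesion mask.
    """
    return (channel_probs > threshold).sum(axis=0)


def squash(s, axis=-1, eps=1e-8):
    """Capsule non-linearity (Sabour et al., 2017): keeps direction, maps length into [0, 1)."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)


def capsule_pirads(class_capsules):
    """Hypothetical caps-branch readout; class_capsules has shape (NUM_PIRADS, D).

    Returns the PI-RADS score of the longest capsule and all capsule lengths.
    """
    lengths = np.linalg.norm(squash(class_capsules), axis=-1)
    return int(np.argmax(lengths)) + 1, lengths


# Usage: round-trip a toy 2x2 PI-RADS map and read a score from toy capsules.
pirads = np.array([[0, 3], [5, 1]])
target = ordinal_encode(pirads)            # shape (5, 2, 2)
recovered = ordinal_decode(target)         # equals `pirads`

capsules = np.zeros((NUM_PIRADS, 16))      # 16-D capsules, as in Sabour et al.
capsules[3, 0] = 3.0                       # only the PI-RADS 4 capsule is active
score, lengths = capsule_pirads(capsules)  # score == 4, lengths[3] is about 0.9
```

The cumulative encoding makes neighbouring PI-RADS categories share most of their target channels, so confusing PI-RADS 4 with 5 is penalized less than confusing 1 with 5; whether MiniSegCaps uses exactly this variant is not stated in the abstract.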
To further aid radiologists in prostate cancer diagnosis in clinical practice, we also designed a GUI, integrated into the overall workflow, that automatically generates prostate cancer diagnostic reports. Each report contains the predicted lesion mask, lesion visualizations on T2w and ADC images, the predicted probability of each PI-RADS category, and the location and dimensions of each lesion. The lesion location (peripheral or transition zone; left or right) was derived from the intersection between the predicted lesion mask and the zonal mask, and from the position of the lesion relative to the image midpoint.
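The location rule above can be sketched per slice in NumPy. This is an illustration under assumptions, not the GUI's actual code: the zonal label values (1 = peripheral zone, 2 = transition zone), the pixel spacing, and the function name are hypothetical. The zone is taken as whichever zone overlaps the lesion most, and the side is judged from the lesion centroid relative to the image's central column, with the patient's right shown on the image left under the radiological display convention.

```python
import numpy as np

PZ_LABEL, TZ_LABEL = 1, 2  # hypothetical zonal-mask label values


def describe_lesion(lesion_mask, zonal_mask, pixel_spacing_mm=(0.5, 0.5), radiological=True):
    """Summarize the zone, side and in-plane extent of one lesion on a 2D slice."""
    lesion = lesion_mask > 0
    if not lesion.any():
        raise ValueError("lesion mask is empty")

    # Zone: whichever zone shares more pixels with the lesion (ties -> peripheral zone).
    pz_overlap = np.count_nonzero(lesion & (zonal_mask == PZ_LABEL))
    tz_overlap = np.count_nonzero(lesion & (zonal_mask == TZ_LABEL))
    zone = "peripheral zone" if pz_overlap >= tz_overlap else "transition zone"

    # Side: lesion centroid column versus the central column of the image.
    rows, cols = np.nonzero(lesion)
    center_col = (lesion_mask.shape[1] - 1) / 2.0
    on_image_left = bool(cols.mean() < center_col)
    # Radiological convention: the patient's right appears on the image left.
    side = "right" if on_image_left == radiological else "left"

    # Extent: bounding-box height and width converted to millimetres.
    height_mm = float((rows.max() - rows.min() + 1) * pixel_spacing_mm[0])
    width_mm = float((cols.max() - cols.min() + 1) * pixel_spacing_mm[1])
    return {"zone": zone, "side": side, "size_mm": (height_mm, width_mm)}


# Usage on a toy 10x10 slice: TZ in rows 2-5, PZ in rows 6-9.
zonal = np.zeros((10, 10), dtype=int)
zonal[2:6, :] = TZ_LABEL
zonal[6:10, :] = PZ_LABEL
lesion_slice = np.zeros((10, 10), dtype=int)
lesion_slice[7:9, 1:4] = 1
report = describe_lesion(lesion_slice, zonal)
# -> {'zone': 'peripheral zone', 'side': 'right', 'size_mm': (1.0, 1.5)}
```

A 3D version would aggregate overlaps over all slices and report the extent along each axis; the per-slice form is kept here for brevity.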

Results

MiniSegCaps was trained and evaluated with fivefold cross-validation. The baseline methods were U-Net [13], attention U-Net [14], U-Net++ [15], SegNet [16], MiniSeg [10], and FocalNet [12]. For a fair comparison with MiniSegCaps, all baselines were supervised with ordinally encoded GT segmentations during training, and FocalNet used the same MiniSeg backbone as our network. On 93 test cases, our model achieved a Dice coefficient of 0.712 for lesion segmentation and, in patient-level evaluation, 89.18% accuracy and 92.52% sensitivity for PI-RADS classification (PI-RADS ≥ 4), significantly outperforming the baseline methods. This indicates that integrating capsule layers enables MiniSegCaps to better distinguish prostate cancer from normal tissue by learning the spatial relationship between prostate cancer and the surrounding anatomical structures.
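The evaluation protocol can be sketched as follows. This is a hedged illustration rather than the authors' evaluation code: it assumes patient-level folds drawn from a random permutation (which, for 462 patients, yields test folds of 93 or 92 cases), a patient-level score equal to the highest PI-RADS value predicted anywhere in the volume, and binarization at PI-RADS ≥ 4 before computing accuracy and sensitivity. All function names are hypothetical.

```python
import numpy as np


def patient_folds(num_patients, k=5, seed=0):
    """Split patient indices into k disjoint test folds (patient-level, no slice leakage)."""
    order = np.random.default_rng(seed).permutation(num_patients)
    return np.array_split(order, k)


def dice(pred, gt, eps=1e-8):
    """Dice similarity coefficient between two binary masks: 2|A∩B| / (|A| + |B|)."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    intersection = np.logical_and(pred, gt).sum()
    return float((2.0 * intersection + eps) / (pred.sum() + gt.sum() + eps))


def patient_score(pirads_map):
    """Assumed patient-level score: the highest PI-RADS value in the predicted map."""
    return int(np.max(pirads_map))


def patient_level_metrics(pred_scores, true_scores, threshold=4):
    """Accuracy and sensitivity after binarizing scores at PI-RADS >= threshold."""
    pred_pos = np.asarray(pred_scores) >= threshold
    true_pos = np.asarray(true_scores) >= threshold
    accuracy = np.mean(pred_pos == true_pos)
    sensitivity = np.sum(pred_pos & true_pos) / max(int(np.sum(true_pos)), 1)
    return float(accuracy), float(sensitivity)


# Usage with toy data.
folds = patient_folds(462)                   # fold sizes: 93, 93, 92, 92, 92
overlap = dice(np.array([[1, 1], [0, 0]]), np.array([[1, 0], [0, 0]]))  # about 2/3
scores = [patient_score(np.array([[0, 5], [2, 0]])), 4, 2, 3]           # [5, 4, 2, 3]
acc, sens = patient_level_metrics(scores, [5, 3, 4, 2])                 # (0.5, 0.5)
```

Splitting by patient rather than by slice keeps all slices of one volume in the same fold, which avoids optimistic estimates from near-duplicate adjacent slices.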

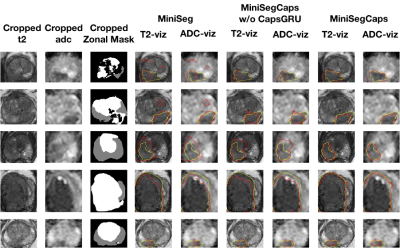

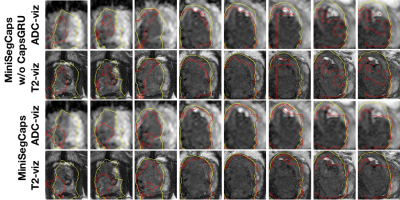

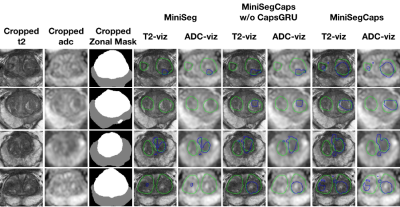

Fig. 3 shows a visual comparison of the cropped T2w image, cropped ADC map, cropped zonal mask, lesion ground truth, and lesion mask predicted by MiniSegCaps on T2w and ADC images. Our model produced satisfactory prostate cancer segmentations and revealed the spatial relationship between the zonal mask and the lesion on T2w and ADC images, which might aid lesion localization and classification. Segmentations were also consistent across adjacent slices within a volume, as shown in Fig. 4. MiniSegCaps outperformed its variant without CapsGRU, indicating that CapsGRU captured spatial information across adjacent slices as intended and improved prostate cancer segmentation. Moreover, our model achieved acceptable performance on BPH segmentation, as shown in Fig. 5.

Conclusion

MiniSegCaps jointly predicts lesion segmentation and PI-RADS classification. It achieved the best prostate cancer segmentation and PI-RADS classification performance among the compared state-of-the-art methods, especially for PI-RADS ≥ 3, which is highly important in clinical decision-making.

Acknowledgements

This project was supported by the Hong Kong Health and Medical Research Fund 07182706.

References

1. C. P. Smith et al., “Intra- and interreader reproducibility of PI-RADSv2: A multireader study,” J. Magn. Reson. Imaging, vol. 49, no. 6, pp. 1694–1703, 2019.

2. Y. Chen, L. Xing, L. Yu, H. P. Bagshaw, M. K. Buyyounouski, and B. Han, “Automatic intraprostatic lesion segmentation in multiparametric magnetic resonance images with proposed multiple branch UNet,” Med. Phys., vol. 47, no. 12, pp. 6421–6429, 2020.

3. A. Saha, M. Hosseinzadeh, and H. Huisman, “End-to-end prostate cancer detection in bpMRI via 3D CNNs: Effects of attention mechanisms, clinical priori and decoupled false positive reduction,” Med. Image Anal., vol. 73, p. 102155, 2021.

4. A. Saha, M. Hosseinzadeh, and H. Huisman, “Encoding clinical priori in 3D convolutional neural networks for prostate cancer detection in bpMRI,” arXiv preprint arXiv:2011.00263, 2020.

5. A. Seetharaman et al., “Automated detection of aggressive and indolent prostate cancer on magnetic resonance imaging,” Med. Phys., vol. 48, no. 6, pp. 2960–2972, 2021.

6. S. Sabour, N. Frosst, and G. E. Hinton, “Dynamic routing between capsules,” Adv. Neural Inf. Process. Syst., vol. 30, 2017.

7. A. Jiménez-Sánchez, S. Albarqouni, and D. Mateus, “Capsule networks against medical imaging data challenges,” in Intravascular imaging and computer assisted stenting and large-scale annotation of biomedical data and expert label synthesis, Springer, 2018, pp. 150–160.

8. S. G. Armato et al., “PROSTATEx Challenges for computerized classification of prostate lesions from multiparametric magnetic resonance images,” J. Med. Imaging, vol. 5, no. 4, p. 044501, 2018.

9. N. Bloch et al., “NCI-ISBI 2013 challenge: automated segmentation of prostate structures,” Cancer Imaging Arch., vol. 370, p. 6, 2015.

10. Y. Qiu, Y. Liu, S. Li, and J. Xu, “MiniSeg: An extremely minimum network for efficient COVID-19 segmentation,” arXiv preprint arXiv:2004.09750, 2020.

11. P. A. Gutiérrez, M. Perez-Ortiz, J. Sanchez-Monedero, F. Fernandez-Navarro, and C. Hervas-Martinez, “Ordinal regression methods: survey and experimental study,” IEEE Trans. Knowl. Data Eng., vol. 28, no. 1, pp. 127–146, 2015.

12. R. Cao et al., “Joint prostate cancer detection and Gleason score prediction in mp-MRI via FocalNet,” IEEE Trans. Med. Imaging, vol. 38, no. 11, pp. 2496–2506, 2019.

13. O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional networks for biomedical image segmentation,” in International Conference on Medical Image Computing and Computer-Assisted Intervention, 2015, pp. 234–241.

14. O. Oktay et al., “Attention U-Net: Learning where to look for the pancreas,” arXiv preprint arXiv:1804.03999, 2018.

15. Z. Zhou, M. M. Rahman Siddiquee, N. Tajbakhsh, and J. Liang, “UNet++: A nested U-Net architecture for medical image segmentation,” in Deep learning in medical image analysis and multimodal learning for clinical decision support, Springer, 2018, pp. 3–11.

16. V. Badrinarayanan, A. Handa, and R. Cipolla, “SegNet: A deep convolutional encoder-decoder architecture for robust semantic pixel-wise labelling,” arXiv preprint arXiv:1505.07293, 2015.

Figures

Fig. 1. Overview of the pipeline, which includes five main steps: 1) acquisition of T2W and DWI images in the clinical MRI scan session; 2) image preprocessing (registration, normalization, etc.); 3) zonal segmentation and cropping; 4) prostate cancer segmentation and classification; and 5) diagnostic report generation.

Fig. 2. Architecture of MiniSegCaps: 1) MiniSeg, a lightweight backbone network for lesion segmentation; 2) a capsule predictive branch that produces the PI-RADS score; 3) CapsGRU, which exploits spatial information across adjacent slices. MiniSeg extracts convolutional features and produces multi-channel cancer segmentations; features learned by the last downsampling block of MiniSeg serve as inputs to the caps-branch for PI-RADS classification; and CapsGRU takes the capsule feature stacks learned by PrimaryCaps as inputs to exploit inter-slice spatial information during learning.

Fig. 3. Visualization of lesion segmentation results among different cases. The yellow contour is the ground truth, and the red contours are from the deep learning predictions.

Fig. 4. Visualization of lesion segmentation results on eight slices from one case. The yellow contour is the ground truth, and the red contours are predictions of MiniSegCaps without and with CapsGRU. MiniSegCaps with CapsGRU delineates the prostate cancer contours across slices better than MiniSegCaps without CapsGRU.

Fig. 5. Visualization of benign nodule (e.g., BPH) segmentation results among different cases. The green contour is the ground truth, and the blue contours are from the deep learning predictions.