Vision Transformers in Medical Imaging

Bilkent University, Turkey

Synopsis

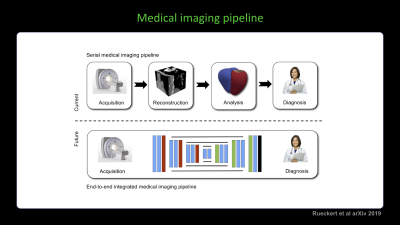

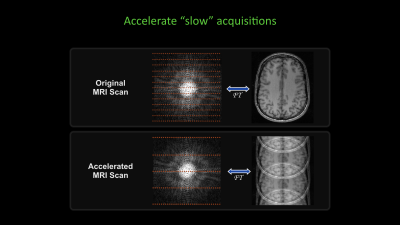

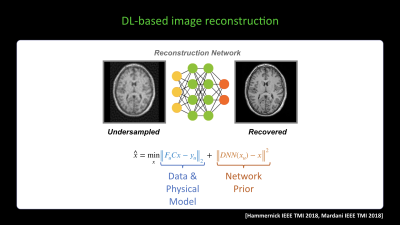

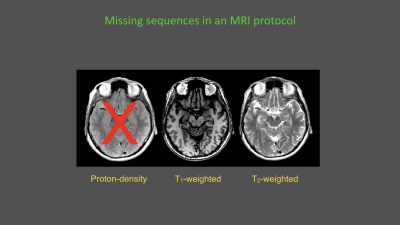

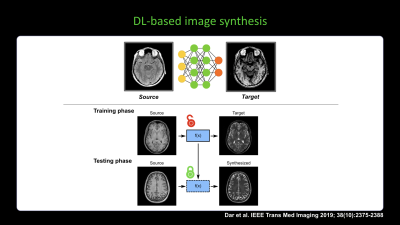

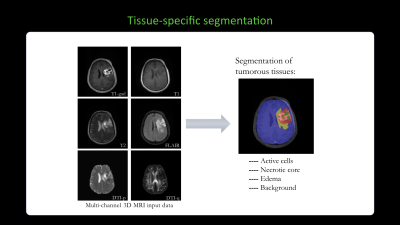

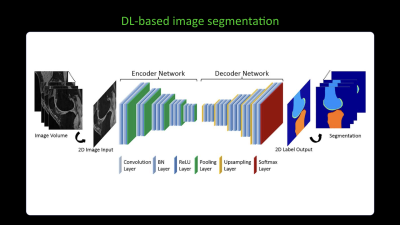

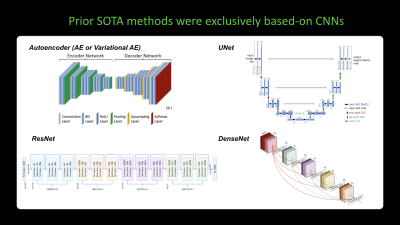

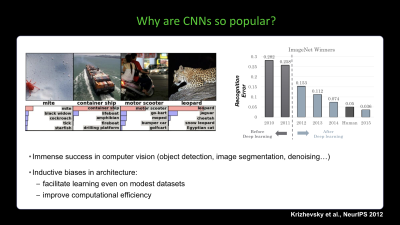

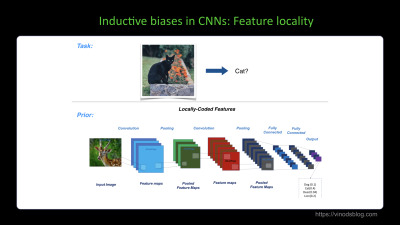

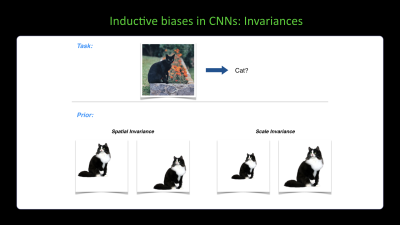

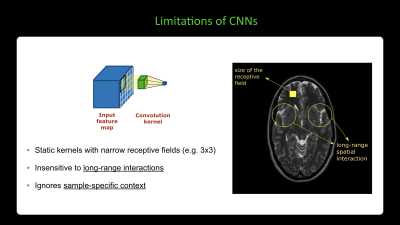

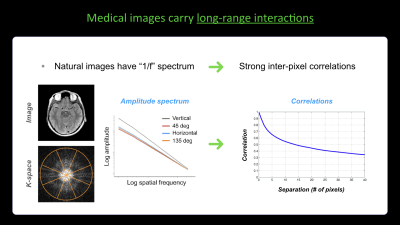

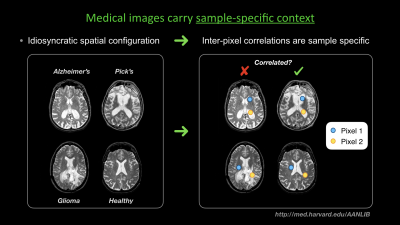

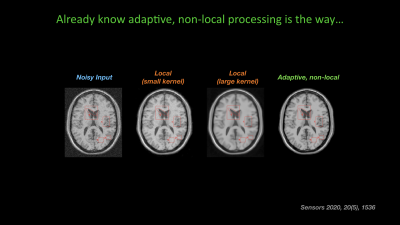

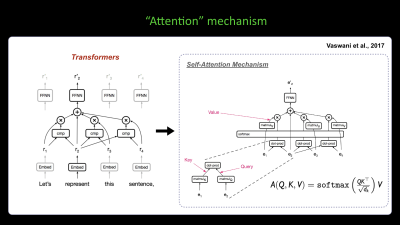

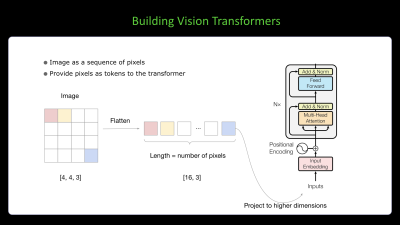

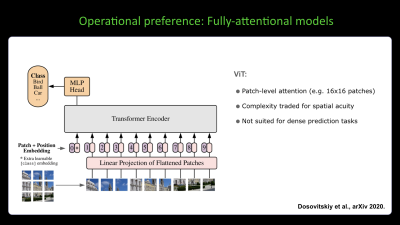

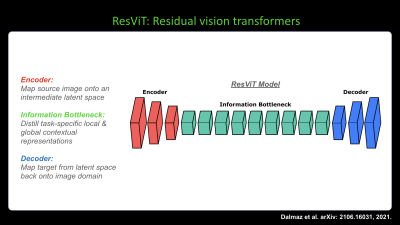

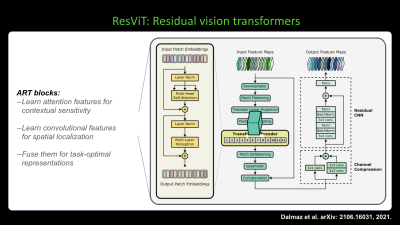

In recent years, deep learning models have rapidly become the state of the art for challenging medical image formation and analysis tasks such as reconstruction, synthesis, super-resolution and segmentation. A critical design consideration for model architectures is the capacity to account for representation errors that comprise both locally and globally distributed elements. Convolutional models with static local filters have been widely adopted for their computational efficiency, but they have limited sensitivity to contextual or anomalous features. In contrast, the recently emerging vision transformers are equipped with global attention operators, which serve as a universal mixing primitive for minimizing representation errors in diverse medical imaging tasks.
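To make the contrast with static local filters concrete, the following is a minimal NumPy sketch of a single-head global self-attention operator applied to image patches. It is an illustration under stated assumptions, not the architecture of any specific model discussed here: the function names (`patchify`, `global_self_attention`), the patch size, the embedding width and the random projection weights are all hypothetical choices made for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def patchify(image, patch):
    # Split a 2D image (H, W) into non-overlapping patches,
    # each flattened to a token of length patch * patch.
    h, w = image.shape
    assert h % patch == 0 and w % patch == 0
    tiles = image.reshape(h // patch, patch, w // patch, patch)
    return tiles.transpose(0, 2, 1, 3).reshape(-1, patch * patch)

def global_self_attention(tokens, w_q, w_k, w_v):
    # Every token attends to every other token, so the mixing weights
    # depend on the input and span the whole image, unlike a fixed
    # local convolution kernel.
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)
    return weights @ v, weights

# Hypothetical toy setup: a random 32x32 "image" and random projections.
rng = np.random.default_rng(0)
image = rng.standard_normal((32, 32))
tokens = patchify(image, patch=8)            # 16 tokens of length 64
d_model = 16                                 # assumed embedding width
w_q, w_k, w_v = (rng.standard_normal((tokens.shape[1], d_model)) * 0.1
                 for _ in range(3))
mixed, weights = global_self_attention(tokens, w_q, w_k, w_v)
```

Each row of `weights` is a distribution over all patches in the image, which is what lets a single layer relate distant regions. The cost is quadratic in the number of tokens, whereas a convolution with a fixed kernel size scales linearly with image size.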