5017

Artifact-specific MRI quality assessment with multi-task model

¹Electrical Engineering, Stanford University, Stanford, CA, United States; ²Radiology, Stanford University, Stanford, CA, United States; ³GE Healthcare, Menlo Park, CA, United States

Synopsis

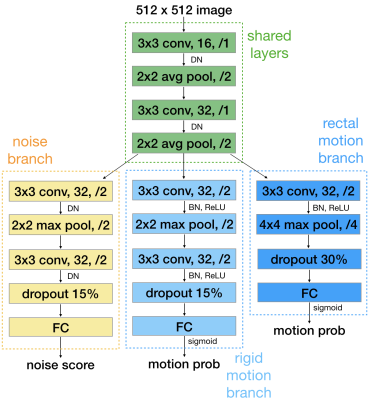

We present a CNN model that assesses the diagnostic quality of MR images in terms of noise, rigid motion and peristaltic motion artifacts. Our multi-task model consists of a set of shared convolutional layers followed by three branches, one for each artifact. Two branches (noise and rigid motion) are trained jointly with dual-task training. We then use transfer learning for the third branch (peristaltic motion), for which training data are scarce. We show that multi-task training improves the generalizability of the model, and that transfer learning significantly improves the performance of the branch trained under data scarcity. Our model is deployed clinically to provide technologists with warnings and artifact-specific solutions.

Introduction

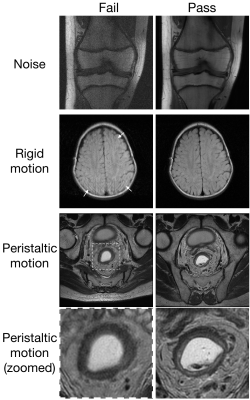

Different types of MRI artifacts can degrade diagnostic image quality, each with different causes and remedies. Preset scan parameters cannot guarantee satisfactory quality, and in busy clinical settings, MR technologists with varied skill levels cannot reliably ensure it. We propose a no-reference, artifact-specific quality assessment model that checks image quality and enables artifact-specific suggestions for improving it. Our model is a multi-task convolutional neural network (CNN) that assesses three types of artifacts: noise, rigid motion and peristaltic motion. Transfer learning is used for the one task (peristaltic motion) for which curating training data is expensive.

Methods

We propose an artifact-specific image quality assessment framework. Instead of training a separate standard CNN for each artifact, we find a multi-task model better suited to the purpose. In our model (Fig.1), a set of shared layers is followed by branches that each assess one artifact. A model trained on a single task tends to rely heavily on the factors most influential for that one task and is prone to overfitting. A model trained on several related tasks also learns helpful features that single-task training neglects, and shared layers that serve multiple tasks lead to better generalization. Furthermore, a multi-task model can capture correlations among artifacts to better serve each task.
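The shared-trunk, three-branch layout of Fig.1 can be sketched as below. This is a minimal NumPy illustration under stated assumptions, not the authors' network: the shared convolutional layers are replaced by a single dense layer on flattened features, and the names `init_params` and `forward` and the layer sizes are hypothetical. What it shows is that every branch reads the same shared representation `h`, so training any branch updates the same shared parameters.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def init_params(in_dim=64, hidden=32, seed=0):
    """Hypothetical parameter layout: one shared trunk feeding three branches."""
    rng = np.random.default_rng(seed)

    def dense(n_in, n_out):
        return {"W": rng.normal(0.0, 0.1, (n_in, n_out)), "b": np.zeros(n_out)}

    return {
        "shared": dense(in_dim, hidden),   # stands in for the shared conv layers
        "noise": dense(hidden, 1),         # regression: scalar noise level
        "rigid": dense(hidden, 1),         # logit: P(rigid-motion corrupted)
        "peristaltic": dense(hidden, 1),   # logit: P(peristaltic-motion corrupted)
    }

def forward(params, x):
    """x: (batch, in_dim) flattened image features -> one output per artifact."""
    h = relu(x @ params["shared"]["W"] + params["shared"]["b"])  # shared representation

    def head(name):
        return (h @ params[name]["W"] + params[name]["b"])[:, 0]

    return {
        "noise": head("noise"),                       # unbounded scalar
        "rigid": sigmoid(head("rigid")),              # probability in (0, 1)
        "peristaltic": sigmoid(head("peristaltic")),  # probability in (0, 1)
    }
```

For example, `forward(init_params(), np.random.default_rng(1).normal(size=(4, 64)))` returns one array of shape `(4,)` per branch.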

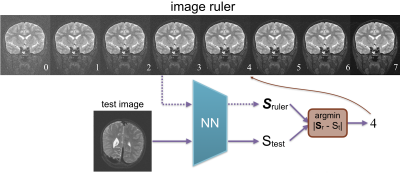

Noise branch. This branch outputs a scalar that can be used as is or, as we propose, interpreted using protocol-specific image rulers (Fig.3). During inference, the scalar output is converted to an integer ruler score from 0 to 7, which can then be converted to a binary pass/fail decision using a flexible, task-specific threshold chosen by radiologists. Training labels are obtained with minimal human labeling effort, by having radiologists calibrate heuristic estimates [1]. By adding Gaussian noise to the multi-coil k-space prior to reconstruction, we simulate four noisier versions of every slice in the training set and seven noisier versions of each slice used for the image rulers.
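The noise simulation and ruler scoring described above might look roughly like the following sketch. Several details are assumptions not given in the abstract: reconstruction is taken to be a per-coil inverse FFT with root-sum-of-squares combination, the noise is complex Gaussian with a chosen standard deviation per level, the eight ruler images are assumed to be the original slice plus its seven noisier versions (matching the 0-7 score), and the score is taken as the index of the nearest ruler output. The function names are hypothetical, and [1] may map outputs to scores differently.

```python
import numpy as np

def rss_recon(kspace):
    """Assumed reconstruction: per-coil inverse FFT + root-sum-of-squares combination.
    kspace: complex array (coils, ny, nx) in NumPy FFT ordering (DC at index 0)."""
    coil_images = np.fft.ifft2(kspace, axes=(-2, -1))
    return np.sqrt(np.sum(np.abs(coil_images) ** 2, axis=0))

def simulate_noisy_versions(kspace, sigmas, seed=0):
    """Add complex Gaussian noise of each standard deviation in `sigmas` to the
    multi-coil k-space, then reconstruct one noisier image per sigma."""
    rng = np.random.default_rng(seed)
    versions = []
    for sigma in sigmas:
        noise = rng.normal(0.0, sigma, kspace.shape) + 1j * rng.normal(0.0, sigma, kspace.shape)
        versions.append(rss_recon(kspace + noise))
    return versions

def ruler_score(output, ruler_outputs):
    """Map a scalar branch output to the closest ruler level (0 = original, 7 = noisiest).
    ruler_outputs: branch outputs on the 8 ruler images of the same protocol."""
    ruler_outputs = np.asarray(ruler_outputs, dtype=float)
    return int(np.argmin(np.abs(ruler_outputs - output)))

def noise_pass(output, ruler_outputs, max_score):
    """Pass if the ruler score does not exceed the radiologist-chosen threshold."""
    return ruler_score(output, ruler_outputs) <= max_score
```

Because the threshold is applied to the ruler score rather than to the raw scalar, the same trained branch can serve protocols whose raw outputs sit on different scales, which is the motivation for protocol-specific rulers.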

Rigid motion branch. This branch outputs the probability that the input image is motion corrupted, which is converted to a binary pass/fail decision with a fixed threshold of 0.5. We simulate one motion-injected version of every slice in the training set. The simulation moves the image three to four times and assembles the k-space from parts of the k-spaces of the moved copies [1]. Pass and fail training labels are assigned automatically to the original and motion-injected versions, respectively.
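A simplified version of this motion injection and labeling is sketched below. It is an illustration under assumptions, not the simulation of [1]: motion is restricted to integer translations via `np.roll` (no rotation), and the phase-encode rows of k-space are split into equal contiguous segments, one per motion state. The function names `simulate_rigid_motion`, `make_training_pair` and `rigid_pass` are hypothetical.

```python
import numpy as np

def simulate_rigid_motion(image, shifts):
    """Hypothetical translation-only rigid motion simulation.

    image: real (ny, nx) array. shifts: one integer (dy, dx) displacement per motion
    state (three to four states in the abstract). Phase-encode rows (axis 0) of k-space
    are split into contiguous segments; segment i is copied from the k-space of the
    image shifted by shifts[i], mimicking a patient who moves between segments.
    """
    ny = image.shape[0]
    kspace = np.zeros(image.shape, dtype=complex)
    bounds = np.linspace(0, ny, len(shifts) + 1).astype(int)
    for (dy, dx), start, stop in zip(shifts, bounds[:-1], bounds[1:]):
        moved = np.roll(image, shift=(dy, dx), axis=(0, 1))
        kspace[start:stop] = np.fft.fft2(moved)[start:stop]
    return np.abs(np.fft.ifft2(kspace))

def make_training_pair(image, shifts):
    """Automatic labels: original -> 0 (pass), motion-injected copy -> 1 (fail)."""
    return [(image, 0), (simulate_rigid_motion(image, shifts), 1)]

def rigid_pass(prob_corrupted, threshold=0.5):
    """Fixed-threshold decision on the branch's output probability."""
    return prob_corrupted < threshold
```

Since each k-space segment is internally consistent but disagrees in phase with the others, the reconstruction shows ghosting along the phase-encode direction, which is the coherent artifact this branch is meant to detect.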

Peristaltic non-rigid motion branch. This branch has the same output as the rigid motion branch. Bowel motion, including that of the rectum, differs from the rigid motion described above: it tends to cause blurring rather than coherent ghosts, so the artifact stays local rather than spreading to distant parts of the image (Fig.2). All peristaltic motion data are manually labeled as pass or fail.

We use transfer learning from the rigid motion branch to this branch, whose training set is 1/16 the size of the rigid motion training set. The shared-layer parameters are taken from the model trained on noise and rigid motion. The parameters of this branch are initialized from the rigid motion branch and then optimized on the limited training data.
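The initialization and fine-tuning steps can be sketched as follows, assuming a hypothetical parameter dictionary with `"shared"`, `"noise"` and `"rigid"` entries, each holding a dense layer `{"W", "b"}`. The abstract does not state whether the shared layers are also updated during fine-tuning; this sketch freezes them for simplicity and runs plain gradient descent on mean binary cross entropy for the new branch only. The function names are illustrative.

```python
import copy
import numpy as np

def init_peristaltic_from_rigid(trained):
    """Build the starting point for the peristaltic branch.

    trained: hypothetical dict {"shared": ..., "noise": ..., "rigid": ...} from
    dual-task training, each entry {"W": array, "b": array}. Shared layers are kept,
    and the new branch starts as a copy of the rigid-motion branch rather than
    a random initialization.
    """
    params = copy.deepcopy(trained)
    params["peristaltic"] = copy.deepcopy(trained["rigid"])
    return params

def finetune_peristaltic(params, features, labels, lr=0.1, steps=100):
    """Gradient descent on mean binary cross entropy, updating only the peristaltic
    branch (assumption: shared layers frozen). features: (n, hidden) float outputs of
    the shared layers; labels: (n,) with 0 = pass, 1 = fail."""
    W = params["peristaltic"]["W"]  # (hidden, 1), updated in place
    b = params["peristaltic"]["b"]  # (1,), updated in place
    for _ in range(steps):
        logits = (features @ W + b)[:, 0]
        probs = 1.0 / (1.0 + np.exp(-logits))
        grad = (probs - labels) / len(labels)  # d(mean BCE) / d(logit)
        W -= lr * (features.T @ grad[:, None])
        b -= lr * grad.sum(keepdims=True)
    return params
```

Starting from the rigid motion weights rather than random values means the small peristaltic training set only has to adjust an already motion-sensitive classifier, which is the intuition behind the reported gain under data scarcity.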

Root mean square error loss is used to train the noise branch, and cross entropy is used for the two motion branches. The first two branches are trained simultaneously from scratch (dual-task training); the peristaltic motion branch is then trained with transfer learning.
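The two losses and a joint dual-task objective could be written as below. How the abstract combines the two losses is not stated, so the sketch assumes a weighted sum with weight 1 and assumes each batch carries labels for both tasks; if noise and motion batches come from separate simulated sets, one would instead alternate batches or mask the per-task terms. The function names are hypothetical.

```python
import numpy as np

def rmse_loss(pred, target):
    """Root-mean-square error for the noise branch's scalar output."""
    pred, target = np.asarray(pred, float), np.asarray(target, float)
    return float(np.sqrt(np.mean((pred - target) ** 2)))

def binary_cross_entropy(prob, label, eps=1e-7):
    """Cross entropy for a motion branch; prob = predicted P(corrupted), label in {0, 1}."""
    prob = np.clip(np.asarray(prob, float), eps, 1.0 - eps)  # avoid log(0)
    label = np.asarray(label, float)
    return float(-np.mean(label * np.log(prob) + (1.0 - label) * np.log(1.0 - prob)))

def dual_task_loss(noise_pred, noise_target, rigid_prob, rigid_label, weight=1.0):
    """Joint objective for training the noise and rigid-motion branches from scratch.
    The relative task weight is an assumption; the abstract does not give one."""
    return rmse_loss(noise_pred, noise_target) + weight * binary_cross_entropy(rigid_prob, rigid_label)
```

For example, `dual_task_loss([0.0], [0.0], [0.5], [0])` gives a zero noise term plus a cross entropy of ln 2 for an uninformative motion prediction.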

Results

Dataset. For training, after simulation, there are five versions (the original plus four noisier copies) for noise and two versions (the original plus one motion-injected copy) for rigid motion of the data from 515 musculoskeletal and brain subjects. For peristaltic motion, only the original version of the data from 63 subjects is available. The standard test set contains 470 slices (noise) and 295 slices (rigid motion) from 120 subjects, and 588 slices from 24 subjects for peristaltic motion. We use 180 DICOM images from 45 subjects in a clinical database as the out-of-distribution (OOD) dataset to test the generalizability of our models. This dataset differs from the training dataset in patient age, hardware, reconstruction method and other factors. All peristaltic motion data are DICOMs from the clinical database, so there is no OOD dataset for this task.

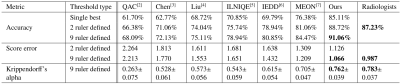

Noise. We compare the noise branch with existing noise assessment methods [2-7] and with a radiologist (Table I). We also compare using sets of 0, 2 and 9 image rulers. The branch achieves an accuracy of 91.06%, 3.8% higher than the radiologist and more than 6.6% higher than the other methods. Ruler-based thresholds outperform a single fixed threshold, and performance improves as more content-specific rulers are used.

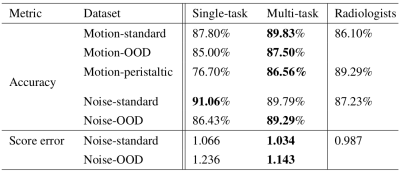

Rigid motion. Table II shows that the rigid motion branch of the dual-task trained model outperforms the single-task version on both the standard and OOD datasets, and also outperforms a radiologist.

Peristaltic motion. In Table II, for Motion-peristaltic, the multi-task model uses transfer learning while the single-task model is trained from scratch. Transferring the multi-task shared layers and the branch parameters from the rigid motion task yields 10% higher accuracy than training a standard model from scratch.

Comparisons on the OOD datasets for the noise and rigid motion branches demonstrate the better generalizability of the multi-task model.

Conclusion

We present a multi-task model that checks for noise, rigid motion and peristaltic motion artifacts in musculoskeletal, brain and pelvic 2D FSE scans. The model is deployed clinically. We plan to add attention to the region of interest for peristaltic motion detection.

Acknowledgements

This work is supported by NIH awards R01EB009690 and R01EB026136, and by GE Healthcare.

References

1. Lei K, Pauly J, Vasanawala S. Artifact- and content-specific quality assessment for MRI with image rulers. https://arxiv.org/abs/2111.03780, 2021.

2. Xue W, Zhang L, Mou X. Learning without human scores for blind image quality assessment. Proc. IEEE Conf. Comput. Vis. Pattern Recognition, pp. 995-1002, Jun. 2013.

3. Chen G, Zhu F, Heng PA. An efficient statistical method for image noise level estimation. Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2015.

4. Liu X, Tanaka M, Okutomi M. Noise level estimation using weak textured patches of a single noisy image. 19th IEEE International Conference on Image Processing, Orlando, FL, 2012, pp. 665-668.

5. Zhang L, Bovik AC. A feature-enriched completely blind image quality evaluator. IEEE Trans. Image Process., vol. 24, no. 8, pp. 2579-2591, Aug. 2015.

6. Ponomarenko M, Gapon N, Voronin V, Egiazarian K. Blind estimation of white Gaussian noise variance in highly textured images. CoRR, vol. abs/1711.10792, 2017.

7. Ma K, Liu W, Zhang K, Duanmu Z, Wang Z, Zuo W. End-to-End Blind Image Quality Assessment Using Deep Neural Networks. IEEE Transactions on Image Processing, vol. 27, no. 3, pp. 1202-1213, March 2018.

Figures

Table II. Pass/fail accuracy and absolute ruler score error from single-task and multi-task trained models tested on the standard test set of rigid motion (Motion-standard), the out-of-distribution dataset of rigid motion (Motion-OOD), the test set for peristaltic motion (Motion-peristaltic), the standard test set of noise (noise-standard), and the out-of-distribution dataset of noise (noise-OOD).