4992

Super Resolution Enhanced PROPELLER for Retrospective Motion Correction

1 Electrical and Computer Engineering, University of Texas, Austin, TX, United States; 2 Oden Institute for Computational Engineering and Sciences, University of Texas, Austin, TX, United States; 3 Department of Diagnostic Medicine, University of Texas, Austin, TX, United States

Synopsis

PROPELLER-based acquisitions produce a low-resolution image from each acquired echo train and are therefore often used for motion correction. However, the low resolution of each shot can limit the accuracy of PROPELLER-based motion correction. We propose a technique that leverages recent advances in super-resolution neural networks to enhance low-resolution PROPELLER shots for more accurate inter-shot motion estimation.

Introduction

Because of the way MRI data are acquired, motion artifacts can arise in a variety of settings. Many techniques have been proposed to correct them, and they can be split broadly into prospective and retrospective methods. Prospective methods correct motion actively at scan time by altering the acquisition parameters to maintain a stable FOV as the scan proceeds1. Retrospective methods correct motion artifacts after the scan has been completed. Within retrospective correction, some methods assume that motion measurements are available for use in the correction2 (non-blind), while others must estimate the motion along with the corrected image3,4 (blind). Previous work has examined different scan trajectories for motion correction, such as radial5 and PROPELLER6. Using the low-resolution image from each PROPELLER shot, and assuming that only inter-shot motion is present, one can estimate the motion between shots and use it to correct the reconstructed image. However, these estimates can be hindered by the low resolution of each shot in the phase-encode direction. Previous work has also corrected inter-shot motion by comparing the central regions of k-space between shots7. Meanwhile, deep learning super-resolution techniques have been extended to MRI to improve image quality8. Here we propose a PROPELLER-based motion estimation framework that leverages deep super-resolution networks to improve the accuracy of angular motion estimates.

Methods

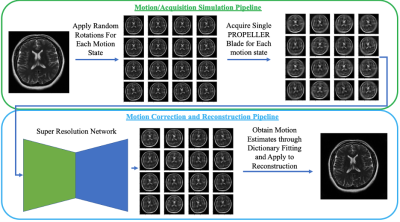

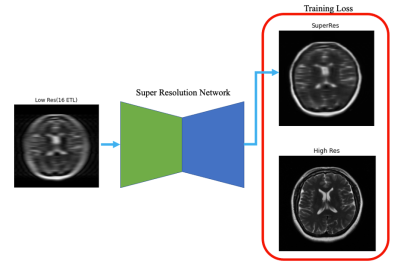

Motion/Acquisition Simulation: We restricted the simulation to purely rigid in-plane rotation. We first generated a fully sampled PROPELLER trajectory (echo train length (ETL) = 16, 32 blades) using BART9. For each PROPELLER blade (shot), we drew a random rotation angle from Uniform(-10°, 10°) and applied it to simulate inter-shot rotation (Figure 1). In this preliminary investigation, we did not model signal evolution during the echo train.

Super Resolution Network: Each shot was first reconstructed into a low-resolution image and then passed through a super-resolution deep neural network (Figure 1). We used a U-Net10 with 4 pooling layers, trained on T2-weighted brain images from the fastMRI dataset11. The training inputs were motion-corrupted low-resolution images, each reconstructed from a single echo train (ET) (Figure 2). The resolution-boosted outputs were compared with the corresponding high-resolution images of the same motion state using the normalized root-mean-square error (NRMSE) (Figure 2).
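To make the simulation setup concrete, the sketch below builds PROPELLER blade coordinates with the stated ETL = 16 and 32 blades and draws one rotation angle per blade from Uniform(-10°, 10°). It is a minimal illustration under assumptions not stated in the abstract: a 128-point readout, blades spaced uniformly over 180°, and k-space units of 1/FOV; the function names are hypothetical (the original work generated its trajectory with BART). Rather than rotating the image, it uses the Fourier rotation property: if the object is rotated by θ while a blade is acquired, the sample at nominal position k equals the spectrum of the unrotated object at R(-θ)k, so the same motion can be simulated by sampling the static image at these effective coordinates.

```python
import numpy as np


def rotation_matrix(theta):
    """2x2 counterclockwise rotation matrix for an angle in radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])


def propeller_trajectory(n_blades=32, etl=16, n_readout=128):
    """Hypothetical helper: (kx, ky) coordinates of shape (n_blades, etl, n_readout, 2).

    Each blade holds `etl` parallel readout lines centered on the k-space origin;
    blade b is rotated by b * pi / n_blades so the blades cover 180 degrees.
    """
    kx = np.arange(n_readout) - n_readout // 2      # readout positions
    ky = np.arange(etl) - etl // 2                  # one phase-encode line per echo
    KY, KX = np.meshgrid(ky, kx, indexing="ij")     # each of shape (etl, n_readout)
    blade = np.stack([KX, KY], axis=-1).astype(float)
    traj = np.empty((n_blades, etl, n_readout, 2))
    for b in range(n_blades):
        # Row-vector convention: p @ R.T rotates each point p by R
        traj[b] = blade @ rotation_matrix(b * np.pi / n_blades).T
    return traj


def simulate_inter_shot_rotation(traj, max_deg=10.0, seed=0):
    """Draw one rotation per blade from Uniform(-max_deg, max_deg) degrees.

    Returns the true angles and the effective coordinates at which the
    unrotated object is sampled: an object rotated by theta has spectrum
    F(R(-theta) k), so each blade's coordinates are rotated by -theta.
    """
    rng = np.random.default_rng(seed)
    angles_deg = rng.uniform(-max_deg, max_deg, size=traj.shape[0])
    effective = np.empty_like(traj)
    for b, a in enumerate(angles_deg):
        effective[b] = traj[b] @ rotation_matrix(-np.deg2rad(a)).T
    return angles_deg, effective


traj = propeller_trajectory()
true_angles, effective_traj = simulate_inter_shot_rotation(traj)
```

Sampling a static image at `effective_traj` with a non-uniform FFT would then give motion-corrupted blade data, and `true_angles` serves as the ground truth for evaluating the motion estimates.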

Motion Estimation: At test time, each resolution-boosted shot is compared with a single reference shot (typically the first blade of the PROPELLER trajectory), and the rotation of each shot relative to the reference is estimated with a dictionary fit.
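The abstract does not specify the dictionary's angle grid or matching criterion, so the following is only a sketch under assumptions: each dictionary atom is the reference magnitude image rotated by one candidate angle, and the shot is matched to the atom with the highest normalized correlation. `rotate_image` and `dictionary_fit_rotation` are hypothetical helpers written with NumPy only (bilinear interpolation, zero fill outside the field of view).

```python
import numpy as np


def rotate_image(img, angle_deg):
    """Rotate a real 2D image about its center with bilinear interpolation.

    Uses inverse mapping: each output pixel (x, y) is read from R(-angle)(x, y)
    in the input, with x along columns and y along rows; pixels that map
    outside the input are set to zero.
    """
    ny, nx = img.shape
    theta = np.deg2rad(angle_deg)
    c, s = np.cos(theta), np.sin(theta)
    cy, cx = (ny - 1) / 2.0, (nx - 1) / 2.0
    yy, xx = np.meshgrid(np.arange(ny) - cy, np.arange(nx) - cx, indexing="ij")
    xs = c * xx + s * yy + cx        # source column coordinate
    ys = -s * xx + c * yy + cy       # source row coordinate
    x0, y0 = np.floor(xs).astype(int), np.floor(ys).astype(int)
    wx, wy = xs - x0, ys - y0
    out = np.zeros(img.shape, dtype=float)
    # Accumulate the four bilinear neighbours, skipping out-of-bounds ones
    for dy, dx, w in ((0, 0, (1 - wy) * (1 - wx)), (0, 1, (1 - wy) * wx),
                      (1, 0, wy * (1 - wx)), (1, 1, wy * wx)):
        yi, xi = y0 + dy, x0 + dx
        ok = (yi >= 0) & (yi < ny) & (xi >= 0) & (xi < nx)
        out[ok] += w[ok] * img[yi[ok], xi[ok]]
    return out


def dictionary_fit_rotation(shot, reference, angles_deg):
    """Return the candidate angle whose rotated reference best matches `shot`.

    Each dictionary atom is the reference magnitude image rotated by one
    candidate angle; the score is normalized correlation with the shot's
    magnitude image.
    """
    s = np.abs(shot).ravel()
    s = s / np.linalg.norm(s)
    ref_mag = np.abs(reference)
    best_angle, best_score = None, -np.inf
    for a in angles_deg:
        atom = rotate_image(ref_mag, a).ravel()
        score = atom @ s / np.linalg.norm(atom)
        if score > best_score:
            best_angle, best_score = float(a), score
    return best_angle
```

In this setting, `shot` would be a resolution-boosted blade image, `reference` the boosted first blade, and `angles_deg` a grid such as `np.arange(-10, 10.5, 0.5)` covering the simulated range. A finer grid, or a local refinement around the best atom, trades run time for angular precision.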

Trajectory Correction and Reconstruction: Because a rotation in image space induces the same rotation in k-space, we corrected k-space by rotating the coordinates of each PROPELLER blade by the corresponding motion estimate. Finally, the motion-corrected PROPELLER blades were reconstructed with BART9, assuming single-channel reception and using an unregularized mean-squared-error (MSE) objective.
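The coordinate correction can be sketched as follows. This assumes that each estimated angle θ is the rotation of the object during that blade relative to the reference, measured counterclockwise in the (kx, ky) plane; the sign flips under the opposite convention. By the Fourier rotation property, a sample acquired at nominal position k while the object was rotated by θ belongs at R(-θ)k in the reference frame, so only the blade coordinates are rotated and the k-space samples themselves are left unchanged. The function names are hypothetical.

```python
import numpy as np


def rotation_matrix(theta):
    """2x2 counterclockwise rotation matrix for an angle in radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])


def correct_blade_coords(blade_coords, est_angle_deg):
    """Rotate one blade's (kx, ky) coordinates, shape (..., 2), by -est_angle_deg.

    An object rotated by theta has spectrum F(R(-theta) k), so the sample
    measured at nominal k is reassigned to R(-theta) k.
    """
    R = rotation_matrix(-np.deg2rad(est_angle_deg))
    return blade_coords @ R.T


def correct_trajectory(traj, est_angles_deg):
    """Apply the per-blade correction to a trajectory of shape (n_blades, ..., 2)."""
    return np.stack([correct_blade_coords(blade, a)
                     for blade, a in zip(traj, est_angles_deg)])
```

The corrected coordinates, together with the original samples, would then go to a non-Cartesian reconstruction (BART in the original work). Because each blade is rotated rigidly, its internal geometry is preserved and only its orientation changes.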

Results

For each motion-corrupted image, we estimated inter-shot rotation using both the raw low-resolution images and the super-resolution-boosted images. With the super-resolution-boosted shots, the mean absolute error between the estimated and true rotation angles was 0.4997°; with the low-resolution images alone, it was 1.3537°. The improvement was also evident in the corresponding motion-corrected images (Figures 3 and 4): finer details are better preserved in the super-resolution-guided reconstruction because the motion corruption is estimated more accurately.

Discussion

Because of PROPELLER’s unique acquisition strategy, each blade can be viewed as a low-resolution depiction of the fully sampled image at a particular moment in time. By enhancing these images with super resolution and assuming that motion occurs only between shots, it is possible to resolve the motion states before a conventional reconstruction. Our simulations show that applying super resolution to the low-resolution PROPELLER blades improves the estimation of a parameterized motion model, which in turn improves reconstruction quality. Here the motion model was restricted to 2D rigid rotation, but the approach could potentially be applied to deformable motion, which can be parameterized by models such as optical flow fields.

Conclusion

These results show that applying super resolution to the low-resolution shots substantially improved the accuracy of rigid-body rotation estimation, reducing the mean absolute angle error from 1.3537° to 0.4997°. This is a promising step toward the end goal of this method: using low-resolution PROPELLER shots, enhanced with super-resolution networks, to model non-rigid motion with accurate optical flow maps.

Acknowledgements

This work was supported by Aspect Imaging.

References

[1] Godenschweger F, et al. Motion correction in MRI of the brain. Phys Med Biol. 2016;61(5):R32-R56.

[2] Cheng JY, et al. Free-breathing pediatric MRI with nonrigid motion correction and acceleration. J Magn Reson Imaging. 2015;42(2):407-420. doi:10.1002/jmri.24785

[3] Forbes KP, Pipe JG, Bird CR, Heiserman JE. PROPELLER MRI: clinical testing of a novel technique for quantification and compensation of head motion. J Magn Reson Imaging. 2001;14(3):215-222. doi:10.1002/jmri.1176

[4] Tamhane AA, Arfanakis K. Motion correction in periodically-rotated overlapping parallel lines with enhanced reconstruction (PROPELLER) and turboprop MRI. Magn Reson Med. 2009;62(1):174-182. doi:10.1002/mrm.22004

[5] Zhu X, Chan M, Lustig M, Johnson KM, Larson PEZ. Iterative motion-compensation reconstruction ultra-short TE (iMoCo UTE) for high-resolution free-breathing pulmonary MRI. Magn Reson Med. 2020;83(4):1208-1221. doi:10.1002/mrm.27998

[6] Haskell MW, et al. TArgeted Motion Estimation and Reduction (TAMER): Data Consistency Based Motion Mitigation for MRI Using a Reduced Model Joint Optimization. IEEE Trans Med Imaging. 2018;37(5):1253-1265. doi:10.1109/TMI.2018.2791482

[7] Haskell MW, et al. Network Accelerated Motion Estimation and Reduction (NAMER): Convolutional Neural Network Guided Retrospective Motion Correction Using a Separable Motion Model. Magn Reson Med. 2019;82(4):1452-1461.

[8] Eun D, Jang R, Ha WS, et al. Deep-learning-based image quality enhancement of compressed sensing magnetic resonance imaging of vessel wall: comparison of self-supervised and unsupervised approaches. Sci Rep. 2020;10:13950.

[9] BART Toolbox for Computational Magnetic Resonance Imaging. doi:10.5281/zenodo.592960

[10] Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In: Navab N, Hornegger J, Wells W, Frangi A, eds. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. Lecture Notes in Computer Science, vol 9351. Springer, Cham; 2015. doi:10.1007/978-3-319-24574-4_28

[11] Zbontar J, et al. fastMRI: An open dataset and benchmarks for accelerated MRI. 2019.

Figures

Figure 1: To simulate motion, a clean, fully sampled image is randomly rotated to create N motion states. Each motion state is sampled by a single, unique PROPELLER blade, and a low-resolution image is reconstructed from each blade (N images). Each image is passed individually through the super-resolution network, and the resolution-boosted images are used to estimate motion. Finally, the motion estimates are used to correct the PROPELLER blade angles, and the corrected image is reconstructed.

Figure 2: Super Resolution Network Training.

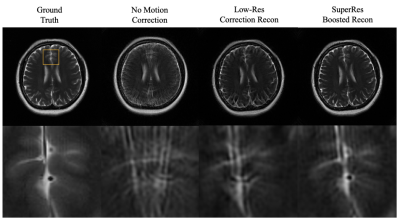

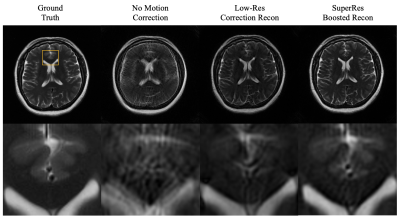

Figure 3: Reconstruction comparison between the ground truth (GT, left), no trajectory correction (second from left), trajectory correction guided by low-resolution shots (second from right), and trajectory correction guided by super-resolution-enhanced shots (right).