4475

Non-contrast enhanced MR vessel wall image acquisition based on generation adversarial network

1Paul C. Lauterbur Research Center for Biomedical Imaging, Shenzhen Institute of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China, 2Department of Radiology, Huizhou Central People's Hospital, Huizhou, China, 3Department of Radiology, Guangdong Second Provincial General Hospital, Guangzhou, China, 4Department of Radiology, Peking University Shenzhen Hospital, Shenzhen, China, 5United Imaging Healthcare, Shanghai, China

Synopsis

High-resolution magnetic resonance vessel wall imaging (MRVWI) is now widely used for risk evaluation in patients with ischemic stroke. Performing MRVWI both with and without contrast agent is a diagnostic criterion in expert consensus recommendations. However, patients with renal insufficiency who receive gadolinium-based contrast agents are at risk of developing nephrogenic systemic fibrosis, a debilitating and potentially fatal disease. This study proposes a method based on a generative adversarial network to obtain enhanced MR vessel wall images without using contrast agent. The results show that the enhancement effect is consistent with that of contrast-enhanced MRVWI images.

Introduction

High-resolution magnetic resonance vessel wall imaging (MRVWI) is now widely used for risk evaluation in patients with ischemic stroke [1]. Performing MRVWI both with and without contrast agent is an internationally recognized evaluation standard [2]. However, the use of contrast agents remains internationally controversial because of their deposition in organs, especially the brain [3]. In addition, patients with renal insufficiency who receive gadolinium-based contrast agents are at risk of developing nephrogenic systemic fibrosis, a debilitating and potentially fatal disease [4]. This study proposes and evaluates a method based on a generative adversarial network (GAN) to obtain non-contrast enhanced MRVWI images without using contrast agent.

Method

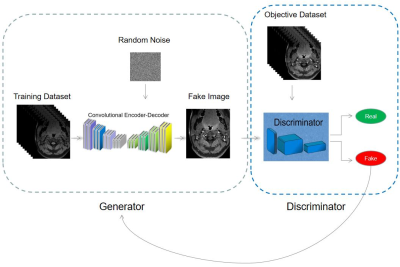

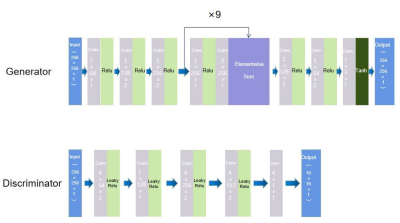

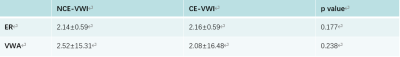

MRVWI images with and without contrast agent were acquired from 41 patients with atherosclerotic disease (23 males; age range 29 to 80 years, mean age 60 years) using a T1-weighted 3D MATRIX sequence on a 3-T whole-body MR system (uMR 790, United Imaging Healthcare, Shanghai, China). A total of 4517 pairs of 2D slices were reconstructed from the acquired 3D unenhanced MRVWI images and the contrast-enhanced MRVWI images. Of these pairs, 70% were used as the training set, 20% as the validation set, and 10% as the test set.

An optimized deep learning network based on Pix2Pix-GAN was trained on the collected data [5]. The loss function was modified according to Fixed-Point-GAN [6]. In addition, squeeze-and-excitation (SE) blocks and multi-scale features were added to improve the performance of the generator. Figure 1 shows the overall diagram of the proposed GAN-based network. The architecture of the generator and discriminator networks is shown in Figure 2.

One hundred groups of images were randomly selected from the test-set results to evaluate the performance of the proposed Pix2Pix-GAN-based network. Each group included the original unenhanced image, the non-contrast enhanced image output by the Pix2Pix-GAN-based network (NCE-VWI), and the contrast-enhanced image acquired with contrast agent (CE-VWI). Two experienced radiologists, reading in consensus, evaluated the effectiveness of the proposed network by visually assessing the consistency between the NCE-VWI and the CE-VWI on four qualitative indexes: the enhancement degree of the vessel wall, the number of enhanced vessels, the clarity of the vessel wall, and the presence of artifacts in the image. In addition, two quantitative indexes, enhancement ratio (ER) and vessel wall area (VWA), were calculated to evaluate the performance of the proposed network.
ER was measured as the ratio of the enhanced image SI to the original unenhanced image SI, where SI is the mean signal intensity of the vessel wall. VWA was measured as the difference between the outer and inner vessel areas. A paired t-test was performed to detect differences in ER and VWA between NCE-VWI and CE-VWI.
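The two quantitative indexes defined above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the measurement pipeline used in the study: the function names (`mean_signal`, `enhancement_ratio`, `vessel_wall_area`), the boolean-mask representation of the vessel wall, and the toy pixel values are all hypothetical, and the abstract does not describe how the wall region was delineated.

```python
from statistics import fmean


def mean_signal(image, wall_mask):
    """Mean signal intensity (SI) over the pixels flagged as vessel wall."""
    values = [
        pixel
        for image_row, mask_row in zip(image, wall_mask)
        for pixel, in_wall in zip(image_row, mask_row)
        if in_wall
    ]
    if not values:
        raise ValueError("wall mask selects no pixels")
    return fmean(values)


def enhancement_ratio(enhanced, unenhanced, wall_mask):
    """ER = vessel wall SI in the enhanced image / SI in the unenhanced image."""
    return mean_signal(enhanced, wall_mask) / mean_signal(unenhanced, wall_mask)


def vessel_wall_area(outer_area, inner_area):
    """VWA = area inside the outer boundary minus area inside the inner boundary."""
    if inner_area > outer_area:
        raise ValueError("inner area cannot exceed outer area")
    return outer_area - inner_area


# Toy 3x3 slice (hypothetical values): a ring of wall pixels around one lumen pixel.
wall_mask = [
    [True, True, True],
    [True, False, True],
    [True, True, True],
]
pre_vwi = [[100, 100, 100], [100, 20, 100], [100, 100, 100]]
nce_vwi = [[210, 210, 210], [210, 20, 210], [210, 210, 210]]

er = enhancement_ratio(nce_vwi, pre_vwi, wall_mask)
vwa = vessel_wall_area(outer_area=9.0, inner_area=1.0)
print(f"ER = {er:.2f}, VWA = {vwa:.1f}")
```

The same `enhancement_ratio` call would be repeated with the CE-VWI image in place of `nce_vwi`, so that each image group yields one ER value per method for the paired comparison.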

Results

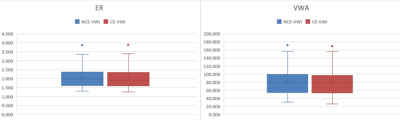

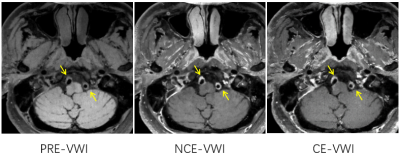

The proposed Pix2Pix-GAN-based network can obtain enhanced MRVWI images without using contrast agent. The ER and VWA results (presented as mean ± standard deviation) measured from NCE-VWI and CE-VWI are summarized in Table 1. The ER of NCE-VWI and CE-VWI is 2.15 and 2.16, respectively, and the VWA of NCE-VWI and CE-VWI is 2.52 and 2.08, respectively. There is no significant difference in ER or VWA between NCE-VWI and CE-VWI (p=0.177 for ER and p=0.238 for VWA). Figure 3 shows the comparison of ER and VWA between NCE-VWI and CE-VWI. This suggests that the non-contrast enhanced MRVWI images generated by the proposed Pix2Pix-GAN-based network may be able to replace real contrast-enhanced images.

Compared with the real contrast-enhanced MRVWI images, the non-contrast enhanced images generated by the Pix2Pix-GAN-based network achieved consistency of 98%, 95%, and 98% on the enhancement degree of the vessel wall, the number of enhanced vessels, and the clarity of the vessel wall, respectively. Notably, 5% of the real contrast-enhanced images have motion artifacts, whereas the corresponding non-contrast enhanced images have none because they are generated from pre-VWI images without artifacts. This indicates that the images generated by the Pix2Pix-GAN-based network can not only provide enhanced MRVWI images without contrast agent, but also avoid the motion artifacts caused by long scanning time. A representative MRVWI image without contrast agent (pre-VWI) and the corresponding NCE-VWI and CE-VWI images are shown in Figure 4. NCE-VWI visually shows an enhancement effect of the vessel wall comparable to that of CE-VWI.
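For readers unfamiliar with the paired comparison reported above, the following minimal sketch shows how a paired t statistic is formed from matched measurements. The helper name `paired_t_statistic` and the five ER values are hypothetical and synthetic; they are not the study data, and the sketch stops at the statistic and its degrees of freedom rather than computing a p-value.

```python
from math import sqrt
from statistics import fmean, stdev


def paired_t_statistic(sample_a, sample_b):
    """Return (t, degrees of freedom) for a paired t-test on matched samples."""
    if len(sample_a) != len(sample_b):
        raise ValueError("paired samples must have the same length")
    if len(sample_a) < 2:
        raise ValueError("at least two pairs are required")
    # The test operates on the per-pair differences, not on the raw samples.
    diffs = [a - b for a, b in zip(sample_a, sample_b)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("all paired differences are identical")
    n = len(diffs)
    t = fmean(diffs) / (sd / sqrt(n))
    return t, n - 1


# Synthetic ER values for five image groups (illustrative only, not study data).
er_nce = [2.0, 2.5, 2.25, 2.0, 2.25]
er_ce = [2.5, 2.0, 2.0, 2.25, 2.0]

t_stat, dof = paired_t_statistic(er_nce, er_ce)
print(f"t = {t_stat:.3f} with {dof} degrees of freedom")
```

The two-sided p-value follows from the t distribution with `dof` degrees of freedom; a library routine such as `scipy.stats.ttest_rel` returns both the statistic and the p-value in one call.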

Discussion

A Pix2Pix-GAN-based network was proposed to obtain enhanced MRVWI images without using contrast agent, and it achieved an enhancement effect for the vessel wall similar to that of contrast-enhanced MRVWI images. This makes it possible to obtain enhanced MRVWI images for patients who cannot receive contrast agent. Cycle consistency loss, conditional identity loss, and structural similarity (SSIM) loss are introduced into the loss function of our network. The addition of these loss terms ensures that the structure of the generated image does not change compared with the original image. In addition, multi-scale features and an attention mechanism are added to the U-Net structure of the generator to improve the accuracy of the network enhancement.

Conclusion

The proposed Pix2Pix-GAN-based network can generate enhanced MRVWI images without using contrast agent, and the enhancement effect is consistent with that of contrast-enhanced MRVWI images.

Acknowledgements

The study was partially supported by the National Natural Science Foundation of China (81830056), the Key Laboratory for Magnetic Resonance and Multimodality Imaging of Guangdong Province (2020B1212060051), the Shenzhen Basic Research Program (JCYJ20180302145700745 and KCXFZ202002011010360), and the Guangdong Innovation Platform of Translational Research for Cerebrovascular Diseases.

References

[1] Song J W, Wasserman B A. Vessel wall MR imaging of intracranial atherosclerosis. Cardiovascular Diagnosis and Therapy, 2020, 10(4): 982.

[2] Saba L, Yuan C, Hatsukami T S, et al. Carotid artery wall imaging: perspective and guidelines from the ASNR vessel wall imaging study group and expert consensus recommendations of the American Society of Neuroradiology. American Journal of Neuroradiology, 2018, 39(2): E9-E31.

[3] Wahsner J, Gale E M, Rodríguez-Rodríguez A, et al. Chemistry of MRI contrast agents: current challenges and new frontiers. Chemical Reviews, 2018, 119(2): 957-1057.

[4] Le Fur M, Caravan P. The biological fate of gadolinium-based MRI contrast agents: a call to action for bioinorganic chemists. Metallomics, 2019, 11(2): 240-254.

[5] Isola P, Zhu J Y, Zhou T, et al. Image-to-image translation with conditional adversarial networks//Proceedings of the IEEE conference on computer vision and pattern recognition. 2017: 1125-1134.

[6] Siddiquee M M R, Zhou Z, Tajbakhsh N, et al. Learning fixed points in generative adversarial networks: From image-to-image translation to disease detection and localization//Proceedings of the IEEE/CVF International Conference on Computer Vision. 2019: 191-200.

Figures