4345

A deep learning network for low-field MRI denoising using group sparsity information and a Noise2Noise method

Yuan Lian1, Xinyu Ye1, Hai Luo2, Ziyue Wu2, and Hua Guo1

1Center for Biomedical Imaging Research, Department of Biomedical Engineering, School of Medicine, Tsinghua University, Beijing, China, 2Marvel Stone Healthcare Co., Ltd., Wuxi, China

Synopsis

Owing to hardware advances, interest in low-field MRI systems has increased recently. However, the image quality of low-field MRI is limited by its intrinsically low signal-to-noise ratio (SNR). Here we propose a deep learning model that jointly denoises multi-contrast images and is trained with a Noise2Noise strategy. Our method improves the SNR of multi-contrast low-field images, and experiments demonstrate the effectiveness of the proposed strategy.

Introduction

Low-field MRI can improve the accessibility of MRI owing to its potentially low cost. Additionally, compared with widely used high-field MRI, low-field systems benefit from reduced SAR and implant heating, and fewer susceptibility-induced artifacts[1,2]. However, image SNR decreases as the field strength decreases[1,3]. Thus, a robust denoising method for low-field MRI is needed to maintain practical acquisition efficiency.

Recently, deep learning has been introduced to image denoising[4-10]. Among these approaches, convolutional neural networks such as DnCNN[4] have achieved competitive results. Meanwhile, multi-contrast MRI images share similar structures and thus provide additional information for joint denoising. We therefore investigate a deep learning denoising method that jointly improves the SNR of multi-contrast low-field MRI images, trained with a Noise2Noise[11] strategy that does not require noise-free targets.

Method

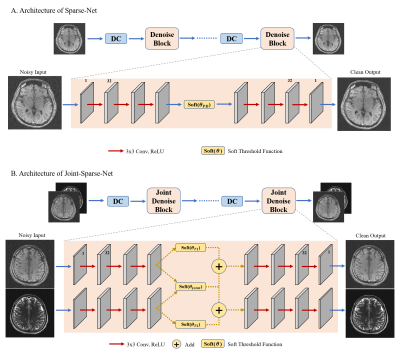

Sparse-Net and Joint-Sparse-Net

Neural networks are widely used for image denoising. Meanwhile, model-driven deep learning networks, which exploit the sparsity of the input images with iterative transform blocks, have shown great potential in image reconstruction[12] and denoising[6]. We therefore develop a cascade model for low-field MRI image denoising, named Sparse-Net, as shown in Figure 1A. A series of denoising blocks emulates an iterative denoising procedure. In each block, the general update step is:

$$ x_{k+1}=DB(x_k)=x_k+\widehat{T}_k(\tau_k(T_k(x_k,DC(x_k)))) $$

Here $$$DC$$$ stands for data consistency. $$$T$$$ and $$$\widehat{T}$$$ are sparsifying transforms consisting of 2D convolutions, and the subscript $$$k$$$ indicates that each block has its own transforms and threshold. Noisy images are filtered in the transform domain with a learnable soft-thresholding function $$$\tau$$$.
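A minimal NumPy sketch of the block structure follows. It assumes the learned convolutional transforms $$$T_k$$$ and $$$\widehat{T}_k$$$ can be passed in as plain callables, uses a fixed float threshold in place of the learned one, and omits the $$$DC$$$ input because the abstract does not specify how it enters $$$T_k$$$. All function names are hypothetical.

```python
import numpy as np


def soft_threshold(z, theta):
    """Soft-thresholding tau(z) = sign(z) * max(|z| - theta, 0).

    In Sparse-Net the threshold is learnable; here it is a fixed float.
    """
    return np.sign(z) * np.maximum(np.abs(z) - theta, 0.0)


def denoise_block(x, T, T_hat, theta):
    """Residual update x_{k+1} = x_k + T_hat(tau(T(x_k))).

    T and T_hat are hypothetical stand-ins for the learned 2D-convolutional
    transforms; the DC input to T is omitted in this sketch.
    """
    return x + T_hat(soft_threshold(T(x), theta))


def cascade(x, blocks):
    """Chain the blocks so that each one maps x_k to x_{k+1}."""
    for block in blocks:
        x = block(x)
    return x
```

Because of the residual connection, $$$\widehat{T}_k$$$ only has to produce a correction to $$$x_k$$$ rather than the full image.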

To extend our model to multi-contrast images, we adopt the traditional group sparsity concept[13,14]. For multi-contrast images $$$x_{k,i}$$$, where $$$i$$$ indexes the contrast, and a sparse transform $$$T$$$, the group sparse transform is

$$ T_{Group}(x_k)=\sqrt{\sum_i(T(x_{k,i}))^2} $$
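The group transform can be computed by stacking the per-contrast coefficients $$$T(x_{k,i})$$$ along a contrast axis. The abstract applies $$$\tau_k$$$ to the group-transformed coefficients but does not say how the result is mapped back to each contrast; the sketch below assumes the common group (block) soft-thresholding rule, which shrinks the joint magnitude and rescales every contrast by the same factor. Function names are hypothetical.

```python
import numpy as np


def group_magnitude(coeffs):
    """T_Group = sqrt(sum_i T(x_i)^2), taken over the contrast axis (axis 0).

    coeffs: array of shape (n_contrasts, ...) holding T(x_{k,i}) per contrast.
    """
    return np.sqrt(np.sum(coeffs ** 2, axis=0))


def group_soft_threshold(coeffs, theta, eps=1e-12):
    """Shrink the joint magnitude by theta and rescale each contrast equally.

    Assumed group-shrinkage rule; eps avoids division by zero.
    """
    mag = group_magnitude(coeffs)
    scale = np.maximum(mag - theta, 0.0) / (mag + eps)
    return coeffs * scale
```

Under this rule a coefficient survives only if it is large when all contrasts are considered together, which favours structure shared between the T1w and T2w images.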

Thus, the update step of the multi-contrast denoising model is $$$ x_{k+1,i} = DB(x_{k,i})+\lambda_iDB\_G(x_k) $$$, where $$$\lambda_i$$$ weights the joint term for contrast $$$i$$$ and

$$ DB\_G(x_k) = \widehat{T}_{k,Group}(\tau_k(T_{k,Group}(x_k,DC(x_k)))) $$
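How the per-contrast and joint branches combine can be sketched as follows. It assumes the joint block returns one output per contrast (the formula does not index $$$DB\_G$$$ by $$$i$$$, but it must supply a per-contrast term to be added), and `db`, `db_g` and `joint_update` are hypothetical placeholders for the learned blocks.

```python
import numpy as np


def joint_update(x, db, db_g, lam):
    """x_{k+1,i} = DB(x_{k,i}) + lambda_i * DB_G(x_k)[i].

    x:    array of shape (n_contrasts, H, W)
    db:   per-contrast denoise block, applied to each contrast separately
    db_g: joint block applied to the whole stack, returning (n_contrasts, H, W)
    lam:  per-contrast weights lambda_i
    """
    per_contrast = np.stack([db(x[i]) for i in range(x.shape[0])])
    joint = db_g(x)
    lam = np.asarray(lam, dtype=float).reshape(-1, 1, 1)  # broadcast over H, W
    return per_contrast + lam * joint
```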

The model with the group sparse transform is denoted Joint-Sparse-Net, and its structure is shown in Figure 1B. All proposed models are trained with a combination of multi-scale structural similarity (MSSIM) and the $$$L_1$$$ norm as the loss function:

$$ Loss=0.84\,(1-MSSIM(t,x))+(1-0.84)\lVert t-x\rVert_1 $$

where $$$t$$$ and $$$x$$$ denote the target and the network output, respectively. For comparison, we also implement DnCNN[4] trained on single-contrast data.
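The mixed loss can be sketched as below, assuming MSSIM enters as $$$1-MSSIM(t,x)$$$ (the usual form for a similarity-based loss) and that the $$$L_1$$$ term is a mean absolute error. For brevity, `global_ssim` is a simplified single-scale SSIM computed from global image statistics; it is a stand-in, not MS-SSIM, which averages windowed SSIM terms over several downsampled scales. Function names are hypothetical.

```python
import numpy as np

ALPHA = 0.84  # weight on the structural term, as in the abstract


def global_ssim(t, x, data_range=1.0):
    """Single-scale SSIM from global statistics (no sliding window)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_t, mu_x = t.mean(), x.mean()
    var_t, var_x = t.var(), x.var()
    cov = ((t - mu_t) * (x - mu_x)).mean()
    return ((2 * mu_t * mu_x + c1) * (2 * cov + c2)) / (
        (mu_t ** 2 + mu_x ** 2 + c1) * (var_t + var_x + c2))


def mixed_loss(t, x, alpha=ALPHA):
    """alpha * (1 - SSIM(t, x)) + (1 - alpha) * mean |t - x|."""
    return alpha * (1.0 - global_ssim(t, x)) + (1.0 - alpha) * np.abs(t - x).mean()
```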

Noise2Noise

Most deep learning denoising models use averaged images as noise-free targets. However, low-field MRI images acquired with up to 6 averages still contain a considerable amount of noise. We therefore employ the Noise2Noise training strategy[11]. According to Noise2Noise theory, if the noise is zero-mean, as is the case for most of the noise in MRI images, training with pairs of noisy images from two separate acquisitions as input and target can in theory produce the same result as training with noise-free targets. The models trained with Noise2Noise are named Sparse-Net-N2N and Joint-Sparse-N2N.
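For a squared-error loss the argument can be written out as follows, assuming $$$s$$$ is the noise-free image, $$$f_\theta$$$ is the network, and the target noise $$$n'$$$ is zero-mean given the input $$$x$$$ (which holds when the two acquisitions have independent, zero-mean noise):

$$ \begin{aligned} y' &= s + n', \qquad \mathbb{E}[\,n' \mid x\,] = 0,\\ \mathbb{E}\,\lVert f_\theta(x) - y' \rVert_2^2 &= \mathbb{E}\,\lVert f_\theta(x) - s \rVert_2^2 - 2\,\mathbb{E}\big[(f_\theta(x) - s)^\top n'\big] + \mathbb{E}\,\lVert n' \rVert_2^2 \\ &= \mathbb{E}\,\lVert f_\theta(x) - s \rVert_2^2 + \mathbb{E}\,\lVert n' \rVert_2^2 . \end{aligned} $$

The last term does not depend on $$$f_\theta$$$, so training against noisy targets and against noise-free targets share the same minimizer. For an $$$L_1$$$ loss the corresponding estimate is the median rather than the mean[11].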

Data Acquisition

Low-field MRI data, including 2D T1w and T2w images from 17 subjects, were acquired on a prototype 0.5T MR scanner (Marvel Stone Healthcare Co., Ltd., Wuxi, China). Thirteen subjects were randomly chosen for training, 2 for validation and 2 for testing. For each subject, 14 slices of T1w and T2w images were acquired with 6 NSAs and 4 NSAs. The single-average images are used as the noisy images to be denoised. The averaged T1w and T2w images are used as targets for training the non-N2N models, and images from different NSAs are grouped into noisy image pairs for N2N training. The resolution is 0.7$$$\times$$$0.7$$$\times$$$5 mm$$$^3$$$.
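The pairing can be sketched as below, assuming each NSA of a slice is available as a separate single-average image and that every ordered pair of distinct averages is used; the abstract does not state which pairs were formed, and the function names are hypothetical.

```python
from itertools import permutations

import numpy as np


def n2n_pairs(averages):
    """All ordered (input, target) pairs of distinct single-average images.

    averages: sequence of arrays, one per NSA of the same slice and contrast.
    Noise in different acquisitions is assumed independent, as Noise2Noise requires.
    """
    return [(averages[i], averages[j])
            for i, j in permutations(range(len(averages)), 2)]


def averaged_target(averages):
    """Mean over all averages: the target used for the non-N2N models."""
    return np.mean(np.stack(averages), axis=0)
```

With 6 averages this yields 6 × 5 = 30 ordered pairs per slice, or 15 if the input and target roles are not swapped.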

Result

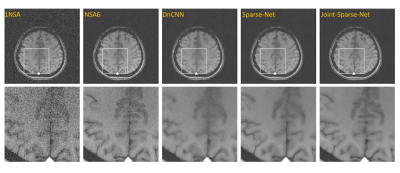

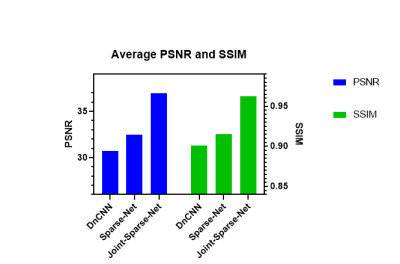

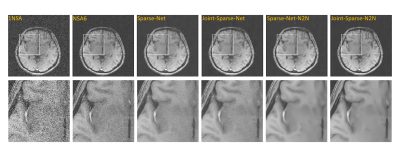

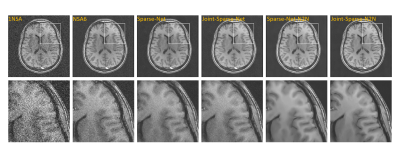

Figure 2 shows the denoising results of DnCNN, Sparse-Net and Joint-Sparse-Net for one set of T1w images. Note that the Joint-Sparse-Net result is obtained by jointly denoising the T1w and T2w images of the same subject. All methods produce results comparable to the NSA6 reference images. Compared with DnCNN, Sparse-Net reduces noise more effectively, supporting the effectiveness of the proposed sparsity-based denoising model. Meanwhile, Joint-Sparse-Net provides sharper edges and structures than Sparse-Net. Figure 3 shows the PSNR and SSIM values for DnCNN, Sparse-Net and Joint-Sparse-Net.

The results of Sparse-Net, Joint-Sparse-Net and their N2N versions are presented in Figure 4. Results obtained with the Noise2Noise strategy have a lower noise level than the NSA6 reference images while maintaining sharp edges and structures. Of all methods, the proposed Joint-Sparse-N2N model achieves the best SNR and preserves the most detail, whereas Sparse-Net-N2N produces over-smoothed results. Figure 5 shows the results from another subject, and the findings are consistent with those in Figure 4.
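The abstract reports PSNR and SSIM (Figure 3) without stating the reference image or intensity range. A minimal PSNR sketch, assuming intensities normalized to [0, 1] and a reference such as the NSA6 image, is shown below; scikit-image also provides `skimage.metrics.peak_signal_noise_ratio` and `skimage.metrics.structural_similarity` for these metrics.

```python
import numpy as np


def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range^2 / MSE)."""
    diff = np.asarray(reference, dtype=float) - np.asarray(estimate, dtype=float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(data_range ** 2 / mse)
```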

Conclusion and Discussion

In this work, we developed a deep learning denoising model, Joint-Sparse-N2N, for multi-contrast low-field MRI images. Experiments demonstrate that the proposed method suppresses noise while preserving anatomical detail. We expect the performance of the proposed method to improve with a larger training dataset. Manual control of the denoising level will be explored in future work.

References

- Marques JP, Simonis FFJ, Webb AG. Low-field MRI: An MR physics perspective. J Magn Reson Imaging 2019;49(6):1528-1542.

- Campbell-Washburn AE, Ramasawmy R, Restivo MC, et al. Opportunities in Interventional and Diagnostic Imaging by Using High-Performance Low-Field-Strength MRI. Radiology 2019;293(2):384-393.

- Koonjoo N, Zhu B, Bagnall GC, Bhutto D, Rosen MS. Boosting the signal-to-noise of low-field MRI with deep learning image reconstruction. Sci Rep 2021;11(1):8248.

- Zhang K, Zuo W, Chen Y, Meng D, Zhang L. Beyond a Gaussian Denoiser: Residual Learning of Deep CNN for Image Denoising. IEEE Trans Image Process 2017;26(7):3142-3155.

- Zhang K, Zuo W, Zhang L. FFDNet: Toward a Fast and Flexible Solution for CNN based Image Denoising. IEEE Trans Image Process 2018.

- Yang D, Sun J. BM3D-Net: A Convolutional Neural Network for Transform-Domain Collaborative Filtering. IEEE Signal Processing Letters 2018;25(1):55-59.

- Hong D, Huang C, Yang C, Li J, Qian Y, Cai C. FFA-DMRI: A Network Based on Feature Fusion and Attention Mechanism for Brain MRI Denoising. Front Neurosci 2020;14:577937.

- Manjón JV, Coupe P. MRI Denoising Using Deep Learning. Cham, 2018. p. 12-19.

- Xie D, Li Y, Yang H, et al. Denoising arterial spin labeling perfusion MRI with deep machine learning. Magn Reson Imaging 2020;68:95-105.

- Lundervold AS, Lundervold A. An overview of deep learning in medical imaging focusing on MRI. Z Med Phys 2019;29(2):102-127.

- Lehtinen J, Munkberg J, Hasselgren J, et al. Noise2Noise: Learning image restoration without clean data. 2018.

- Zhang J, Ghanem B. ISTA-Net: Interpretable optimization-inspired deep network for image compressive sensing. Proceedings of the IEEE conference on computer vision and pattern recognition, 2018. p. 1828-1837.

- Ji S, Xue Y, Carin L. Bayesian Compressive Sensing. IEEE Transactions on Signal Processing 2008;56(6):2346-2356.

- Huang J, Chen C, Axel L. Fast multi-contrast MRI reconstruction. Magn Reson Imaging 2014;32(10):1344-1352.

Figures

Fig 1. Architecture of the proposed Sparse-Net and Joint-Sparse-Net. In the Denoise Block, the noisy image is transformed to a sparse domain to exploit its intrinsic sparsity. In the Joint Denoise Block, a group sparse transform is applied to share similar structural information among the multi-contrast images.

Fig 2. Denoising results of low-field images using DnCNN, Sparse-Net and Joint-Sparse-Net. Sparse-Net and DnCNN are trained on T1w images only, while Joint-Sparse-Net is trained and tested on T1w and T2w data jointly.

Fig 3. The average PSNR and SSIM values of DnCNN, Sparse-Net and Joint-Sparse-Net.

Fig 4. Denoising results of low-field images using Sparse-Net, Joint-Sparse-Net and their N2N versions. Benefiting from the N2N strategy, Sparse-Net-N2N and Joint-Sparse-N2N produce results with greatly improved SNR.

Fig 5. Denoising results of low-field images from another subject using Sparse-Net, Joint-Sparse-Net and their N2N versions. The Joint-Sparse-N2N model suppresses most of the noise and preserves the most detail.

DOI: https://doi.org/10.58530/2022/4345