4331

Correlated and specific features fusion based on attention mechanism for grading hepatocellular carcinoma with contrast-enhanced MR

1 School of Medical Information Engineering, Guangzhou University of Chinese Medicine, Guangzhou, China; 2 Department of Radiology, Guangdong General Hospital, Guangzhou, China; 3 Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China

Synopsis

Contrast-enhanced MR plays an important role in the characterization of hepatocellular carcinoma (HCC). In this work, we propose an attention-based common and specific features fusion network (ACSF-net) for grading HCC with contrast-enhanced MR. Specifically, we introduce correlated and individual component analysis to extract the common and specific features of the contrast-enhanced MR phases. We further propose an attention-based fusion module that adaptively fuses the common and specific features for better grading. Experimental results demonstrate that ACSF-net outperforms previously reported multimodality fusion methods for grading HCC. In addition, the learned weighting coefficients may be useful for clinical interpretation.

Introduction

Hepatocellular carcinoma (HCC) is the fourth most common cause of cancer death worldwide 1. Characterizing the biological aggressiveness of HCC is of great importance for patient management and prognosis prediction. Contrast-enhanced MR has shown promise for the diagnosis and characterization of HCC in clinical practice 2, and many multimodality deep learning models have been applied to the diagnosis and characterization of lesions 3-6. However, lesion characterization based on multimodal feature fusion still faces challenges. First, previous multimodality fusion studies tend to extract the information common to all modalities, neglecting the specific information carried by each individual modality. Second, how to combine such common and specific information for better lesion characterization has not been explored, and the relative importance of the common and specific features has not been made explicit for clinical interpretation. To this end, we propose an attention-based common and specific features fusion network (ACSF-net) for lesion characterization.

Materials and Methods

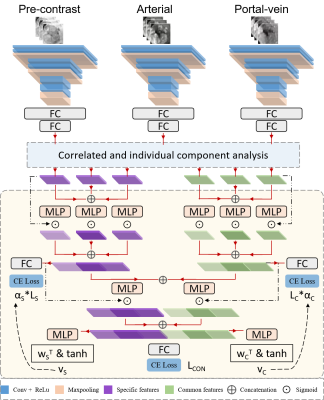
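
Figure 1 outlines the ACSF-net pipeline: per-phase CNN features, a decomposition into common and specific components, and attention-weighted fusion. The Python sketch below illustrates only the last two steps on toy feature vectors and is not the authors' implementation. The common/specific split is a deliberately simplified stand-in (common part = element-wise mean across phases, specific part = each phase's residual from that mean), not the robust correlated and individual component analysis of reference 7. The attention query vector, which a real network would learn, is fixed by hand. The names `split_common_specific` and `attention_fuse` and all numbers are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def split_common_specific(features: Dict[str, Vector]) -> Tuple[Vector, Dict[str, Vector]]:
    """Simplified stand-in for correlated/individual component analysis:
    common = element-wise mean over phases, specific = per-phase residual."""
    phases = list(features)
    dim = len(features[phases[0]])
    common = [sum(features[p][i] for p in phases) / len(phases) for i in range(dim)]
    specific = {p: [features[p][i] - common[i] for i in range(dim)] for p in phases}
    return common, specific


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(components: Dict[str, Vector], query: Vector) -> Tuple[Vector, Dict[str, float]]:
    """Score each component by its dot product with a query vector, normalize
    the scores with softmax, and return the weighted sum plus the weights."""
    names = list(components)
    scores = [sum(q * x for q, x in zip(query, components[n])) for n in names]
    weights = softmax(scores)
    fused = [sum(w * components[n][i] for w, n in zip(weights, names)) for i in range(len(query))]
    return fused, dict(zip(names, weights))


# Toy 3-dimensional "deep features" for the three phases (hypothetical values).
feats = {
    "pre": [0.2, 0.1, 0.0],
    "arterial": [0.9, 0.4, 0.3],
    "portal": [0.5, 0.3, 0.1],
}
common, specific = split_common_specific(feats)
components = {"common": common, **{f"specific_{p}": v for p, v in specific.items()}}
fused, weights = attention_fuse(components, query=[1.0, 0.5, 0.5])
print(weights)
```

The returned `weights` sum to one and play a role loosely analogous to the attention coefficients summarized in Figure 2(d): they state how much each common or phase-specific component contributes to the fused representation.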

This retrospective study was approved by the local institutional review board, and the requirement for informed consent was waived. From October 2012 to December 2018, 112 patients with 117 histologically confirmed HCCs were included. Gd-DTPA-enhanced MR images were acquired for each patient on a 3.0T MR scanner. The pathological diagnosis was based on surgical specimens and comprised 54 low-grade and 63 high-grade HCCs.

Figure 1 shows the proposed ACSF-net. The framework first extracts deep features from each modality (contrast phase) using a parallel CNN architecture. It then performs correlated and individual component analysis 7 on these multimodal deep features to obtain common and specific features. These features are weighted and fused with an attention mechanism 8, and the learned weights account for the differing contributions of the individual modalities and of the common and specific features to the lesion representation.

To train deep learning models on this small medical dataset, image resampling was used for data augmentation, extracting a large number of 3D patches from each phase of each tumor. Three datasets were randomly generated, each containing 3D patches from the three phases of each tumor. Each dataset was randomly divided into a training set (77 HCCs) and a test set (40 HCCs). Training and testing were repeated five times to reduce measurement error, and accuracy, sensitivity, specificity, p-values and the area under the curve (AUC) were averaged over the repetitions.

Results
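
The evaluation protocol described in Materials and Methods repeats a random split of the 117 HCCs into 77 training and 40 test tumors five times. Because many augmented 3D patches come from each tumor, splitting at the tumor level (as the HCC counts suggest) keeps patches of one tumor from appearing in both sets. A minimal Python sketch of that protocol follows. The helper names, seed and toy patch IDs are hypothetical, and any stratification by grade the authors may have used is not stated in the abstract and is not modeled here.

```python
import random
from typing import Dict, List, Tuple


def repeated_tumor_splits(
    tumor_ids: List[str], n_train: int, n_repeats: int, seed: int = 0
) -> List[Tuple[List[str], List[str]]]:
    """Return n_repeats random train/test partitions drawn at the tumor level."""
    rng = random.Random(seed)  # seeded so the splits are reproducible
    splits = []
    for _ in range(n_repeats):
        shuffled = list(tumor_ids)
        rng.shuffle(shuffled)
        splits.append((shuffled[:n_train], shuffled[n_train:]))
    return splits


def patches_for(tumors: List[str], patches_by_tumor: Dict[str, List[str]]) -> List[str]:
    """Collect every augmented 3D patch of the given tumors, so patches of
    one tumor never end up in both the training and the test set."""
    return [patch for t in tumors for patch in patches_by_tumor[t]]


# Toy usage: 117 hypothetical tumor IDs, each with four hypothetical patch IDs.
tumor_ids = [f"HCC{i:03d}" for i in range(117)]
patches_by_tumor = {t: [f"{t}_patch{k}" for k in range(4)] for t in tumor_ids}
splits = repeated_tumor_splits(tumor_ids, n_train=77, n_repeats=5)
train_tumors, test_tumors = splits[0]
train_patches = patches_for(train_tumors, patches_by_tumor)
test_patches = patches_for(test_tumors, patches_by_tumor)
```

Each of the five splits would then be used to train and test a model, and the per-split metrics averaged.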

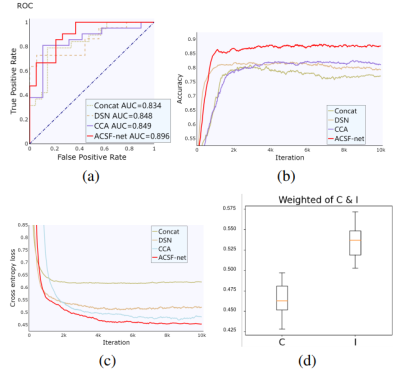

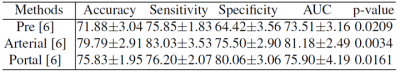

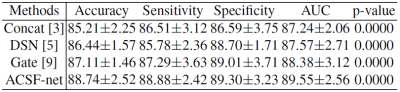

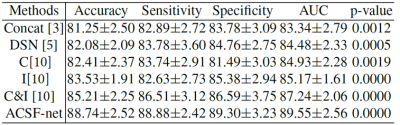
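
The results in this section report accuracy, sensitivity, specificity and AUC averaged over repeated runs. The sketch below shows one standard way to compute these metrics. It assumes high grade is coded as the positive class (1) and that the classifier outputs a probability of high grade; the 0.5 threshold, the helper names `binary_metrics` and `average_over_repeats`, and the toy numbers are illustrative assumptions, not values from the study. AUC uses the rank (Mann-Whitney) formulation, which equals the area under the empirical ROC curve.

```python
from statistics import mean
from typing import Dict, List


def binary_metrics(labels: List[int], scores: List[float], threshold: float = 0.5) -> Dict[str, float]:
    """labels: 1 = high grade, 0 = low grade (assumed coding);
    scores: predicted probability of high grade. Assumes both classes occur."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    tn = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 0)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    # AUC = probability that a random positive outscores a random negative (ties count 1/2).
    pairs = [1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg]
    return {
        "accuracy": (tp + tn) / len(labels),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "auc": sum(pairs) / len(pairs),
    }


def average_over_repeats(runs: List[Dict[str, float]]) -> Dict[str, float]:
    """Average each metric over the repeated train/test runs."""
    return {k: mean(r[k] for r in runs) for k in runs[0]}


# Two toy runs with made-up labels and scores.
run1 = binary_metrics([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1])
run2 = binary_metrics([1, 0], [0.8, 0.2])
summary = average_over_repeats([run1, run2])
```

In the first toy run one positive and one negative are misclassified, so accuracy, sensitivity and specificity are all 0.5, while the ranking still gives an AUC of 0.75.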

Table 1 shows the performance of typical 3D CNNs 6 applied to the arterial, portal-vein and pre-contrast phases for HCC grading. The arterial phase outperformed the portal-vein phase, and the pre-contrast phase performed worst. Table 2 compares different fusion methods for HCC grading based on the common and specific features of contrast-enhanced MR. The proposed ACSF-net performed significantly better than the other fusion methods, including concatenation 3 and DSN fusion 5. Compared with the gated fusion model 9 used in multimodal medical image fusion, the average accuracy of the proposed method increased by 1.34%. Figure 2(a-c) shows the ROC curves, accuracy curves and loss curves of the different fusion methods; compared with the existing concatenation 3, DSN 5 and CCA 4 fusion methods, our method achieved a significant improvement. Figure 2(d) summarizes, as box plots, the final attention weights learned through iterative optimization of the network. Table 3 shows the results of the ablation study: specific features appear to be better than common features for HCC characterization 10, while combining common and specific features further improves performance. Finally, the accuracy of the proposed ACSF-net reached 88.74%, significantly higher than that of any individual modality.

Discussion

Our study indicates that the arterial phase is better than the portal-vein and pre-contrast phases for HCC grading, which is consistent with a previously reported study, as the arterial phase is more closely related to the aggressiveness of HCC 4. In addition, combining common and specific features yielded improved performance, indicating that the specific features of each modality may carry characteristics that are indispensable for lesion characterization. The proposed ACSF-net also outperformed previously reported multimodality fusion methods for lesion characterization. This may be because we use attention-based deep supervision to balance the importance of the correlated and specific features. Finally, the attention coefficients (Figure 2(d)) may help reveal how strongly the different modalities and feature types relate to the disease, supporting clinical interpretation. To our knowledge, correlated and individual component analysis has not previously been investigated for multimodal medical images, even though combining multiple modalities for better diagnosis or characterization of neoplasms is highly desired in clinical practice.

Conclusion

We proposed ACSF-net to improve lesion characterization based on multimodal fusion; it outperformed previously reported multimodal fusion methods. The proposed method and findings may benefit multimodal fusion for lesion characterization.

Acknowledgements

This research is supported by a grant from the National Natural Science Foundation of China (NSFC: 81771920).

References

1 Bray F, Ferlay J, Soerjomataram I, Siegel RL, Torre LA, Jemal A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin 2018;68:394-424.

2 Choi JY, Lee JM, Sirlin CB. CT and MR imaging diagnosis and staging of hepatocellular carcinoma: part II. Extracellular agents, hepatobiliary agents, and ancillary imaging features. Radiology 2014;273(1):30-50.

3 Dou T, Zhang L, Zhou W. 3D deep feature fusion in contrast-enhanced MR for malignancy characterization of hepatocellular carcinoma. IEEE 15th International Symposium on Biomedical Imaging 2018:29-33.

4 Yao J, Zhu X, Zhu F, Huang J. Deep correlational learning for survival prediction from multi-modality data. International Conference on Medical Image Computing and Computer-Assisted Intervention 2017:406-414.

5 Zhou W, Wang G, Xie G, Zhang L. Grading of hepatocellular carcinoma based on diffusion weighted images with multiple b-values using convolutional neural networks. Medical Physics 2019;46(9):3951-3960.

6 Hamm CA, Wang CJ, Savic LJ, et al. Deep learning for liver tumor diagnosis part I: development of a convolutional neural network classifier for multi-phasic MRI. European Radiology 2019;29(7):3338-3347.

7 Panagakis Y, Nicolaou MA, Zafeiriou S, Pantic M. Robust correlated and individual component analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence 2015;38(8):1665-1678.

8 Hori C, Hori T, Lee TY, et al. Attention-based multimodal fusion for video description. IEEE International Conference on Computer Vision 2017:4193-4202.

9 Valanarasu JMJ, Oza P, Hacihaliloglu I, Patel VM. Medical transformer: Gated axial-attention for medical image segmentation. arXiv preprint arXiv:2102.10662, 2021.

10 Wang Z, Lu J, Lin R, et al. Correlated and individual multi-modal deep learning for RGB-D object recognition. arXiv preprint arXiv:1604.01655, 2016.
