4046

1D Convolutional Neural Network as Regularizer for Learning DCE-MRI Reconstruction

Zhengnan Huang1,2, Jonghyun Bae1,2, Eddy Solomon3, Linda Moy1,2, Sungheon Gene Kim3, Patricia M. Johnson1, and Florian Knoll1,4

1Center for Biomedical Imaging, Radiology, New York University School of Medicine, New York, NY, United States, 2Vilcek Institute of Graduate Biomedical Sciences, New York University School of Medicine, New York, NY, United States, 3Department of Radiology, Weill Cornell Medical College, New York, NY, United States, 4Friedrich-Alexander-University of Erlangen-Nürnberg, Erlangen, Germany

Synopsis

In this study, we propose a variational network (VN) reconstruction algorithm with a 1-dimensional convolutional neural network (CNN) as a temporal regularizer for DCE-MRI reconstruction. We used our newly developed breast perfusion simulation pipeline to generate simulated data and train the reconstruction model. The machine learning (ML) reconstruction shows non-inferior structural similarity and improved visual image quality when compared with the iGRASP reconstruction. The ML reconstruction also takes much less time than the iGRASP reconstruction (~2 minutes versus ~30 to ~60 minutes per case).

INTRODUCTION

Despite the rich information provided by radial DCE-MRI, long reconstruction times and the inherent trade-off between temporal and spatial resolution limit its clinical application. In this research, we propose a variational network (VN) [1] reconstruction algorithm with a 1-dimensional convolutional neural network (CNN) as a temporal regularizer. We expect the learned regularizer to detect and remove undersampling artifacts more efficiently [2]. Taking advantage of our newly developed breast perfusion simulation pipeline, we were able to train the reconstruction model with ground truth data that have simultaneously high temporal and spatial resolution. The machine learning (ML) reconstruction shows non-inferior structural similarity and improved visual image quality when compared with the iGRASP [3] reconstruction. The ML reconstruction also takes much less time than the iGRASP reconstruction.

METHODS

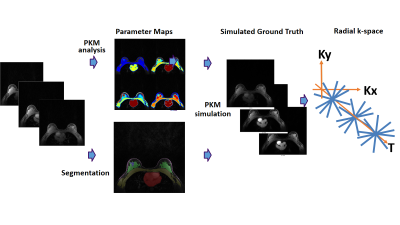

Data Simulation

To generate ground truth images and k-space data for training, we used our newly developed simulation pipeline [4]. The pipeline is built on manually segmented regions and the contrast kinetic parameters estimated for those regions. The underlying breast images were acquired using a 3T MRI scanner, a 16-channel breast coil, and a 3D stack-of-stars spoiled gradient echo sequence with golden-angle spoke ordering. The total scan time was 150 seconds, with contrast agent (Gadavist, Bayer) injected 60 seconds into the scan. GRASP-Pro [5] was used for image reconstruction. One slice per subject, either a slice containing a suspicious lesion or the central slice, was selected to build the simulation pipeline. For the selected slices, we performed pharmacokinetic model analysis using the two-compartment exchange model (TCM) to estimate the contrast kinetic parameters. Manual segmentation was performed to delineate the anatomical regions. T1 values for the anatomical regions were assigned according to literature values. The simulation pipeline was then used to generate DCE-MRI images with different combinations of contrast kinetic parameters for the different anatomical regions.
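As a small illustration of the golden-angle spoke ordering mentioned above, the following NumPy sketch computes spoke angles and groups consecutive spokes into time frames. It is not taken from the simulation pipeline; the function names and the value of 13 spokes per frame are hypothetical, and the 22 frames simply mirror the number of time points used by the reconstruction network.

```python
import numpy as np

# Golden angle for radial MRI: 180 degrees divided by the golden ratio.
GOLDEN_ANGLE_DEG = 180.0 / ((1.0 + np.sqrt(5.0)) / 2.0)  # about 111.246 degrees

def spoke_angles(n_spokes):
    """Angle of each consecutively acquired spoke, wrapped to [0, 180)."""
    return (np.arange(n_spokes) * GOLDEN_ANGLE_DEG) % 180.0

def bin_spokes(angles, spokes_per_frame):
    """Group consecutive spokes into time frames, dropping any remainder."""
    n_frames = len(angles) // spokes_per_frame
    return angles[: n_frames * spokes_per_frame].reshape(n_frames, spokes_per_frame)

# Hypothetical example: 22 frames of 13 spokes each.
frames = bin_spokes(spoke_angles(22 * 13), 13)
```

Because each new spoke falls into the largest remaining angular gap, any group of consecutive spokes covers k-space fairly uniformly, which is what allows the same acquisition to be binned at different temporal resolutions.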

The data were randomly split into training (51), validation (5) and testing (5) sets. Simulated k-space data and the original coil sensitivities (estimated by dividing the coil images by the ‘sum-of-squares’ image [6]) were provided as the input to the reconstruction network.
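The coil sensitivity estimate described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the code used in this work: the function name, the array layout (coils first) and the small `eps` guard against division by zero are assumptions.

```python
import numpy as np

def estimate_coil_sensitivities(coil_images, eps=1e-12):
    """Divide each coil image by the root-sum-of-squares combination.

    coil_images: complex array of shape (n_coils, ny, nx).
    eps: hypothetical guard against division by zero in empty background.
    """
    rss = np.sqrt(np.sum(np.abs(coil_images) ** 2, axis=0))
    return coil_images / (rss + eps)

# Random stand-in for 16 coil images (not real data).
rng = np.random.default_rng(0)
coil_images = rng.standard_normal((16, 8, 8)) + 1j * rng.standard_normal((16, 8, 8))
sens = estimate_coil_sensitivities(coil_images)

# By construction, the squared magnitudes of the maps sum to about 1 per pixel.
power = np.sum(np.abs(sens) ** 2, axis=0)
```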

Model Architecture

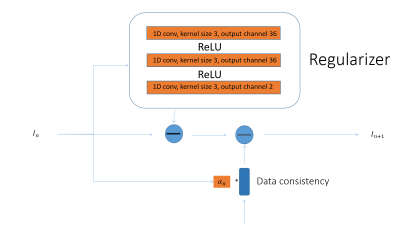

We designed the regularizer in the network as a three-layer CNN (Figure 2). Each of the three layers uses a 1D convolution with a kernel size of 5 (zero padding of 2). The input and output of the regularizer are vectors whose length equals the 22 time points. The regularizer treats the complex signal as 2 channels of real-valued signal. The model resembles the iGRASP reconstruction process, which alternates between data consistency and regularization. This allows a direct comparison between machine learning and traditional iterative reconstruction.
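The forward pass of such a regularizer can be sketched as follows. This is a NumPy illustration with random weights, not the trained PyTorch model: the number of hidden feature channels (`N_FEATURES = 16`) is not given in the text and is a hypothetical choice, as are the function names. The ReLU after every layer follows the Figure 2 caption.

```python
import numpy as np

def conv1d(x, weight, bias):
    """1D convolution along time with zero padding (2 for a kernel of size 5).

    x: (c_in, n_t), weight: (c_out, c_in, k), bias: (c_out,).
    Implemented as cross-correlation, as is usual in deep learning libraries.
    """
    c_out, c_in, k = weight.shape
    pad = k // 2
    n_t = x.shape[1]
    xp = np.pad(x, ((0, 0), (pad, pad)))
    out = np.empty((c_out, n_t))
    for t in range(n_t):
        window = xp[:, t:t + k]  # (c_in, k) neighbourhood around time point t
        out[:, t] = np.tensordot(weight, window, axes=([1, 2], [0, 1])) + bias
    return out

def regularizer(signal, params):
    """Apply three conv + ReLU layers to one complex time course."""
    x = np.stack([signal.real, signal.imag])  # complex signal as 2 real channels
    for weight, bias in params:
        x = np.maximum(conv1d(x, weight, bias), 0.0)  # ReLU after each layer
    return x[0] + 1j * x[1]

N_T = 22         # time points, as stated in the text
N_FEATURES = 16  # hypothetical hidden channel count
rng = np.random.default_rng(0)
layer_shapes = [(N_FEATURES, 2), (N_FEATURES, N_FEATURES), (2, N_FEATURES)]
params = [(0.1 * rng.standard_normal((c_out, c_in, 5)), np.zeros(c_out))
          for c_out, c_in in layer_shapes]

signal = rng.standard_normal(N_T) + 1j * rng.standard_normal(N_T)
out = regularizer(signal, params)  # complex vector of length 22
```

Because the kernel only spans the time axis, the same small set of weights is applied to every pixel's time course, which keeps the regularizer lightweight compared with 2D or 3D convolutions.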

Model Training and Evaluation

The model was trained for 70 epochs on one GPU (NVIDIA V100 Tensor Core). We used a batch size of one and Adam as the optimizer. The learning rate was initially set to 0.01. The model has 28 iteration stages. To fit the model on a single GPU, gradient checkpointing was used to reduce memory consumption. The mean squared error between the reconstructed image and the simulated ground truth was used as the loss.
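The unrolled structure of 28 stages and the mean squared error loss can be illustrated with a toy example. The sketch below assumes the usual variational network update, in which each stage subtracts a regularizer output and a weighted data-consistency gradient from the current estimate. Everything else is a stand-in: a random matrix replaces the coil sensitivities and NUFFT, a zero function replaces the trained 1D CNN, the step sizes are fixed rather than learned, and no training is performed.

```python
import numpy as np

N_STAGES = 28  # number of unrolled stages stated in the text

def unrolled_reconstruction(y, forward, adjoint, regularizers, step_sizes):
    """Alternate a regularization step and a data-consistency step per stage."""
    x = adjoint(y)  # assumed initialization: adjoint of the measured data
    for reg, lam in zip(regularizers, step_sizes):
        data_grad = adjoint(forward(x) - y)  # gradient of 0.5 * ||A x - y||^2
        x = x - reg(x) - lam * data_grad
    return x

def mse(x, target):
    """Mean squared error for complex arrays."""
    return float(np.mean(np.abs(x - target) ** 2))

# Toy stand-ins (hypothetical): random matrix as forward operator.
rng = np.random.default_rng(0)
m, n = 40, 20
A = (rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))) / np.sqrt(2 * m)
forward = lambda x: A @ x
adjoint = lambda k: A.conj().T @ k

target = rng.standard_normal(n) + 1j * rng.standard_normal(n)
y = forward(target)

step = 1.0 / np.linalg.norm(A, 2) ** 2  # fixed here; typically learned in a VN
regularizers = [lambda x: np.zeros_like(x)] * N_STAGES  # placeholder for the CNN
step_sizes = [step] * N_STAGES

x0 = adjoint(y)
x_rec = unrolled_reconstruction(y, forward, adjoint, regularizers, step_sizes)
loss_before, loss_after = mse(x0, target), mse(x_rec, target)
```

In an actual training setup, the loss would be backpropagated through all stages to update the regularizer weights, which is where gradient checkpointing helps reduce memory use.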

For comparison, we ran the iGRASP reconstruction on the same hardware (Cray CS-Storm 500NX) and software platform (Linux 3.10.0, Python 3.6.8, PyTorch 1.1.0, TorchKBNufft 0.2.1 [7], and CUDA 9.0). Only temporal regularization was used. The regularization parameter was set to 2.5% of the maximum adjoint NUFFT signal. We ran iGRASP for 32 and 64 iterations.
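The choice of regularization parameter can be written out as a short sketch. The weight follows the 2.5% rule stated above. The penalty shown is an assumption: the text only says that temporal regularization was used, and the sketch takes it to be the first-order temporal finite difference (temporal total variation) described in the iGRASP paper [3]. Function names and the time-first array layout are hypothetical.

```python
import numpy as np

def regularization_weight(adjoint_image, fraction=0.025):
    """Regularization weight as a fraction of the peak adjoint NUFFT magnitude."""
    return fraction * np.max(np.abs(adjoint_image))

def temporal_tv(x):
    """l1 norm of first-order finite differences along the time axis (axis 0)."""
    return float(np.sum(np.abs(np.diff(x, axis=0))))

# Toy time series with 3 time points and 1 pixel (not real data).
series = np.array([[0.0 + 0.0j], [1.0 + 0.0j], [3.0 + 0.0j]])
lam = regularization_weight(series)  # 2.5% of the peak magnitude 3.0
penalty = temporal_tv(series)        # |1 - 0| + |3 - 1|
```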

RESULTS

Our 1D regularization reconstruction network outperformed the iGRASP reconstruction in terms of MSE while producing comparable structural similarity. Visual image quality was also satisfactory (Figure 3). However, the averaged temporal signal curves do not align well with the ground truth (Figure 4). The iGRASP reconstruction took ~30 minutes (32 iterations) to ~60 minutes (64 iterations) per case, while the machine learning reconstruction took ~2 minutes per case.

DISCUSSION

In this research, we developed a regularizer for machine learning reconstruction of DCE-MRI data. We were able to train the machine learning model and achieved high structural similarity. The machine learning reconstruction takes much less time than iGRASP to reach similar structural similarity. We did not recover the averaged signal curve accurately, which limits our ability to map the reconstructed images back to the simulated parameter space. This could be caused by suboptimal hyperparameters or limited training data. Future research will focus on temporal signal fidelity, which is needed to correctly estimate the pharmacokinetic parameters.

CONCLUSION

This research demonstrated that a 1D regularizer in a variational network can be trained to reconstruct DCE-MRI images. It produced reconstructions with high structural similarity to the ground truth and was considerably faster than iGRASP (~2 minutes versus ~30 to ~60 minutes per case). Accurately reconstructing the contrast enhancement curve will be the focus of our future investigation.

Acknowledgements

We thank Matthew Muckley for his assistance with TorchKBNufft. We thank Li Feng for his valuable input to this work regarding iGRASP.

References

1. Hammernik K, Klatzer T, Kobler E, Recht MP, Sodickson DK, Pock T, et al. Learning a variational network for reconstruction of accelerated MRI data. Magn Reson Med. 2018;79: 3055–3071.
2. Knoll F, Hammernik K, Zhang C, Moeller S, Pock T, Sodickson DK, et al. Deep-Learning Methods for Parallel Magnetic Resonance Imaging Reconstruction: A Survey of the Current Approaches, Trends, and Issues. IEEE Signal Process Mag. 2020;37: 128–140.
3. Feng L, Grimm R, Block KT, Chandarana H, Kim S, Xu J, et al. Golden-angle radial sparse parallel MRI: combination of compressed sensing, parallel imaging, and golden-angle radial sampling for fast and flexible dynamic volumetric MRI. Magn Reson Med. 2014;72: 707–717.
4. Huang Z, Bae J, Johnson PM, Sood T, Heacock L, Fogarty J, et al. A Simulation Pipeline to Generate Realistic Breast Images for Learning DCE-MRI Reconstruction. Machine Learning for Medical Image Reconstruction. 2021. pp. 45–53. doi:10.1007/978-3-030-88552-6_5
5. Feng L, Wen Q, Huang C, Tong A, Liu F, Chandarana H. GRASP-Pro: imProving GRASP DCE-MRI through self-calibrating subspace-modeling and contrast phase automation. Magn Reson Med. 2020;83: 94–108.
6. Pruessmann KP, Weiger M, Scheidegger MB, Boesiger P. SENSE: sensitivity encoding for fast MRI. Magn Reson Med. 1999;42: 952–962.
7. mmuckley. GitHub - mmuckley/torchkbnufft: A high-level, easy-to-deploy non-uniform Fast Fourier Transform in PyTorch. [cited 9 Nov 2021]. Available: https://github.com/mmuckley/torchkbnufft

Figures

Simulation Pipeline. Original data were used for PKM analysis, which generates 4 pharmacokinetic parameters for each pixel. Manual segmentation was performed. These 4 parameters were treated as ground truth and used to simulate contrast enhancement for different anatomical regions. Applying the contrast enhancement to the baseline image resulted in our simulated ground truth. K-space data were simulated from the simulated ground truth.

Model Architecture. One stage of the reconstruction network is shown. The architecture of the reconstruction model follows the variational network structure. The orange part of the diagram contains learnable parameters. In each stage, the regularizer contains 3 layers of 1D convolution, each followed by a ReLU activation function. The convolution kernel size is 5 and zero padding of 2 is used. The complex images were treated as images with 2 separate real-valued channels.

Reconstructed Images. One malignant and one benign case from the validation set are shown. The target, the adjoint NUFFT of the simulated k-space, the 1D-VN reconstruction and the iGRASP reconstructions (32 and 64 iterations) are shown in each row, followed by a zoomed-in view of the lesion. To show the temporal differences, images were scaled according to the largest and smallest values in each time series.

Averaged signal curves based on anatomical regions (benign or malignant lesion and glandular tissue).

DOI: https://doi.org/10.58530/2022/4046