3805

Improving image quality of ultralow-dose pediatric total-body PET/CT using deep learning technique

¹Lauterbur Research Center for Biomedical Imaging, Shenzhen Institute of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China; ²National Innovation Center for High Performance Medical Devices, Shenzhen, China; ³Department of Nuclear Medicine, Sun Yat-sen University Cancer Center, Guangzhou, China; ⁴Central Research Institute, United Imaging Healthcare Group, Shanghai, China

Synopsis

Young children are more sensitive to radiation than adults, and the effective dose they absorb can be 4-5 times that of adults. In PET imaging, obtaining high-quality images with a low injected tracer dose is therefore clinically important. Here, we use artificial intelligence techniques combined with prior CT information to improve the quality of total-body PET/CT images from ultralow-dose pediatric FDG scans. The results show that image quality equivalent to a 600 s acquisition can be obtained from a 15 s acquisition.

INTRODUCTION

In the clinical diagnosis of tumors in young children, PET imaging is nearly indispensable. CT imaging is also required to localize tumors and to correct the PET images for attenuation. The rate of radiation absorption in infants and children can be about 4-5 times that of adults[1,2], so reducing the radiation dose during their imaging is clinically important. This work uses artificial intelligence techniques to reduce the PET dose further, beyond what a low-dose acquisition protocol on the device side already achieves.

METHODS

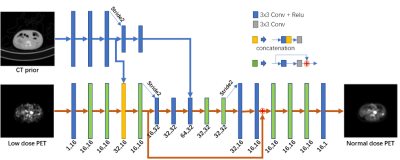

A total of 44 pediatric patients (weight range 8.5–50.1 kg; ages 1–12 years) who underwent total-body PET/CT on a uEXPLORER scanner (United Imaging Healthcare, Shanghai, China) were retrospectively enrolled[3-5]. 18F-FDG was administered at approximately 3.7 MBq/kg, and PET data were acquired for 600 s; low-dose total-body CT scans were also acquired. Low-dose PET images (0.037–0.925 MBq/kg) were simulated by truncating the list-mode data to reduce the count density.

The proposed neural network for low-dose PET image synthesis is shown in Figure 1. After evaluating several state-of-the-art deep learning architectures, including ResNet[6] and U-Net[7], we adopted a U-Net encoder-decoder with residual modules as the main framework and introduced prior CT information into the network at multiple scales. Fusing high-level features extracted separately from each modality can better integrate their complementary information[8,9]. We therefore fed high-level features extracted from the CT images after N convolutional layers (here, N = 3, 5) into the encoder of the network as prior information. K-fold cross-validation was used to compensate for the limited number of training samples.
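The count-reduction and cross-validation steps above can be illustrated with a minimal, standard-library Python sketch. It assumes that counts scale linearly with injected activity (ignoring decay, randoms and dead time), so a target dose maps to the first 600 × dose / 3.7 seconds of the list-mode stream; under that assumption 0.037, 0.0925 and 0.925 MBq/kg correspond to 6 s, 15 s and 150 s. The `Event` record, the helper names, the fold count `k` and the seed are hypothetical, since the abstract does not specify them, and no real uEXPLORER list-mode file is read. Splitting folds by patient rather than by slice is also an assumption, chosen so that slices from one child never appear in both the training and evaluation sets.

```python
import random
from dataclasses import dataclass
from typing import Iterator, List, Sequence, Tuple

FULL_DOSE_MBQ_PER_KG = 3.7  # approximate administered activity (from the text)
FULL_ACQ_S = 600.0          # full acquisition time in seconds (from the text)


@dataclass
class Event:
    """Hypothetical minimal list-mode event; only the detection time is kept."""
    t: float  # seconds since the start of the acquisition


def equivalent_time(target_dose_mbq_per_kg: float) -> float:
    """Seconds of full-dose data holding as many counts as the target dose,
    assuming counts scale linearly with activity (decay, randoms, dead time ignored)."""
    return FULL_ACQ_S * target_dose_mbq_per_kg / FULL_DOSE_MBQ_PER_KG


def truncate_list_mode(events: Sequence[Event], target_dose_mbq_per_kg: float) -> List[Event]:
    """Simulate a low-dose scan by keeping only the earliest events."""
    t_max = equivalent_time(target_dose_mbq_per_kg)
    return [e for e in events if e.t < t_max]


def patient_k_folds(patient_ids: Sequence[str], k: int, seed: int = 0
                    ) -> Iterator[Tuple[List[str], List[str]]]:
    """Yield (train, eval) patient lists; each patient is evaluated exactly once."""
    ids = sorted(patient_ids)
    random.Random(seed).shuffle(ids)
    folds = [ids[i::k] for i in range(k)]
    for i in range(k):
        train = [p for j, fold in enumerate(folds) if j != i for p in fold]
        yield train, folds[i]


if __name__ == "__main__":
    for dose in (0.037, 0.0925, 0.925):
        print(f"{dose} MBq/kg -> {equivalent_time(dose):.1f} s")
    # Synthetic stream: one event every 0.5 s, offset to avoid boundary ties.
    stream = [Event(t=0.25 + 0.5 * i) for i in range(1200)]
    print(len(truncate_list_mode(stream, 0.0925)))
    patients = [f"P{n:02d}" for n in range(44)]
    for train, held_out in patient_k_folds(patients, k=4):
        print(len(train), len(held_out))
```

Truncating by time rather than randomly thinning events is what "truncating the list-mode data" suggests; a random-thinning variant would instead keep each event with probability dose / 3.7 over the full 600 s.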

The network inputs were the low-dose PET images and the low-dose CT images, and the full-dose PET images served as the ground truth. To enhance the network's ability to recover anatomical structures and texture details, the loss function combined an L2 loss with a perceptual loss[10]. The network was implemented in the PyTorch deep learning framework and optimized with the Adam optimizer[11] using a cosine annealing learning-rate schedule[12] to speed up convergence.
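A plain-Python sketch of the two training ingredients described above: a loss that adds a weighted feature-space (perceptual) term to the pixel-wise L2 term, and the single-cycle cosine-annealing learning-rate rule. In Johnson et al.[10] the perceptual features are activations of a pretrained VGG network; the abstract does not say which feature extractor or weighting was used here, so `gradient_features` (finite differences) is only a hypothetical stand-in, and `lam`, `lr_max` and `lr_min` are illustrative values rather than the authors' settings.

```python
import math
from typing import Callable, List, Sequence

Image = List[List[float]]  # one 2D slice as nested lists (rows of pixels)


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def flatten(img: Image) -> List[float]:
    return [v for row in img for v in row]


def gradient_features(img: Image) -> List[float]:
    """Hypothetical stand-in feature extractor: horizontal and vertical
    finite differences. A real perceptual loss uses pretrained CNN activations."""
    h, w = len(img), len(img[0])
    dx = [img[i][j + 1] - img[i][j] for i in range(h) for j in range(w - 1)]
    dy = [img[i + 1][j] - img[i][j] for i in range(h - 1) for j in range(w)]
    return dx + dy


def combined_loss(pred: Image, target: Image, lam: float = 0.1,
                  features: Callable[[Image], List[float]] = gradient_features) -> float:
    """Pixel-wise L2 (MSE) term plus lam times the feature-space L2 term."""
    pixel_term = mse(flatten(pred), flatten(target))
    perceptual_term = mse(features(pred), features(target))
    return pixel_term + lam * perceptual_term


def cosine_annealing_lr(step: int, total_steps: int,
                        lr_max: float = 1e-3, lr_min: float = 0.0) -> float:
    """Learning rate decaying from lr_max to lr_min along half a cosine period."""
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * step / total_steps))


if __name__ == "__main__":
    target = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
    shifted = [[v + 0.5 for v in row] for row in target]          # same structure, offset
    noisy = [[1.0, 3.0, 3.0], [4.0, 4.0, 6.0], [7.0, 8.0, 10.0]]  # structure disturbed
    print(combined_loss(shifted, target), combined_loss(noisy, target))
    print([round(cosine_annealing_lr(s, 100), 6) for s in (0, 50, 100)])
```

The usage lines show why the extra term helps with texture: a uniform intensity offset is penalized only by the pixel term, while a prediction that disturbs local structure is also penalized in feature space. In PyTorch, the same closed-form schedule is what `torch.optim.lr_scheduler.CosineAnnealingLR` follows when attached to a `torch.optim.Adam` optimizer.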

RESULTS

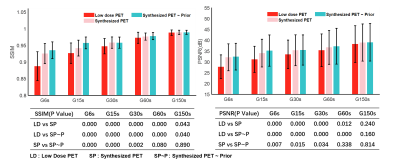

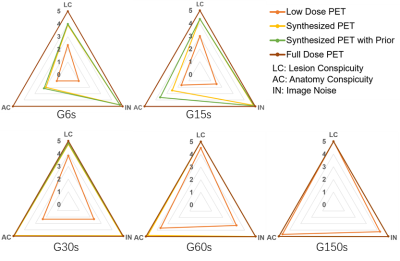

Image quality was assessed by subjective and objective analyses. The subjective analysis used a 5-point scale (5 = excellent), and the objective metrics were the structural similarity index (SSIM) and the peak signal-to-noise ratio (PSNR).

Figure 2 shows PET images at five dose levels together with the corresponding images synthesized by the artificial intelligence methods. The synthesized PET images show markedly less noise than the low-dose PET images, and the images generated by the model combining PET with prior CT reflected the underlying anatomical patterns better than those generated by the PET-only model. Figure 3 shows the average SSIM and PSNR values of the synthesized and low-dose images, each computed relative to the full-dose images, for all patients in the evaluation set.

Two nuclear radiologists (a senior radiologist with >10 years of experience and a radiologist with >5 years of experience) independently rated PET image quality on a 5-point Likert scale for (1) the overall impression of image quality, (2) the conspicuity of the major suspected malignant lesions, (3) the conspicuity of organ anatomical structures, and (4) image noise. Results are shown in Figure 4.
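For readers reproducing the objective analysis, a minimal plain-Python sketch of the two metrics follows. PSNR is 10·log10(R²/MSE), where R is the data range of the reference image; SSIM compares means, variances and covariance, with stabilizing constants C1 = (0.01R)² and C2 = (0.03R)². The abstract does not state the data range, masking or window settings used, so this sketch assumes R is supplied by the caller (for example, the maximum of the full-dose image) and, as a simplification, evaluates SSIM over one global window instead of the usual sliding Gaussian window. Its values will therefore differ from library implementations such as scikit-image's `structural_similarity`.

```python
import math
from typing import Sequence


def psnr(ref: Sequence[float], test: Sequence[float], data_range: float) -> float:
    """Peak signal-to-noise ratio in dB relative to a full-dose reference."""
    err = sum((r - t) ** 2 for r, t in zip(ref, test)) / len(ref)
    return math.inf if err == 0 else 10.0 * math.log10(data_range ** 2 / err)


def global_ssim(ref: Sequence[float], test: Sequence[float], data_range: float,
                k1: float = 0.01, k2: float = 0.03) -> float:
    """SSIM computed over a single window covering the whole image (simplified)."""
    n = len(ref)
    mu_x, mu_y = sum(ref) / n, sum(test) / n
    var_x = sum((x - mu_x) ** 2 for x in ref) / n
    var_y = sum((y - mu_y) ** 2 for y in test) / n
    cov = sum((x - mu_x) * (y - mu_y) for x, y in zip(ref, test)) / n
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))


if __name__ == "__main__":
    full = [0.0, 2.0, 4.0, 8.0, 4.0, 2.0, 0.0, 1.0]        # toy full-dose profile
    noise = [0.8, -0.6, 0.9, -1.0, 0.7, -0.9, 0.6, -0.5]   # fixed pseudo-noise
    low = [f + n for f, n in zip(full, noise)]              # noisier "low-dose"
    synth = [f + 0.25 * n for f, n in zip(full, noise)]     # less noisy "synthesized"
    r = max(full)
    for name, img in (("low", low), ("synth", synth)):
        print(name, round(psnr(full, img, r), 2), round(global_ssim(full, img, r), 4))
```

The toy profiles only illustrate the direction of the metrics (less residual noise gives higher PSNR and SSIM); they are not data from this study.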

DISCUSSION AND CONCLUSION

This proof-of-concept study shows that artificial intelligence techniques can effectively improve the quality of low-dose PET images. Among all the synthesized images, those generated by the network model that incorporates the CT prior had the highest average image quality, with less noise and better detail than those from the PET-only model. This indicates the value of introducing CT images, which carry rich anatomical information, into the imaging model. Based on the quantitative and qualitative results, enhancing ultralow-dose total-body PET/CT images with artificial intelligence techniques can substantially improve image quality and thus allow the injected tracer dose to be reduced, which is of great importance for the clinical diagnosis of dose-sensitive pediatric patients.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (32022042, 81871441, 62001465) and the Shenzhen Excellent Technological Innovation Talent Training Project of China (RCJC20200714114436080).

References

1. J. Stauss et al., "Guidelines for 18F-FDG PET and PET-CT imaging in paediatric oncology," European Journal of Nuclear Medicine and Molecular Imaging, vol. 35, pp. 1581-1588, 2008.

2. C. P. W. Cox et al., "A dedicated paediatric [18F]FDG PET/CT dosage regimen," EJNMMI Research, vol. 11, pp. 1-10, 2021.

3. X. Zhang et al., "Total-Body Dynamic Reconstruction and Parametric Imaging on the uEXPLORER," The Journal of Nuclear Medicine, vol. 61, pp. 285-291, 2020.

4. H. Tan et al., "Total-body PET/CT using half-dose FDG and compared with conventional PET/CT using full-dose FDG in lung cancer," European Journal of Nuclear Medicine and Molecular Imaging, vol. 48, pp. 1966-1975, 2020.

5. Y.-M. Zhao et al., "Image quality and lesion detectability in low-dose pediatric 18F-FDG scans using total-body PET/CT," European Journal of Nuclear Medicine and Molecular Imaging, pp. 1-8, 2021.

6. K. He, X. Zhang, S. Ren, and J. Sun, "Deep Residual Learning for Image Recognition," 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770-778, 2016.

7. O. Ronneberger, P. Fischer, and T. Brox, "U-Net: Convolutional Networks for Biomedical Image Segmentation," in MICCAI, 2015.

8. Y. LeCun, Y. Bengio, and G. Hinton, "Deep Learning," Nature, vol. 521, pp. 436-444, 2015.

9. A. Kumar, M. Fulham, D. Feng, and J. Kim, "Co-Learning Feature Fusion Maps From PET-CT Images of Lung Cancer," IEEE Transactions on Medical Imaging, vol. 39, pp. 204-217, 2020.

10. J. Johnson, A. Alahi, and L. Fei-Fei, "Perceptual Losses for Real-Time Style Transfer and Super-Resolution," ArXiv, vol. abs/1603.08155, 2016.

11. D. P. Kingma and J. Ba, "Adam: A Method for Stochastic Optimization," CoRR, vol. abs/1412.6980, 2015.

12. I. Loshchilov and F. Hutter, "SGDR: Stochastic Gradient Descent with Warm Restarts," in ICLR, 2017.

Figures