3794

4D-MRI image enhancement via a deep learning-based adversarial network

1Department of Health Technology and Informatics, The Hong Kong Polytechnic University, Hong Kong, China

Synopsis

We developed and evaluated a deep learning technique, based on conditional adversarial networks, for enhancing the image quality of four-dimensional MRI (4D-MRI). Quantitative and qualitative evaluations showed that the proposed model reduced artifacts in low-quality 4D-MRI images, recovered details present in high-quality MR images, and outperformed a state-of-the-art method.

INTRODUCTION

Four-dimensional magnetic resonance imaging (4D-MRI) has shown great potential in organ exploration, target delineation, and treatment planning. However, because of hardware and imaging-time limitations, 4D-MRI usually suffers from a poor signal-to-noise ratio (SNR) and severe motion artifacts.1 High-quality (HQ) MR images are critical for detecting disease and making diagnostic decisions in clinical practice, but their availability is limited by long scan times, insufficient hardware capacity, and similar constraints.2 One way to generate an HQ 4D-MRI image is to refine low-quality (LQ) 4D-MRI images by capturing perceptually important image features and texture details from reference HQ images. Deep learning (DL) has grown rapidly in recent years, and many studies have demonstrated the feasibility of DL models for improving image quality. In this study, we propose a DL model that extracts visual features to improve the quality of 4D-MRI.

METHODS

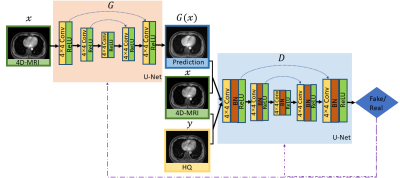

The dataset used for training and testing the proposed network was obtained from twenty-one patients undergoing radiotherapy for liver tumors. The study protocol was approved by the institutional review board. Each patient underwent 4D-MRI using the TWIST volumetric interpolated breath-hold examination (TWIST-VIBE) MRI sequence. Each patient also underwent a regular T1w (free-breathing) 3D MRI scan, which provided the corresponding HQ images. Of the twenty-one patients, nineteen were used for training and the remaining two for testing. Because of the limited number of MRI training volumes, we developed a two-dimensional model for slice-by-slice enhancement. We designed our adversarial enhancement model based on generative adversarial nets (GAN)3 to learn an accurate mapping between the 4D-MRI images and their corresponding HQ images. The GAN framework has two networks, a generator (G) and a discriminator (D). G was trained to produce enhanced 4D-MRI images that D could not distinguish from the reference HQ images, and D was trained to detect the fake images produced by G. The training procedure of the adversarial model is shown in Figure 1.

During training, G was fed LQ images $x$ and output improved images $G(x)$, which were then passed to D together with the real HQ MR images $y$ to compute the loss function that guides training. The loss function in Eq.(1) comprises two parts, a conditional GAN objective (Eq.(2)) and a traditional loss (Eq.(3)). In our task, an L1-norm-based distance was adopted as the traditional loss to reduce blurring artifacts.

$$G^*=\arg\min \limits_{G}\max \limits_{D} \mathcal{L}_{CGAN}(G,D)+\lambda \mathcal{L}_{L1}(G)\quad(1)$$

$$\mathcal{L}_{CGAN}(G,D)=\mathbb{E}_{(x,y)}[\log D(x,y)]+\mathbb{E}_{(x,z)}[\log (1-D(x,G(x,z)))]\quad(2)$$

$$\mathcal{L}_{L1}(G)=\mathbb{E}_{(x,y,z)}[\parallel y-G(x,z) \parallel_1 ]\quad(3)$$
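To make Eqs. (1)-(3) concrete, the following is a minimal sketch in plain Python that evaluates the objective from discriminator outputs and pixel intensities. It assumes the usual conditional GAN form, in which the second term of Eq. (2) is $\log(1-D(x,G(x,z)))$. The function names, the default weight `lam=100.0`, and the clamping constant `eps` are illustrative assumptions; the abstract does not report the value of $\lambda$ or any implementation details.

```python
import math


def cgan_objective(d_real, d_fake, eps=1e-12):
    """Eq. (2): mean log D(x, y) plus mean log(1 - D(x, G(x, z))).

    d_real: discriminator probabilities for (LQ, real HQ) pairs.
    d_fake: discriminator probabilities for (LQ, generated) pairs.
    eps is an assumed clamp that avoids log(0).
    """
    real_term = sum(math.log(max(p, eps)) for p in d_real) / len(d_real)
    fake_term = sum(math.log(max(1.0 - p, eps)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def l1_distance(target, output):
    """Eq. (3), taken here as the mean absolute difference per pixel
    (the L1 norm divided by the number of pixels)."""
    return sum(abs(t - o) for t, o in zip(target, output)) / len(target)


def total_objective(d_real, d_fake, target, output, lam=100.0):
    """Value inside Eq. (1); lam stands in for lambda and is a placeholder."""
    return cgan_objective(d_real, d_fake) + lam * l1_distance(target, output)
```

In adversarial training, D is updated to increase the conditional GAN term, while G is updated to decrease the full objective, so the L1 term pulls the generated slice toward the HQ reference.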

Because the U-Net architecture allows low-level information to shortcut across the network, we used U-Net in G and D. In this network, the input is passed through a series of layers that progressively down-sample it until a bottleneck layer, after which the process is reversed. We observed that the model with batch normalization (BN) layers was more likely to introduce unpleasant artifacts in the coronal and sagittal planes.4 To obtain stable and consistent performance without artifacts, we removed all BN layers in G, which also reduced computation and memory usage. The discriminator consists of eight blocks of Convolution-BN-ReLU operations. For each training image pair, we applied a pre-registration step to align the HQ images and the LQ 4D-MRI images using Elastix.5
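The down-sample, bottleneck, up-sample pattern with shortcuts can be illustrated by tracking spatial sizes only. The sketch below is not the authors' network: the function name, the input size of 256, the depth of 4, and the factor-of-2 resampling at each level are all hypothetical choices, since the abstract does not give these values.

```python
def unet_shape_trace(size=256, depth=4):
    """Trace spatial sizes through a U-Net-style encoder and decoder.

    Returns (encoder_sizes, decoder_plan). encoder_sizes lists the feature
    map size at each level, ending with the bottleneck. decoder_plan lists
    (upsampled_size, encoder_level) pairs, where encoder_level is the level
    whose features are carried across by the shortcut connection.
    size and depth are illustrative defaults, not values from the abstract.
    """
    if size % (2 ** depth) != 0:
        raise ValueError("size must be divisible by 2**depth")

    # Encoder: each level halves the spatial size; the last entry is the bottleneck.
    encoder_sizes = [size // (2 ** level) for level in range(depth + 1)]

    # Decoder: each step doubles the size and pairs with the encoder level
    # that has the same spatial size.
    decoder_plan = []
    current = encoder_sizes[-1]
    for level in range(depth - 1, -1, -1):
        current *= 2
        decoder_plan.append((current, level))
    return encoder_sizes, decoder_plan


encoder, decoder = unet_shape_trace()
for upsampled, level in decoder:
    # A shortcut is only possible when the two feature maps have equal size.
    assert upsampled == encoder[level]
```

The final check shows why the shortcuts work: every up-sampled feature map has the same spatial size as one encoder level, so low-level detail from that level can be passed directly to the decoder.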

RESULTS

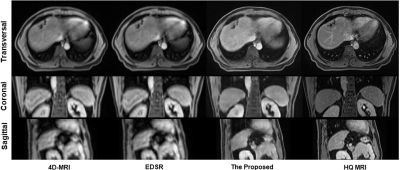

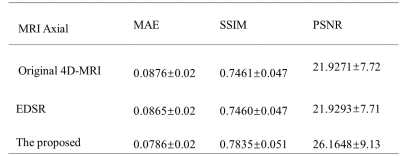
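The quantitative comparison in this section relies on three reference-based metrics: MAE, SSIM, and PSNR. As a minimal sketch, they can be computed as follows for two images flattened to lists of intensities scaled to [0, 1]. The function names, the `data_range` parameter, and the single-window (global) form of SSIM are assumptions made for illustration; standard SSIM averages the same expression over local windows, and the abstract does not state which implementation was used.

```python
import math


def mae(ref, img):
    """Mean absolute error between a reference and a test image."""
    return sum(abs(r - i) for r, i in zip(ref, img)) / len(ref)


def psnr(ref, img, data_range=1.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = sum((r - i) ** 2 for r, i in zip(ref, img)) / len(ref)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(data_range ** 2 / mse)


def global_ssim(ref, img, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM evaluated once over the whole image (a simplification).

    k1 and k2 are the commonly used stabilizing constants.
    """
    n = len(ref)
    mu_r = sum(ref) / n
    mu_i = sum(img) / n
    var_r = sum((r - mu_r) ** 2 for r in ref) / n
    var_i = sum((i - mu_i) ** 2 for i in img) / n
    cov = sum((r - mu_r) * (i - mu_i) for r, i in zip(ref, img)) / n
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    numerator = (2 * mu_r * mu_i + c1) * (2 * cov + c2)
    denominator = (mu_r ** 2 + mu_i ** 2 + c1) * (var_r + var_i + c2)
    return numerator / denominator
```

Lower MAE and higher SSIM and PSNR indicate that an enhanced slice is closer to its HQ reference.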

An enhanced deep super-resolution network (EDSR) was chosen as the comparison method because of its state-of-the-art performance.6 Figure 2 shows a visual quality comparison, in different planes, between the original 4D-MRI, EDSR, and the proposed method. With our method, the image quality of 4D-MRI was substantially improved in the axial, sagittal, and coronal views, and the shapes of organs were more clearly visible. Compared with the original 4D-MRI and the EDSR output, artifacts in the axial planes were effectively reduced. To quantitatively measure the recovery accuracy of the proposed method, we used three reference-based image similarity metrics: mean absolute error (MAE), structural similarity index (SSIM), and peak signal-to-noise ratio (PSNR). As shown in Table 1, the output of our method was slightly closer to the HQ MR images than the other two images and performed better on all three metrics.

DISCUSSION

We developed a DL-based 4D-MRI enhancement technique in this study. The enhanced 4D-MRI images had improved image quality with fewer artifacts in the axial plane. In addition, the shapes and outlines of organs in the sagittal and coronal planes were much clearer than in the original images and the EDSR results. Currently, 4D-MRI is rarely used in practice because of its poor image quality and severe artifacts. The proposed technique can enhance image quality and reduce artifacts, making 4D-MRI more practical for clinical application.

CONCLUSION

A GAN-based 4D-MRI enhancement technique was developed. The enhanced 4D-MRI showed clearer texture and shape information of organs, with fewer artifacts and less noise, than the original 4D-MRI. This post-processing technique can reconstruct sharp 4D-MR images with rich texture details and shows great promise for medical image analysis.

Acknowledgements

No acknowledgement found.

References

1 Liu, Y., Yin, F. F., Chen, N. K., Chu, M. L., & Cai, J. (2015). Four dimensional magnetic resonance imaging with retrospective k‐space reordering: A feasibility study. Medical physics, 42(2), 534-541.

2 Pruessner, J. C., Li, L. M., Serles, W., Pruessner, M., Collins, D. L., Kabani, N., ... & Evans, A. C. (2000). Volumetry of hippocampus and amygdala with high-resolution MRI and three-dimensional analysis software: minimizing the discrepancies between laboratories. Cerebral cortex, 10(4), 433-442.

3 Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., ... & Bengio, Y. (2014). Generative adversarial nets. Advances in neural information processing systems, 27.

4 Wang, X., Yu, K., Wu, S., Gu, J., Liu, Y., Dong, C., ... & Change Loy, C. (2018). ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the European conference on computer vision (ECCV) workshops.

5 Klein, S., Staring, M., Murphy, K., Viergever, M. A., & Pluim, J. P. (2009). Elastix: a toolbox for intensity-based medical image registration. IEEE transactions on medical imaging, 29(1), 196-205.

6 Lim, B., Son, S., Kim, H., Nah, S., & Mu Lee, K. (2017). Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE conference on computer vision and pattern recognition workshops (pp. 136-144).

Figures