3197

Accurate Prostate Segmentation in MR Images Guided by Semantic Flow

¹Paul C. Lauterbur Research Center for Biomedical Imaging, Shenzhen Institute of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China

Synopsis

Deep learning (DL) models frequently use downsampling to enlarge the receptive field. This leads to a common issue in DL-based image segmentation: misalignment between high-resolution features and high-semantic features. Decoding or upsampling has been proposed to address this, with promising performance. However, upsampling without explicit pixel-wise localization guidance may introduce errors. To address this issue, we propose a semantic flow-guided prostate segmentation method. The upsampling process is guided by a semantic flow computed from both high-resolution and high-semantic features, which produces more accurate segmentation results.

Summary of Main Findings

A semantic flow-guided deep neural segmentation network is proposed to better solve the misalignment issue between high-resolution features and high-semantic features caused by downsampling. More accurate prostate MR image segmentation is achieved.

Introduction

Prostate cancer is the second leading cause of cancer mortality among men, affecting one out of every six men. Because the prostate's anatomy is difficult to determine correctly, many diagnostic prostate biopsies fail to detect occult cancers [1]. For prostate cancer and other prostate diseases, accurate prostate segmentation in magnetic resonance (MR) images is particularly valuable for treatment planning and many other diagnostic and therapeutic procedures [2]. However, manual segmentation of MR images is time-consuming and error-prone. Accurate automated segmentation of the prostate gland and prostate regions is therefore highly desirable in daily clinical practice. Currently, the leading approaches to automated prostate segmentation are deep convolutional neural networks (CNNs), especially those with an encoder-decoder architecture [3]. During encoding, the input images are downsampled to enlarge the receptive field and to extract features with richer semantic information. As a result, the high-semantic features have low resolution. Decoding or upsampling is then applied so that the final segmentation output has the same resolution as the input. Common upsampling methods include deconvolution and parameter-free interpolation. Both may introduce localization errors because no explicit localization constraint is enforced. State-of-the-art approaches [4,5] employ atrous convolutions [6] to address this issue, but atrous convolutions require substantial additional computation. Motivated by this, and inspired by recent work on flow field estimation in computer vision [7,8], we introduce a semantic flow-guided CNN model for more accurate prostate segmentation in MR images. Learning a semantic flow between high-resolution and high-semantic feature maps to guide the upsampling procedure can improve localization accuracy.
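The NumPy sketch below gives a rough illustration of flow-guided upsampling, following the general scheme of semantic flow [8]. A coarse, high-semantic feature map is resampled onto the grid of a finer, high-resolution map. The sampling positions are the regular grid positions plus a per-pixel flow offset, and the resampling uses bilinear sampling as in spatial transformer networks [11]. Several pieces are illustrative assumptions rather than the authors' implementation: the function names, the 1×1 linear flow predictor, the align-corners coordinate scaling, and fusion by addition. In the actual network the flow sub-network is learned end-to-end.

```python
import numpy as np


def bilinear_sample(feat, y, x):
    """Bilinearly sample feat (C, H, W) at real-valued coordinates y, x.

    y and x share one output shape; out-of-range coordinates are clamped
    to the border of the feature map.
    """
    _, H, W = feat.shape
    y = np.clip(y, 0, H - 1)
    x = np.clip(x, 0, W - 1)
    y0 = np.floor(y).astype(int)
    x0 = np.floor(x).astype(int)
    y1 = np.minimum(y0 + 1, H - 1)
    x1 = np.minimum(x0 + 1, W - 1)
    wy, wx = y - y0, x - x0  # fractional parts act as interpolation weights
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bottom = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy


def flow_warp(coarse, flow):
    """Warp a coarse map (C, h, w) onto the fine grid of flow (2, H, W).

    flow[0] and flow[1] are per-pixel row/column offsets in fine-grid pixels.
    Assumes H, W > 1 (align-corners style scaling between the two grids).
    """
    _, h, w = coarse.shape
    _, H, W = flow.shape
    gy, gx = np.meshgrid(np.arange(H, dtype=float), np.arange(W, dtype=float),
                         indexing="ij")
    # "Simple addition": displace every regular grid position by its flow,
    # then rescale fine-grid coordinates to coarse-grid coordinates.
    sy = (gy + flow[0]) * (h - 1) / (H - 1)
    sx = (gx + flow[1]) * (w - 1) / (W - 1)
    return bilinear_sample(coarse, sy, sx)


def predict_flow(fine, coarse_up, weight):
    """Hypothetical flow head: a 1x1 linear map over concatenated features.

    weight has shape (2, C_fine + C_coarse); a real model would use a learned
    convolutional sub-network here.
    """
    stacked = np.concatenate([fine, coarse_up], axis=0)
    return np.einsum("oc,chw->ohw", weight, stacked)


def semantic_flow_module(fine, coarse, weight):
    """Align a coarse, high-semantic map to a fine map, then fuse by addition."""
    H, W = fine.shape[1:]
    # Zero flow reduces flow_warp to plain bilinear upsampling.
    coarse_up = flow_warp(coarse, np.zeros((2, H, W)))
    flow = predict_flow(fine, coarse_up, weight)
    return fine + flow_warp(coarse, flow)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fine = rng.standard_normal((8, 16, 16))   # high-resolution level
    coarse = rng.standard_normal((8, 8, 8))   # high-semantic level, same depth
    w = 0.01 * rng.standard_normal((2, 16))
    print(semantic_flow_module(fine, coarse, w).shape)  # (8, 16, 16)
```

With a zero flow, `flow_warp` is ordinary parameter-free bilinear upsampling. The learned flow therefore acts as a per-pixel correction to interpolation, which is the localization guidance that plain upsampling lacks.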
The network can propagate semantic features with minimal information loss once the precise flow is obtained.

Methodology

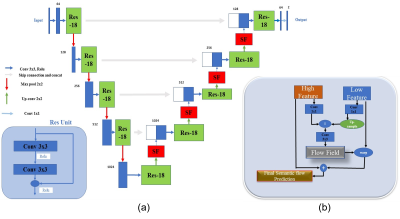

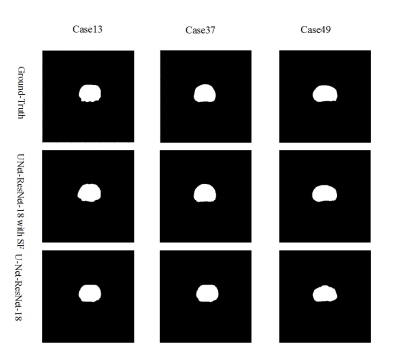

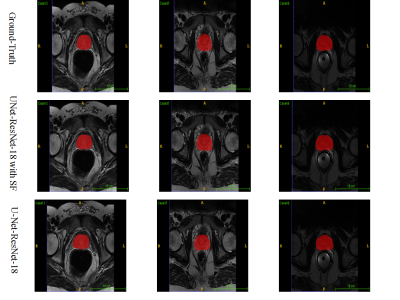

Figure 1 depicts the overall network architecture, which consists of a contracting-path encoder and an expansive-path decoder. The encoder follows the conventional design of a convolutional network and provides feature representations at several levels. The decoder can be regarded as a Feature Pyramid Network (FPN) [9] equipped with multiple semantic flow modules. Our model is built on U-Net [10] for prostate MR image segmentation. In the expansive-path decoder, the feature map of each level is compressed to the same channel depth before being passed to the next level, and the semantic flow field is predicted by a sub-network. Once the semantic flow field is generated, each position on the spatial grid is displaced by the predicted flow through a simple addition. The top-level feature maps are then warped with the differentiable bilinear sampling mechanism of spatial transformer networks [11], which yields the final output of the semantic flow module. The aligned decoder uses this aligned feature pyramid, together with feature maps from the encoder, for the final segmentation of prostate MR images.

Data augmentation is used to make better use of the limited training data. The segmentation loss is the sum of the Dice loss and the cross-entropy loss.

The MICCAI Prostate MR Image Segmentation (PROMISE12) challenge dataset [12] is used to validate the effectiveness of our method. This dataset contains data acquired at a variety of hospitals with a variety of equipment and protocols. Five-fold cross-validation experiments are conducted. Figure 2 and Figure 3 show qualitative segmentation results for prostate MR images. For quantitative evaluation, we calculate intersection-over-union (IoU), the Dice similarity coefficient (DSC), average symmetric surface distance (ASSD), maximum symmetric surface distance (MSSD), and relative area/volume difference (RAVD).
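The NumPy sketch below shows the five evaluation metrics for a pair of binary masks. It uses common definitions: IoU and DSC come from overlap counts, and ASSD and MSSD come from nearest-neighbor distances between the boundary voxels of the two masks, measured in both directions. Several details are assumptions, since the abstract does not describe the exact evaluation code behind Table 1:

- the boundary definition (a foreground voxel with any axis-aligned background neighbor),
- taking the absolute value in RAVD,
- the brute-force distance computation,
- the function names.

```python
import numpy as np


def overlap_metrics(pred, gt):
    """IoU, DSC and RAVD for two non-empty binary masks of equal shape."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    inter = np.logical_and(pred, gt).sum()
    union = np.logical_or(pred, gt).sum()
    iou = inter / union
    dsc = 2.0 * inter / (pred.sum() + gt.sum())
    # Relative area/volume difference; absolute value taken here (assumption).
    ravd = abs(int(pred.sum()) - int(gt.sum())) / gt.sum()
    return iou, dsc, ravd


def surface_points(mask, spacing):
    """Coordinates (in physical units) of foreground voxels touching background."""
    m = np.pad(mask.astype(bool), 1, constant_values=False)
    interior = m.copy()
    for axis in range(m.ndim):
        # A voxel is interior only if both axis-aligned neighbors are foreground.
        interior &= np.roll(m, 1, axis=axis) & np.roll(m, -1, axis=axis)
    border = (m & ~interior)[tuple(slice(1, -1) for _ in range(m.ndim))]
    return np.argwhere(border) * np.asarray(spacing, dtype=float)


def surface_distances(pred, gt, spacing=None):
    """ASSD and MSSD between the surfaces of two non-empty binary masks.

    Brute-force pairwise distances: fine for small examples, but too slow and
    memory-hungry for full MR volumes (a distance transform is preferable).
    """
    spacing = np.ones(pred.ndim) if spacing is None else spacing
    a, b = surface_points(pred, spacing), surface_points(gt, spacing)
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    d_ab, d_ba = d.min(axis=1), d.min(axis=0)  # pred->gt and gt->pred
    assd = (d_ab.sum() + d_ba.sum()) / (len(d_ab) + len(d_ba))
    mssd = max(d_ab.max(), d_ba.max())  # symmetric Hausdorff between surfaces
    return assd, mssd
```

For example, two 4×4 squares offset by one column give IoU = 0.6, DSC = 0.75, RAVD = 0, ASSD = 0.5 and MSSD = 1 (unit spacing). Passing the MR voxel spacing as `spacing` reports ASSD and MSSD in millimeters.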
Higher DSC and IoU values, as well as lower RAVD, ASSD, and MSSD values, indicate better segmentation results.

Results and Discussion

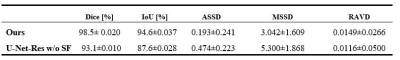

To assess the effectiveness of the proposed strategy, we compare our approach with models trained without the semantic flow module. All networks are trained with the same settings. Table 1 reports the evaluation metrics on the testing dataset. Our approach with the semantic flow module generates better results than the two comparison models without it. Inserting the semantic flow module improves DSC by 5.5% compared with the original U-Net and ResNet-18. Segmentation results for an example case are visualized in Figure 2 and Figure 3. Compared with the models without a semantic flow module, our technique yields better segmentation maps.

Conclusion

In this paper, we propose a semantic flow-guided deep neural segmentation network for prostate MR image segmentation. The network aligns the high-resolution and high-semantic feature maps generated by a feature pyramid. The proposed approach addresses a common issue of deep learning-based image segmentation: with the proposed semantic flow alignment module, high-semantic features with low resolution are well fused into low-semantic features with high resolution. Encouraging results are achieved on a public dataset for prostate segmentation in MR images.

Acknowledgements

This work was partly supported by Scientific and Technical Innovation 2030-“New Generation Artificial Intelligence” Project (2020AAA0104100, 2020AAA0104105), the National Natural Science Foundation of China (61871371, 81830056), the Basic Research Program of Shenzhen (JCYJ20180507182400762), and Youth Innovation Promotion Association Program of Chinese Academy of Sciences (2019351).

References

1- Bloch, N. et al. NCI-ISBI 2013 challenge: automated segmentation of prostate structures. International Symposium on Biomedical Imaging (ISBI), San Francisco, California, USA, 2013.

2- Yu, Lequan, et al. Volumetric ConvNets with mixed residual connections for automated prostate segmentation from 3D MR images. Thirty-first AAAI conference on artificial intelligence. 2017.

3- Milletari, Fausto, Nassir Navab, and Seyed-Ahmad Ahmadi. V-net: Fully convolutional neural networks for volumetric medical image segmentation. 2016 fourth international conference on 3D vision (3DV). IEEE, 2016.

4- Fu, Jun, et al. Dual attention network for scene segmentation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019.

5- Zhu, Yi, et al. Improving semantic segmentation via video propagation and label relaxation. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019.

6- Yu, Fisher, and Vladlen Koltun. Multi-scale context aggregation by dilated convolutions. arXiv preprint arXiv:1511.07122 (2015).

7- Dosovitskiy, Alexey, et al. FlowNet: Learning optical flow with convolutional networks. IEEE International Conference on Computer Vision. 2015.

8- Li, Xiangtai, et al. Semantic flow for fast and accurate scene parsing. European Conference on Computer Vision. Springer, Cham, 2020.

9- Lin, Tsung-Yi, et al. Feature pyramid networks for object detection. Proceedings of the IEEE conference on computer vision and pattern recognition. 2017.

10- Ronneberger, Olaf, Philipp Fischer, and Thomas Brox. U-net: Convolutional networks for biomedical image segmentation. International Conference on Medical image computing and computer-assisted intervention. Springer, Cham, 2015.

11- Jaderberg, Max, Karen Simonyan, and Andrew Zisserman. Spatial transformer networks. Advances in neural information processing systems 28 (2015): 2017-2025.

12- Litjens, Geert, et al. Evaluation of prostate segmentation algorithms for MRI: the PROMISE12 challenge. Medical image analysis 18.2 (2014): 359-373.

Figures