3189

Human Knowledge Guided Deep Learning with Multi-parametric MR Images for Glioma Grading

1School of Information Engineering, Zhengzhou University, Zhengzhou, China, 2Paul C. Lauterbur Research Center for Biomedical Imaging, Shenzhen Institute of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China

Synopsis

Automatic glioma grading based on magnetic resonance imaging (MRI) is crucial for appropriate clinical management. Recently, classification models based on Convolutional Neural Networks (CNNs) have been extensively investigated. However, to achieve accurate glioma grading, these models typically require tumor segmentation maps to locate important regions. Delineating the tumor regions in 3D MR images is time-consuming and error-prone. Our aim in this study is to develop a human knowledge guided CNN model for glioma grading that does not rely on tumor segmentation maps in clinical applications. Extensive experiments are conducted on a public dataset, and promising grading performance is achieved.

Introduction

Gliomas are the most common type of primary neuroepithelial malignant tumors [1], and the treatment and prognosis of patients with different glioma grades vary significantly [2,3]. Magnetic Resonance Imaging (MRI) plays a crucial role in the diagnosis of gliomas because of its superior imaging contrast and resolution [4]. To assist physicians in achieving fast and accurate diagnoses, automatic glioma grading based on brain MR images has become an important research field.

Convolutional Neural Networks (CNNs) have recently shown promising performance in glioma grading [5]. However, one major issue in existing studies is that tumor segmentation maps are commonly required when performing glioma grading [6,7]. Despite the promising results reported, manually delineating brain tumor regions is time-consuming and error-prone because of the complex shape and texture of gliomas. Moreover, different physicians may produce slightly different tumor segmentations, which can affect grading performance when the model is deployed. In this study, we propose a human knowledge guided CNN-based glioma grading model with a designed y-discrepancy distance. Our grading model removes the dependence on tumor segmentation maps at test time, which can be very important for clinical applications. Promising test results are achieved on a public dataset.

Method

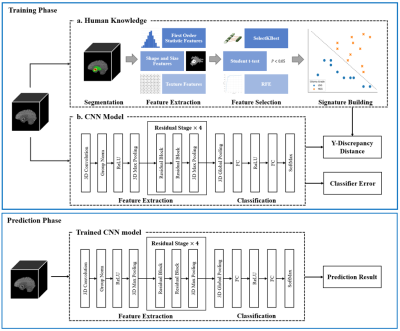

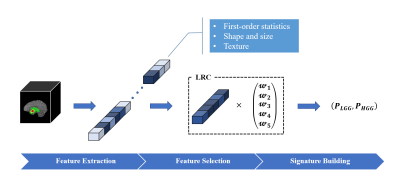

The overall framework of the proposed method is shown in Figure 1. Two major steps are involved. The first step is human knowledge construction. We extract a large set of quantitative features from the tumor regions and select the most important ones to train a logistic regression classifier (LRC). Each feature has a specific meaning that describes an attribute of the tumor regions. The outputs of the LRC are used as the radiomic signatures that contain useful human knowledge. The whole human knowledge construction process is depicted in Figure 2. The second step is to train our CNN-based grading model with the constructed human knowledge. The CNN model adopts a residual architecture [8] with embedded Squeeze-and-Excitation modules [9] (Figure 1). We then calculate a y-discrepancy distance between the radiomic signatures and the predictions of the CNN model. By minimizing the y-discrepancy distance, the human knowledge, which carries information about the tumor regions, is introduced into the parameter optimization of the CNN model through backpropagation. In this way, we can eliminate the dependence of the CNN grading model on tumor segmentation maps. The y-discrepancy is defined as

$$ dist\langle h_{lrc}(x),h_{cnn}(x)\rangle=\sigma\left[CE(h_{lrc}(x),y)-CE(h_{cnn}(x),y)\right]\cdot KL\left(h_{lrc}(x)\,\|\,h_{cnn}(x)\right),$$

where $$$h_{lrc}$$$ and $$$h_{cnn}$$$ are the hypotheses of the LRC and the CNN model, $$$CE$$$ is the cross entropy, $$$KL$$$ is the Kullback-Leibler (KL) divergence, and $$$\sigma$$$ is the ReLU activation. The overall objective function of the human knowledge guided CNN-based glioma grading model is:

$$h_{cnn}^\ast(x) = \mathop{argmin} \limits_{h_{cnn} \in\mathcal{H}_{cnn}}\frac{1}{N} \sum_{i=1}^{N}CE\left(h_{cnn}\left(x_i\right),y_i\right)+\lambda\, dist\langle h_{lrc}(x),h_{cnn}(x)\rangle,$$

where $$$\lambda$$$ is a regularization parameter. By optimizing the objective function, we can use the brain MR images more efficiently and obtain a high-performance glioma grading model that does not require manual tumor segmentation maps during testing.
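The two formulas above can be illustrated with a minimal NumPy sketch of the forward computation. It rests on several assumptions that are not stated in the abstract: grading is treated as a binary problem (1 = HGG, 0 = LGG), each model outputs a single probability for the HGG class, the distance is evaluated per sample and then averaged over the batch, and the function names, the `EPS` clipping constant, the toy probabilities, and the value of `lam` are all hypothetical. It is not the authors' implementation, and actual training would need an automatic differentiation framework.

```python
import numpy as np

EPS = 1e-7  # numerical guard against log(0); an assumption of this sketch


def cross_entropy(p, y):
    """Per-sample binary cross entropy for predicted probability p of class 1."""
    p = np.clip(p, EPS, 1.0 - EPS)
    return -(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))


def kl_bernoulli(p, q):
    """Per-sample KL(p || q) between two Bernoulli distributions."""
    p = np.clip(p, EPS, 1.0 - EPS)
    q = np.clip(q, EPS, 1.0 - EPS)
    return p * np.log(p / q) + (1.0 - p) * np.log((1.0 - p) / (1.0 - q))


def y_discrepancy(p_lrc, p_cnn, y):
    """ReLU(CE(h_lrc, y) - CE(h_cnn, y)) * KL(h_lrc || h_cnn), per sample."""
    gate = np.maximum(cross_entropy(p_lrc, y) - cross_entropy(p_cnn, y), 0.0)
    return gate * kl_bernoulli(p_lrc, p_cnn)


def objective(p_lrc, p_cnn, y, lam=1.0):
    """Mean CE of the CNN plus lam times the mean y-discrepancy (assumed averaging)."""
    ce_term = np.mean(cross_entropy(p_cnn, y))
    dist_term = np.mean(y_discrepancy(p_lrc, p_cnn, y))
    return float(ce_term + lam * dist_term)


# Toy batch with made-up values: 1 = HGG, 0 = LGG.
y = np.array([1.0, 1.0, 0.0, 0.0])
p_lrc = np.array([0.9, 0.6, 0.2, 0.7])  # radiomic signatures (LRC outputs)
p_cnn = np.array([0.7, 0.8, 0.4, 0.3])  # CNN predictions

dist = y_discrepancy(p_lrc, p_cnn, y)
loss = objective(p_lrc, p_cnn, y, lam=0.5)
print("per-sample y-discrepancy:", dist)
print("objective value:", loss)
```

In this sketch the ReLU acts as a per-sample gate: following the definition exactly as written, the distance is non-zero only for samples where the cross entropy of the LRC exceeds that of the CNN, and it is zero whenever the two models give identical predictions.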

Dataset

Experiments are conducted on the public BraTS 2020 dataset [10-12]. In total, there are 369 samples: 293 high-grade glioma (HGG) samples and 76 low-grade glioma (LGG) samples. We randomly divide the samples into a training set and a test set with a ratio of 8:2. All brain tumors in the MR images were segmented manually by experienced neuroradiologists. In addition, all MR images were co-registered to the same anatomical template, interpolated to the same resolution (1 mm³), and skull-stripped. MR data from four sequences are provided, and we use the T1ce MR images in all our experiments.

Results and Discussion

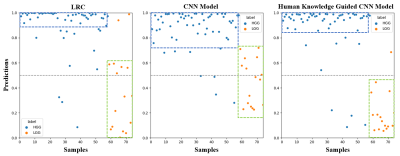

The effectiveness of the proposed method is validated by extensive experiments. First, we present the results of the radiomic signature construction. Of the 1618 quantitative features extracted, 5 are selected, and most of them are texture features (Figure 3). Then, we compare the AUC scores achieved by the LRC, the CNN model alone, and the human knowledge guided CNN model (Figure 4) using different proportions of the training data. The results suggest that the LRC and the CNN model perform similarly. The human knowledge guided CNN model achieves better results than the two baselines regardless of the amount of training data used. The predictions on the test data are plotted in Figure 5. The three plots show that although the LRC and the CNN model give similar performance, the LRC is more confident in its predictions than the CNN model. The human knowledge guided CNN model reduces the uncertainty in predictions for both HGG and LGG samples. We further observe that the human knowledge enhances the capability of the CNN model to distinguish LGG samples.

Conclusion

In this paper, we propose a human knowledge guided CNN model for glioma grading on brain MR images. Using the human knowledge contained in the radiomic signatures as a prior, a y-discrepancy distance is designed and minimized. In this way, the proposed CNN model, which removes the cumbersome tumor region delineation process at deployment, can use MR images more efficiently. The results suggest that the proposed model has high potential for helping physicians achieve fast and accurate glioma grading.

Acknowledgements

This research was partly supported by the National Natural Science Foundation of China (61871371, 81830056, 81801691, 61671441) and the Youth Innovation Promotion Association Program of Chinese Academy of Sciences (2019351).

References

[1] Ostrom Q T, Patil N, Cioffi G, et al. CBTRUS statistical report: primary brain and other central nervous system tumors diagnosed in the United States in 2013–2017[J]. Neuro-oncology, 2020, 22(Supplement_1): iv1-iv96.

[2] Obara T, Blonski M, Brzenczek C, et al. Adult diffuse low-grade gliomas: 35-year experience at the Nancy France neurooncology unit[J]. Frontiers in Oncology, 2020, 10.

[3] Wu W, Lamborn K R, Buckner J C, et al. Joint NCCTG and NABTC prognostic factors analysis for high-grade recurrent glioma[J]. Neuro-oncology, 2010, 12(2): 164-172.

[4] Zhuge Y, Ning H, Mathen P, et al. Automated glioma grading on conventional MRI images using deep convolutional neural networks[J]. Medical physics, 2020, 47(7): 3044-3053.

[5] Ge C, Qu Q, Gu I Y H, et al. 3D multi-scale convolutional networks for glioma grading using MR images[C]. 2018 25th IEEE International Conference on Image Processing (ICIP). IEEE, 2018: 141-145.

[6] Matsui Y, Maruyama T, Nitta M, et al. Prediction of lower-grade glioma molecular subtypes using deep learning[J]. Journal of neuro-oncology, 2020, 146(2): 321-327.

[7] Ge C, Gu I Y H, Jakola A S, et al. Deep learning and multi-sensor fusion for glioma classification using multistream 2D convolutional networks[C]. 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE, 2018: 5894-5897.

[8] He K, Zhang X, Ren S, et al. Deep residual learning for image recognition[C]. Proceedings of the IEEE conference on computer vision and pattern recognition. 2016: 770-778.

[9] Hu J, Shen L, Sun G. Squeeze-and-excitation networks[C]. Proceedings of the IEEE conference on computer vision and pattern recognition. 2018: 7132-7141.

[10] Menze B H, Jakab A, Bauer S, et al. The multimodal brain tumor image segmentation benchmark (BRATS)[J]. IEEE transactions on medical imaging, 2014, 34(10): 1993-2024.

[11] Bakas S, Akbari H, Sotiras A, et al. Advancing the cancer genome atlas glioma MRI collections with expert segmentation labels and radiomic features[J]. Scientific data, 2017, 4(1): 1-13.

[12] Bakas S, Reyes M, Jakab A, et al. Identifying the best machine learning algorithms for brain tumor segmentation, progression assessment, and overall survival prediction in the BRATS challenge[J]. arXiv preprint arXiv:1811.02629, 2018.

Figures

Figure 1. Schematic diagram of the human knowledge guided CNN model. The most important quantitative features extracted from tumor regions are selected to build radiomic signatures which contain the human knowledge. Using the human knowledge as a prior, the y-discrepancy distance is minimized to help the constructed CNN model use brain MR images more efficiently and eliminate the reliance on segmentation maps in clinical applications.

Figure 2. Schematic diagram of the human knowledge construction process. Quantitative features are extracted from the tumor areas in brain MR images. The most important features are selected to train the LRC. The outputs of the trained LRC are treated as the radiomic signatures that contain expected human knowledge.

Figure 3. Names and types of the selected features. Five features are selected using the SelectKBest algorithm based on mutual information, the Student's t-test, and the Recursive Feature Elimination (RFE) algorithm.

Figure 4. AUC scores of different models. The LRC and the CNN model achieve similar grading performance. Both can perform better when more training data are provided. Human knowledge exploitation can improve the performance of the CNN model, and the human knowledge guided CNN model achieves the best grading results.