3172

MVPA using the hyperaligned 7T-BOLD signals revealed that the initial decrease contains finer information to decode facial expressions

1 National Institute of Information and Communications Technology, Suita, Osaka, Japan; 2 Osaka University, Suita, Osaka, Japan

Synopsis

Previous studies suggested that the initial decrease in the BOLD signal reflects primary neuronal activity more closely than the later positive hemodynamic peak. We applied the hyperalignment algorithm to 7T-BOLD timeseries acquired during a facial expression discrimination task and conducted MVPA on the aligned data. Decoding accuracies in the amygdala and superior temporal sulcus at 2 s after face onset were significantly above baseline, and the voxels contributing to the decoding accuracy displayed a decreasing pattern in the hemodynamic response, revealing that the initial decrease in 7T-BOLD signals contains finer information than previously thought.

Introduction

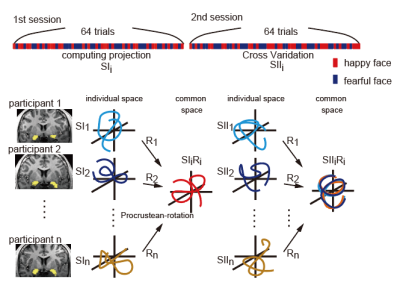

Functional MRI has been widely used to identify the neural substrates of human behavior, and the development of multivoxel pattern analysis (MVPA) has made it possible to quantify the information about task events and cognitive processes contained in BOLD activity patterns. The initial de-oxygenation in the hemodynamic response is considered a better indicator of neuronal activity than the later oxygenation [1]. In BOLD fMRI, the magnitude of the initial decrease depends on the strength of the magnetic field [2-6], and the initial decrease is easier to detect at higher field strengths. However, whether the initial decrease of the hemodynamic response carries quantitative information that MVPA can use to decode task events and cognitive processes is unknown. We conducted an MVPA of a facial expression discrimination task using 7T BOLD fMRI to examine whether activity shortly after stimulus (i.e., face) onset can discriminate facial expressions. The conventional approach faces two difficulties: 1) the signal-to-noise ratio is limited by the location in the brain and by habituation through task repetition (e.g., in the amygdala), and 2) brain structure varies among individuals. To overcome these difficulties, we applied the hyperalignment algorithm [7] (Figure 1) to 7T-BOLD timeseries of social brain regions, namely the amygdala and superior temporal sulcus (STS), because these structures play a central role in emotional processing, facial expression recognition, and social interactions [8,9].

Methods
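
As a rough illustration of the functional alignment idea behind hyperalignment, the sketch below iteratively rotates each participant's time-by-voxel matrix toward a common template using orthogonal Procrustes. It is a simplified sketch, not the pipeline used in this study: the function name `hyperalign`, the iteration count, and the assumption that every participant contributes a matrix of the same shape are illustrative choices, and the abstract does not state which implementation or parameters were used.

```python
import numpy as np
from scipy.linalg import orthogonal_procrustes


def hyperalign(datasets, n_iter=2):
    """Align a list of (n_timepoints, n_voxels) arrays to a common space.

    Hypothetical helper: each dataset is rotated toward a template, and the
    template is then updated as the mean of the rotated datasets.
    """
    # Assumption: the first participant serves as the initial template.
    template = datasets[0].copy()
    for _ in range(n_iter):
        # R minimizes ||d @ R - template||_F over orthogonal matrices.
        rotations = [orthogonal_procrustes(d, template)[0] for d in datasets]
        aligned = [d @ R for d, R in zip(datasets, rotations)]
        template = np.mean(aligned, axis=0)
    return aligned, rotations
```

Because each transformation is an orthogonal rotation of voxel space, the pattern geometry within a participant is preserved while response patterns are brought into register across participants.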

MRI data were acquired with a 7T MRI scanner (MAGNETOM, Siemens Healthcare, Erlangen, Germany) with a 32-channel receiver head coil (Nova Medical, Wilmington, MA, USA). We obtained 32 partial-brain axial oblique slices covering the amygdala using a 2D multiband gradient-echo EPI sequence at 1.5 mm isotropic resolution [10]. The sequence parameters were as follows: TR = 1 s, TE = 25 ms, flip angle = 55°, multiband acceleration factor = 2, and GRAPPA acceleration factor = 3. Participants were required to indicate whether a presented face was fearful or happy by pressing a left or right button within 1.5 s. The task consisted of 64 trials of 16 s each, and each face was presented for 2 s. The analysis window was 9 s, beginning immediately after stimulus onset. There were 32 fearful and 32 happy trials, presented in pseudo-random order. We evaluated decoding accuracy from the hemodynamic response at each time point after stimulus onset.

Results
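
The time-resolved decoding evaluated in this study (a separate classifier at each 1-s step after face onset, compared against randomized labels) can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' code: the helper names `extract_epochs` and `decode_over_time` are hypothetical, the standardization step and fold shuffling are illustrative choices, and the paired t-test is run across cross-validation folds because the abstract does not state the pairing unit.

```python
import numpy as np
from scipy.stats import ttest_rel
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def extract_epochs(bold, onsets, offsets=range(1, 9)):
    """Cut (n_trials, n_timepoints, n_voxels) epochs from (n_volumes, n_voxels).

    With TR = 1 s a volume index equals time in seconds, so offsets 1..8
    correspond to 1-8 s after face onset.
    """
    offsets = np.asarray(list(offsets))
    return np.stack([bold[onset + offsets] for onset in onsets])


def decode_over_time(epochs, labels, n_splits=10, seed=0):
    """Decode labels at each time point with logistic regression and 10-fold CV."""
    rng = np.random.default_rng(seed)
    # The same index folds are reused for true and randomized labels.
    cv = KFold(n_splits=n_splits, shuffle=True, random_state=seed)
    clf = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
    shuffled = rng.permutation(labels)  # randomized-label baseline
    results = []
    for t in range(epochs.shape[1]):
        X = epochs[:, t, :]
        acc = cross_val_score(clf, X, labels, cv=cv)
        acc_null = cross_val_score(clf, X, shuffled, cv=cv)
        # Assumption: fold-wise accuracies are the paired observations.
        t_stat, p_val = ttest_rel(acc, acc_null)
        results.append({"acc": acc.mean(), "acc_null": acc_null.mean(),
                        "t": t_stat, "p": p_val})
    return results
```

With 64 trials of 16 s and TR = 1 s, `onsets` would be `np.arange(64) * 16` if the first face appears at volume 0, and `results[1]` would then describe the time point 2 s after onset.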

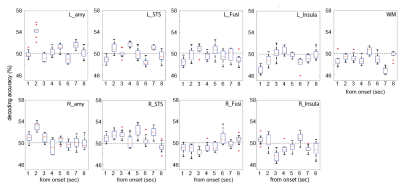

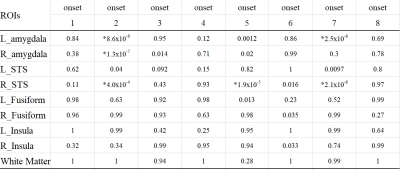

We computed the decoding accuracy by applying logistic regression to the hyperaligned data at every time point after the onset of the face stimuli (1-8 s in 1-s increments) using 10-fold cross-validation, and used a paired t-test to compare the accuracies obtained with the correct face labels and with randomized labels (Figure 2 and Table 1). The decoding accuracy in the bilateral amygdala and right STS was significantly higher than the baseline obtained with randomized emotion labels. No difference was observed in the other control ROIs. Next, we examined the hemodynamic features of the voxels important for facial expression discrimination (i.e., large-weighted voxels) at each time point by comparing the facial expressions and the signs of the weights. The initial decrease in the hemodynamic response discriminated facial expressions at 2 s after onset, and in the amygdala the voxels weighted for fearful faces exhibited a larger initial decrease (Figure 3A). In the right STS, we also observed a large initial decrease in the voxels weighted positively for fearful faces at 2 s after onset (Figure 3B). Notably, the BOLD timeseries of voxels in the fusiform showed uniform responses to faces regardless of their emotional expression (Figure 3C). Finally, we examined the effectiveness of hyperalignment prior to MVPA by comparing it with structure-based normalization methods. Structure-based normalization produced lower decoding accuracy, particularly at 2 s after onset (two-tailed paired t-test, p = 5.1 × 10⁻⁶, t = 6.1 and p = 6.1 × 10⁻⁴, t = 3.9 for the left and right amygdala, respectively).

Discussion and Conclusion

Using a simple facial expression discrimination task, we demonstrated that discrimination accuracies in the bilateral amygdala and right STS were higher shortly after stimulus onset than at the time of the positive hemodynamic peak, suggesting that the initial decrease in the BOLD signal contains more information for decoding task events than previously thought. We also showed that the voxels contributing to the discrimination shortly after face onset displayed an initial decrease in activity, consistent with previous studies [2,4,11]. Using the hyperalignment proposed by Haxby et al. [7], we succeeded in improving the decoding performance. This improvement suggests that functional alignment techniques such as hyperalignment are also effective for subcortical regions such as the amygdala, where structural alignment is difficult because of structural diversity and where increasing the number of trials in a task is difficult because of signal decay [12-14]. In summary, this study provides empirical evidence that the 7T-BOLD initial decrease detected using hyperalignment contains information for decoding task events. The methodology and results reported here should be useful not only for identifying the various types of information represented in each brain region, but also for a deeper understanding of the link between fMRI signals and information about the internal and external worlds of the subject.

Acknowledgements

The authors disclose receipt of the following financial support for the research, authorship, and/or publication of this article: Preparation of this manuscript was supported by CREST, JST, Moonshot R&D Grant Number JPMJMS2011, JST and KAKENHI (17H06314 and 20K21562) to M.H. We would like to thank Satoshi Tada and Dr. Tomoki Haji for technical assistance.

References

1 Hu X, Yacoub E. The story of the initial dip in fMRI. Neuroimage 2012;62:1103-1108.

2 Menon RS, Ogawa S, Hu X, et al. BOLD based functional MRI at 4 Tesla includes a capillary bed contribution: echo-planar imaging mirrors previous optical imaging using intrinsic signals. Magn Reson Med. 1995;33:453-459.

3 Yacoub E, Hu X. Detection of the early negative response in fMRI at 1.5 Tesla. Magn Reson Med. 1999;41:1088-1092.

4 Yacoub E, Shmuel A, Pfeuffer J, et al. Investigation of the initial dip in fMRI at 7 Tesla. NMR Biomed. 2001;14:408-412.

5 Janz C, Speck O, Hennig J. Time-resolved measurements of brain activation after a short visual stimulus: new results on the physiological mechanisms of the cortical response. NMR Biomed. 1997;10:222-229.

6 Janz C, Schmitt C, Speck O, et al. Comparison of the hemodynamic response to different visual stimuli in single-event and block stimulation fMRI experiments. J Magn Reson Imaging. 2000;12:708-714.

7 Haxby JV, Guntupalli JS, Connolly AC, et al. A common, high-dimensional model of the representational space in human ventral temporal cortex. Neuron 2011;72:404-416.

8 Adolphs R, Tranel D, Damasio H, et al. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 1994;372:669-672.

9 Young AW, Aggleton JP, Hellawell DJ, et al. Face processing impairments after amygdalotomy. Brain 1995;118:15-24.

10 Moeller S, Yacoub E, Olman CA, et al. Multiband multislice GE-EPI at 7 tesla, with 16-fold acceleration using partial parallel imaging with application to high spatial and temporal whole-brain fMRI. Magn Reson Med. 2010;63:1144-1153.

11 Malonek D, Grinvald A. Interactions between electrical activity and cortical microcirculation revealed by imaging spectroscopy: implications for functional brain mapping. Science 1996;272:551-554.

12 Schwartz CE, Wright CI, Shin LM, et al. Differential amygdalar response to novel versus newly familiar neutral faces: a functional MRI probe developed for studying inhibited temperament. Biol Psychiatry 2003;53:854-862.

13 Geissberger N, Tik M, Sladky R, et al. Reproducibility of amygdala activation in facial emotion processing at 7T. Neuroimage 2020;211:116585.

14 Waraczynski M. Toward a systems-oriented approach to the role of the extended amygdala in adaptive responding. Neurosci Biobehav Rev. 2016;68:177-194.
