3158

Automatic analysis of carotid vessel wall in MR black blood images using custom convolutional trajectories

1Paul C. Lauterbur Research Center for Biomedical Imaging, Shenzhen Institute of Advanced Technology, Chinese Academy of Sciences, Shenzhen, China, 2College of Software, Xinjiang University, Xinjiang, China, 3Department of Electronic Engineering, Tsinghua University, Beijing, China, 4Department of Radiology, Peking University Shenzhen Hospital, Beijing, China

Synopsis

Atherosclerotic plaque is a major cause of ischemic stroke. Some arterial morphological features obtained from MR vessel wall images show great potential for identifying high-risk plaques. Deep learning has been applied to the automatic segmentation of vessel walls so that these features can be measured accurately and efficiently. However, the accuracy of existing segmentation methods is not yet high enough for practical clinical use. This study proposes a new segmentation framework with custom convolutional trajectories for automatic segmentation of the arterial vessel wall; the framework improved the accuracy of vessel wall segmentation.

INTRODUCTION

Atherosclerotic plaque is a major cause of ischemic stroke [1]. Some arterial morphological features obtained from MR vessel wall images show great potential for identifying high-risk plaques [2-3]. Deep learning has been applied to the automatic segmentation of vessel walls so that these features can be measured accurately and efficiently [4]. However, the accuracy of existing segmentation methods is not yet high enough for practical clinical use, for three reasons: the arterial vessel wall and plaque are relatively small targets, MR vessel wall images have a low signal-to-noise ratio, and plaque gives the arterial vessel an irregular shape. In this work, a new segmentation framework with custom convolutional trajectories is proposed to improve the accuracy of vessel wall segmentation. The framework consists of two parts, the coordinate mapping and the optimised U-Net [5-6].

METHODS

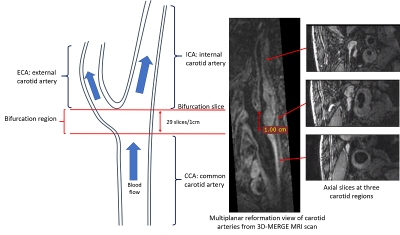

Data Sources: The carotid MR vessel wall image dataset of 50 subjects, with a spatial resolution of 0.35 mm³, provided by the Grand Challenge's Carotid Vessel Wall Segmentation Challenge, was used in this study. Data from 19 and 6 subjects were used for training and validation, respectively. Data from the remaining 25 subjects were used as a test set to assess the effectiveness of the proposed segmentation framework.

Data preprocessing: As shown in Figure 1, 720 or 640 2D slices were reconstructed for the internal, external, and common carotid arterial segments and the carotid bifurcation region. Of these, about 100 slices with good image quality were selected for annotation and cropped to 96 × 96 pixels centered on the vessel. This keeps the target vessel in the center of the input image while reducing the amount of irrelevant data that would lower the training efficiency of the model.
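The cropping step can be illustrated with a minimal sketch. The function name `crop_centered`, the zero-padding at image borders, and the list-of-rows image representation are assumptions made for illustration; the abstract does not describe how the vessel center is located or how borders are handled.

```python
def crop_centered(image, center_row, center_col, size=96):
    """Return a size x size patch centered on (center_row, center_col).

    `image` is a 2D list of rows. Pixels that fall outside the image are
    zero-padded (an assumption; border handling is not described in the text).
    """
    half = size // 2
    n_rows, n_cols = len(image), len(image[0])
    patch = []
    for r in range(center_row - half, center_row - half + size):
        row = []
        for c in range(center_col - half, center_col - half + size):
            inside = 0 <= r < n_rows and 0 <= c < n_cols
            row.append(image[r][c] if inside else 0)
        patch.append(row)
    return patch
```

With this indexing, the requested center pixel lands at position (48, 48) of the 96 × 96 patch.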

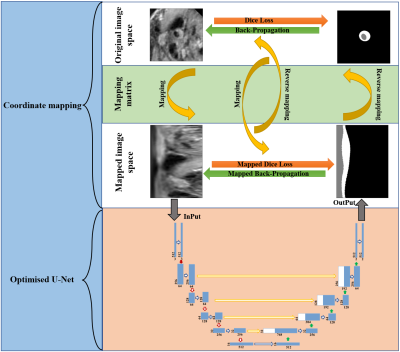

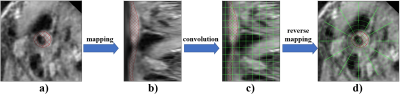

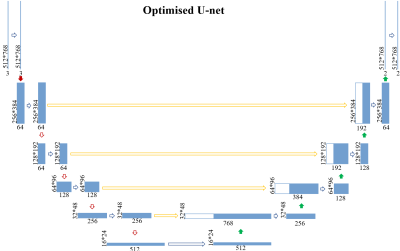

Segmentation framework: The main workflow of the proposed framework is shown in Figure 2. It consists of two parts, the coordinate mapping and the optimised U-Net. As shown in Figure 3, the coordinate mapping module transforms the original image space to the mapped image space through a mapping matrix. The mapping matrix used in this study was designed to implement a rotation-radial convolution trajectory that exploits the angular features of the vessel wall. The optimised U-Net module, shown in Figure 4, performs feature extraction and is based on the idea of shortcut connections in ResNet. By integrating the two modules, the framework customizes the convolution trajectory for vessel wall segmentation in the mapped image space while back-propagating the loss function to the original image space.
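The abstract does not give the mapping matrix itself. As a rough sketch of what a rotation-radial mapping could look like, the code below assumes a plain polar resampling about the image center: each row of the mapped image follows one ray, so a standard convolution moves radially along rows and rotates around the vessel along columns. The names `build_polar_mapping`, `bilinear`, and `map_image`, the grid sizes, and the bilinear interpolation are all illustrative assumptions, not the authors' implementation.

```python
import math

def build_polar_mapping(size=96, n_angles=96, n_radii=48):
    """Build a lookup table: mapping[a][r] = (row, col) in the original image.

    Assumes a polar grid centered on the image; ray `a` has angle
    2*pi*a/n_angles and sample `r` lies at a radius between 0 and the
    distance from the center to the image edge.
    """
    center = (size - 1) / 2.0
    mapping = []
    for a in range(n_angles):
        theta = 2.0 * math.pi * a / n_angles
        ray = []
        for r in range(n_radii):
            radius = center * r / (n_radii - 1)
            ray.append((center + radius * math.sin(theta),
                        center + radius * math.cos(theta)))
        mapping.append(ray)
    return mapping

def bilinear(image, row, col):
    """Sample a 2D list at a fractional position with bilinear interpolation."""
    n_rows, n_cols = len(image), len(image[0])
    # Clamp to the image so that rounding at the border cannot index outside it.
    row = min(max(row, 0.0), n_rows - 1.0)
    col = min(max(col, 0.0), n_cols - 1.0)
    r0, c0 = int(row), int(col)
    r1, c1 = min(r0 + 1, n_rows - 1), min(c0 + 1, n_cols - 1)
    fr, fc = row - r0, col - c0
    top = image[r0][c0] * (1 - fc) + image[r0][c1] * fc
    bottom = image[r1][c0] * (1 - fc) + image[r1][c1] * fc
    return top * (1 - fr) + bottom * fr

def map_image(image, mapping):
    """Resample `image` into the mapped (angle x radius) space."""
    return [[bilinear(image, row, col) for (row, col) in ray] for ray in mapping]
```

Under this assumed mapping, a ring-shaped wall in the original image becomes a vertical band in the mapped image, and pixels near the center are sampled more densely than pixels near the edge. Because bilinear sampling is differentiable with respect to the input intensities, a loss computed in the mapped space can in principle be propagated back to the original image space.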

Experimental process: The experiment had three stages. (1) Augmented pseudo-labeling: to obtain better training results, a coarse segmentation network was first trained and used to generate pseudo-labels for the unlabelled data. (2) Semi-supervised training: the model was trained on the pseudo-labeled dataset until the network converged. (3) Supervised training: the model was trained using only the original labels of the dataset until it converged.

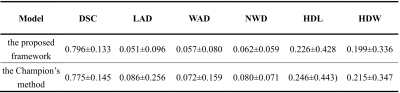

Evaluation indexes: Six indexes were used to evaluate the performance of the segmentation framework: Dice Similarity Coefficient (DSC), lumen area difference (LAD), wall area difference (WAD), normalized wall index difference (NWD), Hausdorff distance on lumen normalized by radius (HDL), and Hausdorff distance on wall normalized by radius (HDW).
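The building blocks of these indexes can be sketched as follows. This is an illustration under assumptions, not the challenge's official scoring code: the function names are hypothetical, masks are taken to be 2D lists of 0/1, contours are lists of (x, y) points, and the normalized wall index is assumed to be wall area divided by the sum of lumen and wall areas. The exact area and radius normalizations behind LAD, WAD, HDL, and HDW are not given in the text and are left out.

```python
import math

def dice(mask_a, mask_b):
    """Dice similarity coefficient between two binary masks (2D lists of 0/1)."""
    a = [v for row in mask_a for v in row]
    b = [v for row in mask_b for v in row]
    overlap = sum(1 for x, y in zip(a, b) if x and y)
    total = sum(1 for x in a if x) + sum(1 for y in b if y)
    # Two empty masks are treated as a perfect match (a convention, assumed here).
    return 2.0 * overlap / total if total else 1.0

def hausdorff(points_a, points_b):
    """Symmetric Hausdorff distance between two non-empty point sets."""
    def directed(src, dst):
        # Largest distance from a point in src to its nearest point in dst.
        return max(min(math.dist(p, q) for q in dst) for p in src)
    return max(directed(points_a, points_b), directed(points_b, points_a))

def normalized_wall_index(lumen_area, wall_area):
    """Wall area as a fraction of the total vessel area (assumed definition)."""
    return wall_area / (lumen_area + wall_area)
```

A higher DSC indicates better overlap, whereas the difference and distance indexes are better when lower.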

RESULTS

The proposed segmentation framework achieves a DSC of 0.796, higher than the DSC of 0.775 achieved by the Champion of the Grand Challenge's Carotid Vessel Wall Segmentation Challenge. The vessel wall segmentation results of the proposed framework and the comparison with the Champion's results are summarized in Table 1. The proposed framework achieves a LAD of 0.051, WAD of 0.057, NWD of 0.062, HDL of 0.226, and HDW of 0.199. These values are all lower than those of the Champion, which achieves a LAD of 0.086, WAD of 0.072, NWD of 0.080, HDL of 0.246, and HDW of 0.215. Together, the evaluation indexes indicate that the arterial vessel wall segmentation obtained by the proposed framework is closer to the manual segmentation standard.

DISCUSSION

The proposed framework achieves more accurate segmentation than the Grand Challenge's Champion. This may be due to two advantages of the data mapping in the proposed framework. First, the mapped vessel images have a more regular layout: the blood vessel, vessel wall, and other voxels are arranged sequentially along the horizontal direction. Second, the voxels in the center of the image receive a higher weighting, which improves the efficiency of network optimization. In addition, the proposed framework provides the functionality of a custom convolutional trajectory. However, the DSC value in this study is slightly lower than that reported in the existing literature. The main reason is that the training dataset in this study is far smaller than those of other studies, containing only about 1800 slices for model training.

CONCLUSION

The proposed framework customizes the convolution trajectory for automatic segmentation of the arterial vessel wall according to the ring-shaped distribution of the arteries. It thereby achieves a better vessel wall segmentation result than the Champion of the carotid vessel wall segmentation challenge and improves the accuracy of vessel wall segmentation in MR black blood images.

Acknowledgements

The study was partially supported by the National Natural Science Foundation of China (81830056), the Key Laboratory for Magnetic Resonance and Multimodality Imaging of Guangdong Province (2020B1212060051), the Shenzhen Basic Research Program (JCYJ20180302145700745 and KCXFZ202002011010360), and the Guangdong Innovation Platform of Translational Research for Cerebrovascular Diseases.

References

[1] Turan, Tanya N., et al. Treatment of atherosclerotic intracranial arterial stenosis. Stroke 40.6 (2009): 2257-2261.

[2] Saba L, Yuan C, et al. Carotid Artery Wall Imaging: Perspective and Guidelines from the ASNR Vessel Wall Imaging Study Group and Expert Consensus Recommendations of the American Society of Neuroradiology. AJNR Am J Neuroradiol. 2018;39(2):E9-E31.

[3] Mandell DM, Mossa-Basha M, et al. Intracranial Vessel Wall MRI: Principles and Expert Consensus Recommendations of the American Society of Neuroradiology. AJNR Am J Neuroradiol. 2017;38(2):218-229.

[4] Shi F, Yang Q, et al. Intracranial Vessel Wall Segmentation Using Convolutional Neural Networks. IEEE Trans Biomed Eng. 2019;66(10):2840-2847.

[5] Ronneberger, Olaf, et al. U-net: Convolutional networks for biomedical image segmentation. International Conference on Medical image computing and computer-assisted intervention. Springer, Cham, 2015.

[6] Kaiming He, Xiangyu Zhang, et al. Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

Figures