3104

Deep Learning for Veterinary MRI: Automated Detection of Intervertebral Disc Herniation in Pet Dogs

1College of Health Science and Environmental Engineering, Shenzhen Technology University, Shenzhen, China, 2College of Applied Sciences, Shenzhen University, Shenzhen, China, 3Shenzhen GoldenStone Medical Technology Co., Ltd, Shenzhen, China

Synopsis

Automated detection of intervertebral disc (IVD) herniation in animal MRI may facilitate veterinary diagnosis, yet it is rarely studied because training data are scarce and inter-breed variation is large. Here, we constructed a dog spinal cord MRI dataset with bounding box annotations of herniated discs and conducted experiments using several well-known deep learning models. We demonstrated that automated detection of animal IVD herniation was feasible and that, in general, two-stage detection models such as Faster R-CNN outperformed one-stage models.

Introduction

MRI is widely considered the gold standard for diagnosing intervertebral disc (IVD) disease in both human and veterinary patients1. Although deep learning based detection methods have been shown to facilitate the diagnosis of human IVD herniation2-4, they have been studied much less in animals, particularly pet animals5-6. Besides the lack of training data, challenges arise because the anatomical structures of pet animals vary significantly among species and breeds. On the other hand, automated or computer-aided detection can be particularly desirable for veterinary MRI, as many veterinarians may not have received specialized training in reading MRI. In this study, we first constructed a dog spinal cord MRI dataset with bounding box annotations of herniated discs, then trained and compared several well-known deep learning models, e.g., Faster R-CNN, Cascade R-CNN and YOLOv5 7-8. Without special preprocessing or training tricks, we demonstrated that automated detection of animal IVD herniation was feasible, with the best average precision over 0.72. Moreover, we found that two-stage detection models may be more suitable than one-stage models in this application.

Methods

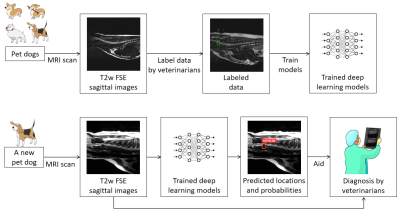

As illustrated in Figure 1, the MRI data used in this study were acquired from pet dogs of various breeds, and the herniated IVDs were identified and labeled with bounding boxes by an experienced veterinary radiologist. Approximately half of the dataset (training dataset) was used for training deep learning models and the rest (test dataset) for evaluation. The trained model can later be employed to facilitate diagnosis in new animals with potential IVD herniation. Details are provided as follows.

Dataset construction

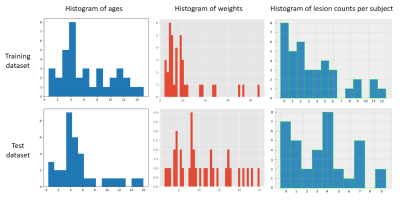

The MRI data were acquired using a T2-weighted fast spin-echo sequence on a superconducting animal MRI scanner (1.5T vPetMR, GSMED) at a local veterinary hospital, with written consent obtained from the owners. In total, the dataset consisted of 229 sagittal images of 74 dog subjects (breeds: 21; ages: max=16Y, min=0.33Y, mean=5.94Y, std=3.80Y; weights: max=44kg, min=1.5kg, mean=10.34kg, std=8.41kg; 15 subjects had no IVD herniation found). As shown in Figure 2, 111 images of 38 dog subjects constituted the training dataset (breeds: 15; ages: max=15Y, min=0.67Y, mean=6.15Y, std=3.74Y; weights: max=44kg, min=2kg, mean=10.53kg, std=9.77kg; 8 subjects had no IVD herniation found), and 118 images of 36 dog subjects constituted the test dataset (breeds: 15; ages: max=16Y, min=0.33Y, mean=5.74Y, std=3.84Y; weights: max=25kg, min=1.5kg, mean=10.82kg, std=6.4kg; 7 subjects had no IVD herniation found). There was no subject overlap between the training dataset and the test dataset.
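A subject-level split of this kind can be sketched as follows. This is a minimal illustration rather than the procedure actually used: the function name `split_by_subject`, the random assignment, the seed, and the toy image and subject IDs are all assumptions made for the example. The point it shows is that subjects, not individual images, are assigned to each side, so that several images of the same dog can never fall on both sides of the split.

```python
import random
from collections import defaultdict


def split_by_subject(image_to_subject, train_fraction=0.5, seed=0):
    """Split image IDs into train/test so that no subject appears in both.

    `image_to_subject` maps an image ID to the ID of the animal it came from.
    (Hypothetical helper; the abstract does not describe how subjects were assigned.)
    """
    # Group image IDs under the subject they belong to.
    images_by_subject = defaultdict(list)
    for image_id, subject_id in image_to_subject.items():
        images_by_subject[subject_id].append(image_id)

    # Shuffle subjects (not images) with a fixed seed for reproducibility.
    subjects = sorted(images_by_subject)
    random.Random(seed).shuffle(subjects)

    n_train = round(len(subjects) * train_fraction)
    train_subjects = set(subjects[:n_train])

    # Every image follows its subject into exactly one of the two sets.
    train, test = [], []
    for subject_id, image_ids in images_by_subject.items():
        (train if subject_id in train_subjects else test).extend(image_ids)
    return sorted(train), sorted(test)


# Toy example with made-up IDs: 6 dogs, 2 sagittal images each.
toy = {f"dog{d}_img{i}": f"dog{d}" for d in range(6) for i in range(2)}
train_ids, test_ids = split_by_subject(toy)
```

Splitting at the image level instead would risk placing near-identical slices of one animal in both sets, which tends to inflate test metrics.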

Model training

Faster R-CNN, Cascade R-CNN, Guided Anchoring, Cascade-RPN, AutoAssign and YOLOv5 models were trained and compared. The first four are two-stage detection methods proposed between 2015 and 2019, whereas the last two are one-stage detection methods, both proposed in 2020. We used three-fold cross-validation to find the optimal training hyper-parameters for each model. All training was done using the MMDetection framework, except for YOLOv5 (github.com/ultralytics/yolov5).
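The three-fold hyper-parameter search can be sketched in a framework-independent way as below. This is only an illustration under stated assumptions: the helper names `k_fold_by_subject` and `select_hyperparameters`, the subject-level fold assignment, the learning-rate candidates, and the placeholder scoring function are all hypothetical. In practice the scoring function would train a detector on the training folds and return its validation AP.

```python
def k_fold_by_subject(subject_ids, k=3):
    """Yield (train_subjects, val_subjects) pairs for k-fold cross-validation.

    Folds are built over subjects (an assumption of this sketch) so that
    images of one animal never appear in both the train and validation folds.
    """
    subjects = sorted(set(subject_ids))
    folds = [subjects[i::k] for i in range(k)]  # round-robin assignment
    for i in range(k):
        val = folds[i]
        train = [s for j, fold in enumerate(folds) if j != i for s in fold]
        yield train, val


def select_hyperparameters(candidates, subject_ids, score_fn, k=3):
    """Return the candidate with the highest mean validation score over k folds."""
    def mean_score(params):
        scores = [score_fn(params, train, val)
                  for train, val in k_fold_by_subject(subject_ids, k)]
        return sum(scores) / len(scores)
    return max(candidates, key=mean_score)


# Toy usage: 38 training subjects and three hypothetical learning rates.
subjects = [f"dog{i}" for i in range(38)]
candidates = [{"lr": 0.02}, {"lr": 0.01}, {"lr": 0.005}]


def dummy_score(params, train, val):
    # Placeholder for "train on `train`, compute AP on `val`".
    return -abs(params["lr"] - 0.01)


best = select_hyperparameters(candidates, subjects, dummy_score)
```

Only the training dataset enters this loop; the test dataset stays untouched until the final evaluation.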

Evaluation

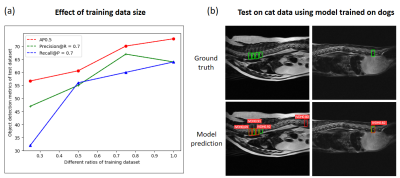

For each model, precision/recall (PR) curves were plotted at an intersection over union (IoU) threshold of 0.5, and average precision (AP) was calculated as the area under the PR curve using the companion open-source toolkit of Padilla et al.9. For all models, we used test time augmentation (TTA) at evaluation, which increased AP slightly, by 1 to 2 percent. To study the effect of training data size, we also trained with only a fraction of the training data at different ratios (1/4, 1/2, and 3/4). In addition, to challenge the generalization ability of our model, we performed a preliminary cross-species test on data from two cats without any further training of the models.
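For readers unfamiliar with these metrics, the computation of AP at an IoU threshold of 0.5 can be sketched in plain Python as below. This is not the toolkit's code: the function names, the `(x1, y1, x2, y2)` box format, the greedy matching of each detection to its best-overlapping ground truth, and the all-point interpolation of the PR curve are assumptions of this sketch, and the toy boxes are made up.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truths, iou_thr=0.5):
    """Area under the PR curve (all-point interpolation).

    detections:    list of (image_id, score, box)
    ground_truths: dict mapping image_id -> list of boxes
    """
    n_gt = sum(len(boxes) for boxes in ground_truths.values())
    matched = {img: [False] * len(b) for img, b in ground_truths.items()}

    # Visit detections from most to least confident and mark each as TP or FP.
    tp_flags = []
    for img, _score, box in sorted(detections, key=lambda d: -d[1]):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truths.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        # A ground-truth box can be claimed by only one detection.
        if best_iou >= iou_thr and not matched[img][best_j]:
            matched[img][best_j] = True
            tp_flags.append(True)
        else:
            tp_flags.append(False)

    # Cumulative precision and recall after each detection.
    precisions, recalls, tp = [], [], 0
    for rank, is_tp in enumerate(tp_flags, start=1):
        tp += is_tp
        precisions.append(tp / rank)
        recalls.append(tp / n_gt if n_gt else 0.0)

    # Make precision monotonically non-increasing, then integrate over recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Toy example: two images with one herniated disc each, three detections.
gts = {"a": [(0, 0, 10, 10)], "b": [(0, 0, 10, 10)]}
dets = [("a", 0.9, (0, 0, 10, 10)),     # exact match
        ("a", 0.8, (50, 50, 60, 60)),   # no overlap with any ground truth
        ("b", 0.7, (1, 1, 10, 10))]     # IoU = 0.81
ap = average_precision(dets, gts)
```

Images from subjects without herniation contribute no ground-truth boxes, so any detection on them counts as a false positive and lowers precision.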

Results

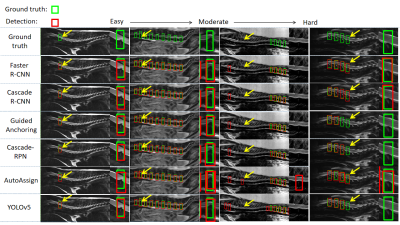

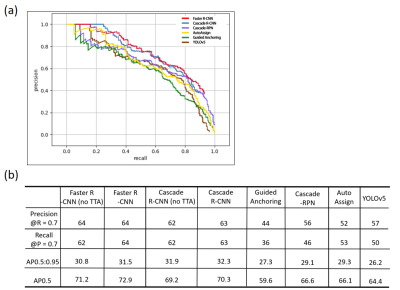

All trained deep learning models were able to detect potential IVD herniation, but with varying accuracy. For severe IVD herniation (Figure 3, easy example), all models provided accurate detection results. For more challenging cases (Figure 3, moderate and hard examples), some models started to show false positives (FPs) and false negatives (FNs), but in general two-stage methods (Faster R-CNN, Cascade R-CNN, Guided Anchoring, and Cascade-RPN) were better than one-stage methods (AutoAssign and YOLOv5). As shown in Figure 4, Faster R-CNN and Cascade R-CNN were consistently the best two models in terms of AP and other metrics. Figure 5a shows that the training data size played an important role, as all metrics correlated strongly with it, which suggests that more data may lead to even better results. Figure 5b shows the preliminary test on cat data using Faster R-CNN: there were errors, as expected, but most herniated discs were correctly identified without excessive false positives.

Discussion and Conclusion

Despite the large differences in breed, age, and weight among the dog subjects, we demonstrated that automated detection of IVD herniation based on deep learning methods was feasible. In recent years, the computer vision community has focused more on developing one-stage detection methods. However, in medical applications, two-stage methods may still prevail owing to their accuracy advantage, as demonstrated in Figure 4. We did not use dedicated preprocessing or training tricks, but strategies such as cropping irrelevant areas, strong data augmentation and model ensembling should all help. To improve cross-breed and cross-species performance (Figure 5b), it is possible to jointly train a backbone and then apply fine-tuning. With much room to explore, deep learning aided diagnosis may provide powerful support for veterinary MRI in the future.

Acknowledgements

No acknowledgement found.

References

1. Ray-Offor O D, Wachukwu C M, Onubiyi C C B. Intervertebral disc herniation: prevalence and association with clinical diagnosis[J]. Nigerian Journal of Medicine, 2016, 25(2): 107-112.

2. Mbarki W, Bouchouicha M, Frizzi S, et al. A novel method based on deep learning for herniated lumbar disc segmentation[C]//2020 4th International Conference on Advanced Systems and Emergent Technologies (IC_ASET). IEEE, 2020: 394-399.

3. Ma S, Huang Y, Che X, et al. Faster RCNN‐based detection of cervical spinal cord injury and disc degeneration[J]. Journal of Applied Clinical Medical Physics, 2020, 21(9): 235-243.

4. Cui P, Guo Z, Xu J, et al. Tissue recognition in spinal endoscopic surgery using deep learning[C]//2019 IEEE 10th International Conference on Awareness Science and Technology (iCAST). IEEE, 2019: 1-5.

5. Fenn J, Olby N J, Moore S A, et al. Classification of intervertebral disc disease[J]. Frontiers in veterinary science, 2020, 7: 707.

6. Kraitchman D L, Gainsburg L, et al. Veterinary diagnostic MRI at an academic medical center: tips, tricks, and pathological confirmation[C]//Proc. Intl. Soc. Mag. Reson. Med. 23, 2015.

7. Ren S, He K, Girshick R, et al. Faster r-cnn: Towards real-time object detection with region proposal networks[J]. Advances in neural information processing systems, 2015, 28: 91-99.

8. Cai Z, Vasconcelos N. Cascade r-cnn: High quality object detection and instance segmentation[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2019.

9. Padilla R, Passos W L, Dias T L B, et al. A comparative analysis of object detection metrics with a companion open-source toolkit[J]. Electronics, 2021, 10(3): 279.

Figures

Figure 1. Schematic illustration of the developed method. T2-weighted images of 74 pet dogs were acquired using 1.5T scanners, and the herniated discs were identified and annotated with bounding boxes by one veterinary radiologist. Approximately half of the dataset was used for training deep learning models to predict the locations and likelihoods of suspected IVD herniation, and the rest for evaluation. The trained model can later be employed to facilitate diagnosis in new animals with potential IVD herniation.

Figure 2. The age, weight, and lesion count histograms of the training and test datasets. There were large age and weight variations among the subjects, but the distributions were similar in the training dataset and the test dataset.

Figure 3. Typical detection results of Faster R-CNN, Cascade R-CNN, Guided Anchoring, Cascade-RPN, AutoAssign and YOLOv5 models. The green box represents the ground truth, and the red box represents the detection. The yellow arrow points to the area we magnified. The deep learning models we used accurately detected severe IVD herniation (the left two columns). In hard cases (the right two columns), there were some false positives (FPs) and false negatives (FNs). In general, two-stage methods performed better.

Figure 4. (a) The precision/recall (PR) curves of Faster R-CNN, Cascade R-CNN, Guided Anchoring, Cascade-RPN, AutoAssign and YOLOv5 models. The best performing models were Faster R-CNN (red line) and Cascade R-CNN (blue line). (b) The object detection metrics of Faster R-CNN, Cascade R-CNN, Guided Anchoring, Cascade-RPN, AutoAssign and YOLOv5 models.

Figure 5. (a) The effect of training data size on detection accuracy, suggesting that collecting more data should improve model performance in the future. (b) The preliminary detection results of the Faster R-CNN model on cat MRI data. The data from two cats were tested without retraining the model.