3064

Interpretable Meningioma Grading and Segmentation with Multiparametric Deep Learning

1 Electrical and Electronic Engineering, Yonsei University, Seoul, Korea, Republic of

2 Department of Radiology and Research Institute of Radiological Science and Center for Clinical Imaging Data Science, Yonsei University College of Medicine, Seoul, Korea, Republic of

3 Department of Radiology, Ewha Womans University College of Medicine, Seoul, Korea, Republic of

Synopsis

Preoperative prediction of meningioma grade is important because it influences treatment planning, including surgical resection and stereotactic radiosurgery strategy. The aim of this study was to establish a robust, interpretable multiparametric deep learning (DL) model for automatic noninvasive grading of meningiomas along with segmentation. We demonstrated that an interpretable multiparametric DL grading model combining T2-weighted and contrast-enhanced T1-weighted images can enable fully automatic grading of meningiomas along with segmentation.

Introduction

Meningiomas are the most common primary intracranial neoplasms in adults, comprising approximately one-third of all intracranial tumors [1]. Meningiomas display remarkable histopathologic and clinical heterogeneity; the majority of meningiomas (80%) are classified as low-grade (WHO grade 1; benign) and have an indolent clinical course [2]. Conversely, high-grade tumors (WHO grade 2, atypical; WHO grade 3, anaplastic) show aggressive biological behavior, a tendency to recur, and a poor prognosis [2]. MRI is the primary imaging modality for meningioma diagnosis and characterization, treatment planning, and monitoring of therapeutic effect [3,4,5]; however, the value of imaging for meningioma grading has not yet been fully appreciated because distinct imaging features are difficult to identify [6]. Moreover, although accurate lesion segmentation is crucial in meningioma diagnosis and treatment planning [7,8], manual segmentation of meningiomas is time-consuming, laborious, and subject to inter- and intrareader variability [8]. Thus, in this study, we propose a robust, fully automated deep learning (DL) model for grading meningiomas along with segmentation.

Methods

Patient Population: The institutional review board of Severance Hospital approved this retrospective study and waived the requirement for informed patient consent. We retrospectively reviewed consecutive meningioma cases with pathological confirmation and preoperative conventional MRI performed between February 2008 and September 2018. A total of 257 patients (mean age: 57.16 ± 12.83 years; 197 females and 60 males; low-grade: 162; high-grade: 95) were enrolled in the institutional training set. The external validation set, selected with identical criteria, consisted of 61 patients (mean age: 55.52 ± 15.02 years; 41 females and 20 males; low-grade: 46; high-grade: 15) from Ewha Womans University Mokdong Hospital, enrolled between January 2016 and December 2018.

MRI Protocol: In the institutional dataset, patients were scanned on 3.0 Tesla MRI units (Achieva/Ingenia, Philips Medical Systems). Imaging protocols included T2-weighted (T2) and contrast-enhanced T1-weighted (T1C) imaging. T1C images were acquired after administration of 0.1 mL/kg of gadolinium-based contrast material (Gadovist, Bayer). In the external validation set, patients were scanned on 1.5 or 3.0 Tesla MRI units (Avanto, Siemens; or Achieva/Ingenia, Philips Medical Systems). T1C images were acquired after administration of 0.1 mL/kg of gadolinium-based contrast material (Dotarem, Guerbet; or Gadovist, Bayer).

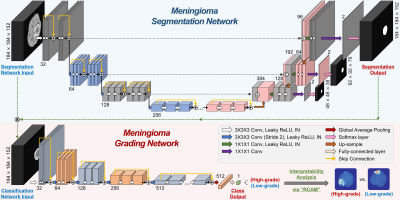

Deep Learning Architecture: A two-stage DL grading model for segmentation and classification was constructed based on a three-dimensional (3D) U-Net [9] and a 3D ResNet [10]. The overall architecture of the DL grading model is shown in Fig. 1. The model consists of two main networks: (1) a segmentation network that segments meningiomas from the multiparametric images, and (2) a classification network that predicts meningioma grade from the multiparametric tumor images segmented by the segmentation network. The DL grading model was implemented in Python using the PyTorch library.
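The hand-off between the two networks can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: we assume the segmentation network outputs a voxel-wise tumor probability map, that it is binarized at a hypothetical threshold of 0.5, and that the classifier receives the co-registered T2 and T1C volumes stacked as channels, masked to the predicted tumor and cropped to its bounding box. The function name `prepare_classifier_input` and its `threshold` and `margin` parameters are hypothetical; the abstract does not specify how the segmented tumor images are formed.

```python
import numpy as np


def prepare_classifier_input(t2, t1c, tumor_prob, threshold=0.5, margin=2):
    """Build a masked, cropped 2-channel tumor volume for the grading network.

    Hypothetical glue step between the segmentation and classification stages.
    t2, t1c:    co-registered 3D volumes of identical shape (D, H, W)
    tumor_prob: segmentation-network output in [0, 1], same shape
    Returns an array of shape (2, d, h, w), or None if no tumor is predicted.
    """
    if not (t2.shape == t1c.shape == tumor_prob.shape):
        raise ValueError("all inputs must share the same 3D shape")

    mask = tumor_prob >= threshold          # binarize the predicted segmentation
    if not mask.any():
        return None                         # nothing to classify

    # Stack the two sequences as channels and zero out non-tumor voxels.
    volume = np.stack([t2, t1c], axis=0) * mask[np.newaxis]

    # Bounding box of the predicted tumor, padded by `margin` voxels and clipped
    # to the volume extent.
    coords = np.argwhere(mask)
    lo = np.maximum(coords.min(axis=0) - margin, 0)
    hi = np.minimum(coords.max(axis=0) + margin + 1, mask.shape)
    return volume[:, lo[0]:hi[0], lo[1]:hi[1], lo[2]:hi[2]]
```

Cropping to the predicted tumor lets a 3D ResNet concentrate on the lesion; in practice the crop would usually also be resized or padded to the network's fixed input size, a step omitted here.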

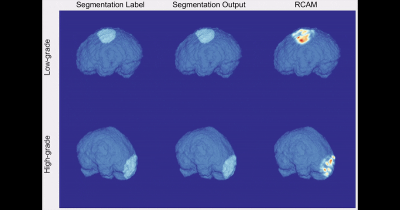

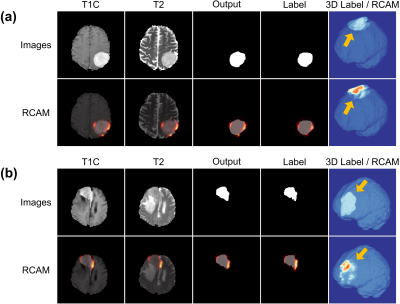

Interpretability of DL Grading Model: To interpret the classification network's inference and the features it uses for grading meningiomas, we used Relevance-weighted Class Activation Mapping (RCAM), which can generate high-resolution class activation maps [11]. We visualized RCAM heatmaps at the convolutional layer of the second level of the classification network. The relevance values calculated by RCAM can be interpreted as the contribution of each region to an output class (high-grade vs. low-grade) [11].
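The weighting step of RCAM can be sketched as follows, assuming the layer-wise relevance propagation (LRP) relevance maps for the target class at the chosen layer are already available (computing LRP itself is not shown). As we understand the Relevance-CAM formulation [11], each feature map A_k is weighted by its total relevance α_k = Σ R_k, and the map is ReLU(Σ_k α_k A_k). The function name `relevance_cam`, the max-normalization, and the nearest-neighbour upsampling to the input resolution are illustrative choices, not details given in this abstract.

```python
import numpy as np


def relevance_cam(activations, relevance, out_shape=None):
    """Relevance-weighted CAM from one 3D convolutional layer (sketch).

    activations: feature maps A_k of the chosen layer, shape (K, d, h, w)
    relevance:   LRP relevance R_k for the target class at the same layer,
                 same shape (assumed precomputed)
    out_shape:   optional input-volume shape for nearest-neighbour upsampling;
                 must be an integer multiple of (d, h, w)
    """
    # One weight per channel: total relevance assigned to that feature map.
    alpha = relevance.reshape(relevance.shape[0], -1).sum(axis=1)
    # Relevance-weighted sum of feature maps, keeping only positive evidence.
    cam = np.maximum(np.tensordot(alpha, activations, axes=(0, 0)), 0.0)
    if cam.max() > 0:
        cam = cam / cam.max()               # normalize to [0, 1] for display
    if out_shape is not None:
        if any(o % i for o, i in zip(out_shape, cam.shape)):
            raise ValueError("out_shape must be an integer multiple of the map shape")
        for axis, (n_out, n_in) in enumerate(zip(out_shape, cam.shape)):
            cam = np.repeat(cam, n_out // n_in, axis=axis)
    return cam
```

Because the weights come from relevance rather than from gradients, maps taken from a shallow, high-resolution layer (such as the second level used here) can still be class-discriminative.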

Statistical Analysis: The segmentation performance of the DL grading model was assessed using the Dice coefficient. Grading performance was evaluated in the institutional training set and validated in the external validation set. Predictive performance was quantified by calculating the area under the receiver operating characteristic curve (AUC), accuracy, sensitivity, and specificity. Statistical significance was set at p < 0.05.
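The metrics named above can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' analysis code: we assume binary masks are flattened to 0/1 sequences, that high grade (label 1) is the positive class, and that sensitivity and specificity are read at a hypothetical probability threshold of 0.5, since the abstract does not state the operating point. The AUC is computed as the Mann-Whitney probability that a high-grade case outscores a low-grade case; the confidence-interval method is not stated in the abstract and is not implemented here.

```python
from typing import Sequence, Tuple


def dice(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice = 2|P ∩ T| / (|P| + |T|) for flattened binary masks."""
    inter = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(pred) + sum(truth)
    return 1.0 if total == 0 else 2.0 * inter / total  # two empty masks agree


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """P(random positive scores above random negative); ties count one half."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def binary_metrics(scores: Sequence[float], labels: Sequence[int],
                   threshold: float = 0.5) -> Tuple[float, float, float]:
    """Accuracy, sensitivity, specificity with high grade (1) as positive."""
    preds = [1 if s >= threshold else 0 for s in scores]
    tp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 1)
    tn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 0)
    fp = sum(1 for p, y in zip(preds, labels) if p == 1 and y == 0)
    fn = sum(1 for p, y in zip(preds, labels) if p == 0 and y == 1)
    accuracy = (tp + tn) / len(labels)
    return accuracy, tp / (tp + fn), tn / (tn + fp)
```

For example, with toy scores `[0.9, 0.8, 0.3, 0.1]` and labels `[1, 0, 1, 0]`, three of the four positive/negative pairs are ranked correctly, giving an AUC of 0.75.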

Results

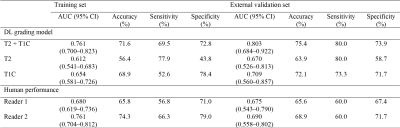

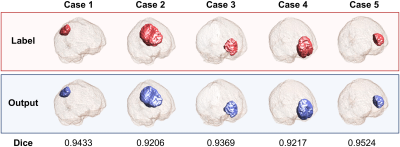

The T1C grading model showed a Dice coefficient (0.848) similar to that of the combined T2 and T1C grading model (0.846), whereas the T2 grading model showed a lower Dice coefficient (0.745). 3D visualizations of the ground-truth and DL segmentations are shown in Fig. 2.

In the external validation set, the combined T2 and T1C grading model showed the highest performance, with an AUC of 0.803 (95% confidence interval [CI]: 0.684–0.922) and accuracy, sensitivity, and specificity of 75.4%, 80.0%, and 73.9%, respectively (Table 1). The T1C grading model showed an AUC of 0.709 (95% CI: 0.560–0.857) and accuracy, sensitivity, and specificity of 72.1%, 73.3%, and 71.7%, respectively. The T2 grading model showed the lowest performance, with an AUC of 0.670 (95% CI: 0.526–0.813) and accuracy, sensitivity, and specificity of 63.9%, 80.0%, and 58.7%, respectively. Two human readers showed AUCs of 0.675 and 0.690 (95% CI: 0.543–0.790 and 0.558–0.802, respectively), accuracies of 65.6% and 68.9%, sensitivities of 60.0% and 60.0%, and specificities of 67.4% and 71.7%, respectively.
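As an arithmetic check, the reported external-validation percentages are consistent with the class sizes given in the Methods (15 high-grade, 46 low-grade). The true-positive and true-negative counts below are inferred from the reported percentages and are not reported in the abstract; "Reader 1" and "Reader 2" are hypothetical labels for the first and second reader in the order listed.

```python
N_HIGH, N_LOW = 15, 46  # external validation set class sizes (from the Methods)

# Reported results: (sensitivity, specificity, accuracy) in percent.
REPORTED = {
    "T2 + T1C": (80.0, 73.9, 75.4),
    "T1C": (73.3, 71.7, 72.1),
    "T2": (80.0, 58.7, 63.9),
    "Reader 1": (60.0, 67.4, 65.6),
    "Reader 2": (60.0, 71.7, 68.9),
}

# (true positives, true negatives) INFERRED from the percentages above.
INFERRED = {
    "T2 + T1C": (12, 34),
    "T1C": (11, 33),
    "T2": (12, 27),
    "Reader 1": (9, 31),
    "Reader 2": (9, 33),
}


def percentages(tp, tn):
    """Sensitivity, specificity, accuracy (%) rounded to one decimal place."""
    sens = 100 * tp / N_HIGH
    spec = 100 * tn / N_LOW
    acc = 100 * (tp + tn) / (N_HIGH + N_LOW)
    return round(sens, 1), round(spec, 1), round(acc, 1)


for name, (tp, tn) in INFERRED.items():
    match = percentages(tp, tn) == REPORTED[name]
    print(f"{name:9s} {percentages(tp, tn)} matches reported: {match}")
```

For the combined model, for instance, 12/15 = 80.0%, 34/46 = 73.9%, and (12 + 34)/61 = 75.4%, matching the reported values.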

Fig. 3 shows representative cases of low-grade and high-grade meningiomas that were correctly classified by the DL grading model. According to the RCAM analysis, the DL grading model relied on features of the tumor margin for classification: the corresponding RCAMs show that the model refers to the relatively smooth surface of the low-grade meningioma and the irregular surface of the high-grade meningioma. 3D visualizations of the ground-truth segmentations, DL segmentations, and RCAM analysis are presented in Fig. 4.

Conclusion

In conclusion, an interpretable multiparametric DL grading model combining T2 and T1C images can enable fully automatic grading of meningiomas along with segmentation.

Acknowledgements

* Yohan Jun and Yae Won Park contributed equally to this work.

** Dosik Hwang and Sung Soo Ahn are co-corresponding authors.

This research received funding from the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, Information and Communication Technologies & Future Planning (2020R1A2C1003886); Korea Health Technology R&D Project through the Korea Health Industry Development Institute, funded by the Ministry of Health & Welfare (HI21C1161); Basic Science Research Program through the NRF funded by the Ministry of Education (2020R1I1A1A01071648); Basic Science Research Program through the NRF funded by the Ministry of Science and ICT (2019R1A2B5B01070488); Brain Research Program through the NRF funded by the Ministry of Science, ICT & Future Planning (2018M3C7A1024734); Basic Research Program through the NRF funded by the MSIT (2021R1A4A1031437); and the Y-BASE R&E Institute, a Brain Korea 21 program, Yonsei University.

References

[1] Ostrom, Quinn T., et al. "CBTRUS statistical report: primary brain and other central nervous system tumors diagnosed in the United States in 2009–2013." Neuro-oncology 18.suppl_5 (2016): v1-v75.

[2] Kshettry, Varun R., et al. "Descriptive epidemiology of World Health Organization grades II and III intracranial meningiomas in the United States." Neuro-oncology 17.8 (2015): 1166-1173.

[3] Goldbrunner, Roland, et al. "EANO guidelines for the diagnosis and treatment of meningiomas." The Lancet Oncology 17.9 (2016): e383-e391.

[4] Huang, Raymond Y., et al. "Proposed response assessment and endpoints for meningioma clinical trials: report from the Response Assessment in Neuro-Oncology Working Group." Neuro-oncology 21.1 (2019): 26-36.

[5] Won, So Yeon, et al. "Quality assessment of meningioma radiomics studies: Bridging the gap between exploratory research and clinical applications." European Journal of Radiology 138 (2021): 109673.

[6] Nowosielski, Martha, et al. "Diagnostic challenges in meningioma." Neuro-oncology 19.12 (2017): 1588-1598.

[7] Wadhwa, Anjali, Anuj Bhardwaj, and Vivek Singh Verma. "A review on brain tumor segmentation of MRI images." Magnetic Resonance Imaging 61 (2019): 247-259.

[8] Harrison, Gillian, et al. "Quantitative tumor volumetric responses after Gamma Knife radiosurgery for meningiomas." Journal of Neurosurgery 124.1 (2016): 146-154.

[9] Ronneberger, Olaf, Philipp Fischer, and Thomas Brox. "U-net: Convolutional networks for biomedical image segmentation." International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, Cham, 2015.

[10] He, Kaiming, et al. "Deep residual learning for image recognition." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016.

[11] Lee, Jeong Ryong, et al. "Relevance-CAM: Your Model Already Knows Where To Look." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2021.

Figures

Figure 3. Representative cases of meningiomas that were correctly classified by both the deep learning (DL) grading model and the human readers. The Relevance-weighted Class Activation Map (RCAM) computed using the output mask is overlaid on the contrast-enhanced T1-weighted image (T1C), the T2-weighted image (T2), and the output mask, and the RCAM computed using the label mask is overlaid on the label mask. Three-dimensional visualizations of the label mask and RCAM are also provided. (a) Low-grade meningioma; (b) high-grade meningioma.