2850

Generalizable synthetic multi-contrast MRI generation using physics-informed convolutional networks

1Department of Radiotherapy, Division of Imaging and Oncology, UMC Utrecht, Utrecht, Netherlands, 2Computational Imaging Group for MR Diagnostics and Therapy, Center for Image Sciences, UMC Utrecht, Utrecht, Netherlands

Synopsis

Synthetic MRI aims to reconstruct multiple MRI contrasts from short measurements of tissue properties. Here, a generalizable, physics-informed, deep learning-based approach to synthetic MRI was investigated. Acquired data were mapped to effective quantitative parameter maps, here named q*-maps, which are fed into a physical signal model that synthesizes four contrast-weighted images. We demonstrated that MRI contrasts unseen during training could be synthesized from the q*-maps. The proposed method was benchmarked against a standard end-to-end deep learning approach. The two deep learning methods generated similar brain images for healthy subjects and for patients with different pathologies.

Introduction

Synthetic MRI aims to reconstruct multiple contrasts from short measurements of tissue properties, reducing examination time and patient discomfort compared to separate conventional acquisitions. For various brain pathologies, e.g. multiple sclerosis and ischemia, synthetic MRI can support diagnosis on a par with conventional contrasts1. However, synthetic T2-FLAIR (fluid-attenuated inversion recovery) contrasts are generally degraded by artifacts, e.g. a lack of lesion contrast, which may necessitate an additional conventional scan to confirm the diagnosis2.

We propose a physics-informed deep learning-based approach that maps a single five-minute, transient-state MRI acquisition with whole-brain coverage, originally designed for the quantitative MRI framework MR-STAT3,4, to effective quantitative maps (q*-maps), which are then fed into a signal model. By mapping to a physically interpretable latent space, we aim to make the approach more explainable and better able to generalize to unseen contrasts than an end-to-end deep learning-based approach.

Methods

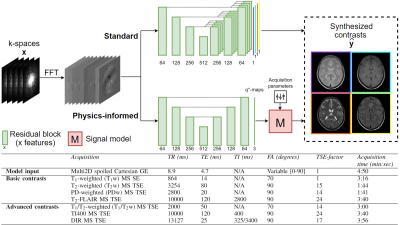

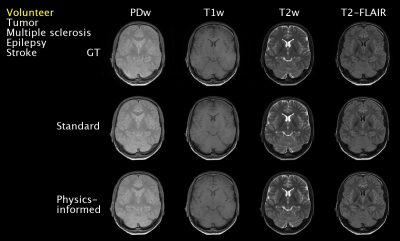

Dataset: Data from 48 subjects included in an IRB-approved study5 were used for training (38, of which 7 for validation) and testing (10). All subjects were imaged on a 3T scanner (Ingenia MR-RT, Philips, Best, The Netherlands) with a five-minute transient-state multi-2D spoiled gradient echo (GE) sequence with a slowly varying flip angle, preceded by a non-selective inversion pulse3,4, supplemented by four standard protocols (PDw, T1w, T2w, and T2-FLAIR) [Fig1]. The cohort consisted of volunteers (10) and patients with brain tumors (11), stroke (8), epilepsy (9), and multiple sclerosis (10), equally distributed over training, validation, and testing. One additional volunteer was scanned with extra protocols (T1/T2w, TI400, and DIR) to assess the generalizability of the q*-maps.

Deep learning framework: The real and imaginary components of the five 2D Fourier-transformed k-spaces (transient-state spoiled GE) were used as input. Conditional generative adversarial networks (GANs)6 were used for contrast synthesis. Our proposed approach, named “physics-informed”, mapped the input to a latent space interpreted as relaxation times (T1, T2) and proton density (PD), the so-called effective quantitative maps (q*-maps), which were then fed into a steady-state signal model (M) for contrast synthesis [Fig1]. We hypothesize that this approach may overcome current shortcomings of synthetic MRI and possibly generalize to the synthesis of unseen contrasts. The method is benchmarked against an end-to-end method similar to previous work7, named “standard”.
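To illustrate how q*-maps could be turned into weighted images, the sketch below implements a toy signal model in Python. It is an assumption and not the authors' model M: it uses textbook spin-echo and magnitude inversion-recovery equations (TE much shorter than TR, ideal pulses), and the protocol table `PROTOCOLS`, its TR/TE/TI values, the function `synthesize`, and the tissue values are all hypothetical. Because the contrast is set only by the sequence parameters, a contrast not used for training (here an inversion recovery with TI = 400 ms, assumed to resemble the TI400 protocol) is obtained by adding one more entry to the table.

```python
import numpy as np

# Hypothetical sequence parameters in ms; NOT the protocols used in the study.
PROTOCOLS = {
    "PDw": {"kind": "se", "tr": 3000.0, "te": 10.0},
    "T1w": {"kind": "se", "tr": 500.0, "te": 10.0},
    "T2w": {"kind": "se", "tr": 4000.0, "te": 100.0},
    "T2-FLAIR": {"kind": "ir", "tr": 10000.0, "te": 120.0, "ti": 2500.0},
    # Contrast not used for training: inversion recovery with TI = 400 ms.
    "TI400": {"kind": "ir", "tr": 3000.0, "te": 10.0, "ti": 400.0},
}


def synthesize(pd, t1, t2, protocol):
    """Map q*-maps (PD, and T1/T2 in ms) to one contrast-weighted image.

    Textbook spin-echo / magnitude inversion-recovery equations
    (TE << TR, ideal pulses) stand in for the signal model M.
    """
    p = PROTOCOLS[protocol]
    # Guard against zero relaxation times, e.g. in background voxels.
    t1 = np.maximum(np.asarray(t1, dtype=float), 1e-3)
    t2 = np.maximum(np.asarray(t2, dtype=float), 1e-3)
    pd = np.asarray(pd, dtype=float)
    e_tr = np.exp(-p["tr"] / t1)
    t2_decay = np.exp(-p["te"] / t2)
    if p["kind"] == "se":
        longitudinal = 1.0 - e_tr
    else:
        longitudinal = 1.0 - 2.0 * np.exp(-p["ti"] / t1) + e_tr
    # Magnitude image; PD scales every contrast, so PD = 0 nulls the voxel.
    return np.abs(pd * longitudinal * t2_decay)


# Illustrative values for white matter, CSF and a vessel voxel with PD = 0.
pd = np.array([0.7, 1.0, 0.0])
t1 = np.array([830.0, 4000.0, 1900.0])
t2 = np.array([70.0, 2000.0, 50.0])
images = {name: synthesize(pd, t1, t2, name) for name in PROTOCOLS}
```

Since PD multiplies every term, a voxel with PD = 0 is dark in all synthesized contrasts, which is consistent with the physics-informed network nulling vessel signal through the PD map.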

The networks consisted of a ResUNet8 generator and contrast-specific PatchGAN9 discriminators. The standard deep learning-based approach used a separate decoder for each of the four contrasts. The objective function consisted of an adversarial10 and a content loss term per contrast $$$i$$$, where the content loss was defined as:

$$\mathcal{L}_{\textit{cont, i}} = \lambda_{1}\|\hat{y}_i-y_i\|_{1}+\lambda_{2}\|\hat{y}_i-y_i\|_{2} + \lambda_{3} (1 - \operatorname{SSIM}(\hat{y}_i, y_i)) + \lambda_{4}\left\|\left(\phi(\hat{y}_i)-\phi(y_i)\right)\right\|_{2}$$

with generated contrasts $$$\hat{y}$$$, ground-truth contrasts $$$y$$$, $$$\phi$$$ the 14th convolutional layer of a pre-trained VGG-19 network11, and loss weights $$$\lambda$$$, which were optimized on the validation set based on perceptual similarity and the structural similarity index (SSIM)12. For both methods, training for 300 epochs with a batch size of 1 took ~20 hours on a GPU (Tesla P100, Nvidia).
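A minimal NumPy sketch of the content loss for one contrast, written term by term from the equation above, may make the four terms concrete. Two simplifications are assumptions: SSIM is computed over a single global window instead of the sliding window of the original SSIM definition, and the hypothetical `gradient_features` function stands in for the VGG-19 feature extractor $$$\phi$$$. The default weights of 1 are placeholders; in the study, the weights were tuned on the validation set. For training, the same loss would be written in a deep learning framework so that it can be differentiated.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM over the whole image (a simplification of the
    sliding-window SSIM)."""
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx**2 + my**2 + c1) * (vx + vy + c2)
    )


def gradient_features(img):
    """Hypothetical stand-in for phi (VGG-19 features): image gradients."""
    gy, gx = np.gradient(img)
    return np.stack([gx, gy])


def content_loss(y_hat, y, lambdas=(1.0, 1.0, 1.0, 1.0), phi=gradient_features):
    """Content loss for a single contrast i, one term per lambda."""
    l1, l2, l3, l4 = lambdas
    diff = y_hat - y
    return (
        l1 * np.sum(np.abs(diff))                 # ||y_hat - y||_1
        + l2 * np.linalg.norm(diff.ravel())       # ||y_hat - y||_2
        + l3 * (1.0 - global_ssim(y_hat, y))      # 1 - SSIM
        + l4 * np.linalg.norm((phi(y_hat) - phi(y)).ravel())  # feature term
    )
```

The norms are left unsquared and unnormalized, as written in the equation; a practical implementation would typically average over pixels so that the weights do not depend on image size.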

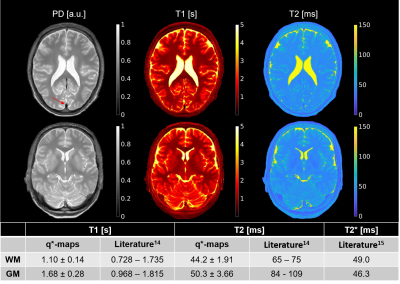

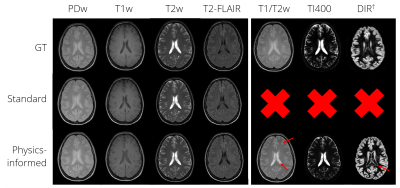

Evaluation: The q*-maps were segmented into gray and white matter using the SPM12 toolbox13, and the resulting values were compared to literature14,15. A feasibility test was performed on a volunteer to investigate whether the q*-maps enable the synthesis of unseen contrasts. For both methods, SSIM and peak signal-to-noise ratio (PSNR) were calculated against the conventional contrasts over all slices of the test subjects, excluding the outer ten slices due to high noise levels in the ground truth.
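The PSNR part of the evaluation could be sketched as follows. The sketch assumes that "the outer ten slices" means ten slices at each end of the volume (exposed as the hypothetical parameter `n_exclude`), that slices are stacked along axis 0, and that the PSNR peak is the dynamic range of each reference slice; the abstract does not specify these details. Per-slice SSIM could be computed analogously, e.g. with scikit-image's `structural_similarity`, and pooled in the same way.

```python
import numpy as np


def psnr(pred, ref):
    """PSNR in dB, using the dynamic range of the reference slice as peak."""
    data_range = ref.max() - ref.min()
    mse = np.mean((pred - ref) ** 2)
    return 10.0 * np.log10(data_range**2 / mse)


def per_slice_psnr(pred_vol, ref_vol, n_exclude=10):
    """PSNR for every slice along axis 0, skipping n_exclude slices at each end."""
    n_slices = ref_vol.shape[0]
    return np.array(
        [psnr(pred_vol[k], ref_vol[k]) for k in range(n_exclude, n_slices - n_exclude)]
    )


def pooled_mean_std(per_subject_scores):
    """Mean and standard deviation over the kept slices of all test subjects."""
    scores = np.concatenate(per_subject_scores)
    return scores.mean(), scores.std()


# Example: a 30-slice volume with a uniform error of 0.1 on a 0..1 range.
ref = np.zeros((30, 4, 4))
ref[:, 0, 0] = 1.0
pred = ref + 0.1
scores = per_slice_psnr(pred, ref)  # 10 slices kept, each at 20 dB
```

Pooling slices across subjects before taking mean and standard deviation matches the description "over all slices of the test subjects"; averaging per subject first would weight subjects equally instead.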

Results

T1 values fall within the wide range reported in literature, whereas T2 values are closer to reported T2* values owing to their effective definition [Fig2]. Small lesions in T2-FLAIR contrasts were captured, e.g. for epilepsy [Fig3]. However, some hyperintensities were missed, e.g. for multiple sclerosis. The physics-informed method learned to correctly null the signal in vessels by setting the PD in the q*-maps to zero [Fig2, Fig3]. The visual feasibility test suggested that the physics-informed model may be able to synthesize contrasts unseen during training [Fig4], although contrast differences can be observed in the CSF. The physics-informed method performed on par with the standard method for all contrasts. For example, for T2-FLAIR we obtained SSIM=$$$0.77\pm0.08$$$ (mean$$$\pm$$$std) and PSNR=$$$23.4\pm1.8$$$ dB for the physics-informed model, against SSIM=$$$0.76\pm0.08$$$ and PSNR=$$$22.9\pm2.2$$$ dB for the standard method [Fig5].

Discussion

The quantitative nature of the q*-maps has been demonstrated, and the limited feasibility test presented here suggests that q*-maps can synthesize contrasts unseen by the model. We conjecture that combining data-driven and physics-informed components can aid the synthesis of different contrasts from a single five-minute scan, but further validation is warranted, especially in pathological conditions. The physics-informed method generated weighted contrasts similar to those of the standard end-to-end approach. Despite the small dataset, a wide variety of pathologies could be synthesized. Previous work16 enabled adjusting the contrast with GANs during inference; our method aims to extend this ability to synthetic MRI by explicitly incorporating the physics through a physically interpretable latent space in the form of q*-maps.

Conclusion

In this work, a physics-informed deep learning-based framework for multi-contrast synthetic MRI was investigated. The concept of physically interpretable q*-maps was introduced, and preliminary results suggest that q*-maps enable the synthesis of MRI contrasts unseen during training. The synthesized brain images were similar to those of a standard end-to-end deep learning-based approach.

Acknowledgements

We thank Anja van der Kolk, Beyza Koktas, and Sarah Jacobs for their contributions to the acquisition of the data. We gratefully acknowledge the support of NVIDIA Corporation with the donation of the Quadro RTX 5000 GPU used for prototyping part of this research.

References

1 I Blystad, JB Warntjes, O Smedby, et al. “Synthetic MRI of the brain in a clinical setting,” Acta Radiologica, 2012.

2 LN Tanenbaum, AJ Tsiouris, AN Johnson, et al. “Synthetic MRI for clinical neuroimaging: Results of the magnetic resonance image compilation (MAGiC) prospective, multicenter, multireader trial,” American Journal of Neuroradiology, 2017.

3 A Sbrizzi, O van der Heide, M Cloos, et al. “Fast quantitative MRI as a nonlinear tomography problem,” Magnetic Resonance Imaging, 2018.

4 O van der Heide, A Sbrizzi, PR Luijten, et al. “High-resolution in vivo MR-STAT using a matrix-free and parallelized reconstruction algorithm,” NMR in Biomedicine, 2020.

5 S Mandija, F D'Agata, H Liu, et al. “A five-minute multi-parametric high-resolution whole-brain MR-STAT exam: first results from a clinical trial,” ISMRM 2020.

6 I Goodfellow, J Pouget-Abadie, M Mirza, et al. “Generative adversarial nets,” Advances in neural information processing systems, 2014.

7 K Wang, M Doneva, T Amthor, et al. “High Fidelity Direct-Contrast Synthesis from Magnetic Resonance Fingerprinting in Diagnostic Imaging,” ISMRM 2020.

8 Z Zhang, Q Liu, and Y Wang, “Road Extraction by Deep Residual U-Net,” IEEE Geoscience and Remote Sensing Letters, 2018.

9 P Isola, JY Zhu, T Zhou, et al. “Image-to-image translation with conditional adversarial networks,” in Proceedings - 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017.

10 X Mao, Q Li, H Xie, et al. “Least Squares Generative Adversarial Networks,” IEEE International Conference on Computer Vision (ICCV), 2017.

11 K Simonyan and A Zisserman, “Very deep convolutional networks for large-scale image recognition,” in 3rd International Conference on Learning Representations, ICLR 2015.

12 Z Wang, AC Bovik, HR Sheikh, et al. “Image quality assessment: From error visibility to structural similarity,” IEEE Transactions on Image Processing, 2004.

13 WD Penny, KJ Friston, JT Ashburner, et al. Statistical parametric mapping: the analysis of functional brain images. Elsevier, 2011.

14 J Zavala Bojorquez, S Bricq, C Acquitter, et al. “What are normal relaxation times of tissues at 3 T?” 2016.

15 G Krüger and GH Glover, “Physiological noise in oxygenation-sensitive magnetic resonance imaging,” Magnetic Resonance in Medicine, 2001.

16 J Denck, J Guehring, A Maier, et al. “MR-contrast-aware image-to-image translations with generative adversarial networks,” International Journal of Computer Assisted Radiology and Surgery, 2021.

Figures