2744

Clinical Implementation of Novel PACS-based Deep Learning Glioma Segmentation Algorithm

¹Yale School of Medicine, New Haven, CT, United States, ²Visage Imaging, Berlin, Germany, ³Ulm University, Ulm, Germany, ⁴University of Düsseldorf, Düsseldorf, Germany

Synopsis

Tumor segmentation is a laborious process that slows the production of data for developing classification and prediction algorithms. We present a novel PACS-based workflow for deep learning-based auto-segmentation of gliomas that allows annotated images to be generated during the clinical workflow. We developed a U-Net auto-segmentation algorithm that is natively embedded in PACS and trained on the BraTS dataset. The algorithm was subsequently retrained on a tertiary hospital dataset and used to generate new segmentations, allowing 440 gliomas to be labeled over a three-month period. This approach allows experts in the field to build large, labeled datasets in real time.

Body of the Abstract

INTRODUCTION

Gliomas are the most common primary brain malignancy and are classified according to histopathologic and molecular World Health Organization (WHO) criteria into grades 1-4.[1,2] Glioblastoma is the most aggressive glioma, with a median overall survival of 15 months.[3] While machine learning (ML) has made substantial progress in predicting glioma grade and molecular subtype from radiomic analysis of MR images, a rate-limiting step in developing predictive algorithms is the generation of ground-truth tumor segmentations, which are a prerequisite for volumetric measurement and radiomic feature extraction.[4]

Manual contouring and segmentation of gliomas is laborious and user-dependent, which hinders both the integration of volumetric assessment into clinical practice and the generation of large, annotated datasets. There is therefore a critical need for automatic segmentation algorithms that are accurate, reproducible, and available within the tools already used in clinical practice. Such tools would also allow new algorithms to be translated into practice by retraining them in batches on each hospital's annotated data as it is generated in real time.

METHODS

We pretrained a deep learning algorithm (U-Net) on 1251 tumors from the multi-institutional BraTS 2021 dataset (baseline), which includes segmentations of low- and high-grade gliomas. The baseline algorithm was evaluated on an internal test dataset from Yale New Haven Health (YNHH). We subsequently retrained the U-Net on 5 batches of new YNHH data (50 tumors per batch) to auto-segment the whole, core, and necrotic portions of gliomas on Visage 7 (Visage Imaging, Inc., San Diego, CA). Our gradual training pipeline consisted of 5 steps, each involving further training on a dataset of 50 segmented tumors; after all 5 batches, the model was finally retrained on the complete YNHH dataset. Throughout training, we explored several modifications: increasing dropout, incrementally reducing the learning rate, freezing the encoder, and freezing both the encoder and decoder.
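The batch-wise retraining can be outlined as a schedule. The sketch below is a hypothetical plan generator, not the authors' code. It assumes that the 250 YNHH cases are split into consecutive batches of 50, that each step fine-tunes on one new batch, and that a final step uses all cases (the abstract does not state whether batches were cumulative). The base learning rate, the halving factor, and the single `freeze_encoder` flag are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class RetrainStep:
    """One step of a hypothetical incremental retraining plan."""
    name: str
    case_ids: list
    learning_rate: float
    freeze_encoder: bool


def build_retraining_schedule(case_ids, batch_size=50, base_lr=1e-4,
                              lr_decay=0.5, freeze_encoder=True):
    """Create one fine-tuning step per batch, then a final full-dataset step.

    The learning rate is multiplied by `lr_decay` after every step, mirroring
    the 'incrementally reducing learning rate' modification (values assumed).
    """
    steps = []
    lr = base_lr
    for start in range(0, len(case_ids), batch_size):
        batch = case_ids[start:start + batch_size]
        steps.append(RetrainStep(f"batch_{len(steps) + 1}", batch, lr, freeze_encoder))
        lr *= lr_decay
    # Final pass over the entire hospital dataset.
    steps.append(RetrainStep("full_dataset", list(case_ids), lr, freeze_encoder))
    return steps


if __name__ == "__main__":
    cases = [f"YNHH_{i:03d}" for i in range(250)]  # hypothetical case IDs
    for step in build_retraining_schedule(cases):
        print(step.name, len(step.case_ids), f"lr={step.learning_rate:.2e}")
```

In an actual training loop, each step would load the previous checkpoint, set the optimizer learning rate to `learning_rate`, and disable gradient updates for the encoder weights when `freeze_encoder` is true.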

We established a segmentation workflow within PACS that allowed us to automatically segment and manually correct the internal YNHH dataset over a period of three months (Figure 1). In this pipeline, de-identified images are transferred from the Visage Clinical PACS to the Visage Research PACS server (AI Accelerator), where they are auto-segmented in 3D by the U-Net. The resulting segmentations can be manually modified and copied to all sequences (i.e., FLAIR, T1, T1ce, T2, ADC, and SWI) available within a patient jacket; 3D registration across the sequences is applied automatically using the built-in Visage tools.

PyRadiomics was natively embedded into PACS; once activated, it automatically extracts features from the segmentations on all sequences. The extracted features, 3D segmentations, and MR images were then exported from PACS in open file formats (JSON and NIfTI) for future reference and analysis. We performed a test-retest analysis of the feature extraction pipeline by extracting features both within PACS (PyRadiomics) and outside PACS (a Python script). The accuracy of the AI algorithm was measured with Dice scores (DSC) between the automatic segmentations and the manually modified segmentations.
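The DSC between an automatic segmentation A and its manually corrected version B is 2|A∩B| / (|A| + |B|). The sketch below computes it per tumor region from two label maps stored as flat lists of voxel labels. It assumes the BraTS 2021 label convention (1 = necrotic core, 2 = peritumoral edema, 4 = enhancing tumor), with whole tumor = {1, 2, 4}, core = {1, 4}, and necrosis = {1}. How the YNHH labels map onto these regions is an assumption, and real volumes would be read from the exported NIfTI files rather than typed in by hand.

```python
# Hypothetical region definitions following the BraTS 2021 label convention.
REGIONS = {
    "whole": {1, 2, 4},
    "core": {1, 4},
    "necrotic": {1},
}


def dice(mask_a, mask_b):
    """Dice similarity coefficient between two binary masks (sequences of bools)."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    # Convention: two empty masks agree perfectly.
    return 1.0 if total == 0 else 2.0 * intersection / total


def regional_dice(auto_labels, corrected_labels, regions=REGIONS):
    """DSC for each tumor region between automatic and manually corrected label maps."""
    scores = {}
    for name, labels in regions.items():
        auto_mask = [v in labels for v in auto_labels]
        corrected_mask = [v in labels for v in corrected_labels]
        scores[name] = dice(auto_mask, corrected_mask)
    return scores


if __name__ == "__main__":
    # Toy 10-voxel label maps (flattened); real data would come from NIfTI volumes.
    auto = [0, 2, 2, 4, 4, 1, 1, 0, 0, 0]
    corrected = [0, 2, 2, 2, 4, 4, 1, 1, 0, 0]
    print(regional_dice(auto, corrected))
```

Treating two empty masks as a perfect match avoids a division by zero for cases without necrosis, but it is a convention and should be reported as such.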

RESULTS

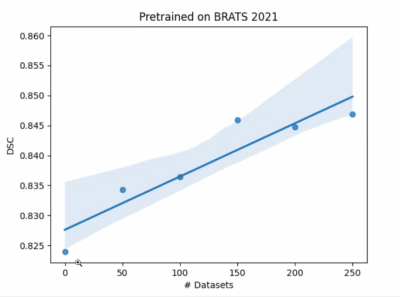

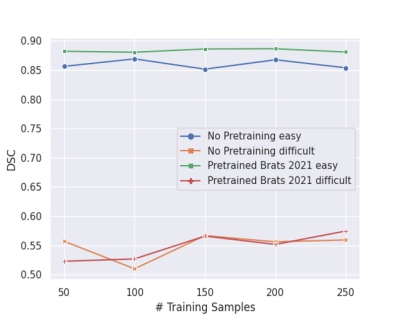

The baseline U-Net was trained on 1251 tumors from the BraTS 2021 dataset and evaluated on an internal test dataset of 35 gliomas from YNHH. The internal dataset used for the gradual retraining of the U-Net consisted of 250 gliomas divided into 5 batches of 50 tumors each. The test-retest analysis of the feature extraction pipeline initially showed an error of <2% (Figure 2A), which dropped to zero once the PyRadiomics versions were matched (Figure 2B). The baseline U-Net trained on BraTS 2021 achieved a Dice score of ≈0.82, which gradually improved to ≈0.84 through incremental training (Figure 3). We also grouped the data into ‘easy’ cases (higher DSC) and ‘difficult’ cases (lower DSC) and showed that specific training is needed to improve the Dice scores of both categories (Figure 4). Subsequent analysis showed that the ‘difficult’ cases consisted of motion-degraded images.
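The test-retest comparison can be expressed as a per-feature relative difference between the two extractions. The sketch below is an illustration only. It assumes that each extraction was exported as a JSON object that maps feature names to values, that non-numeric entries (such as version strings or diagnostics) are skipped, and that the difference is scaled by the larger absolute value, which is one of several reasonable definitions. The feature names and values in the usage example are hypothetical.

```python
import json


def feature_discrepancies(features_a, features_b):
    """Relative difference (%) for every numeric feature present in both extractions."""
    diffs = {}
    for name in sorted(features_a.keys() & features_b.keys()):
        a, b = features_a[name], features_b[name]
        # Skip booleans and non-numeric entries such as version strings.
        if isinstance(a, bool) or isinstance(b, bool):
            continue
        if not isinstance(a, (int, float)) or not isinstance(b, (int, float)):
            continue
        scale = max(abs(a), abs(b))
        diffs[name] = 0.0 if scale == 0 else 100.0 * abs(a - b) / scale
    return diffs


def max_discrepancy(json_a, json_b):
    """Largest per-feature percent difference between two JSON feature exports."""
    diffs = feature_discrepancies(json.loads(json_a), json.loads(json_b))
    return max(diffs.values(), default=0.0)


if __name__ == "__main__":
    in_pacs = '{"original_firstorder_Mean": 101.0, "original_shape_VoxelVolume": 5000}'
    outside = '{"original_firstorder_Mean": 100.0, "original_shape_VoxelVolume": 5000}'
    print(f"max discrepancy: {max_discrepancy(in_pacs, outside):.2f}%")
```

A maximum discrepancy of exactly zero corresponds to the matched-version result; a small non-zero value flags features that should be inspected for version or parameter mismatches.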

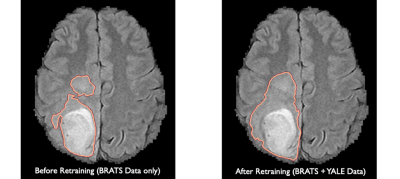

Figure 5 shows an example of a brain tumor segmentation generated before incremental retraining (BraTS data only) and after incremental retraining (BraTS and YNHH data). We noticed that signal intensity contrasts were less pronounced on segmentations generated after retraining, resulting in initial segmentations that are closer to what a neuroradiologist would segment in daily clinical practice.

DISCUSSION

An artificial intelligence (AI)-based workflow including auto-segmentation and radiomic feature extraction was integrated into our PACS platform. The workflow is fast, with a total computation time of under ten seconds for running both tools, and is easily accessible through buttons integrated into the workstation interface. The quality of the generated segmentations can be improved through incremental training of the algorithm before implementation in the hospital's clinical PACS. The availability of such PACS-integrated tools can help build and process large datasets, allow longitudinal tracking of tumor volume, and generate regions of interest (ROIs) for developing classification algorithms.
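Longitudinal volume tracking follows directly from a segmentation: the volume is the voxel count multiplied by the voxel size. The sketch below assumes a binary mask stored as a flat sequence and a voxel spacing in millimetres, as would be read from a NIfTI header. The function names and example values are hypothetical.

```python
def tumor_volume_ml(mask, spacing_mm):
    """Volume of a binary mask in millilitres.

    mask: flat sequence of 0/1 (or bool) voxel values.
    spacing_mm: (dx, dy, dz) voxel size in millimetres.
    """
    dx, dy, dz = spacing_mm
    voxel_mm3 = dx * dy * dz
    n_voxels = sum(1 for v in mask if v)
    return n_voxels * voxel_mm3 / 1000.0  # 1 mL = 1000 mm^3


def percent_change(baseline_ml, follow_up_ml):
    """Relative change in tumor volume between two time points."""
    if baseline_ml == 0:
        raise ValueError("baseline volume must be non-zero")
    return 100.0 * (follow_up_ml - baseline_ml) / baseline_ml


if __name__ == "__main__":
    # Hypothetical 1 mm isotropic masks at baseline and follow-up.
    baseline = tumor_volume_ml([1] * 20000 + [0] * 500, (1.0, 1.0, 1.0))
    follow_up = tumor_volume_ml([1] * 25000 + [0] * 500, (1.0, 1.0, 1.0))
    print(baseline, follow_up, percent_change(baseline, follow_up))
```

Because both time points are measured from the same type of segmentation, consistent auto-segmentation quality matters as much as absolute accuracy for tracking change.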

CONCLUSION

We demonstrate that clinical implementation of segmentation algorithms in neuroradiology practice is feasible through a PACS-integrated workflow combined with batch-based retraining on the hospital's own data. This workflow allows real-time generation of large, annotated datasets of brain tumors.

Acknowledgements

We would like to thank Richard Bronen, Sandra Abi Fadel, and Ichiro Ikuta for their assistance in double-checking some of our initial segmentations.

References

1. Ostrom QT, Cioffi G, Gittleman H, Patil N, Waite K, Kruchko C, et al. CBTRUS Statistical Report: Primary Brain and Other Central Nervous System Tumors Diagnosed in the United States in 2012-2016. Neuro Oncol. 2019;21(Suppl 5):v1-v100. doi: 10.1093/neuonc/noz150. PubMed PMID: 31675094; PubMed Central PMCID: PMC6823730.

2. Louis DN, Perry A, Reifenberger G, von Deimling A, Figarella-Branger D, Cavenee WK, et al. The 2016 World Health Organization Classification of Tumors of the Central Nervous System: a summary. Acta Neuropathol. 2016;131(6):803-20. doi: 10.1007/s00401-016-1545-1. PubMed PMID: 27157931.

3. Tran B, Rosenthal MA. Survival comparison between glioblastoma multiforme and other incurable cancers. J Clin Neurosci. 2010;17(4):417-21. doi: 10.1016/j.jocn.2009.09.004. Web of Science: WOS:000276014600001.

4. Lotan E, Jain R, Razavian N, Fatterpekar GM, Lui YW. State of the Art: Machine Learning Applications in Glioma Imaging. AJR Am J Roentgenol. 2019;212:26-37. doi: 10.2214/ajr.18.20218.

Figures