2720

Deep learning-based motion correction for Semisolid MT and CEST imaging

¹Johns Hopkins University, Baltimore, MD, United States

Synopsis

Conventional semisolid magnetization transfer contrast (MTC) and chemical exchange saturation transfer (CEST) MRI studies typically acquire a series of images at multiple RF saturation frequencies. Furthermore, quantitative MTC and CEST imaging techniques based on MR fingerprinting (MRF) require a range of values for multiple RF saturation parameters (e.g., B1, saturation time). These multiple saturation acquisitions lead to long scan times, which makes in vivo imaging vulnerable to motion. Motion correction is difficult because image intensity varies between acquisitions. Herein, we propose a deep learning-based motion correction technique for conventional Z-spectra and MRF data.

Introduction

Conventional semisolid magnetization transfer contrast (MTC) and chemical exchange saturation transfer (CEST) MRI typically employ a series of images acquired at multiple RF saturation frequencies [1,2]. Furthermore, quantitative MTC and CEST imaging techniques based on MR fingerprinting (MRF) vary several RF saturation parameters over a range of values to generate unique signal evolutions for different tissue properties [3,4]. These multiple saturation acquisitions lead to long scan times, which makes in vivo imaging vulnerable to motion. The large dynamic changes in image intensity caused by varying RF saturation parameters make motion correction with conventional intensity-based image registration methods very challenging [5,6]. Motion correction of saturated images acquired near the water resonance is even more difficult because their signal intensity is inherently very low, too low to support reliable correction of motion artifacts. Herein, we propose a deep learning-based motion correction technique that addresses these challenges for conventional Z-spectrum and MRF data.

Methods

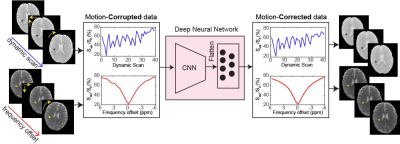
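The training set described in this section was built by corrupting acquired image series with random rigid-body motion (translations within ±2 mm, rotations within ±1°). A minimal NumPy sketch of such a corruption step follows. It is an illustration under stated assumptions, not the authors' implementation. Assumed here: every saturation frame receives its own independent transform (the abstract does not say how motion was distributed across frames), rotations are about the volume centre, the voxel size `voxel_mm` is a free parameter, and nearest-neighbour resampling stands in for whatever interpolation was actually used. The function names are hypothetical.

```python
import numpy as np


def random_rigid_transform(rng, max_shift_mm=2.0, max_rot_deg=1.0):
    """Random 3D rigid-body transform as a 4x4 homogeneous matrix.

    Translations (mm) and rotation angles about x, y, z (degrees) are drawn
    uniformly from [-max, +max], matching the ranges quoted in the abstract.
    """
    shift = rng.uniform(-max_shift_mm, max_shift_mm, size=3)
    ax, ay, az = np.deg2rad(rng.uniform(-max_rot_deg, max_rot_deg, size=3))
    cx, sx = np.cos(ax), np.sin(ax)
    cy, sy = np.cos(ay), np.sin(ay)
    cz, sz = np.cos(az), np.sin(az)
    rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    transform = np.eye(4)
    transform[:3, :3] = rz @ ry @ rx
    transform[:3, 3] = shift
    return transform


def apply_rigid(volume, transform, voxel_mm=1.0):
    """Resample a 3D `volume` under `transform` (nearest neighbour, zero fill).

    Assumption: rotation about the volume centre and isotropic voxels of size
    `voxel_mm`. Each output voxel x pulls its value from source R^T (x - t).
    """
    shape = np.array(volume.shape)
    center = (shape - 1) / 2.0
    grid = np.indices(volume.shape).reshape(3, -1).T      # (n_voxels, 3)
    out_mm = (grid - center) * voxel_mm                    # output coords in mm
    rot, shift = transform[:3, :3], transform[:3, 3]
    src_mm = (out_mm - shift) @ rot                        # row-wise R^T (x - t)
    src = np.rint(src_mm / voxel_mm + center).astype(int)  # nearest source voxel
    inside = np.all((src >= 0) & (src < shape), axis=1)
    out = np.zeros(volume.size, dtype=volume.dtype)
    out[inside] = volume[tuple(src[inside].T)]
    return out.reshape(volume.shape)


def corrupt_series(series, rng, voxel_mm=1.0):
    """Apply an independent random rigid transform to every frame.

    `series` has shape (n_frames, nx, ny, nz), e.g. one frame per saturation
    offset or per MRF dynamic. Assumption: motion is independent per frame.
    """
    return np.stack([
        apply_rigid(frame, random_rigid_transform(rng), voxel_mm)
        for frame in series
    ])


rng = np.random.default_rng(0)
clean = rng.random((25, 16, 16, 8))    # 25 offsets: +6 to -6 ppm, 0.5 ppm steps
corrupted = corrupt_series(clean, rng, voxel_mm=1.0)
print(corrupted.shape)                  # (25, 16, 16, 8)
```

Pairs of corrupted and original series would then serve as network input and training target. For smoother resampling, `scipy.ndimage.affine_transform` with `order=1` could replace the nearest-neighbour lookup.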

A convolutional neural network (CNN)-based deep learning model (Fig. 1) was implemented to remove motion artifacts from the Z-spectrum (Z = Ssat/S0 as a function of saturation frequency) and MTC-MRF data. The network consists of four convolutional layers followed by four fully connected layers; each convolutional layer has 256 filters, and each fully connected layer has 256 neurons. The network was trained on a simulated motion-corrupted dataset generated by applying 3D translations and rotations to Z-spectral images (10 healthy volunteers and 15 tumor patients) and MTC-MRF images (5 healthy volunteers and 4 tumor patients). Motion parameters were drawn randomly within ±2 mm for translation and ±1° for rotation.

Z-spectra were acquired using a fat-suppressed TSE sequence with an RF saturation time of 2 s, a B1 of 1.5 or 2 μT, and saturation frequency offsets from 6 to -6 ppm at 0.5 ppm intervals. The MTC-MRF schedules were the same as those used in a previous study [4]. All human subjects were scanned at 3T after informed consent was obtained in accordance with IRB requirements.

CEST images were analyzed using the MT ratio asymmetry (MTRasym) method. Water and semisolid MTC parameters were quantified using a fully connected neural network. MTC images (1 - Zref, where Zref is the reference signal modeled from the free water pool and the semisolid macromolecule pool) at ±3.5 ppm were synthesized by inserting the water and MTC tissue parameters and the scan parameters into the Bloch-McConnell equations. APT and NOE images were calculated as the difference between Zref and the experimental data at 3.5 ppm and -3.5 ppm, respectively. Motion correction performance was evaluated using the structural similarity index measure (SSIM) and the voxel-wise cross correlation (CC) along the frequency or dynamic scan dimension.

Results and discussion
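The Z-spectra, MTRasym spectra, and MTC, APT, and NOE images compared in this section follow from the definitions named in the Methods. The NumPy sketch below assumes the 25-point offset list of the Z-spectral acquisition (+6 to -6 ppm in 0.5 ppm steps) and a nearest-offset lookup. Zref is passed in as an input because its Bloch-McConnell synthesis from fitted tissue parameters is not reproduced here. The MTRasym sign convention Z(-Δω) - Z(+Δω) is the common one for APT at +3.5 ppm rather than something stated in the abstract, and the function names are hypothetical.

```python
import numpy as np

# Saturation offsets for the Z-spectra: +6 to -6 ppm in 0.5 ppm steps (25 points).
OFFSETS_PPM = np.linspace(6.0, -6.0, 25)


def z_spectrum(s_sat, s0):
    """Z = Ssat / S0, with offsets along the last axis and one S0 per voxel."""
    return s_sat / s0[..., None]


def z_at(z, ppm, offsets=OFFSETS_PPM):
    """Z value at the sampled offset closest to `ppm` (nearest-offset lookup)."""
    return z[..., int(np.argmin(np.abs(offsets - ppm)))]


def mtr_asym(z, ppm, offsets=OFFSETS_PPM):
    """MTRasym(ppm) = Z(-ppm) - Z(+ppm)."""
    return z_at(z, -ppm, offsets) - z_at(z, ppm, offsets)


def mtc_image(zref):
    """MTC contrast 1 - Zref (Zref assumed to come from a Bloch-McConnell model)."""
    return 1.0 - zref


def apt_noe(z, zref_pos, zref_neg, offset=3.5, offsets=OFFSETS_PPM):
    """APT = Zref(+offset) - Z(+offset); NOE = Zref(-offset) - Z(-offset)."""
    apt = zref_pos - z_at(z, offset, offsets)
    noe = zref_neg - z_at(z, -offset, offsets)
    return apt, noe


z = np.full(25, 0.8)
z[5], z[19] = 0.5, 0.6       # Z(+3.5 ppm) and Z(-3.5 ppm)
print(mtr_asym(z, 3.5))      # approximately 0.1
```

Keeping Zref as an explicit input makes clear that the APT and NOE maps depend on the quality of the fitted water and MTC parameters, which is why motion that corrupts the fit also propagates into these synthesized images.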

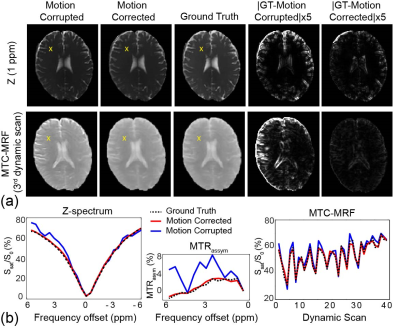

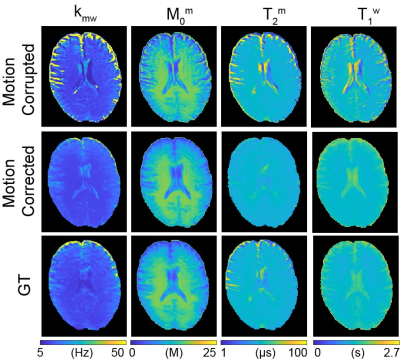

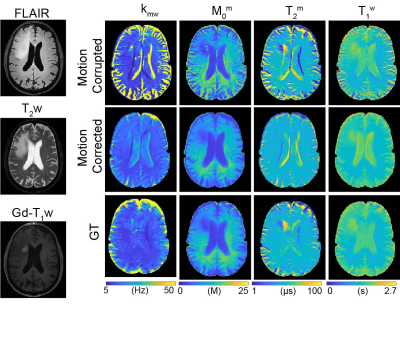

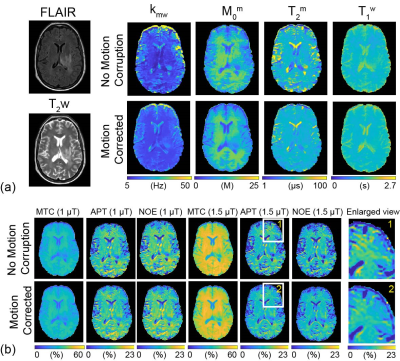
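The SSIM and voxel-wise CC values reported below can be approximated with a sketch like the following. The abstract does not specify the SSIM window size, constants, or intensity range, so this NumPy version assumes a single global window with the commonly used K1 = 0.01 and K2 = 0.03; the standard locally windowed SSIM is available as `skimage.metrics.structural_similarity` in scikit-image. CC is assumed to be the Pearson correlation of each voxel's signal along the frequency or dynamic dimension, averaged over voxels, so a reported percentage would correspond to 100 × the score. Function names are hypothetical.

```python
import numpy as np


def global_ssim(x, y, data_range=None, k1=0.01, k2=0.03):
    """Single-window SSIM computed over the whole image.

    Simplification: standard SSIM averages this expression over local
    (e.g. Gaussian) windows; here one window covers the full image.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if data_range is None:
        data_range = max(x.max(), y.max()) - min(x.min(), y.min())
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx**2 + my**2 + c1) * (x.var() + y.var() + c2)
    return num / den


def voxelwise_cc(a, b, axis=-1):
    """Pearson correlation of each voxel's signal along `axis`, averaged.

    `axis` is the frequency-offset (Z-spectrum) or dynamic (MRF) dimension.
    Voxels with zero variance in either input are skipped.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a = a - a.mean(axis=axis, keepdims=True)
    b = b - b.mean(axis=axis, keepdims=True)
    num = (a * b).sum(axis=axis)
    den = np.sqrt((a**2).sum(axis=axis) * (b**2).sum(axis=axis))
    valid = den > 0
    return float(np.mean(num[valid] / den[valid]))


rng = np.random.default_rng(0)
truth = rng.random((16, 16, 25))                          # (x, y, offsets)
noisy = truth + 0.05 * rng.standard_normal(truth.shape)
print(global_ssim(noisy[..., 2], truth[..., 2]))          # one offset image
print(voxelwise_cc(noisy, truth))                         # along offsets
```

SSIM compares a single image (one offset or one dynamic) spatially, whereas CC checks whether each voxel's spectrum or fingerprint keeps its shape, which is the property that matters for MTRasym analysis and MRF parameter estimation.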

Fig. 2a shows motion-corrupted, motion-corrected, and ground-truth (GT) images of Z(1 ppm) and MTC-MRF (3rd dynamic), together with the corresponding difference images. As shown in the difference images, Z-spectra, MTRasym spectra, and MTC-MRF profiles, the effect of simulated motion was significantly reduced after processing the data through the deep-learning framework. SSIM and CC increased significantly after motion correction (SSIM: from 94.2% to 97.2% for Z(1 ppm) and from 90.3% to 97.8% for MTC-MRF (3rd dynamic); CC: from 88.7% to 97.7% and from 96.6% to 99.8%, respectively). Motion artifacts are clearly visible in saturation transfer and relaxation parameter maps obtained with MRF from a representative healthy volunteer (Fig. 3). As expected, they are most prominent at contrast edges, i.e., at the border between gray and white matter and around the lateral ventricles. As shown in Fig. 2, motion during MTC-MRF acquisition may alter the MRF profile, resulting in incorrect estimation of tissue parameters in the MRF reconstruction. The deep-learning motion correction framework was further tested using MTC-MRF images from two tumor patients. First, motion was simulated in the MTC-MRF images and tissue parameter maps were generated (Fig. 4). The motion-corrected, averaged tissue parameter maps had significantly higher SSIM (78.2% to 84.2%) and CC (95.9% to 99.7%). Second, the deep-learning motion correction was applied to data from a tumor patient without any simulated motion (Fig. 5). As shown in the water and semisolid MTC parameter maps, overall image quality was significantly improved after motion correction. Reduced motion effects are also evident in the synthesized MTC, APT, and NOE images.

Conclusions

A deep learning-based motion correction method for semisolid MT and CEST imaging was proposed and validated in vivo for Z-spectral and 3D MTC-MRF acquisitions. Unlike existing registration methods, the deep-learning motion correction operates along the temporal (or frequency-offset) dimension, which improves the accuracy of Z-spectra and tissue parameter maps.

Acknowledgements

This work was supported in part by grants from the National Institutes of Health.

References

1. van Zijl PCM, Lam WW, Xu J, Knutsson L, Stanisz GJ. Magnetization Transfer Contrast and Chemical Exchange Saturation Transfer MRI. Features and analysis of the field-dependent saturation spectrum. Neuroimage 2018;168:222-241.

2. Zhou J, Heo HY, Knutsson L, van Zijl PCM, Jiang S. APT-weighted MRI: Techniques, current neuro applications, and challenging issues. J Magn Reson Imaging 2019;50(2):347-364.

3. Perlman O, Herz K, Zaiss M, Cohen O, Rosen MS, Farrar CT. CEST MR-Fingerprinting: Practical considerations and insights for acquisition schedule design and improved reconstruction. Magn Reson Med 2020;83(2):462-478.

4. Kang B, Kim B, Schär M, Park H, Heo HY. Unsupervised Learning for Magnetization Transfer Contrast MR Fingerprinting (MTC-MRF): Application to Chemical Exchange Saturation Transfer (CEST) and Nuclear Overhauser Enhancement (NOE) Imaging. Magn Reson Med 2020. doi:10.1002/mrm.28573.

5. Zaiss M, Herz K, Deshmane A, Kim M, Golay X, Lindig T, Bender B, Ernemann U, Scheffler K. Possible artifacts in dynamic CEST MRI due to motion and field alterations. J Magn Reson 2019;298:16-22.

6. Zhang Y, Heo HY, Lee DH, Zhao X, Jiang S, Zhang K, Li H, Zhou J. Selecting the reference image for registration of CEST series. J Magn Reson Imaging 2016;43(3):756-761.
