2686

SynthStrip: skull stripping for any brain image

1Martinos Center for Biomedical Imaging, Boston, MA, United States, 2Department of Radiology, Harvard Medical School, Boston, MA, United States, 3Computer Science and Artificial Intelligence Lab, Massachusetts Institute of Technology, Cambridge, MA, United States

Synopsis

The removal of non-brain signal from MR data is an integral component of neuroimaging streams. However, popular skull-stripping utilities are typically tailored to isotropic T1-weighted scans and tend to fail, sometimes catastrophically, on images with other MRI contrasts or stack-of-slices acquisitions that are common in the clinic. We propose SynthStrip, a flexible tool that produces highly accurate brain masks across a landscape of neuroimaging data with widely varying contrast and resolution. We implement our method by leveraging anatomical label maps to synthesize a broad set of training images, optimizing a robust convolutional network agnostic to MRI contrast and acquisition scheme.

Authorship

*Sharing senior authorship.

Introduction

Whole-brain extraction, or skull-stripping, is a critical processing step in many widely used brain MRI analysis pipelines1,2. Such frameworks rely on algorithms that need non-brain voxels removed from the data, both for comparability across images and to discard irrelevant information that may distract from the specific task. For example, non-brain voxels tend to impede the reliability of non-linear brain registration, a fundamental component of atlas-based segmentation and an array of other analyses.

While a variety of classical algorithms have been proposed, these are usually tailored to acquisitions with near-isotropic resolution and T1-weighted (T1w) contrast. This makes them less reliable for scans such as fast spin-echo3 (FSE) stacks of thick slices, which are routinely acquired in the clinic and come in a range of MRI contrasts like T2-weighted (T2w), fluid-attenuated (FLAIR), or post-Gadolinium. Deep-learning algorithms have gained tremendous popularity for computer-vision tasks, but these methods are typically only accurate for image types available at training4, making reliable skull stripping across MRI sequences challenging with a single model.

We address these shortcomings by introducing SynthStrip, a universal brain-extraction tool that can be deployed successfully on a wide variety of brain images. We achieve this by training SynthStrip solely with synthetic data encompassing a deliberately unrealistic range of anatomies, acquisition parameters, and artifacts. We demonstrate its viability and compare against popular baselines for a diverse testset of T1w, FLAIR, and proton-density (PD) contrasts, as well as clinical scans with thick, high-resolution FSE slices and low-resolution EPI.

Method

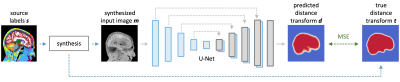

We train a deep neural network on a vast landscape of images synthesized on the fly from a set of anatomical segmentations. At each optimization step, we start with a segmentation $$$s$$$ from which we generate a new label map by applying a random deformation. Building on previous work5, we synthesize a gray-scale image $$$m$$$ from the transformed segmentation by sampling corresponding voxel intensities from a random Gaussian distribution for each label and applying random smoothing, noise, cropping, downsampling, and intensity bias (Figure 1).

For the input image $$$m$$$, a U-Net6 $$$h$$$ predicts a signed distance transform $$$d$$$, which represents the minimum distance to the skull boundary at each voxel. Distances are positive within the brain and negative outside, facilitating the extraction of a brain mask from $$$d$$$ at test-time. We train $$$h$$$ with a fully supervised approach, minimizing the mean squared error between the predicted ($$$d$$$) and ground-truth ($$$t$$$) distance transforms (Figure 2). Specifically, at each training step, we compute the target transform $$$t$$$ from $$$s$$$. We threshold absolute distances of $$$t$$$ at 5 mm to focus the network on pertinent image regions.
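The training target and a reduced form of the image synthesis can be sketched in a few lines of NumPy/SciPy. This is a minimal illustration under stated assumptions, not the released implementation: the function names, the intensity and smoothing ranges, and the toy label map are hypothetical; the 5 mm threshold is interpreted here as clipping the distances; and extracting the mask by thresholding $$$d$$$ at zero is an assumption that follows from the sign convention. The random deformation, cropping, downsampling, and bias field are left out for brevity.

```python
import numpy as np
from scipy import ndimage


def synthesize_image(labels, rng, max_sigma=1.0, noise_std=0.05):
    """Draw a gray-scale image from a label map (all ranges are illustrative)."""
    image = np.zeros(labels.shape, dtype=np.float32)
    for label in np.unique(labels):
        region = labels == label
        # Each label gets its own randomly parameterized Gaussian intensity model.
        mean, std = rng.uniform(0.0, 1.0), rng.uniform(0.0, 0.1)
        image[region] = rng.normal(mean, std, size=int(region.sum()))
    # Random smoothing followed by additive noise.
    image = ndimage.gaussian_filter(image, sigma=rng.uniform(0.0, max_sigma))
    image += rng.normal(0.0, noise_std, size=image.shape)
    return image


def signed_distance_target(brain_mask, voxel_size=1.0, clip_mm=5.0):
    """Signed distance to the brain boundary: positive inside, negative outside."""
    brain_mask = brain_mask.astype(bool)
    # Distance of each brain voxel to the nearest non-brain voxel, and vice versa.
    inside = ndimage.distance_transform_edt(brain_mask, sampling=voxel_size)
    outside = ndimage.distance_transform_edt(~brain_mask, sampling=voxel_size)
    # Assumption: "thresholding" absolute distances means clipping to +/- clip_mm.
    return np.clip(inside - outside, -clip_mm, clip_mm).astype(np.float32)


def mask_from_prediction(d):
    """Assumed test-time rule: voxels with positive distance belong to the brain."""
    return d > 0


def mse(d, t):
    """Mean squared error between predicted and target distance transforms."""
    return float(np.mean((d - t) ** 2))


# Toy example with a hypothetical three-label segmentation s.
rng = np.random.default_rng(0)
labels = np.zeros((24, 24, 24), dtype=np.int32)
labels[4:20, 4:20, 4:20] = 1  # hypothetical non-brain head tissue
labels[8:16, 8:16, 8:16] = 2  # hypothetical brain label
m = synthesize_image(labels, rng)        # network input
t = signed_distance_target(labels == 2)  # training target
```

In a real training loop, a U-Net would map `m` to a prediction `d`, and `mse(d, t)` would be the quantity minimized; here the two helper functions only make the sign convention and the clipping explicit.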

Experiment

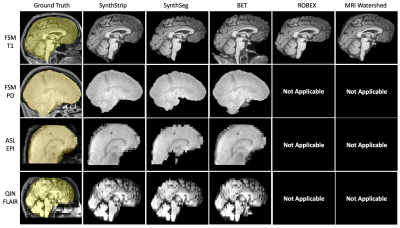

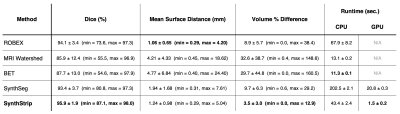

We analyze the performance of SynthStrip on diverse whole-head images, and compare its skull-stripping accuracy with popular tools: BET7, mri_watershed8, ROBEX9, and SynthSeg5. While BET is designed for T1w, T2w, and PD contrast, ROBEX and mri_watershed are specific to T1w images. SynthSeg is a similar learning-based model trained with synthesized images to predict a set of brain labels, which we merge, dilate, and erode to produce an all-encompassing mask of brain tissue.

Data: We obtain training brain segmentations for 40 Buckner401 and 40 HCP-A10,11 subjects. We conduct all experiments with held-out testsets including (1) 54 clinical T1w 2D-FLASH stacks (0.4 $$$\times$$$ 0.4 $$$\times$$$ 6 mm$$$^3$$$ voxels) and (2) 39 FLAIR 2D-FSE stacks (1 $$$\times$$$ 1 $$$\times$$$ 5 mm$$$^3$$$ voxels) from QIN GBM12, (3) 38 T1w and (4) 32 PD scans with isotropic 1-mm resolution, and (5) 42 2D-EPI stacks (3.4 $$$\times$$$ 3.4 $$$\times$$$ 5 mm$$$^3$$$ voxels) gathered from our in-house FSM and ASL datasets.

Processing: For training, we derive brain labels from T1w acquisitions using SAMSEG13 and non-brain labels by fitting a Gaussian mixture model with spatial regularization. We produce reference brain masks for each test image: for each subject's T1w scan, we compute the median across a set of masks generated by all baselines, transfer this result to non-T1w images using rigid registration, then visually inspect for accuracy and apply manual edits where necessary. We conform all images and label maps to 256 $$$\times$$$ 256 $$$\times$$$ 256 volumes with isotropic 1-mm resolution.
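For binary masks, the voxel-wise median across baselines amounts to a majority vote. The sketch below illustrates that step only; the function name is hypothetical, and the tie-breaking rule for an even number of masks (ties count as non-brain) is an assumption that the text does not specify. Registration and manual editing are not shown.

```python
import numpy as np


def median_mask(masks):
    """Voxel-wise median of binary masks, returned as a boolean array."""
    stack = np.stack([np.asarray(m, dtype=bool) for m in masks]).astype(np.float32)
    # With an even number of masks the median can equal 0.5; such ties
    # are treated as non-brain here (an assumption).
    return np.median(stack, axis=0) > 0.5


# Three toy "baseline" masks over three voxels.
example = median_mask([[1, 1, 0], [1, 0, 0], [1, 1, 1]])
```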

Metrics: We measure brain extraction accuracy by computing the Dice overlap, mean symmetric surface distance, and percent volume difference between the computed and reference brain masks.
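The three metrics can be written down compactly for binary masks. The following is a sketch under assumptions rather than the evaluation code used here: the function names are hypothetical, the volume difference is taken as an absolute difference relative to the reference volume, surface voxels are defined as mask voxels removed by a single binary erosion, and the symmetric surface distance pools the distances from both surfaces before averaging.

```python
import numpy as np
from scipy import ndimage


def dice(computed, reference):
    """Dice overlap 2|A n B| / (|A| + |B|) of two binary masks."""
    a, b = computed.astype(bool), reference.astype(bool)
    return 2.0 * np.logical_and(a, b).sum() / (a.sum() + b.sum())


def percent_volume_difference(computed, reference):
    """Absolute volume difference as a percentage of the reference volume."""
    vc = int(computed.astype(bool).sum())
    vr = int(reference.astype(bool).sum())
    return 100.0 * abs(vc - vr) / vr


def surface(mask):
    """Boundary voxels: those removed by one binary erosion."""
    mask = mask.astype(bool)
    return mask & ~ndimage.binary_erosion(mask)


def mean_symmetric_surface_distance(computed, reference, voxel_size=1.0):
    """Mean distance between the two mask surfaces, pooled over both directions."""
    sa, sb = surface(computed), surface(reference)
    # Distance of every voxel to the nearest surface voxel of the other mask.
    dist_to_b = ndimage.distance_transform_edt(~sb, sampling=voxel_size)
    dist_to_a = ndimage.distance_transform_edt(~sa, sampling=voxel_size)
    return (dist_to_b[sa].sum() + dist_to_a[sb].sum()) / (sa.sum() + sb.sum())


# Toy masks: a cube and the same cube shifted by one voxel.
a = np.zeros((10, 10, 10), dtype=bool)
a[2:8, 2:8, 2:8] = True
b = np.zeros((10, 10, 10), dtype=bool)
b[3:9, 2:8, 2:8] = True
```

Passing the voxel size through `sampling` keeps the surface distance in millimeters for anisotropic data; for the conformed 1-mm volumes the default of 1.0 suffices.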

Results: As shown in Figures 3 and 5, SynthStrip matches or outperforms the baselines for every evaluation metric and dataset, often by a substantial margin. The one exception is BET, which is marginally more accurate for EPI but performs poorly for the QIN and FSM data. SynthStrip also avoids severe skull-stripping failures; Figure 4 shows representative brain masks across testsets.

Conclusion

We present SynthStrip, a universal brain extraction utility that outperforms competing methods and generalizes across contrasts, anatomical variability, and acquisition protocols, powered by a generative strategy for synthesizing diverse training images. We plan to further increase the versatility of this method by evaluating and extending it to CT and more challenging MRI applications such as fetal neuroimaging, where the brain is surrounded by amniotic fluid and maternal tissues14. We release our tool as a part of the FreeSurfer software.

Acknowledgements

We thank Douglas N. Greve, David Salat, Lilla Zöllei, and the HCP-A Consortium for sharing data. This research is supported by NIH grants NICHD K99 HD101553, R56 AG064027, R01 AG064027, AG008122, AG016495, NIBIB P41 EB015896, R01 EB023281, EB006758, EB019956, R21 EB018907, NIDDK R21 DK10827701, NINDS R01 NS0525851, NS070963, NS105820, NS083534, and R21 NS072652, U01 NS086625, U24 NS10059103, SIG S10 RR023401, RR019307, RR023043, BICCN U01 MH117023 and Blueprint for Neuroscience Research U01 MH093765. B. Fischl has financial interests in a company called CorticoMetrics, which are reviewed and managed by Massachusetts General Hospital and Mass General Brigham.

References

1. Fischl B et al. Whole brain segmentation: automated labeling of neuroanatomical structures in the human brain. Neuron. 2002;33(3):341-55.

2. Jenkinson M et al. FSL. Neuroimage. 2012;62(2):782-90.

3. Hennig J et al. RARE imaging: a fast imaging method for clinical MR. Magn Reson Med. 1986;3(6):823-33.

4. Hoffmann M et al. SynthMorph: learning contrast-invariant registration without acquired images. IEEE Trans Med Imaging. 2021:DOI 10.1109/TMI.2021.3116879.

5. Billot B et al. A Learning Strategy for Contrast-agnostic MRI Segmentation. PMLR. 2020;121:75-93.

6. Ronneberger O et al. U-Net: Convolutional Networks for Biomedical Image Segmentation. CoRR. 2015;234-41.

7. Smith SM et al. Fast robust automated brain extraction. Hum Brain Mapp. 2002;17(3):143-55.

8. Segonne F et al. A hybrid approach to the skull stripping problem in MRI. Neuroimage. 2004;22(3):1060-75.

9. Iglesias JE et al. Robust brain extraction across datasets and comparison with publicly available methods. IEEE Trans Med Imaging. 2011;30(9):1617-34.

10. Harms MP et al. Extending the Human Connectome Project across ages: Imaging protocols for the Lifespan Development and Aging projects. NeuroImage. 2018;183:972-84.

11. Bookheimer SY et al. The Lifespan Human Connectome Project in Aging: An overview. NeuroImage. 2019;185:335-48.

12. Prah MA et al. Repeatability of standardized and normalized relative CBV in patients with newly diagnosed glioblastoma. Am J Neuroradiol. 2015;36(9):1654-61.

13. Puonti O et al. Fast and sequence-adaptive whole-brain segmentation using parametric Bayesian modeling. NeuroImage. 2016;143(1):235-49.

14. Hoffmann M et al. Rapid head‐pose detection for automated slice prescription of fetal‐brain MRI. Int J Imaging Syst Technol. 2021;31(3):1136-54.

Figures