2603

Deep-learning based brain tumor segmentation using quantitative MRI

1 Biomedical Engineering, Linköping University, Linköping, Sweden, 2 Center for Medical Image Science and Visualization (CMIV), Linköping University, Linköping, Sweden, 3 Department of Radiology in Linköping, Region Östergötland, Center for Diagnostics, Linköping, Sweden, 4 Department of Health, Medicine and Caring Sciences, Division of Diagnostics and Specialist Medicine, Linköping University, Linköping, Sweden, 5 Department of Computer and Information Science, Linköping University, Linköping, Sweden

Synopsis

Manual annotation of gliomas in magnetic resonance (MR) images is a laborious task, and it is impossible to identify active tumor regions that are not enhanced in the conventionally acquired MR modalities. Recently, quantitative MRI (qMRI) has been shown to capture tumor-like values beyond the visible tumor structure. To address the challenges of manual annotation, qMRI data were used to train a 2D U-Net deep-learning model for brain tumor segmentation. Results on the available data show that a 7% higher Dice score is obtained when the model is trained on qMRI post-contrast images than when it is trained on conventional MR images.

Introduction

Malignant gliomas are tumors of the central nervous system with high recurrence and mortality rates and poor prognosis [1]. Magnetic resonance (MR) images are indispensable for the diagnosis and treatment follow-up of gliomas, with T1-weighted pre- and post-gadolinium contrast (T1w and T1wGD), T2-weighted (T2w) and fluid-attenuated inversion recovery (FLAIR) images routinely acquired [2]. Radiologists use these conventional images to delineate the tumor structure in order to balance the extent of the treatment against its possible collateral effects. Manual annotation of the tumor structures is time-consuming and prone to intra-reader variability. Moreover, not all active tumor regions are enhanced in the routinely acquired MR images, which can lead to tumor regrowth if these regions are not considered during treatment.

Deep learning methods for glioma segmentation have been widely investigated, with the state-of-the-art model showing a mean Dice score of 0.85 when using conventional MR images [3]. Several approaches have been studied to improve segmentation performance, including designing more sophisticated models [4,5] and providing the model with additional context of the brain anatomy [6]. Another approach is to train on a different set of MR images than the ones conventionally acquired. In this study, quantitative MR images (qMRI) are used, since it has been shown that tumor-like quantitative values (T1 and T2 relaxation times, proton density) extend beyond the tumor structure visible in conventional images [7]. These findings suggest that qMRI could contain information on the non-enhancing active tumor part that is invisible in the conventional images, and thus help improve tumor segmentation.

The aim of this study is to investigate whether training a standard deep learning model (U-Net) for tumor segmentation on qMRI data improves segmentation performance compared to training on conventional MRI modalities.

Method

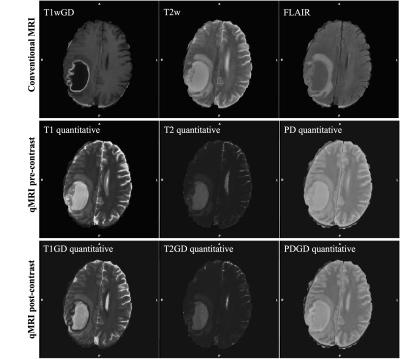

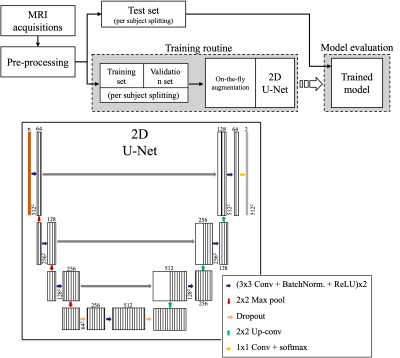

Twenty-one subjects diagnosed with high-grade gliomas were included in this study. Volumetric T1w, T1wGD, T2w and FLAIR images, together with qMRI data pre- and post-contrast injection, were collected using a 3-Tesla MR scanner. For a full description of the acquisition sequences see [7]. A comparison between the available MR modalities can be seen in Figure 1. For all the subjects, the tumor structure (necrotic region ⋃ enhancing region) was annotated by an expert neuroradiologist. Each subject was pre-processed through skull stripping and registration of all image modalities to the T1wGD image. Out of the 21 subjects, three were randomly selected for testing (72 2D slices), two for validation (48 2D slices) and the remaining 16 were used for training (408 2D slices).

The Lookahead optimizer [8], with Adam [9] as the inner optimizer, was used to train a standard 2D U-Net model [10] to minimise the weighted Dice loss between annotation and model prediction. The learning rate was kept constant throughout the training, which ran for 1000 epochs. The batch size was set to 4. On-the-fly data augmentation was performed by means of random flipping, rotation, shifting and scaling. The model architecture and overall training pipeline can be seen in Figure 2.
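As an illustration of the training objective, the following is a minimal NumPy sketch of a class-weighted soft Dice loss. The exact formulation and the class weights used in this study are not given here, so the function name `weighted_dice_loss`, the `class_weights` argument, the smoothing term `eps` and the channel-last layout are all assumptions made for the example.

```python
import numpy as np


def weighted_dice_loss(y_true, y_pred, class_weights, eps=1e-6):
    """Class-weighted soft Dice loss (illustrative, not the study's exact loss).

    y_true: one-hot annotation, shape (batch, height, width, classes).
    y_pred: predicted class probabilities, same shape as y_true.
    class_weights: one non-negative weight per class (assumed values).
    """
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    weights = np.asarray(class_weights, dtype=np.float64)

    # Sum over batch and spatial axes, keeping one value per class.
    axes = (0, 1, 2)
    intersection = np.sum(y_true * y_pred, axis=axes)
    denominator = np.sum(y_true, axis=axes) + np.sum(y_pred, axis=axes)

    # Soft Dice per class; eps avoids division by zero for absent classes.
    dice_per_class = (2.0 * intersection + eps) / (denominator + eps)

    # Weighted average of the per-class Dice, turned into a loss.
    return 1.0 - np.sum(weights * dice_per_class) / np.sum(weights)


if __name__ == "__main__":
    # Toy 2x2 slice with background (0) and tumor (1) labels.
    labels = np.array([[[0, 1], [1, 1]]])
    one_hot = np.eye(2)[labels]
    print(weighted_dice_loss(one_hot, one_hot, class_weights=[1.0, 3.0]))
```

With this formulation a perfect prediction gives a loss close to 0 and a prediction with no overlap gives a loss close to 1; increasing the weight of the tumor class makes errors on that class count more.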

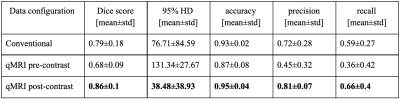

In total, three models were trained, each using a different input data configuration: (1) conventional MR image modalities (T1w, T1wGD, T2w and FLAIR), (2) qMRI pre-contrast (T1, T2 and proton density) and (3) qMRI post-contrast (T1GD, T2GD and proton densityGD). Model performance was evaluated in terms of Dice score, 95% Hausdorff distance (HD), accuracy, precision and recall. Given the limited number of testing subjects, the performance of the different models was not compared using statistical tests.
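The evaluation metrics listed above can be sketched for a single 2D binary mask as follows. This is an illustrative NumPy implementation, not the evaluation code used in the study: the function names are hypothetical, distances are in pixels rather than millimetres, and the 95% Hausdorff distance follows one common convention (the larger of the two directed 95th-percentile surface distances).

```python
import numpy as np


def overlap_metrics(pred, truth):
    """Dice, precision, recall and accuracy for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    tn = int(np.sum(~pred & ~truth))

    def ratio(num, den):
        # Undefined ratios (for example Dice on two empty masks) give NaN.
        return num / den if den > 0 else float("nan")

    return {
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "accuracy": ratio(tp + tn, pred.size),
    }


def surface_points(mask):
    """Coordinates of foreground pixels with a background 4-neighbour."""
    mask = np.asarray(mask, dtype=bool)
    padded = np.pad(mask, 1, constant_values=False)
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1]
                & padded[1:-1, :-2] & padded[1:-1, 2:])
    return np.argwhere(mask & ~interior)


def hd95(pred, truth):
    """95% Hausdorff distance in pixels (NaN if either mask is empty)."""
    p, t = surface_points(pred), surface_points(truth)
    if len(p) == 0 or len(t) == 0:
        return float("nan")
    # Brute-force pairwise distances; fine for small 2D slices.
    dist = np.linalg.norm(p[:, None, :] - t[None, :, :], axis=-1)
    pred_to_truth = dist.min(axis=1)
    truth_to_pred = dist.min(axis=0)
    return float(max(np.percentile(pred_to_truth, 95),
                     np.percentile(truth_to_pred, 95)))


if __name__ == "__main__":
    truth = np.zeros((4, 4), dtype=bool)
    truth[0:2, 0:2] = True
    pred = np.zeros((4, 4), dtype=bool)
    pred[0:2, 0:3] = True
    print(overlap_metrics(pred, truth), hd95(pred, truth))
```

Because accuracy counts the many correctly classified background pixels, it tends to be high even for poor segmentations, which is one reason Dice, precision and recall are reported alongside it.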

Results

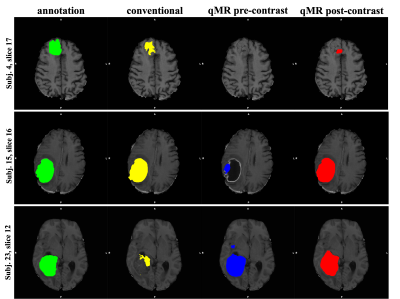

A summary of the models’ performance on the test dataset can be found in Figure 3. The model trained on qMRI post-contrast achieved the highest performance, with a mean Dice score of 0.86. An example of the tumor segmentation for one slice from each of the three test subjects is presented in Figure 4.

Considering only the slices without tumor (61.1% of all test slices), the false positive rate of the model trained on the conventional images is higher than that of the model trained on the qMRI post-contrast data. This indicates that the model trained on qMRI post-contrast was better at avoiding segmenting tumor in slices without annotated tumor. On the other hand, in the test slices containing tumor (38.9% of all test slices), the number of false positives is almost double for the model using qMRI post-contrast data compared to the model using conventional data.
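The slice-level breakdown described above can be sketched as follows: false-positive pixels are counted separately for slices with and without annotated tumor. This is a hypothetical helper, not the analysis code of the study, and it assumes the predictions and annotations are stacked as binary arrays of shape (slices, height, width). For reference, 61.1% of the 72 test slices corresponds to 44 slices without annotated tumor and 38.9% to 28 slices with tumor.

```python
import numpy as np


def false_positives_by_slice_type(pred, truth):
    """Count false-positive pixels in tumor and tumor-free slices.

    pred, truth: binary arrays of shape (slices, height, width).
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)

    # False positives: predicted tumor where nothing is annotated.
    fp_per_slice = np.sum(pred & ~truth, axis=(1, 2))
    # A slice counts as a tumor slice if any pixel is annotated.
    has_tumor = truth.any(axis=(1, 2))

    return {
        "fp_tumor_slices": int(fp_per_slice[has_tumor].sum()),
        "fp_empty_slices": int(fp_per_slice[~has_tumor].sum()),
        "fraction_empty_slices": float(np.mean(~has_tumor)),
    }


if __name__ == "__main__":
    truth = np.zeros((3, 4, 4), dtype=bool)
    pred = np.zeros((3, 4, 4), dtype=bool)
    truth[0, 0, 0] = True      # slice 0 contains annotated tumor
    pred[0, 0, 0:2] = True     # one true positive, one false positive
    pred[1, 2, 0:2] = True     # two false positives in an empty slice
    print(false_positives_by_slice_type(pred, truth))
```

Running the same function on the outputs of two models gives directly comparable false-positive counts for each slice type.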

Discussion

Automatic segmentation of glioma in MR images has the potential to improve patient treatment planning. This study shows that a deep learning model for brain tumor segmentation trained on qMRI post-contrast images achieves higher performance than one trained on conventional MR modalities. Interestingly, the model trained on the qMRI post-contrast data over-segments the tumor structure in the slices where there is annotated tumor, but not in the ones where tumor is absent. In the context of the previous studies [7], this finding suggests that the model might use the quantitative information to expand the tumor segmentation beyond the annotation to include the non-enhancing tumor regions. However, given the limited data and the fact that the annotations are based on the conventional images, further studies are needed to investigate this hypothesis and to understand whether the model over-segmentation is due to the information provided by qMRI.

Acknowledgements

References

1. Davis, M. E. Glioblastoma: Overview of Disease and Treatment. Clin. J. Oncol. Nurs. 20, 2–8 (2016).

2. Juratli, T. A. et al. Radiographic assessment of contrast enhancement and T2/FLAIR mismatch sign in lower grade gliomas: correlation with molecular groups. J. Neurooncol. 141, 327–335 (2019).

3. Isensee, F., Jaeger, P. F., Kohl, S. A. A., Petersen, J. & Maier-Hein, K. H. nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 18, 203–211 (2021).

4. Zhou, Z., Siddiquee, M. M. R., Tajbakhsh, N. & Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. arXiv:1807.10165 [cs, eess, stat] (2018).

5. Noori, M., Bahri, A. & Mohammadi, K. Attention-Guided Version of 2D UNet for Automatic Brain Tumor Segmentation. 2019 9th Int. Conf. Comput. Knowl. Eng. ICCKE 269–275 (2019) doi:10.1109/ICCKE48569.2019.8964956.

6. Tampu, I. E., Haj-Hosseini, N. & Eklund, A. Does Anatomical Contextual Information Improve 3D U-Net-Based Brain Tumor Segmentation? Diagn. Basel Switz. 11, 1159 (2021).

7. Blystad, I. et al. Quantitative MRI for analysis of peritumoral edema in malignant gliomas. PLOS ONE 12, e0177135 (2017).

8. Zhang, M. R., Lucas, J., Hinton, G. & Ba, J. Lookahead Optimizer: k steps forward, 1 step back. arXiv:1907.08610 [cs, stat] (2019).

9. Kingma, D. P. & Ba, J. Adam: A Method for Stochastic Optimization. arXiv:1412.6980 [cs] (2017).

10. Ronneberger, O., Fischer, P. & Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv:1505.04597 [cs] (2015).

Figures