2521

Predicting PDFF and R2* from Magnitude-Only Two-Point Dixon MRI Using Generative Adversarial Networks

1Research Centre for Optimal Health, University of Westminster, London, United Kingdom, 2Calico Life Sciences LLC, South San Francisco, CA, United States

Synopsis

We implement generative adversarial network (GAN) models to predict quantitative parameters that are normally estimated from a complex-valued multiecho MRI sequence, using only data from the magnitude-only two-point Dixon acquisition in the UK Biobank abdominal protocol. The training data consist of the in- and opposed-phase channels from the Dixon sequence as inputs, and the proton density fat fraction (PDFF) and R2* parameter maps estimated from the IDEAL acquisition as outputs. We compare conditional and cycle GANs: the conditional GAN models outperformed the cycleGAN models in SSIM, PSNR and MSE. PDFF predictions were better than R2* predictions for all models.

Introduction

The UK Biobank (UKBB) abdominal MRI protocol consists of several sequences [1], including a single-slice IDEAL acquisition [2], which provides measurements of proton density fat fraction (PDFF) and transverse relaxation rate (R2*) in the liver [3], and a 3D two-point Dixon sequence covering the region from the neck to the knees. However, the two-point Dixon sequence is limited in its ability to quantify PDFF [4].

Within the field of deep learning, generative adversarial networks (GANs) have sparked substantial interest in medical image analysis, particularly for image synthesis used in data augmentation [5]. GANs support paired training data via the conditional GAN (cGAN) [6], as well as unpaired data via the cycleGAN [7]. Along with data synthesis, cross-modality style transfer (MRI to CT) is also of interest, since CT is quantitative via Hounsfield units (HU) but comes with exposure to ionizing radiation. Previous studies [8,9] show that deep-learning-based methods accurately convert MRI data into CT images with sensible HUs.
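To illustrate one reason why magnitude-only two-point Dixon data cannot fully quantify PDFF, the sketch below uses a deliberately simplified signal model: in-phase = water + fat and opposed-phase = |water - fat|, ignoring T2* decay, T1 bias, noise and the multi-peak fat spectrum. The function names are hypothetical and the model is an assumption made for illustration, not the processing used in this study. Under this model the naive estimate returns the fraction of the minority species, so a true fat fraction of 70% is indistinguishable from 30%.

```python
def simulate_magnitudes(water, fat):
    """Idealised magnitude signals (assumption: no T2* decay, no noise)."""
    in_phase = water + fat
    opposed_phase = abs(water - fat)  # magnitude data discards the sign
    return in_phase, opposed_phase


def two_point_dixon_ff(in_phase, opposed_phase):
    """Naive fat fraction from magnitude in-/opposed-phase signals."""
    if in_phase <= 0:
        raise ValueError("in-phase signal must be positive")
    return (in_phase - opposed_phase) / (2.0 * in_phase)


# True fat fractions above 0.5 alias to (1 - true fraction).
for true_ff in (0.1, 0.3, 0.7, 0.9):
    ip, op = simulate_magnitudes(1.0 - true_ff, true_ff)
    print(f"true FF = {true_ff:.1f}, estimated FF = {two_point_dixon_ff(ip, op):.3f}")
```

The multiecho complex-valued IDEAL acquisition avoids this ambiguity and additionally provides R2*, which is why its parameter maps serve as the training target here.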

Here we present a proof-of-concept study where we have trained two implementations of GANs (conditional and cycle) to predict PDFF and R2* parameter maps from the two-point Dixon data in the UKBB.

Methods

The Dixon sequence involved six overlapping 3D volumes that were acquired using a common set of parameters: TR = 6.67 ms, TE = 2.39/4.77 ms, FA = 10° and bandwidth = 440 Hz. Only the magnitude data were available for the Dixon sequences. The single-slice IDEAL sequence used the following parameters: TR = 14 ms, TE = 1.2/3.2/5.2/7.2/9.2/11.2 ms, FA = 5° and bandwidth = 1565 Hz. Both the magnitude and phase data were available for the IDEAL sequence.

We selected 4,840 UK Biobank participants with both single-slice IDEAL and 3D Dixon acquisitions available for this study. Stratified sampling was performed to obtain a balanced number of men and women with a representative range of BMI and age [10]. These data were split into training and testing sets (80-20%). The training set was further split into training and validation sets (90-10%) for model development.
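The nested split described above can be sketched as follows. This is a minimal illustration only: the function name, the random seed, the use of a plain random shuffle and the rounding rule are all assumptions, since the exact procedure and resulting counts are not reported here.

```python
import random


def split_participants(ids, test_frac=0.20, val_frac=0.10, seed=0):
    """Shuffle participant IDs, hold out a test set, then a validation set.

    Assumption: a simple random shuffle; the study's actual split
    procedure is not specified.
    """
    ids = list(ids)
    random.Random(seed).shuffle(ids)
    n_test = round(len(ids) * test_frac)
    test, development = ids[:n_test], ids[n_test:]
    # The validation fraction applies to the remaining 80%, not the full cohort.
    n_val = round(len(development) * val_frac)
    val, train = development[:n_val], development[n_val:]
    return train, val, test


train, val, test = split_participants(range(4840))
print(len(train), len(val), len(test))
```

With 4,840 participants and this rounding rule, the sketch yields 3,485 training, 387 validation and 968 testing participants; these counts follow from the stated percentages and are not taken from the study.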

We implemented versions of the cGAN [6] and cycleGAN [7] models such that the generator produced two-channel output from two-channel input. We selected the cGAN for its ability to learn a mapping between two different domains using paired images, and the unpaired approach of the cycleGAN to potentially overcome minor misalignments related to differences in breath-hold between the Dixon and IDEAL data that may affect model training.
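For reference, the objectives of the two model families can be written as below, following the original papers [6,7]. Here $x$ denotes the two-channel Dixon input (in- and opposed-phase) and $y$ the two-channel target (PDFF and R2*); $G$ maps $x$ to $y$, $F$ maps $y$ back to $x$, and $D$, $D_X$, $D_Y$ are discriminators. The noise input of the original cGAN formulation is omitted for brevity. The weights $\lambda = 100$ (cGAN) and $\lambda = 10$ (cycleGAN) are the values used in those papers; that the same values apply to the models in this study is an assumption.

$$
\mathcal{L}_{\mathrm{cGAN}}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x}\left[\log\left(1 - D(x, G(x))\right)\right]
$$

$$
G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{\mathrm{cGAN}}(G, D) + \lambda\, \mathbb{E}_{x,y}\left[\lVert y - G(x) \rVert_{1}\right]
$$

$$
\mathcal{L}_{\mathrm{cyc}}(G, F) = \mathbb{E}_{x}\left[\lVert F(G(x)) - x \rVert_{1}\right] + \mathbb{E}_{y}\left[\lVert G(F(y)) - y \rVert_{1}\right]
$$

$$
\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{\mathrm{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\mathrm{GAN}}(F, D_X, Y, X) + \lambda\, \mathcal{L}_{\mathrm{cyc}}(G, F)
$$

The cGAN objective compares $G(x)$ directly with its paired target $y$, so it relies on voxel-wise correspondence between the Dixon and IDEAL slices. The cycleGAN objective contains no such paired term, which is why it may tolerate small misalignments between the two acquisitions.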

We also implemented two variations for each model by adjusting the output activation functions. One variation had a single activation function (ReLU) for both channels in the final layer, denoted as single-activation (SA). In the other variation, we separated the final layer into two individual single-channel layers with their own unique activation function (sigmoid for PDFF and ReLU for R2*), denoted as dual-activation (DA). This was motivated by the fact that PDFF spans 0-100%, whereas R2* is strictly positive, the exact value depending on tissue type, field strength of the magnet and other parameters.
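The difference between the two output variations can be sketched for a single voxel as follows. This is an illustration under assumptions: the function names are hypothetical, the inputs stand for the pre-activation values of the final layer, and PDFF is assumed to be scaled to a fraction in [0, 1] so that it matches the range of the sigmoid.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function; output lies in [0, 1]."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relu(x):
    """Rectified linear unit; output is non-negative and unbounded above."""
    return max(0.0, x)


def single_activation(pdff_pre, r2star_pre):
    """SA variant: ReLU on both output channels."""
    return relu(pdff_pre), relu(r2star_pre)


def dual_activation(pdff_pre, r2star_pre):
    """DA variant: sigmoid for PDFF (bounded), ReLU for R2* (unbounded)."""
    return sigmoid(pdff_pre), relu(r2star_pre)


print(single_activation(5.0, -3.0))  # PDFF channel can exceed 1.0
print(dual_activation(5.0, -3.0))    # PDFF channel stays within [0, 1]
```

In the SA variant nothing prevents the PDFF channel from exceeding 100%, whereas the DA variant bounds it by construction. Note that ReLU yields non-negative rather than strictly positive values.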

We trained four models: SA-cGAN, DA-cGAN, SA-cycleGAN and DA-cycleGAN. The discriminator used for each GAN followed the PatchGAN implementation, as in the original references, except that the stride in the third convolution layer was changed from (2,2) to (1,1), resulting in a patch size of 32×32. We followed the default configurations for both the cGAN and cycleGAN models with respect to the weights for each loss function as well as the learning rates. We used batch size 1 for all models and pool size 128 for the cycleGAN. Models were trained using the Adam optimiser for 40 epochs, by which time the training loss and validation metrics had converged.
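The pool mentioned above refers to the buffer of previously generated images that the cycleGAN uses when updating its discriminators [7]. A minimal sketch of such a buffer is given below; the class and method names are hypothetical, and the 50% replacement rule follows the commonly used reference behaviour, which is assumed rather than confirmed for this study.

```python
import random


class ImagePool:
    """History buffer of generated images for discriminator updates."""

    def __init__(self, pool_size=128, seed=None):
        self.pool_size = pool_size
        self.images = []
        self.rng = random.Random(seed)

    def query(self, image):
        """Return the image to show the discriminator for this step."""
        if self.pool_size == 0:
            return image  # buffer disabled: always use the newest image
        if len(self.images) < self.pool_size:
            self.images.append(image)  # still filling the buffer
            return image
        if self.rng.random() < 0.5:
            # Swap: return a stored image and keep the new one in its place.
            idx = self.rng.randrange(self.pool_size)
            old = self.images[idx]
            self.images[idx] = image
            return old
        return image  # otherwise use the newest image and leave the buffer as is


pool = ImagePool(pool_size=128, seed=0)
shown = [pool.query(f"generated_{i}") for i in range(300)]
print(len(pool.images))
```

Showing the discriminator a mixture of current and older generator outputs is intended to reduce oscillation during training. A larger pool retains outputs from further back in training.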

Results

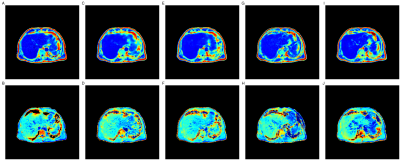

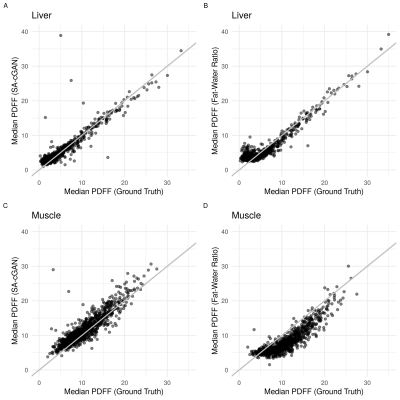

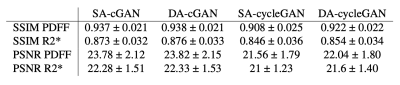

Figure 1 compares the predicted parameter maps from all four models with the ground truth estimates for a single participant in the UKBB. All models produced parameter maps that qualitatively matched the ground truth, highlighting the ability of GANs to map signal intensities from magnitude-only two-point Dixon data to quantitative parameters. This was confirmed using quantitative image-quality metrics (Table 1). The SA- and DA-cGANs each outperformed their equivalent cycleGAN model.

Separating the final layer into two, with dedicated activation functions tailored to the dynamic range of the data, did not improve performance: the SSIM and PSNR values in Table 1 are essentially the same for the SA and DA models. Metrics for the R2* values were inferior to those for PDFF. This probably reflects subject selection, which was designed to cover a broad BMI range; BMI correlates significantly with PDFF but not with R2*.
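Two of the image-quality metrics used above, MSE and PSNR, can be sketched in a few lines. The function names and the flattened-list representation are assumptions made for illustration, and `data_range` (the maximum possible value of the parameter map) must be supplied by the user; the value used for Table 1 is not stated here. SSIM is omitted because it requires local windowed statistics; an existing implementation such as `skimage.metrics.structural_similarity` is one option.

```python
import math


def mse(pred, truth):
    """Mean squared error between two equally sized, flattened images."""
    if len(pred) != len(truth) or len(pred) == 0:
        raise ValueError("images must be non-empty and the same size")
    return sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(pred)


def psnr(pred, truth, data_range):
    """Peak signal-to-noise ratio in dB for a given dynamic range."""
    err = mse(pred, truth)
    if err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(data_range ** 2 / err)


# Toy PDFF maps expressed as fractions, so data_range = 1.0 (assumption).
truth = [0.05, 0.10, 0.30, 0.02]
pred = [0.06, 0.09, 0.28, 0.02]
print(mse(pred, truth), psnr(pred, truth, data_range=1.0))
```

Because PSNR depends on `data_range`, PSNR values for PDFF and R2* are only comparable if each is computed with a range appropriate to that parameter.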

Discussion and Conclusion

Here we have shown that GANs can be used to generate accurate quantitative measurements of PDFF and R2* using magnitude-only two-point Dixon MRI. The next step is to predict PDFF and R2* values from the Dixon acquisition that are outside the anatomical coverage of the original IDEAL acquisition. We expect the performance of these predictions to be similar to that reported here, although it may deteriorate as the anatomy in the axial slice diverges from the training data.

Acknowledgements

We thank Dr Johannes Riegler and Dr Nick van Bruggen for helpful comments and discussion. This research has been conducted using the UK Biobank Resource under Application Number 44584 and was funded by Calico Life Sciences LLC.

References

Littlejohns TJ, Holliday J, Gibson LM, et al. The UK Biobank imaging enhancement of 100,000 participants: rationale, data collection, management and future directions. Nat Commun 2020; 11(1): 1-12.

Reeder SB, Pineda AR, Wen Z, et al. Iterative decomposition of water and fat with echo asymmetry and least-squares estimation (IDEAL): Application with fast spin-echo imaging. Magn Reson Med 2005; 54(3): 636-644.

Bydder M, Ghodrati V, Gao Y, et al. Constraints in estimating the proton density fat fraction. Magn Reson Imaging 2020; 66: 1-8.

Liu C-Y, McKenzie CA, Yu H, et al. Fat quantification with IDEAL gradient echo imaging: correction of bias from T1 and noise. Magn Reson Med 2007; 58(2): 354-364.

Yi X, Walia E, Babyn P. Generative adversarial network in medical imaging: A review. Medical Image Analysis 2019; 58: 101552.

Isola P, Zhu J-Y, Zhou T, Efros AA. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017. pp 1125-1134.

Zhu J-Y, Park T, Isola P, Efros AA. Unpaired image-to-image translation using cycle-consistent adversarial networks. In IEEE International Conference on Computer Vision (ICCV) 2017.

Lei Y, Harms J, Wang T, et al. MRI-only based synthetic CT generation using dense cycle consistent generative adversarial networks. Med Phys 2019; 46(8): 3565-3581.

Emami H, Dong M, Glide-Hurst CK. Attention-guided generative adversarial network to address atypical anatomy in synthetic CT generation. In IEEE 21st Int Conf Inf Reuse Integr Data Sci 2020. pp 188-193.

Figures