2231

Deep Learning-Based Automatic Lung Segmentation for MR Images at 0.55T

1Cardiovascular Branch, National Heart, Lung, and Blood Institute, National Institutes of Health, Bethesda, MD, United States, 2Electrical Engineering and Computer Science, Massachusetts Institute of Technology, Cambridge, MA, United States

Synopsis

High-performance 0.55T systems are well suited for structural lung imaging due to reduced susceptibility and prolonged T2*. Lung segmentation is required to derive metrics of pulmonary function from lung MRI. Convolutional neural networks are effective for lung segmentation at higher field strengths, but they do not generalize to images acquired at 0.55T. This study develops a neural network for automated lung segmentation of T1 and proton density weighted ultrashort-TE MRI at 0.55T. Training data were generated from active contour segmentations with manual corrections. The proposed network was fast (1.07 s per 3D image) and agreed closely with the existing semi-automated segmentation (Dice coefficient 0.93).

Introduction

High-performance 0.55T MRI systems are well suited for structural lung imaging by virtue of their improved field homogeneity and reduced susceptibility gradients (1). Lung segmentation is an important image processing step, for example to derive metrics of pulmonary function such as ventilation, perfusion, and congestion from lung MR images. Previous studies at 0.55T have used active contour algorithms with added manual corrections for lung segmentation, but active contours can be inconsistent, and manual corrections are time-consuming and observer-dependent (2,3). A possible alternative is a convolutional neural network with a U-Net architecture, which has proven effective for biomedical image segmentation and has been used successfully for lung segmentation at higher field strengths such as 1.5T and 3T (4,5). There is currently no network trained specifically for 0.55T images. This study aimed to develop a robust automated lung segmentation model for 0.55T images using deep learning.

Methods

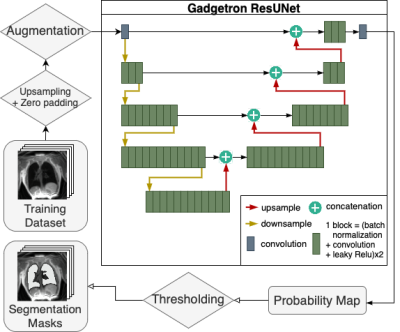

In this IRB-approved study, we retrospectively included lung images from 114 human subjects and 23 swine, acquired with various T1 and proton density weighted stack-of-spirals ultrashort echo time sequences using a high-performance 0.55T MRI system (prototype MAGNETOM Aera, Siemens) (1). Lung segmentation was performed with an active contour algorithm followed by manual corrections (2). A total of 467 3D images (~16,000 2D image slices) with varying spatial resolution from healthy volunteers (n=40), lymphangioleiomyomatosis patients (n=74), and swine (n=23) were used as both coronal and sagittal slices. The dataset was randomly divided into training and validation sets: 80% of the image-mask pairs were used for training and the remaining 20% for validation. In addition, a separate test set of 761 3D images (~43,500 2D slices) from 31 healthy volunteers and 9 swine was prepared to evaluate the segmentation model.

For model training, images and masks were upsampled to achieve a consistent pixel resolution between 1 mm² and 1.5 mm² for all images. Images were augmented by adding random permutations, blurring, scaling, rotation, and noise to improve generalization. The U-Net model was developed in PyTorch based on the Gadgetron AI for Cardiac MR Imaging repository (Figure 1) (6). The network was trained with 2D images as input. During training, the performance of the network was evaluated through the validation loss, calculated with a Soft-Jaccard loss function. A hyperparameter sweep was performed to determine the batch size (32 or 64), the number of upsampling and downsampling layers (2, 3, or 4), and the number of block computations for each layer (2, 3, 4, 6, 8, or 12) that resulted in the lowest validation loss. One block computation consists of two cycles of batch normalization, convolution, and leaky ReLU activation.
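The Soft-Jaccard loss named above can be illustrated with a minimal sketch. It uses one common formulation (one minus the soft intersection over union of predicted probabilities and the reference mask) and is written in NumPy for brevity rather than PyTorch. The function name `soft_jaccard_loss` and the smoothing constant `eps` are assumptions made for illustration and are not taken from the study's code.

```python
import numpy as np

def soft_jaccard_loss(probs, target, eps=1e-7):
    """Soft-Jaccard loss for a single 2D slice (one common formulation).

    probs  : predicted lung probabilities in [0, 1]
    target : reference binary mask (0 = background, 1 = lung)
    eps    : hypothetical smoothing constant; avoids 0/0 on empty slices
    """
    probs = np.asarray(probs, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    intersection = np.sum(probs * target)
    union = np.sum(probs) + np.sum(target) - intersection
    return 1.0 - (intersection + eps) / (union + eps)

# Toy 4x4 slice with a 2x2 lung region.
target = np.zeros((4, 4))
target[1:3, 1:3] = 1.0

# Prediction that covers only half of the lung region.
half = np.zeros((4, 4))
half[1:3, 1:2] = 1.0

print(soft_jaccard_loss(target, target))  # perfect overlap, loss near 0
print(soft_jaccard_loss(half, target))    # partial overlap, loss near 0.5
```

With this formulation, a slice with no lung tissue in either the prediction or the reference gives a loss of zero, so empty slices are not penalized when they are predicted correctly.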

Results

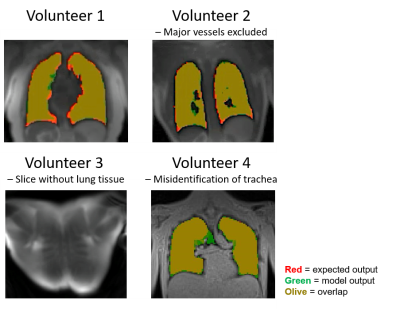

The final segmentation network had 3 downsampling layers, 1 branch layer, and 4 upsampling layers, and was trained for 50 epochs with a batch size of 64. The network had a mean validation loss of 0.096 and a mean Dice coefficient of 0.93 ± 0.17 in the validation set, indicating good agreement with the semi-automated method with manual corrections. When evaluated on the separate test dataset of 761 3D images, the final segmentation network had a Dice coefficient of 0.93 ± 0.19. The difference in volume between the semi-automated segmentations and the final network segmentations was -0.11 ± 0.14 L. As shown in Figure 2, the U-Net created accurate masks, including the exclusion of major blood vessels, and successfully detected image slices where no lung tissue was present. The average inference time to segment a 3D lung image with the trained U-Net was 1.07 ± 0.1 s on a GPU. In comparison, active contour segmentations without manual corrections had a Dice coefficient of 0.68 ± 0.32 and took on average 82 seconds per 3D image, with approximately 10 minutes of additional processing time needed for manual corrections. The final segmentation network was therefore both more accurate than uncorrected active contours and substantially faster than the semi-automated workflow. The U-Net misidentified part of the lower trachea as lung tissue in some images, specifically in low-contrast images with 3.5 mm isotropic spatial resolution.

Discussion
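The two agreement measures reported in the Results, the Dice coefficient and the lung volume difference, can be sketched as follows. This is an illustrative NumPy version, not the study's evaluation code. The function names, the convention of returning 1.0 when both masks are empty, and the sign convention (network volume minus reference volume) are assumptions.

```python
import numpy as np

def dice_coefficient(pred, ref):
    """Dice overlap between two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    denom = pred.sum() + ref.sum()
    if denom == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return 2.0 * np.logical_and(pred, ref).sum() / denom

def volume_difference_litres(pred, ref, voxel_volume_mm3):
    """Segmented volume of pred minus ref, in litres (1 L = 1e6 mm^3)."""
    n_pred = np.count_nonzero(pred)
    n_ref = np.count_nonzero(ref)
    return (n_pred - n_ref) * voxel_volume_mm3 / 1e6

# Toy 3D example: the reference mask is a 6x6x6 cube and the
# prediction misses one 6x6 layer of it.
ref = np.zeros((10, 10, 10), dtype=bool)
ref[2:8, 2:8, 2:8] = True
pred = np.zeros_like(ref)
pred[2:8, 2:8, 2:7] = True

voxel_volume = 3.5 ** 3  # mm^3, for 3.5 mm isotropic voxels
print(dice_coefficient(pred, ref))
print(volume_difference_litres(pred, ref, voxel_volume))
```

A negative volume difference under this sign convention means the network mask is smaller than the reference mask.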

The proposed neural network successfully performed lung segmentation in MR images acquired at 0.55T. Additional training with more low-contrast images with isotropic spatial resolution in the training set is required, as the U-Net tends to misidentify the trachea as lung tissue in these images. Compared with other neural networks developed for images acquired at higher field strengths, the U-Net has a similar average Dice coefficient but a larger variation in scores, which could potentially be reduced by including more training data (4,5).

Conclusions

A deep learning U-Net was developed for fast and accurate automatic lung segmentation of MR images acquired at 0.55T. Evaluation on the test dataset showed that the final segmentation model has comparable performance to the semi-automated method while being significantly faster.

Acknowledgements

We would like to acknowledge the assistance of Siemens Healthcare in the modification of the MRI system for operation at 0.55T, and in the stack-of-spirals UTE sequence, under an existing cooperative research agreement (CRADA) between NHLBI and Siemens Healthcare.

This study was supported by the Intramural Research Program, National Heart Lung and Blood Institute, National Institutes of Health, USA (Z01-HL006257 and ZIA-HL-006259-01).

References

1. Campbell-Washburn AE., Ramasawmy R., Restivo MC., et al. Opportunities in Interventional and Diagnostic Imaging by Using High-Performance Low-Field-Strength MRI. Radiology 2019;293(2):384–93. Doi: 10.1148/radiol.2019190452.

2. Bhattacharya I., Ramasawmy R., Javed A., et al. Oxygen‐enhanced functional lung imaging using a contemporary 0.55 T MRI system. NMR Biomed 2021;34(8). Doi: 10.1002/nbm.4562.

3. Bhattacharya I., Ramasawmy R., Javed A., et al. Assessment of Lung Structure and Regional Function Using 0.55 T MRI in Patients With Lymphangioleiomyomatosis. Invest Radiol 2021;Publish Ah. Doi: 10.1097/RLI.0000000000000832.

4. Tustison NJ., Avants BB., Lin Z., et al. Convolutional Neural Networks with Template-Based Data Augmentation for Functional Lung Image Quantification. Acad Radiol 2019;26(3):412–23. Doi: 10.1016/j.acra.2018.08.003.

5. Willers C., Bauman G., Andermatt S., et al. The impact of segmentation on whole‐lung functional MRI quantification: Repeatability and reproducibility from multiple human observers and an artificial neural network. Magn Reson Med 2021;85(2):1079–92. Doi: 10.1002/mrm.28476.

6. Xue H., Tseng E., Knott KD., et al. Automated detection of left ventricle in arterial input function images for inline perfusion mapping using deep learning: A study of 15,000 patients. Magn Reson Med 2020;84(5):2788–800. Doi: 10.1002/mrm.28291.

Figures