2227

Immersive and Interactive On-the-fly MRI Control and Visualization with a Holographic Augmented Reality Interface: A Trans-Atlantic Test

Jose Velazco-Garcia1, Nikolaos Tsekos1, Andrew Webb2, and Kirsten Koolstra2

1Medical Robotics and Imaging Lab, University of Houston, Houston, TX, United States, 2Leiden University Medical Center, Leiden, Netherlands

Synopsis

We present the computational Framework for Interactive Immersion into Imaging Data (FI3D) for 3D visualization of MR data as they are collected and on-the-fly control of the MRI scanner. The FI3D was implemented to integrate an MRI scanner with the operator who used a customized holographic scene, generated by a HoloLens head mounted display (HMD), to (1) view images and image-generated outcomes (e.g., segmentation) and (2) control the MR scanner to update the acquisition protocol. In this study, the framework linked an MR scanner in Leiden (The Netherlands) and an operator in Houston (USA).

Introduction

Modern HMDs, by enabling computer-generated holographic 3D visualization, offer improved appreciation of complex 3D structures in mixed (MR) and augmented (AR) reality scenes, and are attracting ever-growing interest in diagnostic imaging and image-guided interventions1–5. In this work, we focused on using holography to immerse the operator on-the-fly in both (i) the collected and processed data and (ii) scanner control. This was motivated by the paradigm of an operator who views 3D multi-faceted image-based renderings of a pathology and customizes additional data collection (contrast, orientations, imaging fields-of-view, etc.) to better assess and characterize this pathology. Tetherless HMDs have limited computational and storage capacity for such complex human-to-scanner integration. Therefore, we developed the FI3D to perform all data processing and communication, while the HMD is used only for input/output (IO)6. Herein, we developed an FI3D module with which an operator (in Houston, USA) views holograms of 3D-rendered multi-slice phantom MRI and uses the grasping functionality of the HoloLens to select oblique scanning planes, thereby controlling a state-of-the-art MR scanner in Leiden (The Netherlands), which transmits images back to the operator. Teleradiology, synchronous readings by multiple specialists, and education/training are just a few of the potential applications.

Methods

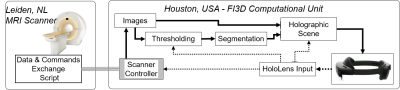

Setup and computational framework: Figure 1 shows the setup of the system as deployed for these studies. The MR scanner, the feedback mechanism, and a local instance of the MR control client were located in Leiden; the FI3D server and the operator, who interfaced with it through a HoloLens 2 holographic HMD, were in Houston. Communication between the two sites was performed via an SSL connection. The FI3D is a C++ object-oriented framework that gives an application an AR interface through remote control from AR-capable devices, such as the HoloLens. Figure 2 illustrates the architecture of the FI3D and its role as the communication fabric among components (framework-wide services) and modules (applications built on the framework). The operator executes the FI3D, which automatically sets up the required components and activates the MR control module6.

Data acquisition: Phantom experiments were performed on a 3T MR system (Philips, NL) using a 32-channel head coil. Multi-slice (and single-slice) fast field echo (FFE) data were acquired using the following scan parameters: FOV = 143×181×233 mm³, in-plane resolution = 0.6×0.7 mm², slices = 39, slice thickness = 5 mm, TE/TR = 4.6/417 ms, flip angle = 80°, water-fat shift = 2 pixels, and scan time = 3 min 32 s.
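As a rough consistency check on the slice geometry reported above, the short sketch below assumes that the 233 mm dimension of the FOV is the slice direction and that the slices are equally spaced; neither assumption is stated explicitly in the text. The function name `slice_gap_mm` is hypothetical and introduced only for this illustration.

```python
def slice_gap_mm(coverage_mm: float, n_slices: int, thickness_mm: float) -> float:
    """Gap between adjacent slices for equally spaced slices.

    Assumes coverage = n_slices * thickness + (n_slices - 1) * gap,
    i.e. coverage is measured from the outer edge of the first slice
    to the outer edge of the last slice.
    """
    if n_slices < 2:
        raise ValueError("need at least two slices to define a gap")
    return (coverage_mm - n_slices * thickness_mm) / (n_slices - 1)


# Values taken from the Methods. Treating 233 mm as the slice-direction
# coverage is an assumption made for this illustration.
gap = slice_gap_mm(coverage_mm=233.0, n_slices=39, thickness_mm=5.0)
spacing = 5.0 + gap  # center-to-center distance between adjacent slices
print(f"gap = {gap:.1f} mm, spacing = {spacing:.1f} mm")
```

Under these assumptions the gap is 1 mm and the center-to-center spacing is 6 mm, which is consistent with the FH positions quoted in Figure 5: they differ by 48 mm, a whole multiple of 6 mm.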

Feedback mechanism: acquired images were automatically sent to an external computer in Leiden using the remote control software XTC7. On the external computer in Leiden, a Python script detects and reads the newly acquired images, after which they are automatically sent to the server in Houston. The server decodes received imaging data and renders them using its GUI. Simultaneously, an HMD connected to the server receives visual information to replicate it as a holographic scene which the user can interact with using hand gestures. Updated geometry parameters are sent back to the scanner computer via a text file and imported during scanning.
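The feedback loop described above can be sketched in Python as follows. This is a minimal illustration and not the script used in the study: the `.dcm` suffix, the length-prefixed framing, the `key value` text-file layout, and all function names are assumptions, and the format actually expected by the scanner interface is not specified in the text. Only standard-library calls are used.

```python
import socket
import ssl
import struct
import time
from pathlib import Path


def find_new_images(directory: Path, seen: set[str], suffix: str = ".dcm") -> list[Path]:
    """Return files in `directory` not yet listed in `seen` (sorted by name) and mark them seen."""
    new_files = sorted(
        p for p in directory.iterdir()
        if p.is_file() and p.suffix == suffix and p.name not in seen
    )
    seen.update(p.name for p in new_files)
    return new_files


def frame(payload: bytes) -> bytes:
    """Prefix a payload with its 4-byte big-endian length so the receiver can split messages."""
    return struct.pack(">I", len(payload)) + payload


def send_images(paths: list[Path], host: str, port: int) -> None:
    """Send each file over a single TLS connection, verifying the server certificate."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port)) as raw:
        with context.wrap_socket(raw, server_hostname=host) as tls:
            for path in paths:
                tls.sendall(frame(path.read_bytes()))


def write_geometry_file(path: Path, geometry: dict[str, float]) -> None:
    """Write geometry parameters as one `key value` line each (hypothetical layout).

    The file is written under a temporary name and then renamed, so a reader
    polling `path` never sees a partially written file.
    """
    tmp = path.with_name(path.name + ".tmp")
    tmp.write_text("".join(f"{key} {value}\n" for key, value in geometry.items()))
    tmp.replace(path)


def watch(directory: Path, host: str, port: int, poll_s: float = 0.5) -> None:
    """Poll `directory` forever and forward newly appearing images to the server."""
    seen: set[str] = set()
    while True:
        new_files = find_new_images(directory, seen)
        if new_files:
            send_images(new_files, host, port)
        time.sleep(poll_s)
```

Polling keeps the sketch dependency-free; a file-system notification library would reduce latency but is not needed to show the idea. Opening a new TLS connection for every batch is also a simplification, and a long-lived connection would avoid repeated handshakes.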

Results

Figures 3 to 5 show representative results from experimental sessions of interactive operation and immersive visualization with the FI3D system setup shown in Figure 1. The operator in Houston commanded and received the multi-slice set prescribed based on the scout images. As the slices arrived in Houston, they were segmented using signal intensity (SI) thresholding and reconstructed as 3D lines using the Flying Edges algorithm. The operator, while inspecting the 3D holographic scene (any combination of renderings with/without MR images), selected oblique slices. This information was then sent via the SSL connection to Leiden, where a new scan request was issued to the scanner, which acquired data at the updated slice orientation. The new scans were then sent back to the server in Houston, where they were fused with the previous images to improve the 3D rendering while simultaneously updating the holographic scene.

Discussion

Interfacing with an MRI scanner through a mixed/augmented reality HoloLens HMD was demonstrated for immersive on-the-fly data visualization and interactive control of the scanner. The trans-Atlantic run simulated potential use in teleradiology or multisite surgical planning/consultation. The holographic scene interface offers appreciation of structures and their 3D inter-relations, while the on-line connection to the scanner enables interactive selection of acquisition parameters for generating a comprehensive multi-contrast model of the pathology of interest. As all calculations, renderings, and fusions occur in the MR scanner coordinate system, registration and selection of spatial parameters are straightforward. The work had limitations that do not affect its objective: (1) only slice position and orientation were changed and (2) only a simple phantom was tested. Human studies are planned, and future versions will include a larger list of adjustable parameters8.

Conclusion

Immersive and interactive remote control of an MRI scanner on-the-fly with an HMD is possible and intuitive. This can be used to establish new paradigms and resources in teleradiology, image-guided surgery, and education/training. Future work will focus on expanding on-the-fly interactive segmentation and rendering options and gaze/voice/grasp control of acquisition parameters. Current work includes assessment of latencies and user studies to investigate ergonomics and functionality. An open source description of the framework and HoloLens/Android/iOS Apps will soon be available.

Acknowledgements

This work was supported in part by NSF CNS-1646566 and DGE-1746046, Horizon 2020 ERC FET-OPEN 737180 Histo MRI, Horizon 2020 ERC Advanced NOMA-MRI 670629, Simon Stevin Meester Award and NWO WOTRO Joint SDG Research Programme W 07.303.101. We would like to thank Jeroen Eggermont and Michèle Huijberts for their help in obtaining technical approval.

References

1. Velazco-Garcia JD, Navkar NV, Balakrishnan S, et al. Evaluation of User Interaction with an Augmented Environment for Planning Transrectal MR-Guided Prostate Biopsies. Int J Med Robot Comput Assist Surg. 2021. doi:10.1002/rcs.2290

2. Morales Mojica CM, Velazco-Garcia JD, Pappas E, et al. A Holographic Augmented Reality Interface for Visualization of MRI Data and Planning of Neurosurgical Procedures. J Digit Imaging. 2021. doi:10.1007/s10278-020-00412-3

3. Molina G, Velazco-Garcia JD, Shah D, Becker AT, Tsekos NV. Automated Segmentation and 4D Reconstruction of the Heart Left Ventricle from CINE MRI. In: Proceedings - 2019 IEEE 19th International Conference on Bioinformatics and Bioengineering, BIBE 2019. IEEE; 2019.

4. Kim KH. The Potential Application of Virtual, Augmented, and Mixed Reality in Neurourology. Int Neurourol J. 2016;20(3):169-170.

5. Liu J, Al’Aref SJ, Singh G, et al. An Augmented Reality System for Image Guidance of Transcatheter Procedures for Structural Heart Disease. Wang Y, ed. PLoS One. 2019;14(7):e0219174.

6. Velazco-Garcia JD, Shah DJ, Leiss EL, Tsekos NV. A Modular and Scalable Computational Framework for Interactive Immersion into Imaging Data with a Holographic Augmented Reality Interface. Comput Methods Programs Biomed. 2021;198:105779.

7. Smink J, Häkkinen M, Holthuizen R, et al. eXTernal Control (XTC): A Flexible, Real-time, Low-latency, Bi-directional Scanner Interface. In: Proceedings of the 19th Annual Meeting of ISMRM. 2011;1755.

8. Koolstra K, Staring M, De Bruin P, Van Osch MJP. Individually optimized ASL background suppression using a real-time feedback loop on the scanner. In: ESMRMB. 2021;S137.

Figures

Figure 1. Diagram of the bi-directional data/command communication pipeline of the FI3D as deployed in these studies. The operator (JDV; in Houston) was interacting in real-time with the holographic scene (Houston) to adjust segmentation parameters and the MR scanner (Leiden) to adjust imaging parameters. In the holographic interface, the operator can place the holographic scene at a preferable location.

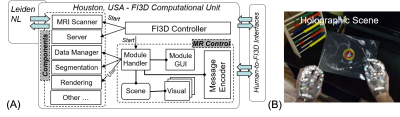

Figure 2. (A) Architecture of the FI3D server run in Houston; the operator interfaces with the system through its GUI and remotely through the holographic interface in the HMD. (B) Capture from the HoloLens HMD connected to the FI3D. The operator manipulates a holographic scene composed of a CINE MRI and a 3D segmentation of the left ventricle. In this example, the operator used previously collected images and the image processing and segmentation components6.

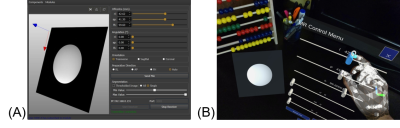

Figure 3. (A) Screenshot of the user interface of the MRI scanner control module in the FI3D’s GUI. (B) Capture of the MRI control holographic scene from the HoloLens 2 HMD.

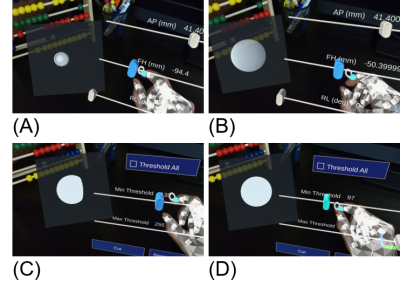

Figure 4. (A) A transverse slice from a phantom multi-slice set as received from the connection to the MR scanner. (B) Interactively thresholded image (using the HoloLens 2 grasping hand gesture, as in Figures 3B and 2B). (C) Segmentation applied on the image. (D) A 3D view of the scene with all received images interactively segmented to create a 3D frame of the scanned phantom.

Figure 5. Captures of the holographic interface. In (A) and (B), the operator selects different FH positions (from -98.4 mm to -50.4 mm). These selections are sent by the MR control module to Leiden for scan collection, and the resulting images are then sent back to Houston for visualization. In (C) and (D), the operator adjusts thresholding parameters, resulting in modifications to the segmentation routine.

DOI: https://doi.org/10.58530/2022/2227