2226

Automatic fibroglandular tissue segmentation in breast MRI using a deep learning approach

Department of Research & Innovation, Olea Medical, La Ciotat, France

Synopsis

An accurate fibroglandular tissue (FGT) segmentation model was designed using a deep learning strategy on T1w series without fat suppression. The proposed method combined dedicated preprocessing with the training of a two-dimensional U-Net architecture on a representative multi-centric database to achieve automatic FGT segmentation. On the final test, the model performed well overall, with a median Dice similarity coefficient of 0.951. Performance was more mixed when the discrepancy between ground truth and prediction was related to gland density: the lower the breast density, the greater the uncertainty in the segmentation.

Introduction

Breast MRI screening relies on several criteria to assess breast cancer risk through the BI-RADS (Breast Imaging-Reporting and Data System) classification. Breast density is one of them [1]. Unlike 2D mammography, a 3D MR exam provides fine spatial detail and sufficient tissue intensity contrast to segment the fibroglandular tissue (FGT), as demonstrated in previous studies [2-4]. The spatial extent of FGT can readily be translated into breast density. Another important criterion, the background parenchymal enhancement (BPE), can be quantified by applying the FGT segmentation across the dynamic contrast-enhanced time series. In this study, a deep learning approach was used to design a model for FGT segmentation on T1w images without fat saturation using a multi-vendor, multi-centric dataset.

Methods

The database consisted of 345 full MRI exams from different imaging centers, acquired on systems from different manufacturers with different sequence parameters. For this study, the T1w images without fat saturation were selected to build the dataset. The native orientation was axial, with an isotropic pixel spacing ranging from 0.33 to 0.94 mm (median 0.74 mm) and a slice thickness ranging from 0.75 to 7 mm (median 4 mm).

The initial labeling was performed semi-automatically, with a 2-class clustering based on thresholding and k-means. These coarse labels were then refined with the ImFusion Labels software, and finally the FGT labels were corrected by an expert with significant experience in breast MRI.
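As an illustration of the coarse 2-class clustering step, the sketch below runs a one-dimensional k-means on voxel intensities and returns the darker cluster as an FGT candidate, since FGT appears darker than fat on T1w images without fat suppression. It is not the labeling tool used in the study: the function name `two_class_kmeans`, the min/max initialization and the iteration cap are assumptions, and the input is assumed to contain breast voxels only.

```python
import numpy as np

def two_class_kmeans(intensities, n_iter=50):
    """Cluster intensities into two classes (hypothetical helper).

    Returns a boolean mask of the darker cluster and the two cluster centers.
    """
    values = np.asarray(intensities, dtype=float).ravel()
    # Assumed initialization: darkest and brightest intensity.
    centers = np.array([values.min(), values.max()])
    for _ in range(n_iter):
        # Assign each voxel to its nearest center.
        labels = np.abs(values[:, None] - centers[None, :]).argmin(axis=1)
        new_centers = centers.copy()
        for k in range(2):
            if np.any(labels == k):
                new_centers[k] = values[labels == k].mean()
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    labels = np.abs(values[:, None] - centers[None, :]).argmin(axis=1)
    darker = (labels == 0).reshape(np.shape(intensities))
    return darker, centers
```

Such a mask would only be a starting point; in the study the coarse labels were subsequently refined and corrected by an expert.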

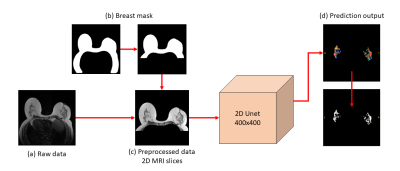

Dedicated preprocessing was used to focus on the breast area. The thorax was masked out in the native images and the breast area was cropped under the sternum, as depicted in Figure 1b. Each case was assigned a BI-RADS breast density category to monitor the proportions of the four classes in the dataset: 17%, 27%, 32% and 24% for classes A, B, C and D, respectively. For training, the dataset was split into training, validation and testing sets in proportions of 60%, 20% and 20%, respectively.
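A 60%/20%/20% split at the case level could be sketched as follows. The text does not state whether the split was stratified by density class; stratification is an assumption made here so that each subset keeps roughly the same A to D proportions. The function name `stratified_split`, its arguments and the fixed seed are hypothetical.

```python
import random
from collections import defaultdict

def stratified_split(case_classes, fractions=(0.6, 0.2, 0.2), seed=0):
    """Split case IDs into train/validation/test lists (hypothetical helper).

    case_classes maps a case ID to its BI-RADS density class ("A" to "D").
    Stratifying by class is an assumption, not a detail given in the text.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for case_id, density in sorted(case_classes.items()):
        by_class[density].append(case_id)

    train, val, test = [], [], []
    for density in sorted(by_class):
        ids = by_class[density]
        rng.shuffle(ids)
        n_train = round(fractions[0] * len(ids))
        n_val = round(fractions[1] * len(ids))
        train += ids[:n_train]
        val += ids[n_train:n_train + n_val]
        # The remainder goes to the test set, so no case is dropped.
        test += ids[n_train + n_val:]
    return train, val, test
```

Splitting by case rather than by slice keeps all slices of one exam in the same subset, which avoids leaking near-identical slices between training and testing.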

A two-dimensional U-Net architecture [5] was trained to differentiate FGT from the remaining breast tissues. The model weights were optimized using the binary cross-entropy loss function. The preprocessed 2D slices were resized to 400x400 pixels and their intensities were rescaled. Training progress and model performance were assessed using the Dice similarity coefficient (DSC) and the relative volume error (RVE), defined as $$$\frac{\mid V_{\text{prediction}}-V_{\text{ground truth}}\mid}{V_{\text{ground truth}}} \times 100$$$, both computed on the native 3D volumes.
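The two evaluation metrics can be computed from a pair of binary 3D masks as in the minimal sketch below. The DSC is written in its standard form, twice the overlap divided by the sum of the two mask sizes, and the RVE follows the definition given above. The function names, the `voxel_volume_mm3` parameter and the handling of empty masks are assumptions made for illustration.

```python
import numpy as np

def dice_coefficient(prediction, ground_truth):
    """Dice similarity coefficient between two binary masks."""
    pred = np.asarray(prediction, dtype=bool)
    truth = np.asarray(ground_truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    return 2.0 * np.logical_and(pred, truth).sum() / denom

def relative_volume_error(prediction, ground_truth, voxel_volume_mm3=1.0):
    """Relative volume error in percent: |V_pred - V_truth| / V_truth * 100."""
    v_pred = np.count_nonzero(prediction) * voxel_volume_mm3
    v_truth = np.count_nonzero(ground_truth) * voxel_volume_mm3
    if v_truth == 0:
        raise ValueError("RVE is undefined for an empty ground truth mask")
    return abs(v_pred - v_truth) / v_truth * 100.0
```

Because the RVE is a ratio of volumes, the voxel volume cancels out whenever both masks share the same grid; the parameter is kept only to make the volumes explicit.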

Results

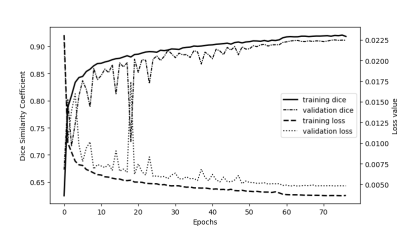

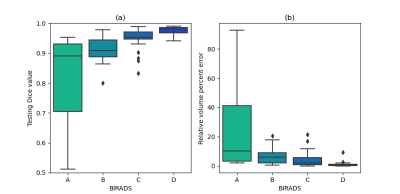

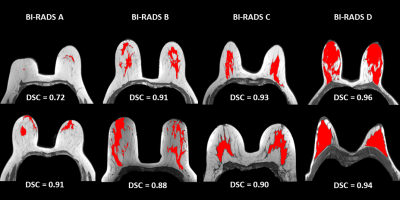

The neural network, in conjunction with the dataset, demonstrated the ability to learn the task, with final training and validation DSC of 0.918 and 0.911, respectively (Figure 2). The FGT segmentation model achieved high overall performance on the test set: the average DSC was 0.917, with values ranging from 0.512 to 0.99. Per density class, the median DSC was 0.890, 0.908, 0.954 and 0.981 for classes A to D, respectively (Figure 3a). The lower the breast density, the greater the uncertainty in the segmentation result. This tendency is reflected in the RVE results shown in Figure 3b, with less than 10% error for classes B, C and D, and more than 20% error for class A. Across all classes, the overall median RVE was 2.11%. Figure 4 displays several examples of the segmentation results.

Discussion

FGT segmentation is a complicated task given the thinness of the structures to segment. Compared with previous studies [2-4], our results further support the ability of the 2D U-Net architecture to address the FGT segmentation task. Here, the multi-centric database was intended to increase dataset diversity and strengthen the algorithm’s robustness in real-life use. The overall DSC values (median: 0.951, average: 0.917) and the overall RVE values (median: 2.11%, average: 7.20%) showed good consistency with the ground truth. However, the model struggled with less dense breasts, producing more outliers and a growing dispersion from class D to class A (Figure 3a). Interestingly, in lower-density cases the mammary gland tends to consist mainly of fibrous tissue. Its spatial extent may be difficult to identify at the labeling step, so the ground truth itself may lack accuracy, which may partly explain the poorer DSC and RVE values for class A. It is worth mentioning that the effectiveness of the preprocessing relied on an accurate determination of the breast mask. As shown in [4], the FGT mask (Figure 4) can later be used to determine the breast density and BPE classes, and offered as a computer-aided diagnostic tool to assess breast cancer risk.

Conclusion

This study demonstrated the effectiveness of the 2D U-Net network for segmenting fibroglandular tissue on a multi-centric database. The model performed well across all breast densities, with more outliers for the least dense breasts. Such a model is intended to capture the diversity of real-life data and can help guide BI-RADS scoring to assess breast cancer risk.

Acknowledgements

The authors would like to thank the following collaborators from Olea Medical for their help on the manual segmentation task: Florence FERET, Manon SCHOTT and Emily GEYLER.

References

- V. A. McCormack. Breast density and parenchymal patterns as markers of breast cancer risk: A meta-analysis. Cancer Epidemiology Biomarkers & Prevention, 15(6):1159–1169, June 2006. doi: 10.1158/1055-9965.epi-06-0034.

- Yang Zhang, Jeon-Hor Chen, Kai-Ting Chang, Vivian Youngjean Park, Min Jung Kim, Siwa Chan, Peter Chang, Daniel Chow, Alex Luk, Tiffany Kwong, and Min-Ying Su. Automatic breast and fibroglandular tissue segmentation in breast MRI using deep learning by a fully-convolutional residual neural network U-net. Academic Radiology, 26(11):1526–1535, November 2019. doi: 10.1016/j.acra.2019.01.012.

- Tatyana Ivanovska, Thomas G. Jentschke, Amro Daboul, Katrin Hegenscheid, Henry Völzke, and Florentin Wörgötter. A deep learning framework for efficient analysis of breast volume and fibroglandular tissue using MR data with strong artifacts. International Journal of Computer Assisted Radiology and Surgery, 14(10):1627–1633, March 2019. doi: 10.1007/s11548-019-01928-y.

- D. Wei et al. Fully automatic quantification of fibroglandular tissue and background parenchymal enhancement with accurate implementation for axial and sagittal breast MRI protocols. Medical Physics, 48(1):238–252, January 2021.

- O. Ronneberger, P. Fischer, and T. Brox. U-net: Convolutional networks for biomedical image segmentation. In: Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 9351, pages 234–241. Springer Verlag, 2015. doi: 10.1007/978-3-319-24574-4_28.

Figures

Figure 1: Schematic of the segmentation process

Raw data (a) are T1w series without fat saturation. Using a dedicated deep learning breast segmentation model, the volume was preprocessed by applying the breast mask, which removed the thorax and the image background (b). The 2D U-Net operates on 400x400-pixel cropped T1w slices (c) and returns a prediction map for both breasts, which is finally binarized to recover the FGT mask.

Figure 2: Loss and Dice curves as a function of the number of epochs

Training and validation curves over the epochs for the binary cross-entropy loss and the Dice similarity coefficient.

Figure 3: Model performance by BI-RADS density category

The test dataset is split into the four BI-RADS breast density categories. Model performance was evaluated on each category using the Dice similarity coefficient and the relative volume error.

Figure 4: FGT segmentation results for the testing set.

The whole range of densities is present in the test dataset, from very fatty breasts to very dense ones (i.e., from A to D). Each panel shows the central slice of the axial T1w volume with the overlaid segmentation result and the DSC value.