2139

Interactive Real-Time MRI-Guided Needle Tracking using Scanner Remote Control

¹Biomedical Engineering, University of Toronto, Toronto, ON, Canada; ²Radiology, Brigham and Women’s Hospital and Harvard Medical School, Boston, MA, United States; ³Siemens Medical Solutions USA Inc., Boston, MA, United States; ⁴Siemens Healthcare Ltd, Vineland, ON, Canada; ⁵Siemens Medical Solutions USA Inc., Newton, MA, United States; ⁶University of Toronto, Toronto, ON, Canada

Synopsis

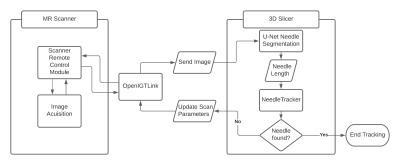

MRI is the gold standard for delineating cancerous lesions in soft tissue. Minimally invasive needle-based interventions require the accurate placement of multiple long, flexible needles at the target site. Manually tracking needles in MR images is time-consuming, and needle deflection makes the task harder still. Automated needle segmentation provides a means to evaluate the alignment of the needle with the scan plane. This work demonstrates automatic needle tracking that uses remote scanner control to dynamically update the scan plane of a real-time MR sequence. Automatic scan plane alignment is validated, and the feasibility of needle tracking is assessed.

Introduction

Magnetic resonance imaging provides excellent visualization of soft tissue targets for needle-based interventions such as prostate brachytherapy. Accurate placement of needles according to a treatment plan is vital for the safety and efficacy of treatment, but in practice needle deflection limits targeting accuracy [1]. Real-time MRI offers a means to track the needle during insertion and to provide feedback for needle steering strategies. However, aligning the real-time MR slice with the expected path may not capture the full length of the needle, because the needle tip can deviate out of the expected imaging plane. Li et al. previously demonstrated a deep-learning approach to guide scan plane alignment for misaligned needles [2]. In this work, we demonstrate the feasibility of near real-time MR needle tracking, using a prototype Scanner Remote Control (SRC) add-in to interactively update the scan plane from a stand-alone PC during image acquisition.

Methods

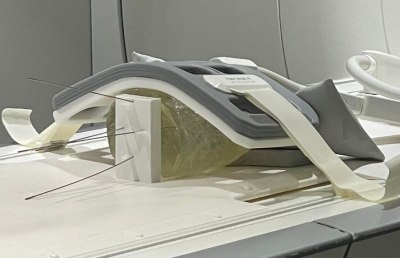

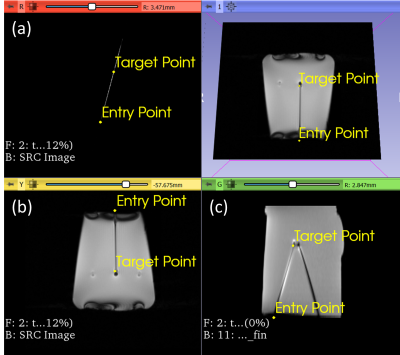

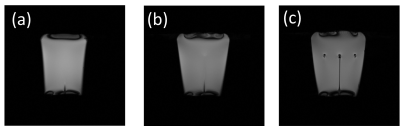

Images were acquired on a 1.5 T MRI scanner (Magnetom Aera, Siemens Healthcare, Erlangen, Germany). The scan parameters were updated via SRC, with OpenIGTLink [3] serving as the communication bridge between the scanner and a stand-alone PC. The user interface is built in 3D Slicer [4]. Three slice configurations are available for tracking: a single slice, 3 parallel slices, and 3 independent slice groups. The planned targets and entry points were defined on a multi-slice T2-weighted TSE, and the corresponding slice positions and orientations were computed in 3D Slicer. The needle was segmented automatically at each time step using a previously described U-Net model adapted for real-time imaging [5]. The difference between the needle length visible in the real-time image and the expected needle length is used to guide the needle tracking algorithm.

(1) Validation of Automatic Slice Alignment. A cylindrical gelatin phantom was placed at the isocenter of the magnet. A custom 3D-printed template was positioned on the flat face of the phantom (Fig. 2). The guide holes in the template define four fixed-angle needle paths (±10° from the vertical and ±15° from the horizontal). For each target path, a 6F titanium alloy needle was inserted to a depth of 100 mm at an average rate of 2.5 mm/s. Real-time MR images were acquired using a BEAT sequence (TR/TE = 861.56/2.22 ms, FA = 70°, FOV = 300×300 mm², slice thickness = 5 mm, matrix = 192×192, TA = 0.8625 s/frame). During acquisition, the scan plane was updated to the expected needle trajectory via 3D Slicer and validated against the known position and orientation of the inserted needles.

(2) Preliminary Evaluation of Automated Needle Tracking. To confirm the feasibility of tracking needle deflection, the scan plane was initialized to a coronal plane containing the expected entry point of the needle. The overall tracking time and the localization accuracy of the automated algorithm were compared with those obtained by manually tracking the needle with the scan plane.
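The abstract states that the difference between the visible and expected needle length guides tracking, but it does not describe the search itself. The following is a minimal sketch of one way such a length-deficit loop could work, not the authors' algorithm. It assumes that the needle is available as a sampled 3D polyline (standing in for the U-Net segmentation), that the slab has the stated 5 mm thickness, and that the search tilts the slice about a single in-plane axis through the entry point until the visible length reaches 90% of the expected length. All function names, the `hint` direction, the step sizes and the tolerance are hypothetical.

```python
import numpy as np


def plane_from_path(entry, target, hint=(1.0, 0.0, 0.0)):
    """Right-handed frame (a, v, n) for a slice containing the planned needle path.

    a: unit direction entry -> target; v: in-plane axis perpendicular to a;
    n: slice normal. `hint` is a hypothetical direction that, with a, spans the slice.
    """
    a = np.asarray(target, float) - np.asarray(entry, float)
    a /= np.linalg.norm(a)
    h = np.asarray(hint, float)
    v = h - np.dot(h, a) * a  # Gram-Schmidt: drop the component along the path
    if np.linalg.norm(v) < 1e-9:
        raise ValueError("hint must not be parallel to the planned path")
    v /= np.linalg.norm(v)
    n = np.cross(a, v)
    return a, v, n


def tilted_normal(n0, v, theta):
    """Rotate normal n0 by theta (rad) about the in-plane unit axis v (Rodrigues, v is perpendicular to n0)."""
    return np.cos(theta) * n0 + np.sin(theta) * np.cross(v, n0)


def visible_length(needle_pts, plane_point, normal, thickness_mm):
    """Needle length (mm) inside the slab, counting segments whose two endpoints
    both lie within thickness/2 of the plane."""
    dist = np.abs((needle_pts - plane_point) @ normal)
    inside = dist <= thickness_mm / 2.0
    seg_len = np.linalg.norm(np.diff(needle_pts, axis=0), axis=1)
    return float(seg_len[inside[:-1] & inside[1:]].sum())


def track_by_length_deficit(measure, expected_mm, step_deg=8.0, min_step_deg=0.25,
                            tol=0.9, max_frames=50):
    """Greedy 1-D search over the tilt angle, driven by the visible-length deficit.

    `measure(theta)` stands in for: set the slice, acquire a frame, segment the
    needle and return its visible length in mm. Each call is counted as one frame.
    """
    theta, step = 0.0, np.deg2rad(step_deg)
    best = measure(theta)
    frames = 1
    while best < tol * expected_mm and frames < max_frames:
        candidates = (theta + step, theta - step)
        lengths = [measure(c) for c in candidates]
        frames += len(candidates)
        k = int(np.argmax(lengths))
        if lengths[k] > best:          # deficit shrank: move the slice
            theta, best = candidates[k], lengths[k]
        elif step / 2.0 >= np.deg2rad(min_step_deg):
            step /= 2.0                # no improvement: refine the step
        else:
            break                      # stop without meeting the tolerance
    return theta, best, frames


if __name__ == "__main__":
    entry = np.zeros(3)
    a, v, n0 = plane_from_path(entry, (0.0, 0.0, 100.0))
    # Simulated needle: 100 mm long, deflected 10 degrees out of the planned slice.
    phi = np.deg2rad(10.0)
    needle = np.outer(np.linspace(0.0, 100.0, 201), np.cos(phi) * a + np.sin(phi) * n0)

    def measure(th):
        return visible_length(needle, entry, tilted_normal(n0, v, th), 5.0)

    theta, length, frames = track_by_length_deficit(measure, expected_mm=100.0)
    print(f"tilt = {np.rad2deg(theta):.1f} deg, visible = {length:.1f} mm, frames = {frames}")
```

The sketch requires some of the needle to be visible in the initial slice (a positive starting length), which matches the limitation noted in the Discussion. On a real scanner, each `measure` call would cost one acquisition. The step schedule therefore trades convergence speed against the frame time.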
In the automated case, needle tracking was started, and the sequence was terminated when the software reported that the needle had been found. In the manual case, the needle was localized with a prototype interactive real-time sequence: the operator adjusted the position and orientation of the scan plane until satisfied that the needle had been found. The needle tracking error was measured by comparing both the automatically defined and the manually defined needles on the real-time images with a ground-truth reference needle. The reference was obtained by manual segmentation of a final multi-slice T2-weighted TSE (TR/TE = 4770/59 ms, FA = 160°, FOV = 150×150 mm², slice thickness = 3 mm, matrix = 192×192).

Results
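The tracking errors reported here compare each needle estimate with the reference needle segmented on the final T2-weighted TSE, but the abstract does not define the distance metric. The following minimal sketch shows one plausible choice, not the authors' definition. It takes the mean distance from sampled points on the tracked needle to the reference needle, modeled as a straight segment from entry to tip. It also reports the needle-to-plane angle as one way to check slice alignment. Function names are hypothetical, and treating the reported ± values as mean and sample standard deviation is an assumption.

```python
import numpy as np


def point_to_segment_distance(points, seg_start, seg_end):
    """Euclidean distance (mm) from each point to the closed segment [seg_start, seg_end]."""
    p = np.atleast_2d(np.asarray(points, float))
    a = np.asarray(seg_start, float)
    b = np.asarray(seg_end, float)
    ab = b - a
    t = np.clip((p - a) @ ab / np.dot(ab, ab), 0.0, 1.0)  # projection clamped to the segment
    closest = a + t[:, None] * ab
    return np.linalg.norm(p - closest, axis=1)


def needle_tracking_error(tracked_pts, ref_entry, ref_tip):
    """Mean distance (mm) from sampled tracked-needle points to the reference needle."""
    return float(point_to_segment_distance(tracked_pts, ref_entry, ref_tip).mean())


def needle_to_plane_angle_deg(direction, normal):
    """Angle (deg) between a needle direction and a scan plane; 0 means fully in-plane."""
    d = np.asarray(direction, float)
    n = np.asarray(normal, float)
    s = abs(np.dot(d, n)) / (np.linalg.norm(d) * np.linalg.norm(n))
    return float(np.degrees(np.arcsin(np.clip(s, 0.0, 1.0))))


def mean_sd(errors):
    """Mean and sample standard deviation of per-trial errors."""
    e = np.asarray(errors, float)
    return float(e.mean()), float(e.std(ddof=1))
```

A point-to-segment distance penalizes both lateral offset and overshoot past the tip, because the projection is clamped to the segment ends. A deflected reference needle would instead need a polyline, with the distance taken to the nearest of its segments.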

(1) Validation of Automatic Slice Alignment. The scan plane successfully aligned to the expected needle path: the full needle shaft was captured after the scan plane update (Fig. 3). The real-time images captured the needle shaft with a visualization latency of 1.72 s on the MR inline viewer after a click-based scan plane update.

(2) Evaluation of Automated Needle Tracking. The tracking errors for automated and manual slice alignment were 2.9 ± 1.8 mm and 2.0 ± 1.2 mm, respectively. The scan plane was updated automatically after each image acquisition, with an update period of 0.86 s for the complete cycle of acquisition, reconstruction, image transfer, rendering, needle localization and scan plane update. The overall needle tracking time was 6.90 s in the automated case and 39.13 s in the manual case.

Discussion and Conclusion
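The timings in the Results can be put in proportion with some simple arithmetic. Values are rounded, and the cycle count assumes that the 0.86 s update period held for every cycle of the automated search:

$$
\begin{aligned}
f_{\text{update}} &= \frac{1}{0.86\ \text{s}} \approx 1.16\ \text{Hz}, \\
N_{\text{auto}} &\approx \frac{6.90\ \text{s}}{0.86\ \text{s}} \approx 8\ \text{update cycles}, \\
\frac{t_{\text{manual}}}{t_{\text{auto}}} &= \frac{39.13\ \text{s}}{6.90\ \text{s}} \approx 5.7, \\
\frac{1.72\ \text{s}}{0.8625\ \text{s/frame}} &\approx 2\ \text{frames}.
\end{aligned}
$$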

The results demonstrate the feasibility of closed-loop feedback control of scan plane updates using remote control of an interactive real-time MR sequence. The open-source network interface allows adaptation to a variety of needle insertion strategies. The needle deviation observed in the scan plane alignment experiment may be attributed to the printing resolution of the 3D-printed template used as the ground truth. Further tuning is planned to optimize the search and its coordination with feedback from the MR scanner. Currently, the success of needle tracking and the overall tracking time are sensitive to the initialization of the scan plane, for both manual and automated tracking. The algorithm requires the needle to be at least partially in view at the start of tracking. More robust needle segmentation may be achieved by training the U-Net on a larger and more diverse dataset. Real-time image-based feedback on needle deflection will support robotic control strategies for needle insertion while helping to ensure safe needle placement with minimal placement error.

Acknowledgements

The study was funded in part by the National Institutes of Health (4R44CA224853, R01EB020667, R01CA235134, P41EB015898) and Siemens Healthineers. The SRC package was provided by Siemens as Work-in-Progress.

References

[1] Xu H, et al. Accuracy analysis in MRI-guided robotic prostate biopsy. Int J Comput Assist Radiol Surg. 2013;8(6):937-944. doi:10.1007/s11548-013-0831-9

[2] Li X, Lee Y-H, Tsao T-C, Wu HH. Deep learning-driven automatic scan plane alignment for needle tracking in MRI-guided interventions. Proceedings of the ISMRM 29th Annual Meeting, Vancouver, Canada; 2021:0861.

[3] Tokuda J, Fischer GS, Papademetris X, et al. OpenIGTLink: an open network protocol for image-guided therapy environment. Int J Med Robot Comput Assist Surg. 2009;5(4):423-434. doi:10.1002/rcs.274

[4] Fedorov A, Beichel R, Kalpathy-Cramer J, et al. 3D Slicer as an image computing platform for the Quantitative Imaging Network. Magn Reson Imaging. 2012;30(9):1323-1341. doi:10.1016/j.mri.2012.05.001

[5] Aleong A, Weersink R. Machine learning based segmentation of multiple needles for MR-guided prostate brachytherapy. WMIC 2019, Montreal, Quebec, Sep 4 – 7, 2019.

Figures