1972

Artificially-generated Consolidations and Balanced Augmentation increase Performance of U-Net for Lung Parenchyma Segmentation on MR Images

¹Institute of Diagnostic and Interventional Radiology, Medical School Hannover, Hannover, Germany, ²Biomedical Research in Endstage and Obstructive Lung Disease Hannover (BREATH), Member of the German Centre for Lung Research (DZL), Hannover, Germany

Synopsis

Accurate, fully automated lung segmentation is needed to facilitate the use of Fourier-decomposition-based techniques in clinical routine across different centers. However, lung parenchyma segmentation remains challenging for convolutional neural networks (CNNs) when consolidations are present. To improve training, balanced augmentation (BA) and artificially-generated consolidations (AGC) were introduced. The proposed CNN was compared to conventional CNNs without BA and AGC using the Sørensen-Dice coefficient (SDC) and the Hausdorff distance (HD). The SDC / HD of the proposed model is significantly better (p = 0.0001 and p = 0.0146 / p = 0.0009 and p = 0.0152) than that of the CNNs without BA and AGC.

Introduction

Phase-resolved functional lung (PREFUL) MR imaging [1] allows assessment of pulmonary ventilation and perfusion dynamics in free breathing without contrast agents or gas inhalation. An important post-processing step is the segmentation of the lung parenchyma. However, the presence of consolidations [2] can make correct lung parenchyma segmentation challenging. MR images with artificially-generated consolidations (AGC) might be a viable way to improve automated lung segmentation [3]. Since our database does not contain enough examinations with real consolidations, we decided to create artificial ones. In addition, 96% of the 2D MR datasets in our database are coronal slices centered on the trachea. Slices further anterior or posterior are therefore underrepresented, which might be corrected by balanced augmentation (BA) [4]. The aim of this study was therefore to develop a CNN that uses AGC and BA during training in order to reliably segment the lung parenchyma even in the presence of real consolidations.

Methods
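To make the idea of balanced augmentation concrete, here is a minimal sketch. The abstract does not spell out the BA scheme (it is summarized in Table 1b), so grouping slices by position ("central", "anterior", "posterior") and augmenting each group up to the size of the largest group are assumptions; the function names are hypothetical.

```python
import math
from collections import Counter

def balanced_augmentation_factors(slice_positions):
    """Copies per image (original + augmented variants) so that every
    slice-position group roughly reaches the size of the largest group."""
    counts = Counter(slice_positions)
    target = max(counts.values())
    return {group: math.ceil(target / n) for group, n in counts.items()}

def balanced_group_sizes(slice_positions):
    """Group sizes after augmentation, to check the resulting balance."""
    counts = Counter(slice_positions)
    factors = balanced_augmentation_factors(slice_positions)
    return {group: counts[group] * factors[group] for group in counts}

# Hypothetical toy data mirroring the 96 % central / 4 % off-center imbalance
positions = ["central"] * 96 + ["anterior"] * 2 + ["posterior"] * 2
print(balanced_augmentation_factors(positions))
# {'central': 1, 'anterior': 48, 'posterior': 48}
print(balanced_group_sizes(positions))
# {'central': 96, 'anterior': 96, 'posterior': 96}
```

In this toy example each anterior or posterior image would be kept once and supplemented by 47 augmented variants (for example small rotations or intensity changes, also an assumption), whereas central slices are not oversampled.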

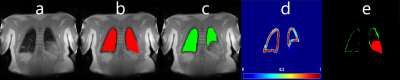

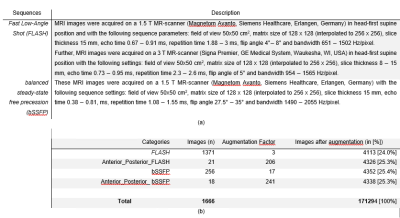

A total of 233 healthy volunteers (134 female, 99 male) and 100 patients (56 female, 44 male) were included. The patient cohort comprised subjects with different pathologies: chronic obstructive pulmonary disease (COPD) (n = 54; 34 female, 20 male), cystic fibrosis (CF) (n = 35; 20 female, 15 male), and chronic thromboembolic pulmonary hypertension (CTEPH) (n = 11; 6 female, 5 male). MR images were acquired using fast low angle shot (FLASH) and balanced steady-state free precession (bSSFP) sequences with the parameters stated in Table 1a.

All images of each slice were registered to an intermediate respiratory state using Advanced Normalization Tools (ANTs) [5]. The registered images of each slice were then averaged over time, yielding a single averaged image per slice. A total of 1891 averaged coronal MR images were manually segmented by a scientist with two years of experience in lung MRI (C.C.), supervised by a radiologist (J.V.C., >18 years of MRI experience).

For training, AGC were inserted into 1666 (90%) MR images (see Figure 1) and BA was performed (see Table 1b). The test dataset consisted of 187 (10%) MR images without consolidations and 38 additional MR images with real consolidations. Two classes were defined for training: (1) "contour of the lungs" (ground truth) and (2) "background" (outside and within the lung), see Figure 2. The output of the CNN was a probability map of the lung parenchyma contour.
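Figure 1 illustrates the AGC, but the abstract does not describe how they were generated. As a hedged illustration only, one simple way to mimic a consolidation is to paint an elliptical region of elevated, soft-tissue-like signal into the lung while leaving the lung label unchanged, so that a network learns to keep consolidated areas inside the lung. The ellipse shape, intensity value, noise model and function name are all hypothetical.

```python
import numpy as np

def insert_artificial_consolidation(image, lung_mask, center, radii, intensity,
                                    noise_std=0.0, rng=None):
    """Paint an elliptical, consolidation-like region into a 2D MR image.

    Only pixels inside both the ellipse and the lung mask are changed. The lung
    mask itself is not modified: the consolidated area still counts as lung.
    Returns the augmented image (float32 copy) and the boolean painted region.
    """
    rng = np.random.default_rng() if rng is None else rng
    rows, cols = np.ogrid[:image.shape[0], :image.shape[1]]
    cy, cx = center
    ry, rx = radii
    ellipse = ((rows - cy) / ry) ** 2 + ((cols - cx) / rx) ** 2 <= 1.0
    region = ellipse & lung_mask.astype(bool)
    out = image.astype(np.float32)  # astype returns a copy, input stays untouched
    out[region] = intensity + rng.normal(0.0, noise_std, size=int(region.sum()))
    return out, region

# Toy usage: dark "lung" signal with a bright elliptical pseudo-consolidation
rng = np.random.default_rng(0)
image = rng.normal(0.2, 0.05, size=(256, 256)).astype(np.float32)
lung = np.zeros((256, 256), dtype=bool)
lung[60:200, 40:120] = True
augmented, region = insert_artificial_consolidation(
    image, lung, center=(150, 80), radii=(20, 15), intensity=0.8,
    noise_std=0.05, rng=rng)
```

Clipping the ellipse to the lung mask keeps the synthetic lesion inside the parenchyma, so the unchanged manual segmentation remains a valid ground truth for the augmented image.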

The network architecture used in this work was U-Net [6]. Input images were resized to 256 × 256 pixels, and the output layer size was 256 × 256. Training used a batch size of 64, 100 epochs, and accuracy as the metric.
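A minimal preprocessing sketch for the 256 × 256 input and the two-class target, assuming nearest-neighbour resizing and a one-pixel inner contour derived from the manual lung mask as the "contour of the lungs" class. Neither the interpolation method nor the contour extraction is specified in the abstract, and all function names are hypothetical.

```python
import numpy as np

def resize_nearest(array, size=(256, 256)):
    """Nearest-neighbour resize of a 2D array to `size` (rows, cols)."""
    in_h, in_w = array.shape
    out_h, out_w = size
    row_idx = np.arange(out_h) * in_h // out_h
    col_idx = np.arange(out_w) * in_w // out_w
    return array[row_idx[:, None], col_idx[None, :]]

def contour_from_mask(mask):
    """One-pixel inner contour: lung pixels with at least one 4-connected
    neighbour outside the lung (pixels beyond the image border count as outside)."""
    m = np.pad(mask.astype(bool), 1, mode="constant", constant_values=False)
    core = m[1:-1, 1:-1]
    interior = core & m[:-2, 1:-1] & m[2:, 1:-1] & m[1:-1, :-2] & m[1:-1, 2:]
    return core & ~interior

def two_class_target(mask, size=(256, 256)):
    """One-hot target of shape (rows, cols, 2):
    channel 0 = background, channel 1 = lung contour."""
    contour = contour_from_mask(resize_nearest(mask.astype(bool), size))
    return np.stack([~contour, contour], axis=-1).astype(np.float32)
```

Resizing the mask before extracting the contour keeps the contour exactly one pixel wide at the network resolution; with a softmax output, channel 1 then plays the role of the contour probability map.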

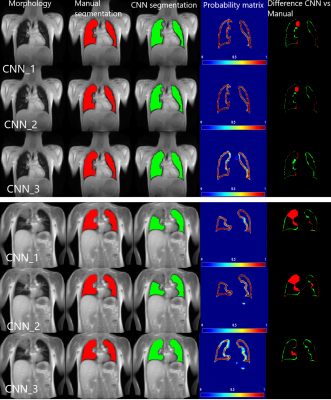

Training was performed on a server with two NVIDIA Quadro RTX 6000 GPUs (24 GB each) and took 3 hours. The proposed CNN (CNN_3: with AGC and with BA) was compared to two other CNNs: CNN_1 (without AGC and without BA) and CNN_2 (without AGC and with BA).

The Sørensen-Dice coefficient (SDC) and Hausdorff distance (HD) of all three CNNs were compared using paired t-tests with a Bonferroni-corrected significance level for the three pairwise comparisons (α = 0.016).
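The evaluation can be sketched as follows, assuming binary masks, an HD computed over all foreground pixels with a given pixel spacing in mm, and SciPy's paired t-test (`scipy.stats.ttest_rel`). The exact HD definition used in the study (for example contour pixels only, or a percentile HD) is not stated in the abstract, and the function names are hypothetical. Note that `dice` returns a fraction, whereas the SDC values in the results appear to be given in percent.

```python
import numpy as np
from scipy.spatial.distance import directed_hausdorff
from scipy.stats import ttest_rel

def dice(pred, truth):
    """Sørensen-Dice coefficient of two binary masks (1.0 if both are empty)."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)

def hausdorff_mm(pred, truth, spacing_mm=(1.0, 1.0)):
    """Symmetric Hausdorff distance (mm) between the foreground pixels of two masks."""
    a = np.argwhere(pred) * np.asarray(spacing_mm, dtype=float)
    b = np.argwhere(truth) * np.asarray(spacing_mm, dtype=float)
    if len(a) == 0 or len(b) == 0:
        raise ValueError("both masks need at least one foreground pixel")
    return float(max(directed_hausdorff(a, b)[0], directed_hausdorff(b, a)[0]))

def paired_test(scores_a, scores_b, alpha=0.05, n_comparisons=3):
    """Paired t-test; significant if p < alpha / n_comparisons (Bonferroni)."""
    p = float(ttest_rel(scores_a, scores_b).pvalue)
    return p, p < alpha / n_comparisons

a = np.zeros((10, 10), dtype=bool)
a[2:6, 2:6] = True
b = np.zeros((10, 10), dtype=bool)
b[2:6, 3:7] = True
print(dice(a, b))          # 0.75
print(hausdorff_mm(a, b))  # 1.0
```

With α = 0.05 and three comparisons the threshold is 0.05 / 3 ≈ 0.0167, which the abstract reports as 0.016.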

Results

In images without consolidations, the SDC of CNN_2 was significantly higher than that of CNN_1 (SDC of 92.0 ± 6.6 vs 94.0 ± 5.3, p = 0.0013; HD of 7.1 ± 4.5 mm), and the SDC of CNN_3 was also significantly higher than that of CNN_1 (SDC of 92.0 ± 6.6 vs 94.3 ± 4.1, p = 0.0001; HD of 6.4 ± 4.2 mm). The SDCs of CNN_2 and CNN_3 were not significantly different (SDC of 94.0 ± 5.3 vs 94.3 ± 4.1, p = 0.54; HD of 10.3 ± 6.2 mm). In images with consolidations, the SDC of CNN_1 was not significantly different from that of CNN_2 (SDC of 89.0 ± 7.1 vs 90.2 ± 9.4, p = 0.53; HD of 9.8 ± 7.9 mm). The SDC of CNN_3 was significantly higher than that of CNN_1 (SDC of 89.0 ± 7.1 vs 94.3 ± 3.7, p = 0.0013; HD of 7.2 ± 4.7 mm) and also significantly higher than that of CNN_2 (SDC of 90.2 ± 9.4 vs 94.3 ± 3.7, p = 0.0146; HD of 6.7 ± 5.8 mm). Exemplary segmentation results are shown in Figure 3. Notably, one of the 38 MR images with consolidations resulted in an inadequate segmentation by the proposed model (see Figure 4).

Discussion

The proposed training procedures (AGC and a BA strategy) increase segmentation accuracy in the presence of visible lung parenchyma pathologies.

Our study has some important limitations. First, vessel segmentation was not implemented due to the low spatial resolution of the MR images.

Second, the CNN was trained and tested only on data acquired with FLASH and bSSFP at 1.5 T and 3 T; data from other sequences, from low-field MRI, or from neonatal applications might be challenging for the presented CNN.

Third, inadequate segmentations occur when lung voxels closely resemble the voxels of the diaphragm (Figure 4). Therefore, despite the high rate of accurate segmentations, review by a human expert is still essential, especially in the clinical context.

Conclusion

The accuracy of lung segmentation in 2D datasets can be improved by balanced augmentation and artificially-generated consolidations. The proposed method can be used to create robust, automated lung MRI analysis pipelines, which could be integrated into the clinical workflow in the near future.

Acknowledgements

This work was funded by the German Center for Lung Research (DZL).

References

1. Voskrebenzev, A. et al. Feasibility of quantitative regional ventilation and perfusion mapping with phase‐resolved functional lung (PREFUL) MRI in healthy volunteers and COPD, CTEPH, and CF patients. Magn. Reson. Med. 79, 2306–2314 (2018).

2. Hansell, D. M. et al. Fleischner Society: Glossary of terms for thoracic imaging. Radiology 246, 697–722 (2008).

3. Thambawita, V. et al. SinGAN-Seg: Synthetic Training Data Generation for Medical Image Segmentation. (2021).

4. Gao, J. Data Augmentation in Solving Data Imbalance Problems. (2020).

5. Avants, B. B. et al. A reproducible evaluation of ANTs similarity metric performance in brain image registration. Neuroimage 54, 2033–2044 (2011).

6. Ronneberger, O., Fischer, P. & Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. in Medical Image Computing and Computer-Assisted Intervention (MICCAI 2015) 234–241 (2015). doi:10.1007/978-3-319-24574-4_28.

Figures