1970

EPI Nyquist Ghost Artifact Correction for Brain Diffusion Weighted Imaging (DWI) using Deep Learning

1Medical Image Processing Research Group (MIPRG), Department of Electrical and Computer Engineering, COMSATS University, Islamabad, Pakistan
2Service of Radiology, Geneva University Hospitals and Faculty of Medicine, Hospital University of Geneva and University of Geneva, Geneva, Switzerland
3OncoRay – National Center for Radiation Research in Oncology, Faculty of Medicine and University Hospital Carl Gustav Carus, Technische Universität Dresden, Helmholtz-Zentrum Dresden-Rossendorf, Dresden, Germany
4German Cancer Research Center (DKFZ), Heidelberg, Germany, Dresden, Germany

Synopsis

Echo-planar imaging (EPI) suffers from Nyquist ghost (i.e., N/2 ghost) artifacts caused by imperfect system gradients and timing delays. Many conventional methods have been proposed in the literature to remove N/2 artifacts in diffusion-weighted imaging (DWI), but they often produce erroneous results. This paper presents a deep learning approach that eliminates the k-space phase error in order to remove Nyquist ghost artifacts in DWI. Experimental results show that the proposed method successfully removes the ghost artifacts while improving SNR and reconstruction quality.

Introduction

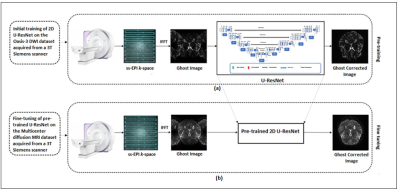

Nyquist ghost artifacts in EPI originate from distortions in the main magnetic field (B0) caused by eddy currents, which produce a phase mismatch between the even and odd echoes [1]. Because of this phase disparity, multiple copies of the image overlap with the original image, which causes artifacts and degrades the SNR, making disease diagnosis difficult [2]. This paper proposes a 2D U-Net with residual connections (2D U-ResNet) for the correction of ghost artifacts in DW images. The proposed 2D U-ResNet is first trained on a unidirectional DWI dataset and later fine-tuned on multidirectional DWI data. The results of the proposed 2D U-ResNet are compared with a conventional U-Net [1] and the reference-less ghost/object minimization (G/O) method [3].

Method

In the first phase of our research, the proposed 2D U-ResNet (shown in Figure 1), which has 34 dense convolution layers, is trained on the Oasis-3 DWI dataset [4] with 14 b-values ranging between 0 and 800 s/mm², acquired on a 3T Siemens scanner with an ss-EPI sequence, TR=14.5 msec, TE=0.11 msec, and flip angle=90°. Here, the 2D U-ResNet is trained with a set of 2016 images (from three patients). This set was divided into training data of 1814 images and validation data of 202 images to optimize the losses during network training. The ghost images (given as input to the 2D U-ResNet) are generated by simulating a phase error between the even and odd lines of k-space in MATLAB 2018 (The MathWorks, Natick, MA) [3]. The ghost-free data [4, 5] was used as the ground truth (label) in our experiments. The network weights are optimized with the RMSProp optimizer and a learning rate of 1×10⁻³, and ReLU is used as the activation function. Training is performed with a batch size of 5 for 150 epochs. These values were chosen empirically after experiments over a range of batch sizes and numbers of epochs.

In the second phase of this research, the pre-trained 2D U-ResNet is used to remove ghost artifacts from the multicenter DWI dataset [5] via end-to-end fine-tuning with 8372 images. Fine-tuning starts from the pre-trained weights obtained in the first phase. The fine-tuning set was divided into training data of 7533 images and validation data of 836 images to optimize the losses during network training. This dataset includes DW images with four different b-values, i.e., 0, 1000, 2000, and 3000 s/mm², with 30 directions. The data was acquired on a 3T Siemens scanner with the following parameters: sequence=EPI, TR=5.4 sec, TE=71 msec, and flip angle=90° [5]. In this phase of the experiments, the RMSProp optimizer was used with the same parameters as in the initial training, except that the number of epochs was 50 and the learning rate was 1×10⁻⁴. The 2D U-ResNet is trained with Python 3.7.12 and Keras 2.6.0, using TensorFlow 2.6.0 as the backend.
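The ghost simulation used to create the network inputs can be illustrated with a minimal sketch. The authors performed this step in MATLAB; the version below uses Python with NumPy instead. It assumes the simplest possible error model, a constant phase difference between even and odd phase-encode lines. The function name `simulate_nyquist_ghost`, the `phase_offset` value, and the toy phantom are hypothetical and are not taken from the abstract.

```python
import numpy as np


def simulate_nyquist_ghost(image, phase_offset=0.4):
    """Corrupt a ghost-free 2D image with an even/odd k-space phase error.

    Assumption: rows (axis 0) are the phase-encode lines, and the error is a
    constant phase of +phase_offset/2 on even lines and -phase_offset/2 on
    odd lines (radians). Real EPI errors usually also vary along readout.
    """
    # Centered 2D FFT: image -> k-space.
    kspace = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(image)))

    # Alternating sign per phase-encode line: +1 for even rows, -1 for odd rows.
    line_sign = np.where(np.arange(kspace.shape[0]) % 2 == 0, 1.0, -1.0)
    phase_error = np.exp(1j * line_sign * phase_offset / 2.0)

    # Apply the same phase factor to every sample of a given line.
    corrupted = kspace * phase_error[:, np.newaxis]

    # Centered inverse FFT: corrupted k-space -> complex ghosted image.
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(corrupted)))


# Toy phantom: a bright rectangle on a dark background.
phantom = np.zeros((64, 64))
phantom[24:40, 20:44] = 1.0

ghosted = simulate_nyquist_ghost(phantom, phase_offset=0.4)
ghost_magnitude = np.abs(ghosted)
```

Under this model the alternating phase acts as a modulation at half the sampling frequency, so the magnitude image contains the original object scaled by cos(phase_offset/2) plus a copy shifted by half the field of view along the phase-encode axis and scaled by sin(phase_offset/2). Pairs of `ghost_magnitude` and `phantom` would play the roles of network input and label.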

Results and Discussion

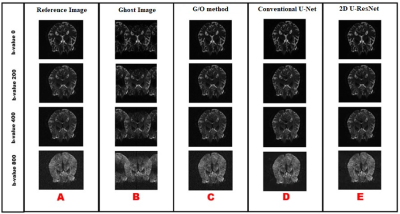

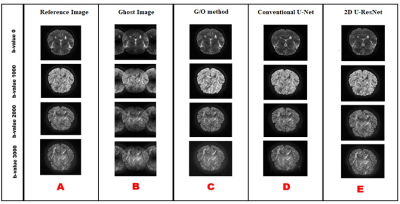

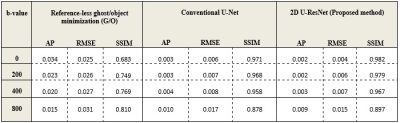

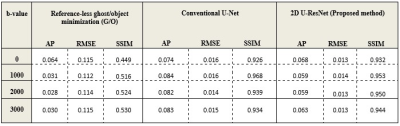
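The comparisons in this section are reported as relative percentage differences in mean error and similarity metrics. The abstract does not state the exact formulas used, so the following is only a sketch under assumptions: RMSE is taken as the usual root-mean-square difference between a reference image and a reconstruction, and "X% lower" is read as the signed relative change with respect to the baseline method. The helper names `rmse` and `percent_change` are hypothetical.

```python
import numpy as np


def rmse(reference, estimate):
    """Root-mean-square error between a reference image and a reconstruction."""
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    return float(np.sqrt(np.mean((reference - estimate) ** 2)))


def percent_change(proposed, baseline):
    """Signed relative change of `proposed` with respect to `baseline`, in percent.

    A negative value means the proposed method scores lower than the baseline.
    """
    return 100.0 * (proposed - baseline) / baseline


# Toy values only, not results from the abstract.
reference = np.zeros((4, 4))
baseline_error = rmse(reference, np.full((4, 4), 2.0))   # every pixel off by 2.0
proposed_error = rmse(reference, np.full((4, 4), 0.5))   # every pixel off by 0.5
change = percent_change(proposed_error, baseline_error)  # negative: lower error
```

With these definitions, a lower mean RMSE for the proposed method appears as a negative percentage, while a higher mean SSIM would appear as a positive one.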

The experiments are performed on the Oasis-3 [4] and Multicenter [5] DWI datasets. The pre-trained network (shown in Figure 1(a)) is tested on four patients with a total of 2688 images, while the fine-tuned network (shown in Figure 1(b)) is tested on three patients with a total of 25116 images. The results (shown in Figures 2 and 4) of the proposed 2D U-ResNet are compared with a conventional U-Net [1] and the reference-less ghost/object minimization method [3]. Table 1 shows that, for the human brain DWI Oasis-3 data, the proposed 2D U-ResNet provides 77.60% lower mean AP, 71.64% lower mean RMSE, and 27.73% higher mean SSIM than the reference-less ghost/object minimization method [3]. On the same data, it provides 25.4% lower mean AP, 17.94% lower mean RMSE, and 1.339% higher mean SSIM than the conventional U-Net [1]. Table 2 shows that, for the human brain multicenter diffusion MRI data, the 2D U-ResNet provides 77.61% lower mean AP, 88.36% lower mean RMSE, and 90.26% higher mean SSIM than the reference-less ghost/object minimization method [3]. On the same data, it provides 22.49% lower mean AP, 12.93% lower mean RMSE, and 1.016% higher mean SSIM than the conventional U-Net [1].

Conclusion

A new CNN-based method for ghost-free DWI reconstruction is proposed, and its results are compared with a conventional U-Net [1] and the reference-less ghost/object minimization method [3]. The results show that the 2D U-ResNet performs better than both of these methods on the Oasis-3 and Multicenter datasets. The proposed method not only reconstructs the ghost-free image but also improves the SNR and image quality.

Acknowledgements

The authors thank Central XNAT and Figshare for providing open public access to the DWI datasets.

References

[1]. X. Chen, Y. Zhang, H. She, and Y. P. Du, “Reference-free correction for the nyquist ghost in echoplanar imaging using deep learning,” ACM Int. Conf. Proceeding Ser., pp. 49–53, 2019, doi: 10.1145/3375923.3375927.

[2]. J. Lee, Y. Han, J. K. Ryu, J. Y. Park, and J. C. Ye, “k-Space deep learning for reference-free EPI ghost correction,” Magn. Reson. Med., vol. 82, no. 6, pp. 2299–2313, 2019, doi: 10.1002/mrm.27896.

[3]. J. A. McKay, S. Moeller, L. Zhang, E. J. Auerbach, M. T. Nelson, and P. J. Bolan, “Nyquist ghost correction of breast diffusion weighted imaging using referenceless methods,” Magn. Reson. Med., vol. 81, no. 4, pp. 2624–2631, 2019, doi: 10.1002/mrm.27563.

[4]. https://central.xnat.org

[5]. https://figshare.com/articles/dataset/Multicenter_dataset
