1968

A Mask Guided Attention Generative Adversarial Network for Contrast-enhanced T1-weight MR Synthesis

MR Clinical Science, Philips Healthcare, Suzhou, China

Synopsis

Image synthesis methods based on deep learning have recently achieved success in reducing the dosage of gadolinium-based contrast agents (GBCAs). However, these methods cannot focus on the region of interest, which limits the realism of the synthesized images. To address this issue, we propose a mask guided attention generative adversarial network (MGA-GAN) that synthesizes contrast-enhanced T1-weighted images from multi-channel inputs. Qualitative and quantitative results indicate that the proposed MGA-GAN synthesizes images with better detail in brainstem glioma regions than state-of-the-art methods.

Introduction

Gadolinium-based contrast agents (GBCAs) are widely used in the MRI diagnosis of brainstem gliomas. However, they may cause nephrogenic systemic fibrosis [1]. Reducing the GBCA dose is particularly relevant for patients who need repeated contrast administration. Gadolinium deposition has also raised concern [2]. It is therefore essential to reduce the dosage while preserving the contrast information. Recently, several methods based on deep learning have been proposed to synthesize contrast-enhanced T1-weighted (ceT1w) images from multi-channel inputs [3,4].

Methods

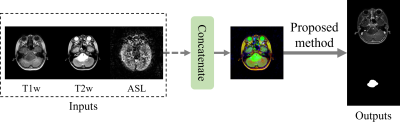

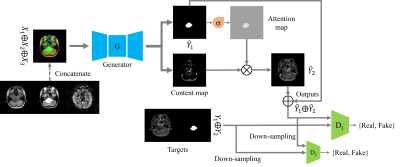

Thirty patients were included in this retrospective study. For each patient, pre-contrast T1-weighted (T1w), T2-weighted (T2w), arterial spin labeling (ASL) and ceT1w images were collected. All images were aligned, and rigid registration was performed to reduce alignment variations. Two experienced radiologists annotated the segmentation mask of the brainstem glioma lesion. Data from 24 patients were randomly selected for training and the remaining 6 were used for testing. As shown in Fig. 1, axial slices of the three modalities are concatenated into a three-channel input, and the ceT1w image concatenated with the brainstem glioma mask serves as the output.

The proposed MGA-GAN, shown in Fig. 2, learns a mapping from pre-contrast T1w, T2w and ASL to ceT1w and mask. It consists of a mask guided attention generator and multi-scale discriminators. The generator is an encoder-decoder architecture based on Wang et al. [5], composed of a down-sampling path, 10 residual blocks and an up-sampling path. It takes the concatenation of the modalities (3 channels) as input and outputs a content map (3 channels) and an attention map (3 channels). The attention map is generated by adding a sigmoid layer after the synthesized mask; it is continuous in [0,1] and reflects the discriminative region. Finally, the ceT1w image is synthesized by multiplying the attention map with the content map, $$$\hat{Y}_2 = A_{map} \otimes C_{map}$$$, where $$$A_{map}$$$ is the attention map and $$$C_{map}$$$ is the content map. The multi-scale discriminators distinguish synthesized images from target images at a high resolution of 512x512 and a low resolution of 256x256.
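
The attention step described above can be illustrated with a minimal sketch. It assumes the multiplication is element-wise and works on a toy 2x2 single-channel example; the names `sigmoid`, `attention_gate` and `mask_logits` are hypothetical, and this is not the authors' implementation, which operates on the generator's output maps.

```python
import math


def sigmoid(x):
    """Logistic function mapping any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def attention_gate(content_map, mask_logits):
    """Multiply a content map by sigmoid(mask_logits), element by element.

    Both arguments are 2-D lists (rows of floats) with identical shape.
    Element-wise multiplication is an assumption of this sketch.
    """
    gated = []
    for content_row, logit_row in zip(content_map, mask_logits):
        gated.append([c * sigmoid(m) for c, m in zip(content_row, logit_row)])
    return gated


# Toy example: large positive logits stand in for "lesion" pixels.
content = [[0.8, 0.6], [0.4, 0.2]]
logits = [[10.0, -10.0], [0.0, 10.0]]
synth = attention_gate(content, logits)
```

Pixels whose mask logit is strongly positive keep almost all of their content value, a logit of zero halves it, and strongly negative logits suppress it toward zero.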

In addition to the feature matching loss $$$L_{FM}$$$ [5] between the synthesized images and target images, we used an adversarial loss $$$L_{ADV}$$$ [6] and a perceptual loss $$$L_{PER}$$$ [7] from the discriminator to encourage more realistic outputs. The overall loss is defined as $$$L = L_{ADV} + \lambda_1 L_{FM} + \lambda_2 L_{PER}$$$, where $$$\lambda_1$$$ and $$$\lambda_2$$$ are both set to 10.
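
As a worked illustration of this weighting, the sketch below combines three already-computed scalar loss terms with both weights set to 10. The function name `total_loss` and the numeric inputs are hypothetical, chosen only to show how the weights scale each term; how each term is computed is not covered here.

```python
def total_loss(l_adv, l_fm, l_per, lambda_fm=10.0, lambda_per=10.0):
    """Weighted sum of adversarial, feature matching and perceptual losses.

    The default weights of 10 follow the values stated in the text.
    """
    return l_adv + lambda_fm * l_fm + lambda_per * l_per


# Hypothetical per-batch loss values, for illustration only.
example = total_loss(l_adv=0.7, l_fm=0.05, l_per=0.02)
```

With these made-up inputs the feature matching term contributes 0.5 and the perceptual term 0.2, so a weight of 10 lets terms that are numerically much smaller than the adversarial loss still influence the total.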

Results

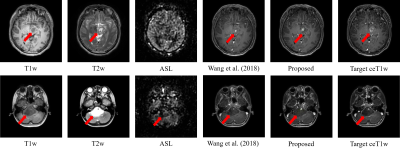
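
The quantitative comparison in this section reports PSNR and SSIM between synthesized and target images. As a reference for what PSNR measures, here is a minimal sketch that assumes intensities scaled to [0, 1] and the conventional decibel definition; the function name `psnr`, the `data_range` parameter and the toy images are hypothetical. SSIM relies on local windowed statistics and is not sketched.

```python
import math


def psnr(reference, test, data_range=1.0):
    """Peak signal-to-noise ratio (dB) between two equally sized 2-D images."""
    squared_error = 0.0
    count = 0
    for ref_row, test_row in zip(reference, test):
        for r, t in zip(ref_row, test_row):
            squared_error += (r - t) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(data_range ** 2 / mse)


# Toy 2x2 images with intensities in [0, 1], for illustration only.
target = [[0.0, 0.5], [1.0, 0.5]]
synthesized = [[0.1, 0.5], [0.9, 0.5]]
value = psnr(target, synthesized)
```

Here the mean squared error is 0.005, giving a PSNR of about 23 dB; a smaller error between the two images yields a higher value.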

For quantitative evaluation, we use two metrics to compare the synthesized ceT1w images with the target ceT1w images: the structural similarity index measure (SSIM) and the peak signal-to-noise ratio (PSNR). The synthesized ceT1w images achieve a PSNR of 25.21±2.29 and an SSIM of 0.84±0.05 with respect to the target ceT1w images. These values are slightly higher than those of Wang et al. [5] (PSNR of 25.09±0.85, SSIM of 0.83±0.06). For qualitative evaluation, we compare the proposed method with the baseline model, i.e., Wang et al. As shown in Fig. 3, the proposed method synthesizes more realistic ceT1w images and enhances the brainstem glioma region (indicated by red arrows).

Discussion and Conclusion

The proposed MGA-GAN passes the synthesized mask through a sigmoid layer to generate an attention map. The results above demonstrate that the attention map helps synthesize ceT1w images with a discriminative brainstem glioma region. However, the quality of the synthesized ceT1w images still needs improvement. In this work, we have demonstrated the ability of a novel MGA-GAN to synthesize ceT1w MR images in brainstem glioma cases. Compared with the state-of-the-art pix2pixHD method, the MGA-GAN synthesizes more realistic ceT1w MR images.

Acknowledgements

No.

References

1. Khawaja, A.Z., et al., Revisiting the risks of MRI with Gadolinium based contrast agents—review of literature and guidelines. 2015. 6(5): p. 553-558.

2. Boyd, A.S., J.A. Zic, and J.L. Abraham, Gadolinium deposition in nephrogenic fibrosing dermopathy. Journal of the American Academy of Dermatology, 2007. 56(1): p. 27-30.

3. Gong, E., et al., Deep learning enables reduced gadolinium dose for contrast-enhanced brain MRI. J Magn Reson Imaging, 2018. 48(2): p. 330-340.

4. Chen, C., et al., Synthesizing MR Image Contrast Enhancement Using 3D High-resolution ConvNets. arXiv:2104.01592, 2021.

5. Wang, T.-C., et al. High-resolution image synthesis and semantic manipulation with conditional gans. in Proceedings of the IEEE conference on computer vision and pattern recognition. 2018.

6. Isola, P., et al. Image-to-image translation with conditional adversarial networks. in Proceedings of the IEEE conference on computer vision and pattern recognition. 2017.

7. Johnson, J., A. Alahi, and L. Fei-Fei. Perceptual losses for real-time style transfer and super-resolution. in European conference on computer vision. 2016. Springer.
