1963

Learning motion correction from YouTube for real-time MRI reconstruction with AUTOMAP

¹ACRF Image X Institute, Faculty of Medicine and Health, The University of Sydney, Sydney, Australia, ²A. A. Martinos Center for Biomedical Imaging, Charlestown, MA, United States, ³Dipartimento di Elettronica, Informazione e Bioingegneria, Politecnico di Milano, Milan, Italy, ⁴Department of Physics, Harvard University, Cambridge, MA, United States, ⁵Harvard Medical School, Boston, MA, United States

Synopsis

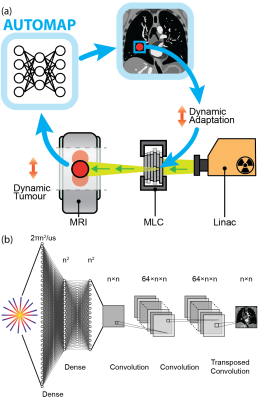

Today’s MRI does not have the spatio-temporal resolution to image the anatomy of a patient in real-time. Novel solutions are therefore required in MRI-guided radiotherapy to enable real-time adaptation of the treatment beam, so that it optimally targets the cancer and spares surrounding healthy tissue. Neural networks could solve this problem; however, there is a dearth of the large training datasets needed to accurately model patient motion. Here, we use the YouTube-8M database to train the AUTOMAP network. We use a virtual dynamic lung tumour phantom to show that the generalized motion properties learned from YouTube lead to improved target tracking accuracy.

Introduction

Real-time tumour targeting with MRI-guidance has recently become possible with the advent of the MRI-Linac, which combines the unrivalled image quality of MRI with a linear accelerator (Linac) for x-ray radiation therapy.1 However, the relatively low spatio-temporal resolution of real-time MRI reduces the accuracy with which radiation beams can be adapted to tumour motion.2 New low-latency imaging techniques are thus essential to improving the quality of MRI-Linac treatments.3,4

Recently, neural networks have enabled fast reconstruction of undersampled data with accuracy equivalent to slower, iterative approaches such as compressed sensing (CS).5 While neural networks show promise for real-time reconstruction, a scarcity of patient data is a barrier to training, especially for acquisitions with nonrigid motion, where a static ground truth cannot be acquired.6

Here, we leverage the YouTube-8M database to synthesize radial k-space data acquired in the presence of generic motion but with a known ground truth. We use this dataset to train AUTOMAP,7 a deep-learning framework, to perform motion robust image reconstruction. We validate our motion-trained AUTOMAP model using a virtual lung cancer phantom by simulating MRI-Linac image acquisition and target tracking (see Fig. 1(a)).8,9 We show that the YouTube-trained network corrects for motion, resulting in improved target tracking accuracy over alternative approaches.

Methods

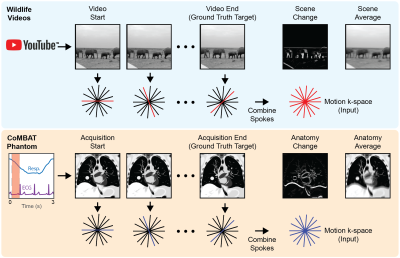

Model architecture and training: We implemented AUTOMAP in Keras with the inputs sized to reconstruct 128×128 images from 4× undersampled, single-coil radial data (architecture summarized in Fig. 1(b)).7 AUTOMAP was trained to minimize the root mean square error between outputs and target images for 300 epochs on a 48 GB GPU with the Adam optimizer and a learning rate of 0.00001. Two models were trained: (1) a conventional, static-trained “AUTOMAP” model and (2) a video-trained “AUTOMAP-motion” model.

Training and validation data: Training, validation and test k-space data were retrospectively simulated for a 2D golden-angle radial acquisition from either static images or motion videos.10 For static training, 20,000 natural images were taken from ImageNet. For motion training, 7,856 sequences were extracted from 767 videos in the ‘wildlife’ class of the YouTube-8M database.11 The extraction process ensured that the video sequences contained continuous, smooth motion. K-space lines were combined across video sequences to create motion-encoded k-space data with a known ground truth (see Fig. 2).
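The combination of k-space lines across video frames described above can be illustrated with a minimal sketch. It is not the authors' simulation code: the function names (`radial_spoke_coords`, `sample_spoke`, `motion_encoded_kspace`) are hypothetical, the even assignment of spokes to frames in acquisition order is an assumption, and a slow direct non-uniform DFT stands in for whatever gridding or NUFFT routine was actually used. The golden angle is taken as 180°/φ ≈ 111.246°, and the last frame is returned as the ground truth.

```python
import numpy as np

# Golden-angle increment between successive spokes: pi / golden ratio (~111.246 degrees).
GOLDEN_ANGLE = np.pi / ((1 + np.sqrt(5)) / 2)


def radial_spoke_coords(n_spokes, n_readout):
    """k-space coordinates (cycles/pixel) for golden-angle spokes through the centre."""
    angles = np.arange(n_spokes) * GOLDEN_ANGLE
    radius = (np.arange(n_readout) - n_readout // 2) / n_readout  # in [-0.5, 0.5)
    kx = radius[None, :] * np.cos(angles)[:, None]
    ky = radius[None, :] * np.sin(angles)[:, None]
    return kx, ky


def sample_spoke(image, kx, ky):
    """Direct (slow) non-uniform DFT of a square image along one spoke."""
    n = image.shape[0]
    coords = np.arange(n) - n // 2
    ex = np.exp(-2j * np.pi * np.outer(kx, coords))  # (n_readout, n), x phase terms
    ey = np.exp(-2j * np.pi * np.outer(ky, coords))  # (n_readout, n), y phase terms
    return np.einsum("ry,yx,rx->r", ey, image, ex)


def motion_encoded_kspace(frames, n_spokes):
    """Sample each spoke from the frame 'visible' when that spoke is acquired.

    Assumes n_spokes >= number of frames, so every frame contributes
    and the final spokes see the final frame.
    """
    frames = np.asarray(frames, dtype=float)
    n_frames, n, _ = frames.shape
    kx, ky = radial_spoke_coords(n_spokes, n)
    # Assumption: spokes are spread evenly over the frames in acquisition order.
    frame_of_spoke = (np.arange(n_spokes) * n_frames) // n_spokes
    kspace = np.stack(
        [sample_spoke(frames[f], kx[s], ky[s]) for s, f in enumerate(frame_of_spoke)]
    )
    return kspace, frames[-1]  # ground truth: last frame of the acquisition
```

Calling `motion_encoded_kspace(frames, n_spokes)` on an array of shape `(n_frames, N, N)` returns complex spoke data of shape `(n_spokes, N)` and the target image. For realistic image sizes the direct DFT would normally be replaced by a NUFFT library.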

As a validation tool, a time-series of 2D lung cancer images was generated using the digital CT/MRI breathing XCAT (CoMBAT) phantom for a balanced steady-state free precession (bSSFP) sequence with TR/TE = 10/5 ms. Images were used to synthesize motion-encoded k-space data (see Fig. 2).

As a ground truth image for motion training and validation we selected the last frame of the acquisition. We reason that, for real-time tumour tracking on an MRI-Linac, images of the most recent anatomical state are desired for beam adaptation.

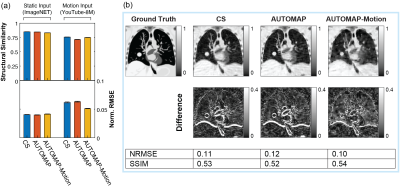

Image reconstruction: AUTOMAP reconstructions were performed via inference with trained models. Data were additionally reconstructed with conventional L1-wavelet regularized CS reconstruction as a reference standard.12 Normalized root-mean-square error (NRMSE) and structural similarity (SSIM) metrics were calculated to evaluate reconstruction quality.
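For reference, a minimal sketch of an NRMSE calculation follows. The abstract does not state which normalization was used, so normalizing the RMSE by the intensity range of the reference image is an assumption here (normalizing by the mean or the Euclidean norm of the reference are other common conventions), and the function name `nrmse` is hypothetical. SSIM is more involved because it is computed over local windows and is usually taken from an existing library rather than reimplemented.

```python
import numpy as np


def nrmse(reference, reconstruction):
    """RMSE between two images, normalized by the reference intensity range.

    The range normalization is an assumed convention, not taken from the source.
    """
    reference = np.asarray(reference, dtype=float)
    reconstruction = np.asarray(reconstruction, dtype=float)
    rmse = np.sqrt(np.mean((reconstruction - reference) ** 2))
    return rmse / (reference.max() - reference.min())
```

A value of 0 indicates a perfect match, and lower values indicate a reconstruction closer to the ground truth.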

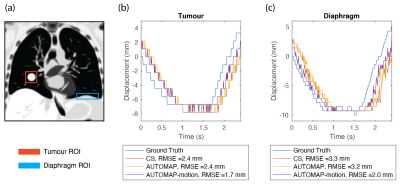

Target tracking: Regions of interest were defined for the tumour and diaphragm in the virtual phantom, and a correlation-based template matching algorithm was used to find the closest matching location in each of the 240 images reconstructed across one breathing cycle.
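One way such correlation-based template matching might look is sketched below. This is an illustrative exhaustive search using zero-mean normalized cross-correlation, which is an assumed choice of correlation measure; the abstract does not specify the exact algorithm, and the names `match_template` and `track` are hypothetical.

```python
import numpy as np


def match_template(image, template):
    """Return ((row, col), score) of the best-matching top-left corner.

    Uses zero-mean normalized cross-correlation over every valid position.
    """
    image = np.asarray(image, dtype=float)
    template = np.asarray(template, dtype=float)
    th, tw = template.shape
    t = template - template.mean()
    t_norm = np.sqrt((t ** 2).sum())
    best_score, best_pos = -np.inf, (0, 0)
    for r in range(image.shape[0] - th + 1):
        for c in range(image.shape[1] - tw + 1):
            patch = image[r:r + th, c:c + tw]
            p = patch - patch.mean()
            denom = t_norm * np.sqrt((p ** 2).sum())
            if denom == 0:
                continue  # flat patch or template: correlation is undefined
            score = (p * t).sum() / denom
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score


def track(frames, template):
    """Locate the template in every reconstructed frame of a time series."""
    return [match_template(frame, template)[0] for frame in frames]
```

Running `track` on the reconstructed frames with a tumour or diaphragm template yields one position per frame, which can then be compared with the known phantom positions to obtain a tracking error.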

Results

CS and AUTOMAP reconstruction accuracy tests on static data from ImageNet and motion data from YouTube show that reconstruction quality is higher for static input data than for motion input data (Fig. 3(a)). The best performing technique on motion input data was the AUTOMAP-motion model, which had a mean NRMSE 21% lower than the CS reconstructions. Reconstruction with the AUTOMAP models took 7±1 ms, while CS took 235±8 ms.

Results from the lung cancer phantom show the AUTOMAP-motion model outperforming conventional AUTOMAP and CS reconstructions (Fig. 3(b)). Inspecting difference images, we see that the output of the motion-trained AUTOMAP model has the least discrepancy with the ground truth (anatomy at acquisition end) around the diaphragm and tumour.

Target tracking results based on AUTOMAP, AUTOMAP-motion and CS reconstructed images are shown in Fig. 4. Template matching on motion-trained AUTOMAP reconstructions gives an RMSE relative to the ground truth that is 0.7 mm smaller for the tumour and 1.3 mm smaller for the diaphragm than template matching on CS reconstructions.

Discussion

Our experiments show that AUTOMAP can learn general properties of motion from YouTube videos. This motion training leads to higher tracking accuracy in a virtual lung cancer phantom than is achieved with CS or conventional AUTOMAP.

While our study has focused on lung cancer, we believe our results are extensible to other high-motion sites such as the liver and prostate, where tumour movement could be optimally managed by real-time adaptive radiotherapy.13

There is significant scope to improve on tracking performance through utilization of more in-domain training data via synthetically generated motion applied to lung images. Temporal k-space filtering,14 optical flow techniques15 and neural networks tailored to radial reconstruction could also improve performance.16

Conclusion

In conclusion, we have developed a generalizable approach to training neural networks such as AUTOMAP to perform motion-corrected reconstructions. These results will guide future development of deep-learning based methods for real-time motion management in MRI-guided radiotherapy.

Acknowledgements

D.E.J.W. is supported by a Cancer Institute of NSW Early Career Fellowship 2019/ECF1015. We acknowledge funding from the Australian National Health and Medical Research Council (APP1132471). The information, data, or work presented herein was funded in part by the Advanced Research Projects Agency-Energy (ARPA-E), U.S. Department of Energy, under Award Number DE-AR0000823. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States Government or any agency thereof.

References

1. Liney GP, Whelan B, Oborn B, Barton M, Keall P. MRI-Linear Accelerator Radiotherapy Systems. Clin Oncol. 2018; 30: 686-691.

2. Fast M, van de Schoot A, van de Lindt T, Carbaat C, van der Heide U, Sonke JJ. Tumor Trailing for Liver SBRT on the MR-Linac. Int J Radiat Oncol Biol Phys. 2019; 103: 468-478.

3. Colvill E, Booth JT, O’Brien RT, et al. Multileaf Collimator Tracking Improves Dose Delivery for Prostate Cancer Radiation Therapy: Results of the First Clinical Trial. Int J Radiat Oncol Biol Phys. 2015; 92: 1141-1147.

4. Booth J, Caillet V, Briggs A, et al. MLC tracking for lung SABR is feasible, efficient and delivers high-precision target dose and lower normal tissue dose. Radiother Oncol. 2021; 155: 131-137.

5. Chandra SS, Lorenzana MB, Liu X, Liu S, Bollmann S, Crozier S. Deep learning in magnetic resonance image reconstruction. J Med Imaging Radiat Oncol. 2021; 65: 564-577.

6. Haskell MW, Cauley SF, Bilgic B, et al. Network Accelerated Motion Estimation and Reduction (NAMER): Convolutional neural network guided retrospective motion correction using a separable motion model. Magn Reson Med. 2019; 82: 1452-1461.

7. Zhu B, Liu JZ, Rosen BR, Rosen MS. Image reconstruction by domain transform manifold learning. Nature. 2018; 555: 487-492.

8. Paganelli C, Summers P, Gianoli C, Bellomi M, Baroni G, Riboldi M. A tool for validating MRI-guided strategies: a digital breathing CT/MRI phantom of the abdominal site. Med Biol Eng Comput. 2017; 55: 2001-2014.

9. Segars WP, Sturgeon G, Mendonca S, Grimes J, Tsui BMW. 4D XCAT phantom for multimodality imaging research. Med Phys. 2010; 37: 4902-4915.

10. Guerquin-Kern M, Lejeune L, Pruessmann KP, Unser M. Realistic analytical phantoms for parallel magnetic resonance imaging. IEEE Trans Med Imaging. 2012; 31: 626-636.

11. Abu-El-Haija S, Kothari N, Lee J, et al. YouTube-8M: A Large-Scale Video Classification Benchmark. arXiv. 2016: 1609.08675.

12. BART Toolbox for Computational Magnetic Resonance Imaging. DOI: 10.5281/zenodo.592960.

13. Bertholet J, Knopf A, Eiben B, et al. Real-time intrafraction motion monitoring in external beam radiotherapy. Phys Med Biol. 2019; 64.

14. Bruijnen T, Stemkens B, Lagendijk JJW, Van Den Berg CAT, Tijssen RHN. Multiresolution radial MRI to reduce IDLE time in pre-beam imaging on an MR-Linac (MR-RIDDLE). Phys Med Biol. 2019; 64: 055011.

15. Terpstra ML, Maspero M, D’Agata F, et al. Deep learning-based image reconstruction and motion estimation from undersampled radial k-space for real-time MRI-guided radiotherapy. Phys Med Biol. 2020; 65: 155015.

16. Yoo J, Jin KH, Gupta H, Yerly J, Stuber M, Unser M. Time-Dependent Deep Image Prior for Dynamic MRI. IEEE Trans Med Imaging. 2021: 10.1109/TMI.2021.3084288.

Figures