1959

Self-Assisted Priors with Cascaded Refinement Network for Reduction of Rigid Motion Artifacts in Brain MRI

¹Department of Electrical and Electronic Engineering, College of Engineering, Yonsei University, Seoul, Republic of Korea
²GE Healthcare, Seoul, Republic of Korea
³Department of Radiology, Seoul National University Hospital, Seoul, Republic of Korea

Synopsis

MRI is sensitive to motion caused by patient movement, which can severely degrade image quality. We develop an efficient retrospective deep learning method, stacked U-Nets with self-assisted priors, to reduce rigid motion artifacts in MRI. The proposed method exploits additional knowledge priors derived from the corrupted images themselves, without requiring data from an additional contrast. We further design a refinement stage of stacked U-Nets that helps preserve spatial image details and hence improves pixel-to-pixel dependency. The experimental results demonstrate the feasibility of self-assisted priors, which require no additional scans.

Introduction

Magnetic Resonance Imaging (MRI) is sensitive to motion caused by patient movement because of the relatively long time required to acquire k-space data¹. Motion artifacts manifest as ghosting, ringing, and blurring and can severely degrade image quality. As a result, accurately interpreting images from patients who moved during the scan becomes challenging for radiologists². Physiological and physical motion are the main sources of MRI motion artifacts³. Both involuntary motion and sudden conscious movement caused by discomfort are often unavoidable during data acquisition. Motion artifact correction is therefore of growing interest to the MRI community.

Methods

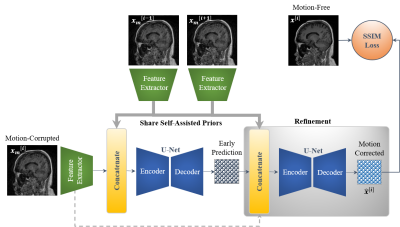

[Proposed Network]

In this work, we designed efficient stacked U-Nets with self-assisted priors to address motion artifacts in MRI. The aim is to exploit additional knowledge priors derived from the corrupted images themselves, without the need for additional contrast data. This is achieved by supplying each corrupted input image with structural details from contiguous slices of the same distorted subject. More specifically, the network takes as input the concatenation of multiple images (the corrupted image and its adjacent slices) and produces a single corrected image. In this way, the network can recover some missing structural details with the help of information present in the adjacent slices, especially for 3D imaging. Furthermore, we design a refinement stage by stacking U-Nets, which produces improved motion-corrected images with better preservation of image details and contrast. Compared with previous works⁴,⁵, the proposed self-assisted priors approach has two advantages: (i) it eliminates the need for additional MRI scans to serve as image priors, and (ii) it reduces computational cost because it requires no further image pre-processing such as image registration and alignment. An overview of the proposed motion correction network is illustrated in Figure 1.
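
The multi-input construction described above can be sketched as follows. This is a minimal NumPy illustration, not the authors' code: the function name `self_assisted_input`, the number of neighboring slices taken on each side (`n_adjacent`), and clamping at the volume edges are all assumptions. The output is a channel stack that a 2D network such as a U-Net could take as input, with the central channel being the slice to be corrected.

```python
import numpy as np


def self_assisted_input(volume, index, n_adjacent=1):
    """Stack a corrupted slice with its adjacent slices along a channel axis.

    volume: array of shape (num_slices, H, W) from one motion-corrupted subject.
    index: position of the slice to be corrected.
    n_adjacent: hypothetical number of neighbors taken on each side.

    Returns an array of shape (2 * n_adjacent + 1, H, W) whose center channel
    is the slice to be corrected. Neighbor indices beyond the volume edge are
    clamped to the first/last slice (an assumption; padding choices vary).
    """
    num_slices = volume.shape[0]
    offsets = np.arange(-n_adjacent, n_adjacent + 1)
    neighbor_idx = np.clip(index + offsets, 0, num_slices - 1)
    # Fancy indexing returns a new (num_channels, H, W) array.
    return volume[neighbor_idx]
```

With `n_adjacent=1`, each training sample has three channels, all taken from the same corrupted volume, so no extra scan or registration step is involved. For the Group 2 setting, a CE slice could be appended as a further channel, but how the abstract's network actually combines the CE prior is not specified here.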

[Simulation of Motion Artifacts]

To accomplish the motion artifact correction task, we collected 83 clinical brain MRI subjects at Seoul National University Hospital. Because the network is trained on pairs of motion-free and motion-corrupted images, motion artifacts had to be simulated. 3D rigid motion artifacts were generated by applying sudden rotational motions in the range [-7°, +7°] and translational motions between -7 and +7 mm. A total of 9,996 images were used for training and 3,390 for testing (Group 1). Of these patients, 38 also had contrast-enhanced (CE) data, so we additionally investigated the benefit of using the CE data as an image prior alongside the self-assisted priors. This dataset (Group 2) was split at the subject level into 80% for training (3,684 images) and 20% for testing (1,378 images).
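
The simulation and data split described above can be illustrated with the sketch below. It is a simplified, hypothetical example and not the authors' simulation pipeline: it works on a single 2D slice rather than a 3D volume, models only a sudden in-plane translation (rotation is omitted), and assumes linear phase-encode ordering along axis 0 and 1 mm pixels. The translation is applied through the Fourier shift theorem, so k-space lines acquired after the motion onset carry a linear phase ramp. The helper `subject_level_split` is likewise hypothetical and simply shows a split in which no subject contributes images to both sets.

```python
import numpy as np


def simulate_sudden_translation(image, shift_mm, onset_line, pixel_mm=1.0):
    """Corrupt a 2D magnitude image with one sudden in-plane translation.

    Assumptions (not taken from the abstract): rows of k-space (axis 0) are
    phase-encoding lines acquired in linear order from -ky_max to +ky_max,
    the subject jumps by shift_mm = (dy, dx) at line onset_line and stays
    there, and pixels are pixel_mm wide. Rotation is omitted; it could be
    handled the same way by taking post-onset lines from a rotated copy.
    """
    ny, nx = image.shape
    kspace = np.fft.fftshift(np.fft.fft2(image))
    ky = np.fft.fftshift(np.fft.fftfreq(ny))[:, None]  # cycles per pixel
    kx = np.fft.fftshift(np.fft.fftfreq(nx))[None, :]
    dy, dx = shift_mm[0] / pixel_mm, shift_mm[1] / pixel_mm
    # Fourier shift theorem: a translation is a linear phase ramp in k-space.
    moved = kspace * np.exp(-2j * np.pi * (ky * dy + kx * dx))
    corrupted = kspace.copy()
    # Lines acquired after the motion onset come from the moved object.
    corrupted[onset_line:, :] = moved[onset_line:, :]
    return np.abs(np.fft.ifft2(np.fft.ifftshift(corrupted)))


def subject_level_split(subject_ids, train_frac=0.8, seed=0):
    """Split by subject so no subject contributes slices to both sets."""
    rng = np.random.default_rng(seed)
    ids = np.array(sorted(set(subject_ids)))
    rng.shuffle(ids)
    n_train = int(round(train_frac * len(ids)))
    return set(ids[:n_train].tolist()), set(ids[n_train:].tolist())
```

For example, `np.random.default_rng().uniform(-7, 7, size=2)` could draw a translation within the ±7 mm range reported above, and a random integer could serve as `onset_line`; the sampling distributions are assumptions. Because the split is made per subject, the image counts in each set need not be exactly 80% and 20%.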

Results

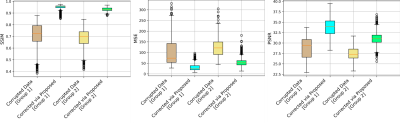

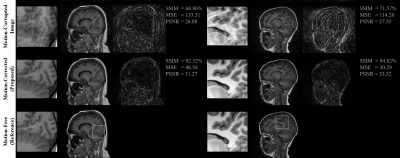

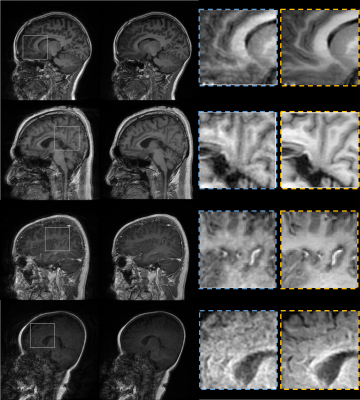

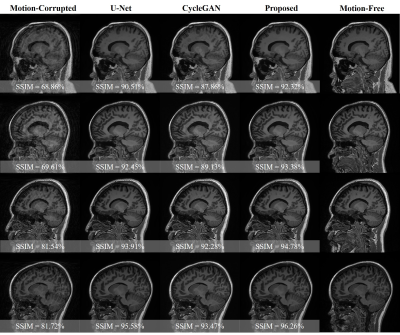

This section shows the motion artifact correction performance of the proposed stacked U-Nets on two testing groups. The first group uses only the self-assisted priors, while the second combines the self-assisted priors with an additional prior from CE data. Figure 2 shows boxplots of the SSIM, MSE, and PSNR of each test image in both groups, before and after motion correction. The network was able to learn additional knowledge of the motion patterns from the various image priors, leading to good motion correction results. Relative to the simulated motion-corrupted data, SSIM improved by 23.37% for the first testing group and 24.50% for the second.

Figure 3 illustrates exemplar motion correction results of the proposed network compared with the reference motion-free images, showing that the proposed method substantially improves image quality and reduces motion artifacts. Figure 4 presents motion correction results for real patient examples with random motion; the reduction of motion artifacts is visually apparent in the corrected images.
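
The quantitative metrics above can be sketched as follows. This is an illustrative NumPy version, not the authors' evaluation code. The abstract does not define the "SSIM improvement rate", so the relative-change formula in `improvement_rate` is an assumption, and `data_range` (the intensity range used for PSNR) depends on how the images are normalized. SSIM itself is normally computed with a locally windowed implementation such as `skimage.metrics.structural_similarity` in scikit-image, which is not reimplemented here.

```python
import numpy as np


def mse(reference, test):
    """Mean squared error between two images of the same shape."""
    diff = np.asarray(reference, dtype=float) - np.asarray(test, dtype=float)
    return float(np.mean(diff ** 2))


def psnr(reference, test, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range**2 / MSE)."""
    err = mse(reference, test)
    if err == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / err))


def improvement_rate(before, after):
    """Assumed definition: relative change in percent, (after - before) / before * 100."""
    return (after - before) / before * 100.0
```

Under this assumed definition, `improvement_rate(ssim_corrupted, ssim_corrected)` would be computed against the simulated motion-corrupted images, with both SSIM values measured against the motion-free reference.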

Discussion

The experimental results demonstrate the value of incorporating additional prior information to improve the overall motion correction performance. Image priors from adjacent slices of the same corrupted subject, or from additional CE data, supply the network with structural patterns missing from the corrupted input, such as the boundaries between white and grey matter in the brain. Figure 5 shows a qualitative comparison with CycleGAN and U-Net. CycleGAN produced results with some structural deterioration, although it corrected moderate motion well. Overall, the proposed method outperformed both CycleGAN and U-Net across the different motion levels.

The main limitation of this work is that, although the self-assisted priors strategy improves motion correction by supplying some missing structural details, the adjacent slices are derived from the same corrupted data. Consequently, some information remains lost.

Conclusion

We conclude that, when additional MRI scans are available, they can be used as image priors alongside the self-assisted priors to further improve motion artifact correction.

Acknowledgements

This study is supported in part by GE Healthcare research funds.

References

1 Zaitsev, M., Maclaren, J. & Herbst, M. Motion artifacts in MRI: A complex problem with many partial solutions. J Magn Reson Imaging 42, 887-901, doi:10.1002/jmri.24850 (2015).

2 Andre, J. B. et al. Toward Quantifying the Prevalence, Severity, and Cost Associated With Patient Motion During Clinical MR Examinations. J Am Coll Radiol 12, 689-695, doi:10.1016/j.jacr.2015.03.007 (2015).

3 Godenschweger, F. et al. Motion correction in MRI of the brain. Phys Med Biol 61, R32-56, doi:10.1088/0031-9155/61/5/R32 (2016).

4 Lee, J., Kim, B. & Park, H. MC²-Net: motion correction network for multi-contrast brain MRI. Magn Reson Med 86, 1077-1092, doi:10.1002/mrm.28719 (2021).

5 Chatterjee, S. et al. in 34th Conference on Neural Information Processing Systems (NeurIPS 2020) (arXiv preprint arXiv:2011.14134, Vancouver, Canada, 2020).
